Adhavan Jayabalan

Decentralized Connectivity-Preserving Deployment of Large-Scale Robot Swarms

Jun 01, 2018

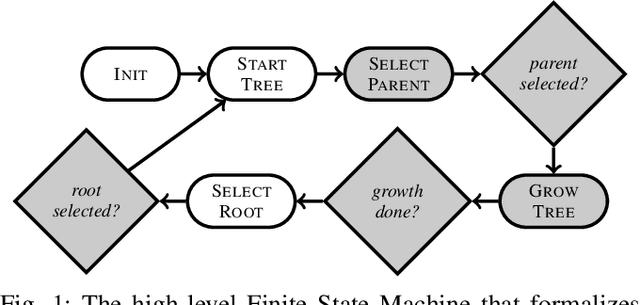

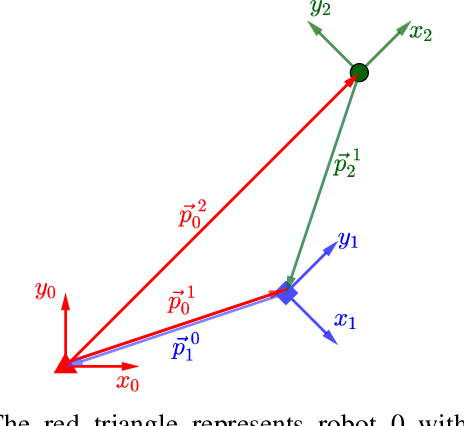

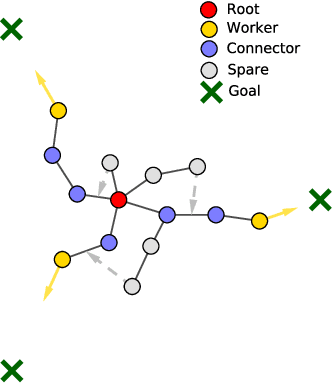

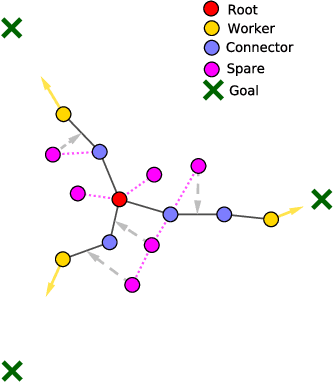

Abstract: We present a decentralized and scalable approach for deploying a robot swarm. Our approach tackles scenarios in which the swarm must reach multiple spatially distributed targets while enforcing the constraint that the robot network cannot be split. The basic idea behind our work is to construct a logical tree topology over the physical network formed by the robots. The logical tree acts as a backbone used by robots to enforce connectivity constraints. We study and compare two algorithms to form the logical tree: outwards and inwards. These algorithms differ in the order in which the robots join the tree: the outwards algorithm starts at the tree root and grows towards the targets, while the inwards algorithm proceeds in the opposite manner. Both algorithms perform periodic reconfiguration to prevent suboptimal topologies from halting the growth of the tree. Our contributions are (i) the formulation of the two algorithms; (ii) a comparison of the algorithms in extensive physics-based simulations; and (iii) a validation of our findings through real-robot experiments.
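The idea of a logical tree laid over the physical network can be illustrated with a small centralized sketch. It is not the paper's decentralized algorithm: it assumes a breadth-first growth order from the root, a fixed communication range, and the hypothetical names `build_outward_tree` and `move_keeps_links`, none of which come from the abstract.

```python
from collections import deque
from math import dist


def build_outward_tree(positions, root, comm_range):
    """Grow a logical tree from `root` over the range-limited physical network.

    positions: dict mapping robot id -> (x, y).
    Returns a dict mapping robot id -> parent id (the root maps to None).
    Robots that cannot be reached from the root are left out.
    Assumption: breadth-first join order; the abstract does not specify one.
    """
    parent = {root: None}
    frontier = deque([root])
    while frontier:
        current = frontier.popleft()
        for other in sorted(positions):
            in_range = dist(positions[current], positions[other]) <= comm_range
            if other not in parent and in_range:
                parent[other] = current  # `other` joins the tree under `current`
                frontier.append(other)
    return parent


def move_keeps_links(positions, parent, robot, new_pos, comm_range):
    """Return True if `robot` stays in range of its tree parent and children."""
    neighbors = [r for r, p in parent.items() if p == robot]
    if parent[robot] is not None:
        neighbors.append(parent[robot])
    return all(dist(new_pos, positions[n]) <= comm_range for n in neighbors)


positions = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (2.0, 0.0), 3: (1.0, 1.0)}
tree = build_outward_tree(positions, root=0, comm_range=1.1)
print(tree)
```

In this sketch a robot only needs to check the links to its tree parent and children before moving, which is one way a tree backbone could keep the network from splitting.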

Dynamic Action Recognition: A convolutional neural network model for temporally organized joint location data

Dec 20, 2016

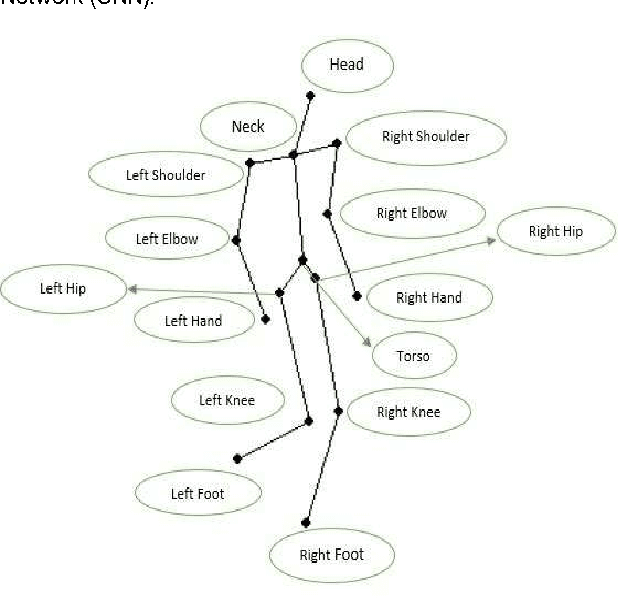

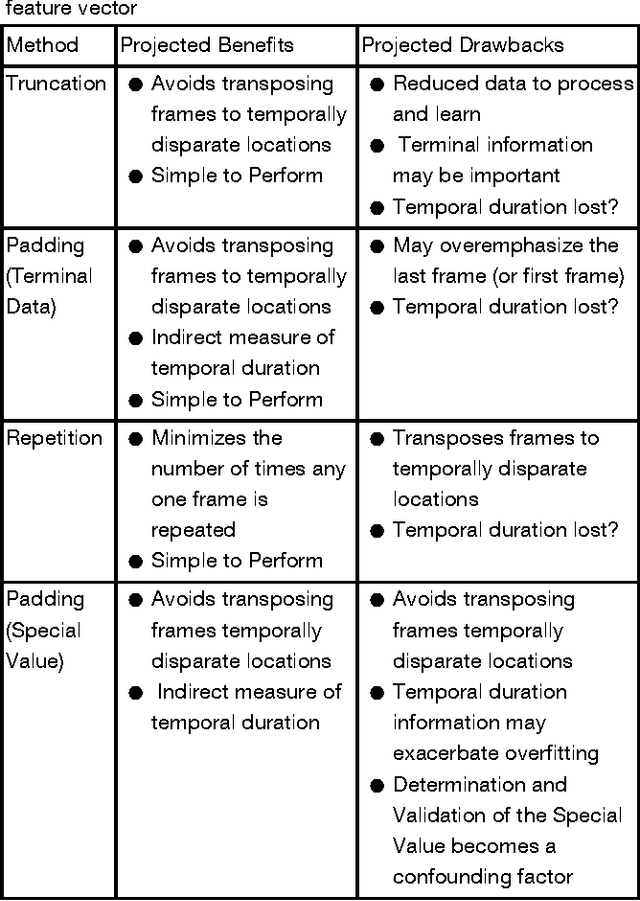

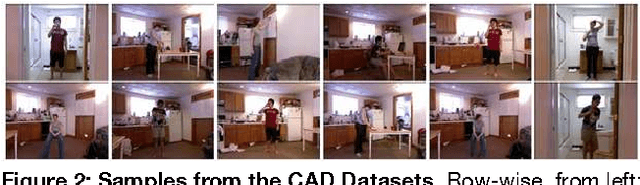

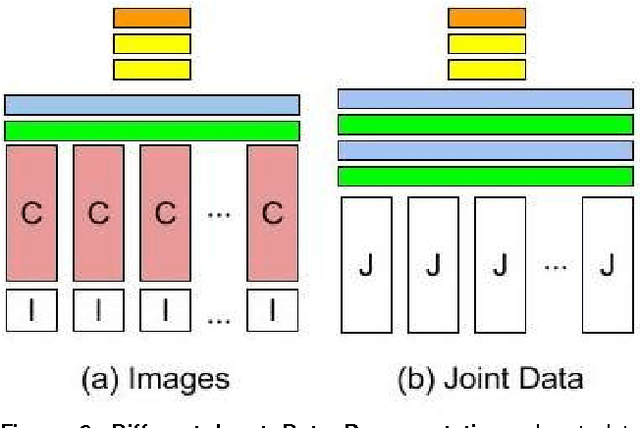

Abstract: Motivation: Recognizing human actions in a video is a challenging task with applications in various fields. Previous works in this area have used images from either a 2D or a 3D camera. Few have used the idea that human actions can be easily identified by the movement of the joints in 3D space, and those that did used a Recurrent Neural Network (RNN) for modeling. Convolutional neural networks (CNNs) can recognize even complex patterns in data, which makes them suitable for detecting human actions. Thus, we modeled a CNN that predicts human activity from joint data. Furthermore, the joint data representation has lower dimensionality than image or video representations. This makes our model simpler and faster than the RNN models. In this study, we have developed a six-layer convolutional network, which reduces each input feature vector of the form 15x1961x4 to a one-dimensional binary vector that gives the predicted activity. Results: Our model recognizes activities with up to 87% accuracy. Joint data is taken from the Cornell Activity Datasets, which contain day-to-day activities such as talking, relaxing, eating, and cooking.
