Harish Karunakaran

Trajectory Control for Differential Drive Mobile Manipulators

Mar 31, 2023

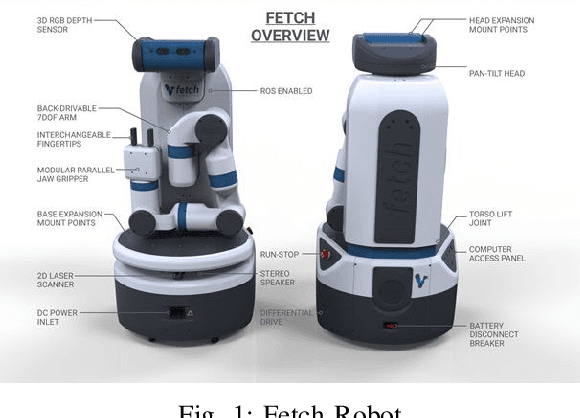

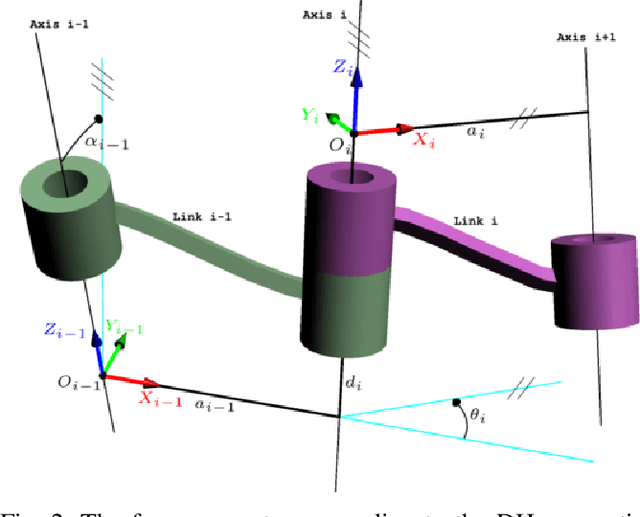

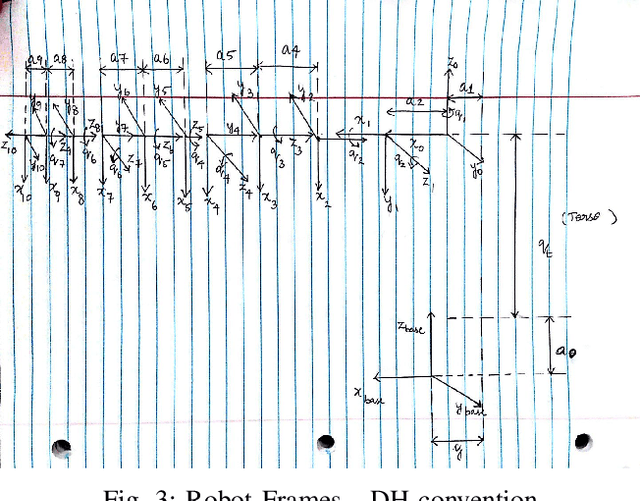

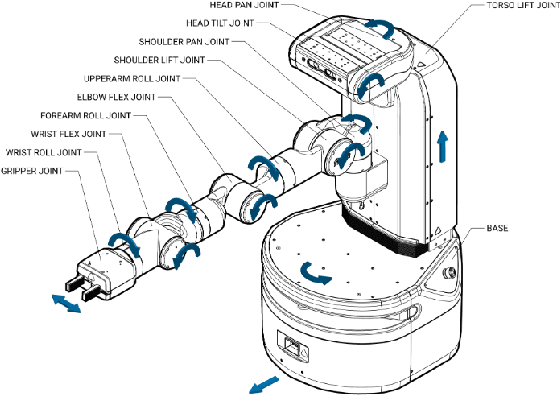

Abstract: Mobile manipulator systems consist of a mobile platform carrying one or more manipulators and are of great interest in applications such as indoor warehouses, mining, construction, and forestry. We present an approach for computing actuator commands that allow such a system to follow desired end-effector and platform trajectories in an indoor warehouse environment without violating its nonholonomic constraints. To validate our method, we use the Fetch robot, which consists of a 7-DOF manipulator mounted on a differential drive mobile base. The major contributions of our project are formulating the dynamics of the system, trajectory planning for the manipulator and the mobile base, a state machine for the pick-and-place task, and the inverse kinematics of the manipulator. Our results indicate that we are able to successfully implement trajectory control on both the mobile base and the manipulator of the Fetch robot.
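The nonholonomic constraint of a differential drive base means it can move along its heading and rotate in place, but cannot slide sideways. A minimal sketch of the standard unicycle kinematics makes this concrete: it maps a body twist (v, ω) to left/right wheel speeds and back, and integrates the pose so that the lateral velocity is always zero. This is a generic textbook model, not the project's own controller; the function names and the wheel radius and track width in the usage note are hypothetical placeholders, not the Fetch robot's specifications.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float      # position in the world frame [m]
    y: float      # position in the world frame [m]
    theta: float  # heading [rad]


def wheel_speeds(v, omega, wheel_radius, track_width):
    """Map a body twist (v [m/s], omega [rad/s]) to wheel angular speeds [rad/s]."""
    v_left = v - omega * track_width / 2.0
    v_right = v + omega * track_width / 2.0
    return v_left / wheel_radius, v_right / wheel_radius


def body_twist(w_left, w_right, wheel_radius, track_width):
    """Inverse map: left/right wheel angular speeds back to (v, omega)."""
    v_left = w_left * wheel_radius
    v_right = w_right * wheel_radius
    return (v_right + v_left) / 2.0, (v_right - v_left) / track_width


def step(pose, v, omega, dt):
    """Euler-integrate the unicycle model for one time step.

    The translational velocity always points along the heading theta,
    so the base never acquires a sideways velocity component.
    """
    return Pose(
        pose.x + v * math.cos(pose.theta) * dt,
        pose.y + v * math.sin(pose.theta) * dt,
        pose.theta + omega * dt,
    )


def lateral_velocity(theta, x_dot, y_dot):
    """Velocity component perpendicular to the heading.

    The nonholonomic constraint requires -x_dot*sin(theta) + y_dot*cos(theta) = 0.
    """
    return -x_dot * math.sin(theta) + y_dot * math.cos(theta)
```

For example, with an illustrative wheel radius of 0.06 m and track width of 0.37 m, `wheel_speeds(0.5, 0.2, 0.06, 0.37)` gives the wheel commands for driving forward at 0.5 m/s while turning at 0.2 rad/s, and `body_twist` recovers the same (v, ω). A trajectory tracker for the base would compute (v, ω) at each step and send the resulting wheel speeds to the actuators.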

Dynamic Action Recognition: A convolutional neural network model for temporally organized joint location data

Dec 20, 2016

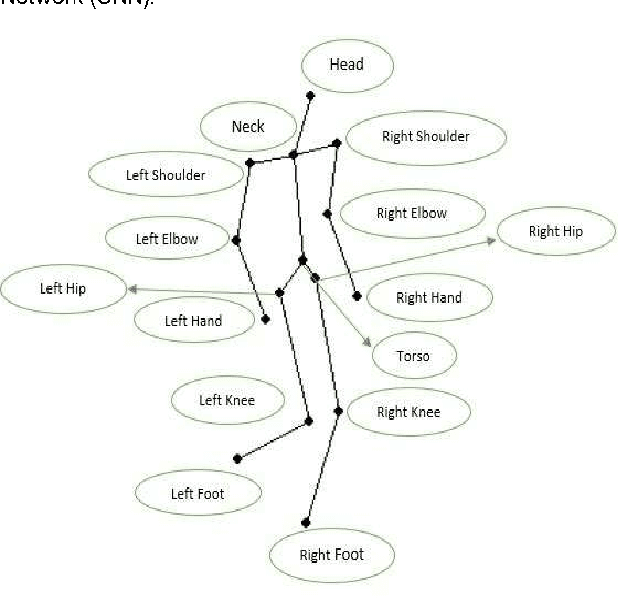

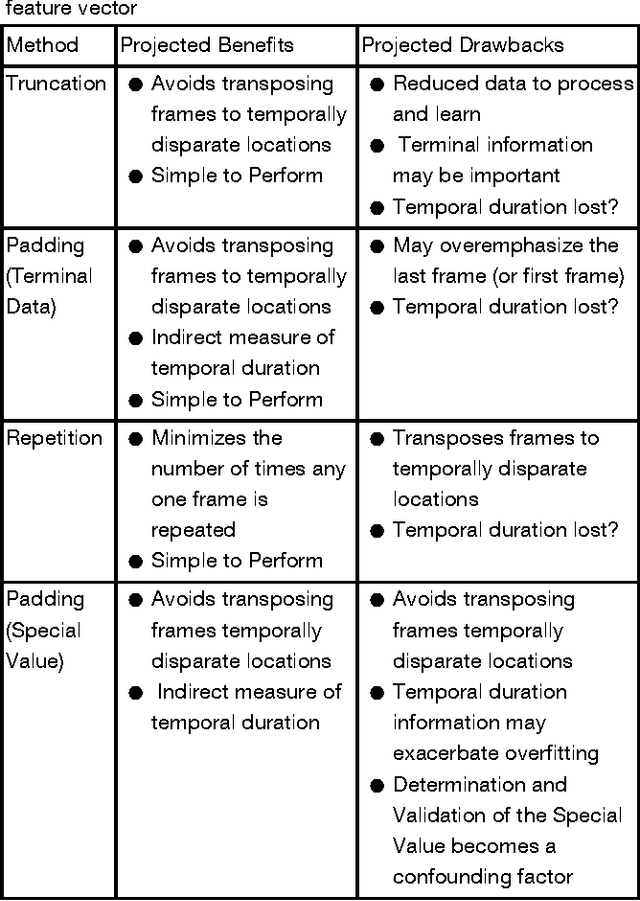

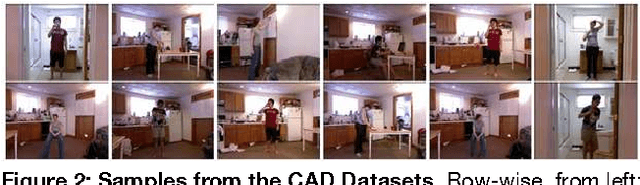

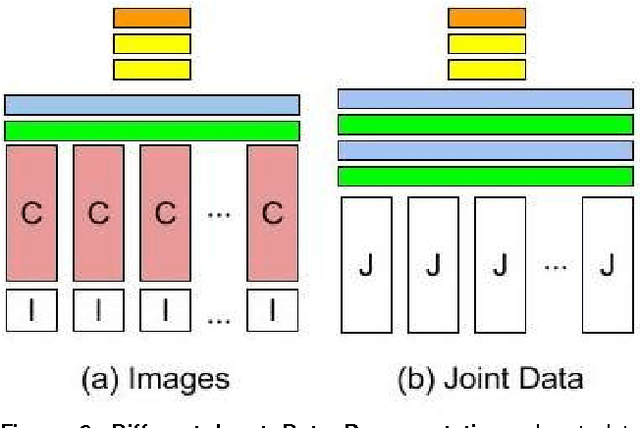

Abstract: Motivation: Recognizing human actions in video is a challenging task with applications in various fields. Previous work in this area has used images from either 2D or 3D cameras. Few studies have exploited the idea that human actions can be identified from the movement of the joints in 3D space, and those that have used Recurrent Neural Networks (RNNs) for modeling. Convolutional neural networks (CNNs) can recognize complex patterns in data, which makes them suitable for detecting human actions. We therefore built a CNN that predicts human activity from joint data. Representing actions by joint data also has lower dimensionality than image or video representations, which makes our model simpler and faster than RNN models. In this study, we developed a six-layer convolutional network that reduces each 15x1961x4 input tensor to a one-dimensional binary vector indicating the predicted activity. Results: Our model recognizes activities with up to 87% accuracy. Joint data is taken from the Cornell Activity Datasets, which contain day-to-day activities such as talking, relaxing, eating, and cooking.
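The 15x1961x4 input suggests that each recording is arranged as joints × frames × per-joint values before it enters the network. A minimal sketch of that arrangement follows, assuming the 4 values per joint are x, y, z, and a tracking confidence, and that shorter recordings are zero-padded to 1961 frames (longer ones truncated). It also shows decoding the network's one-dimensional output into a label with argmax. The channel meaning, the padding policy, the function names, and the four-label list are all assumptions for illustration, not the paper's documented preprocessing.

```python
import numpy as np

NUM_JOINTS = 15    # joints per skeleton frame (first input dimension)
MAX_FRAMES = 1961  # fixed temporal length (second input dimension)
CHANNELS = 4       # assumed per-joint values: x, y, z, confidence


def pack_sequence(frames):
    """Stack per-frame joint arrays into a (15, 1961, 4) float32 tensor.

    frames: iterable of array-likes shaped (NUM_JOINTS, CHANNELS), one per frame.
    Assumption: shorter sequences are zero-padded at the end and longer
    ones are truncated to MAX_FRAMES.
    """
    out = np.zeros((NUM_JOINTS, MAX_FRAMES, CHANNELS), dtype=np.float32)
    for t, frame in enumerate(frames):
        if t >= MAX_FRAMES:
            break
        out[:, t, :] = np.asarray(frame, dtype=np.float32).reshape(NUM_JOINTS, CHANNELS)
    return out


def decode_prediction(scores, labels):
    """Map a 1-D output vector (one-hot or class scores) to an activity label."""
    return labels[int(np.argmax(scores))]
```

With hypothetical labels `["talking", "relaxing", "eating", "cooking"]`, an output vector of `[0, 0, 1, 0]` decodes to `"eating"`. Fixing the temporal length lets a convolutional network consume every recording with the same input shape, which is one reason a CNN can treat the joint trajectories like a multi-channel image.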
