Nahas Pareekutty

qRRT: Quality-Biased Incremental RRT for Optimal Motion Planning in Non-Holonomic Systems

Jan 07, 2021

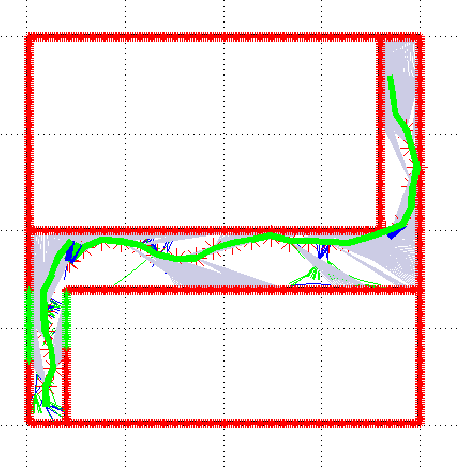

Abstract: This paper presents a sampling-based method for optimal motion planning in non-holonomic systems in the absence of known cost functions. It uses the principle of learning through experience to deduce the cost-to-go of regions within the workspace. This cost information is used to bias an incremental graph-based search algorithm that produces solution trajectories. Iterative improvement of cost information and search biasing produces solutions that are proven to be asymptotically optimal. The proposed framework builds on incremental Rapidly-exploring Random Trees (RRT) for random sampling-based search and Reinforcement Learning (RL) to learn workspace costs. A series of experiments were performed to evaluate and demonstrate the performance of the proposed method.

Learning to Prevent Monocular SLAM Failure using Reinforcement Learning

Dec 23, 2018

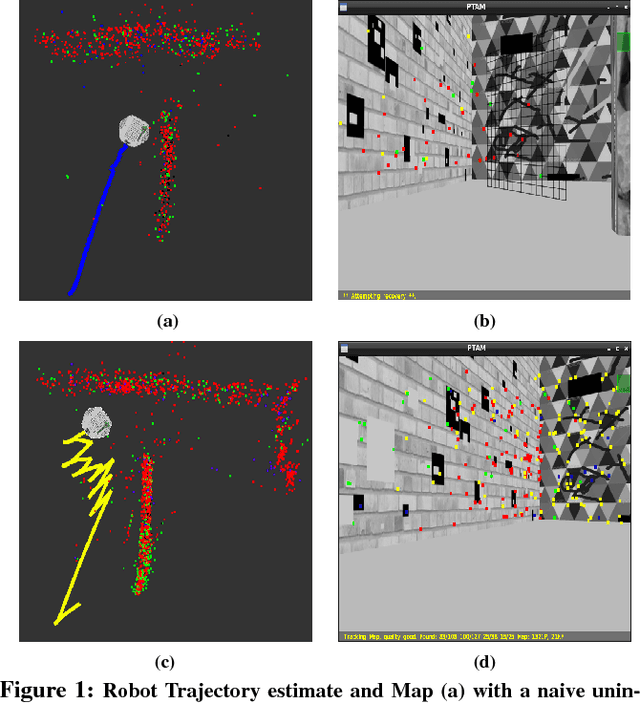

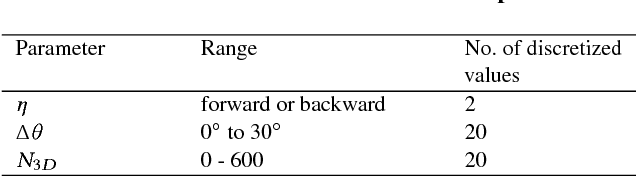

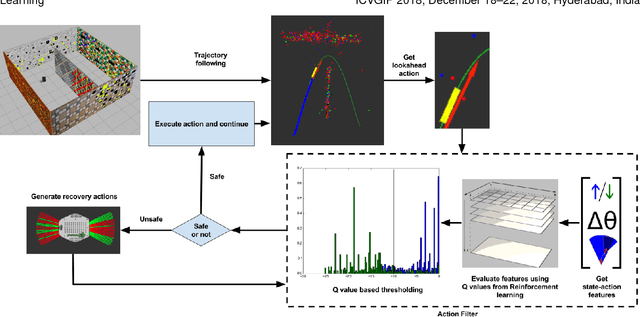

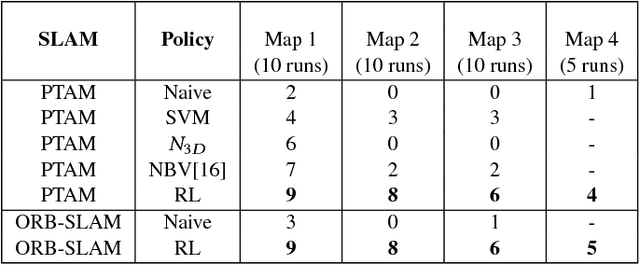

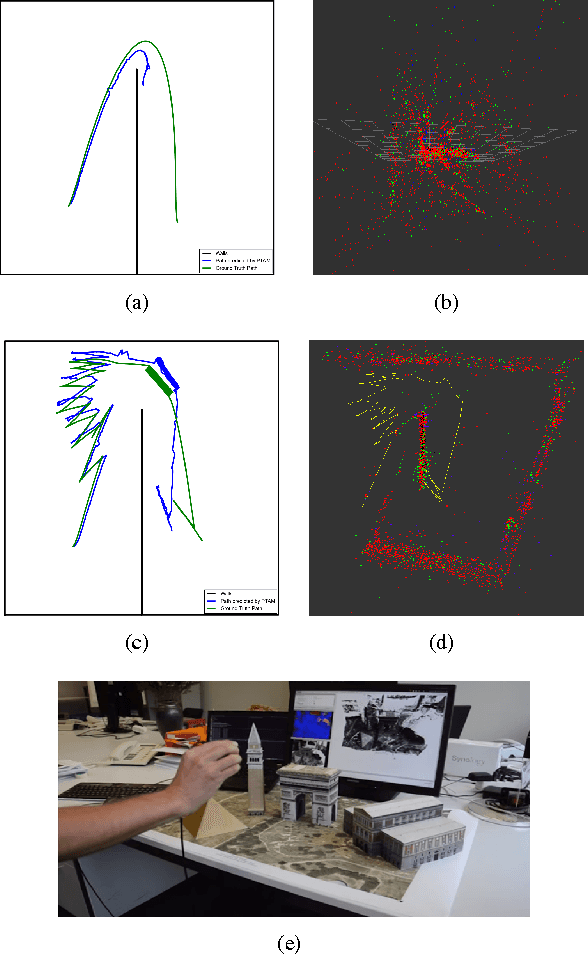

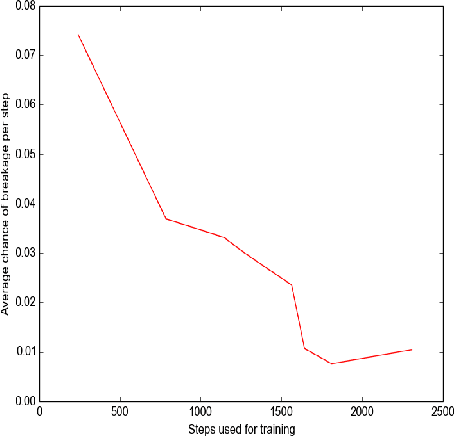

Abstract: Monocular SLAM refers to using a single camera to estimate robot ego-motion while building a map of the environment. While Monocular SLAM is a well-studied problem, automating Monocular SLAM by integrating it with trajectory planning frameworks is particularly challenging. This paper presents a novel formulation based on Reinforcement Learning (RL) that generates fail-safe trajectories wherein the SLAM-generated outputs do not deviate largely from their true values. In essence, the RL framework successfully learns the otherwise complex relation between perceptual inputs and motor actions and uses this knowledge to generate trajectories that do not cause failure of SLAM. We show systematically in simulations how the quality of the SLAM dramatically improves when trajectories are computed using RL. Our method scales effectively across Monocular SLAM frameworks, both in simulation and in real-world experiments with a mobile robot.

SLAM-Safe Planner: Preventing Monocular SLAM Failure using Reinforcement Learning

Mar 03, 2017

Abstract: Effective SLAM using a single monocular camera is highly preferred due to its simplicity. However, when compared to trajectory planning methods using depth-based SLAM, Monocular SLAM in the loop requires additional considerations. One main reason is that, for the optimization (in the form of Bundle Adjustment, BA) to be robust, the SLAM system needs to scan the area for a reasonable duration. Most monocular SLAM systems do not tolerate large camera rotations between successive views and tend to break down. Other reasons for Monocular SLAM failure include ambiguities in the decomposition of the Essential Matrix, feature-sparse scenes, and additional layers of nonlinear optimization apart from BA. This paper presents a novel formulation based on Reinforcement Learning (RL) that generates fail-safe trajectories wherein the SLAM-generated outputs (scene structure and camera motion) do not deviate largely from their true values. In essence, the RL framework successfully learns the otherwise complex relation between motor actions and perceptual inputs that results in trajectories that do not cause failure of SLAM, a relation that is almost intractable to capture in an obvious mathematical formulation. We show systematically in simulations how the quality of the SLAM map and trajectory dramatically improves when trajectories are computed using RL.
