Mohammad Naiseh

Adaptive Human-Swarm Interaction based on Workload Measurement using Functional Near-Infrared Spectroscopy

May 13, 2024

Abstract: One of the challenges of human-swarm interaction (HSI) is how to manage the operator's workload. To address this, we propose a novel neurofeedback technique for the real-time measurement of workload using functional near-infrared spectroscopy (fNIRS). The objective is to develop a baseline for workload measurement in human-swarm interaction using fNIRS and to develop an interface that dynamically adapts to the operator's workload. The proposed method consists of using an fNIRS device to measure brain activity, processing the signal with a machine learning algorithm, and passing the result to the HSI interface. By dynamically adapting the HSI interface, the swarm operator's workload could be reduced and performance improved.

The Effect of Predictive Formal Modelling at Runtime on Performance in Human-Swarm Interaction

Jan 22, 2024

Abstract: Formal modelling is often used as part of the design and testing process of software development to ensure that components operate within suitable bounds even in unexpected circumstances. In this paper, we use predictive formal modelling (PFM) at runtime in a human-swarm mission and show that this integration can be used to improve the performance of human-swarm teams. We recruited 60 participants to operate a simulated aerial swarm to deliver parcels to target locations. In the PFM condition, operators were informed of the estimated completion times given the number of drones deployed, whereas in the No-PFM condition, operators did not have this information. The operators could control the mission by adding or removing drones, thereby increasing or decreasing the overall mission cost. The evaluation of human-swarm performance relied on four key metrics: the time taken to complete tasks, the number of agents involved, the total number of tasks accomplished, and the overall cost associated with the human-swarm task. Our results show that PFM at runtime improves mission performance without significantly affecting the operator's workload or the system's usability.

Outlining the design space of eXplainable swarm (xSwarm): experts perspective

Sep 03, 2023

Abstract: In swarm robotics, agents interact through local roles to solve complex tasks beyond an individual's ability. Even though swarms are capable of carrying out some operations without the need for human intervention, many safety-critical applications still call for human operators to control and monitor the swarm. There are novel challenges to effective Human-Swarm Interaction (HSI) that are only beginning to be addressed. Explainability is one factor that can facilitate effective and trustworthy HSI and improve the overall performance of Human-Swarm teams. Explainability has been studied across various Human-AI domains, such as Human-Robot Interaction and Human-Centered ML. However, it remains unclear whether explanations studied in the Human-AI literature would be beneficial in Human-Swarm research and development. Furthermore, the literature lacks foundational research on the prerequisites for explainability requirements in swarm robotics, i.e., what kinds of questions an explainable swarm is expected to answer, and what types of explanations a swarm is expected to generate. By surveying 26 swarm experts, we seek to answer these questions and identify the challenges experts face when generating explanations in Human-Swarm environments. Our work contributes insights into defining a new research area, eXplainable Swarm (xSwarm), which looks at how explainability can be implemented and developed in swarm systems. This paper opens the discussion on xSwarm and paves the way for more research in the field.

The Effect of Data Visualisation Quality and Task Density on Human-Swarm Interaction

Jul 17, 2023

Abstract: Despite the advantages of robot swarms, human supervision is required for real-world applications. The performance of the human-swarm system depends on several factors, including the data available to the human operators. In this paper, we study the human factors aspect of human-swarm interaction and investigate how access to high-quality data affects the performance of the human-swarm system, namely the number of tasks completed and the human trust level in operation. We designed an experiment in which a human operator is tasked with operating a swarm to identify casualties in an area within a given time period. One group of operators had the option to request high-quality pictures, while the other group had to base their decisions on the available low-quality images. We performed a user study with 120 participants and recorded their success rate (logged directly via the simulation platform) as well as their workload and trust level (measured through a questionnaire after completing a human-swarm scenario). The findings indicated that the group granted access to high-quality data exhibited an increased workload and placed greater trust in the swarm, thus confirming our initial hypothesis. However, we also found that the number of accurately identified casualties did not vary significantly between the two groups, suggesting that data quality had no impact on the successful completion of tasks.

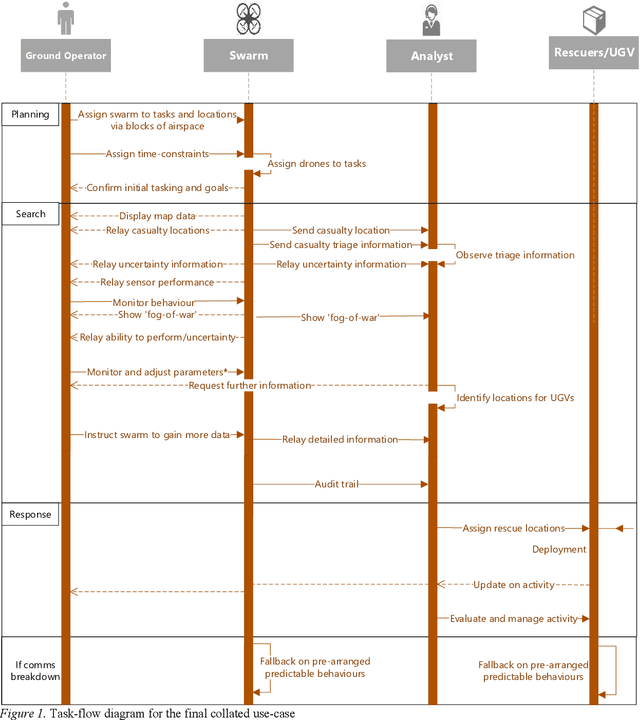

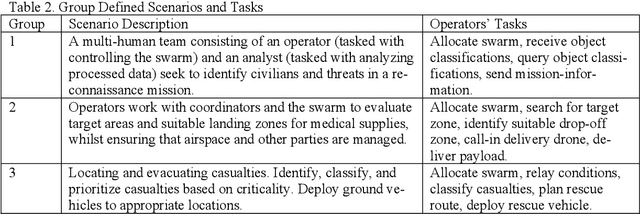

Industry Led Use-Case Development for Human-Swarm Operations

Jul 24, 2022

Abstract: In the domain of unmanned vehicles, autonomous robotic swarms promise to deliver increased efficiency and collective autonomy. How these swarms will operate in the future, and what communication requirements and operational boundaries will arise, are yet to be sufficiently defined. A workshop was conducted with 11 professional unmanned-vehicle operators and designers with the objective of identifying use-cases for developing and testing robotic swarms. Three scenarios were defined by experts and then compiled to produce a single use case outlining the scenario, objectives, agents, communication requirements and stages of operation when collaborating with highly autonomous swarms. Our compiled use case is intended for researchers, designers, and manufacturers alike to test and tailor their design pipeline to accommodate some of the key issues in human-swarm interaction. Examples of application include informing simulation development, forming the basis of further design workshops, and identifying trust issues that may arise between human operators and the swarm.

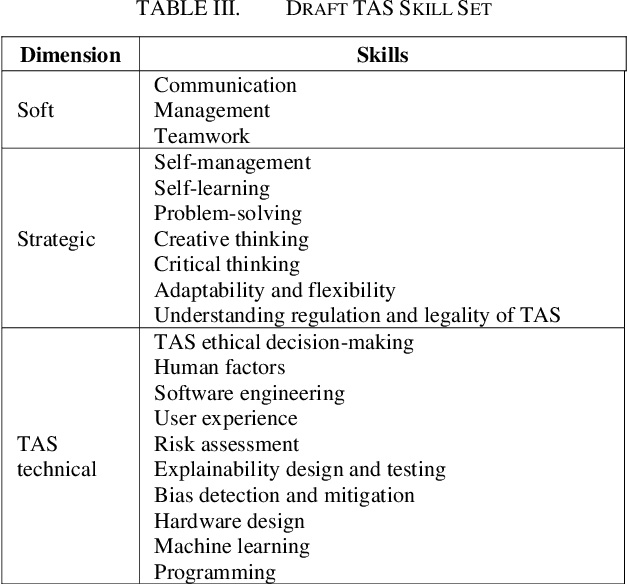

Trustworthy Autonomous Systems (TAS): Engaging TAS experts in curriculum design

Feb 16, 2022

Abstract: Recent advances in artificial intelligence, specifically machine learning, have contributed positively to the autonomous systems industry, while also introducing social, technical, legal and ethical challenges to making these systems trustworthy. Although Trustworthy Autonomous Systems (TAS) is an established and growing research direction that has been discussed in multiple disciplines, e.g., Artificial Intelligence, Human-Computer Interaction, Law, and Psychology, the impact of TAS on education curricula and the skills required of future TAS engineers has rarely been discussed in the literature. This study brings together the collective insights of a number of leading TAS experts to highlight significant challenges for curriculum design and the skills potentially required as a result of the rapid emergence of TAS. Our analysis is of interest not only to the TAS education community but also to other researchers, as it offers ways to guide future research toward operationalising TAS education.
