Ming-Kun Xie

Positive-Unlabeled Reinforcement Learning Distillation for On-Premise Small Models

Jan 28, 2026

Abstract:Due to constraints on privacy, cost, and latency, on-premise deployment of small models is increasingly common. However, most practical pipelines stop at supervised fine-tuning (SFT) and fail to reach the reinforcement learning (RL) alignment stage. The main reason is that RL alignment typically requires either expensive human preference annotation or heavy reliance on high-quality reward models with large-scale API usage and ongoing engineering maintenance, both of which are ill-suited to on-premise settings. To bridge this gap, we propose a positive-unlabeled (PU) RL distillation method for on-premise small-model deployment. Without human-labeled preferences or a reward model, our method distills the teacher's preference-optimization capability from black-box generations into a locally trainable student. For each prompt, we query the teacher once to obtain an anchor response, locally sample multiple student candidates, and perform anchor-conditioned self-ranking to induce pairwise or listwise preferences, enabling a fully local training loop via direct preference optimization or group relative policy optimization. Theoretical analysis shows that the preference signal induced by our method is order-consistent and concentrates on near-optimal candidates, supporting its stability for preference optimization. Experiments demonstrate that our method achieves consistently strong performance under a low-cost setting.

Learning to Trust Bellman Updates: Selective State-Adaptive Regularization for Offline RL

May 26, 2025

Abstract:Offline reinforcement learning (RL) aims to learn an effective policy from a static dataset. To alleviate extrapolation errors, existing studies often uniformly regularize the value function or policy updates across all states. However, due to substantial variations in data quality, the fixed regularization strength often leads to a dilemma: Weak regularization strength fails to address extrapolation errors and value overestimation, while strong regularization strength shifts policy learning toward behavior cloning, impeding potential performance enabled by Bellman updates. To address this issue, we propose the selective state-adaptive regularization method for offline RL. Specifically, we introduce state-adaptive regularization coefficients to trust state-level Bellman-driven results, while selectively applying regularization on high-quality actions, aiming to avoid performance degradation caused by tight constraints on low-quality actions. By establishing a connection between the representative value regularization method, CQL, and explicit policy constraint methods, we effectively extend selective state-adaptive regularization to these two mainstream offline RL approaches. Extensive experiments demonstrate that the proposed method significantly outperforms the state-of-the-art approaches in both offline and offline-to-online settings on the D4RL benchmark.

Optimistic Critic Reconstruction and Constrained Fine-Tuning for General Offline-to-Online RL

Dec 25, 2024

Abstract:Offline-to-online (O2O) reinforcement learning (RL) provides an effective means of leveraging an offline pre-trained policy as initialization to improve performance rapidly with limited online interactions. Recent studies often design fine-tuning strategies for a specific offline RL method and cannot perform general O2O learning from any offline method. To deal with this problem, we disclose that there are evaluation and improvement mismatches between the offline dataset and the online environment, which hinder the direct application of pre-trained policies to online fine-tuning. In this paper, we propose to handle these two mismatches simultaneously, with the aim of achieving general O2O learning from any offline method to any online method. Before online fine-tuning, we re-evaluate the pessimistic critic trained on the offline dataset in an optimistic way and then calibrate the misaligned critic with the reliable offline actor to avoid erroneous updates. After obtaining an optimistic and aligned critic, we perform constrained fine-tuning to combat distribution shift during online learning. We show empirically that the proposed method can achieve stable and efficient performance improvement on multiple simulated tasks when compared to the state-of-the-art methods.

Context-Based Semantic-Aware Alignment for Semi-Supervised Multi-Label Learning

Dec 25, 2024

Abstract:Due to the lack of extensive precisely-annotated multi-label data in the real world, semi-supervised multi-label learning (SSMLL) has gradually gained attention. Abundant knowledge embedded in vision-language models (VLMs) pre-trained on large-scale image-text pairs could alleviate the challenge of limited labeled data under the SSMLL setting. Although existing methods based on fine-tuning VLMs have achieved advances in weakly-supervised multi-label learning, they fail to fully leverage the information from labeled data to enhance the learning of unlabeled data. In this paper, we propose a context-based semantic-aware alignment method to solve the SSMLL problem by leveraging the knowledge of VLMs. To address the challenge of handling multiple semantics within an image, we introduce a novel framework design to extract label-specific image features. This design allows us to achieve a more compact alignment between text features and label-specific image features, leading the model to generate high-quality pseudo-labels. To equip the model with a comprehensive understanding of the image, we design a semi-supervised context identification auxiliary task to enhance the feature representation by capturing co-occurrence information. Extensive experiments on multiple benchmark datasets demonstrate the effectiveness of our proposed method.

Dirichlet-Based Coarse-to-Fine Example Selection For Open-Set Annotation

Sep 26, 2024

Abstract:Active learning (AL) has achieved great success by selecting the most valuable examples from unlabeled data. However, AL methods usually deteriorate in real scenarios where open-set noise gets involved, a setting studied as open-set annotation (OSA). In this paper, we attribute the deterioration to the unreliable predictions arising from softmax-based translation invariance and propose a Dirichlet-based Coarse-to-Fine Example Selection (DCFS) strategy accordingly. Our method introduces simplex-based evidential deep learning (EDL) to break translation invariance and distinguish known and unknown classes by considering evidence-based data and distribution uncertainty simultaneously. Furthermore, hard known-class examples are identified by model discrepancy generated from two classifier heads, where we amplify and alleviate the model discrepancy respectively for unknown and known classes. Finally, we combine the discrepancy with uncertainties to form a two-stage strategy, selecting the most informative examples from known classes. Extensive experiments on datasets with various openness ratios demonstrate that DCFS achieves state-of-the-art performance.

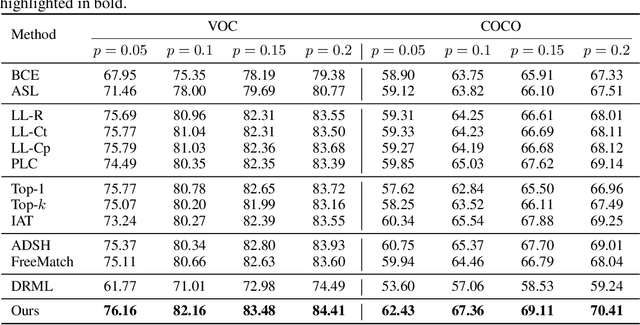

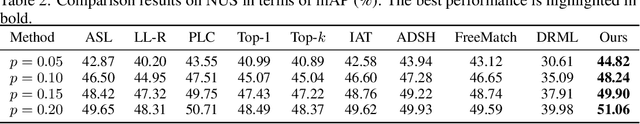

Dual-Decoupling Learning and Metric-Adaptive Thresholding for Semi-Supervised Multi-Label Learning

Jul 26, 2024

Abstract:Semi-supervised multi-label learning (SSMLL) is a powerful framework for leveraging unlabeled data to reduce the expensive cost of collecting precise multi-label annotations. Unlike semi-supervised learning, one cannot select the most probable label as the pseudo-label in SSMLL due to multiple semantics contained in an instance. To solve this problem, the mainstream method developed an effective thresholding strategy to generate accurate pseudo-labels. Unfortunately, the method neglected the quality of model predictions and its potential impact on pseudo-labeling performance. In this paper, we propose a dual-perspective method to generate high-quality pseudo-labels. To improve the quality of model predictions, we perform dual-decoupling to boost the learning of correlative and discriminative features, while refining the generation and utilization of pseudo-labels. To obtain proper class-wise thresholds, we propose the metric-adaptive thresholding strategy to estimate the thresholds, which maximize the pseudo-label performance for a given metric on labeled data. Experiments on multiple benchmark datasets show that the proposed method achieves state-of-the-art performance and outperforms the compared methods by a significant margin.
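The idea of choosing a class-wise threshold that maximizes a given metric on labeled data can be illustrated with a minimal sketch. It assumes F1 as the metric and a simple search over the observed scores of one class; the function names `f1_score` and `best_threshold` are hypothetical and this is not the paper's implementation.

```python
def f1_score(y_true, y_pred):
    """Binary F1 for one class; returns 0.0 when there are no positives at all."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_threshold(y_true, scores, metric=f1_score):
    """Search the observed scores of one class for the threshold that
    maximizes `metric` on labeled data (assumed search strategy)."""
    best_t, best_v = None, -1.0
    for t in sorted(set(scores)):
        preds = [int(s >= t) for s in scores]
        value = metric(y_true, preds)
        if value > best_v:  # keep the lowest threshold among ties
            best_t, best_v = t, value
    return best_t, best_v


# Labeled examples for a single class: ground truth and model scores.
y_true = [1, 1, 0, 0, 1]
scores = [0.9, 0.6, 0.55, 0.2, 0.4]
threshold, value = best_threshold(y_true, scores)
```

In a multi-label setting the same search would be repeated independently for each class, and the resulting thresholds applied to predictions on unlabeled data.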

Counterfactual Reasoning for Multi-Label Image Classification via Patching-Based Training

Apr 09, 2024

Abstract:The key to multi-label image classification (MLC) is to improve model performance by leveraging label correlations. Unfortunately, it has been shown that overemphasizing co-occurrence relationships can cause the model to overfit, ultimately leading to performance degradation. In this paper, we provide a causal inference framework to show that the correlative features caused by the target object and its co-occurring objects can be regarded as a mediator, which has both positive and negative impacts on model predictions. On the positive side, the mediator enhances the recognition performance of the model by capturing co-occurrence relationships; on the negative side, it has a harmful causal effect that causes the model to make an incorrect prediction for the target object, even when only co-occurring objects are present in an image. To address this problem, we propose a counterfactual reasoning method to measure the total direct effect, achieved by enhancing the direct effect caused only by the target object. Due to the unknown location of the target object, we propose patching-based training and inference to accomplish this goal, which divides an image into multiple patches and identifies the pivot patch that contains the target object. Experimental results on multiple benchmark datasets with diverse configurations validate that the proposed method can achieve state-of-the-art performance.

Dirichlet-Based Prediction Calibration for Learning with Noisy Labels

Jan 13, 2024

Abstract:Learning with noisy labels can significantly hinder the generalization performance of deep neural networks (DNNs). Existing approaches address this issue through loss correction or example selection methods. However, these methods often rely on the model's predictions obtained from the softmax function, which can be over-confident and unreliable. In this study, we identify the translation invariance of the softmax function as the underlying cause of this problem and propose the Dirichlet-based Prediction Calibration (DPC) method as a solution. Our method introduces a calibrated softmax function that breaks the translation invariance by incorporating a suitable constant in the exponent term, enabling more reliable model predictions. To ensure stable model training, we leverage a Dirichlet distribution to assign probabilities to predicted labels and introduce a novel evidential deep learning (EDL) loss. The proposed loss function encourages positive and sufficiently large logits for the given label, while penalizing negative and small logits for other labels, leading to more distinct logits and facilitating better example selection based on a large-margin criterion. Through extensive experiments on diverse benchmark datasets, we demonstrate that DPC achieves state-of-the-art performance. The code is available at https://github.com/chenchenzong/DPC.
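The translation invariance of softmax can be checked numerically: shifting every logit by the same constant leaves the output unchanged, so softmax cannot tell uniformly large logits from uniformly small ones. The sketch below contrasts this with a generic evidence-based alternative (evidence exp(z_k), Dirichlet parameters exp(z_k) + 1), which is sensitive to such shifts. The second function is an illustrative assumption, not the calibrated softmax of DPC, whose exact form the abstract does not give.

```python
import math


def softmax(logits):
    """Standard softmax (computed stably by subtracting the max logit)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def evidence_based_probs(logits):
    """Illustrative assumption: treat exp(z_k) as evidence, set Dirichlet
    parameters alpha_k = exp(z_k) + 1, and return the Dirichlet mean."""
    alphas = [math.exp(z) + 1.0 for z in logits]
    total = sum(alphas)
    return [a / total for a in alphas]


logits = [2.0, 1.0, 0.0]
shifted = [z - 5.0 for z in logits]  # same gaps, uniformly smaller logits

# Softmax gives identical outputs for both inputs (up to rounding).
same = all(abs(a - b) < 1e-12 for a, b in zip(softmax(logits), softmax(shifted)))

# The evidence-based probabilities become flatter when all logits shrink.
confident = evidence_based_probs(logits)[0]
less_confident = evidence_based_probs(shifted)[0]
```

Under the evidence-based form, small logits everywhere yield a near-uniform prediction, which is the kind of behavior that makes the magnitude of the logits informative.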

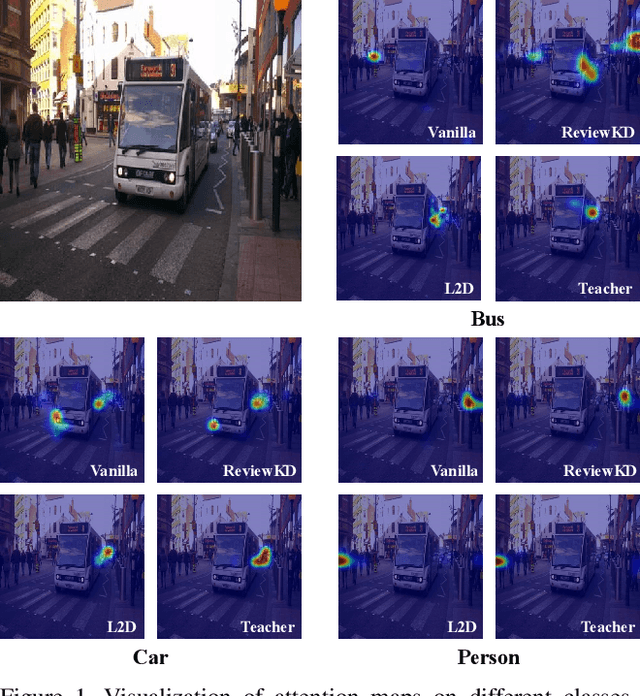

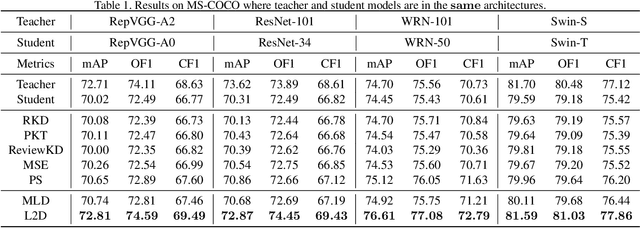

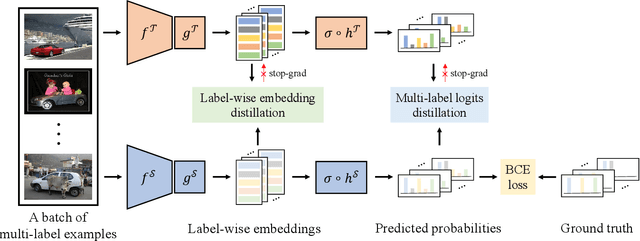

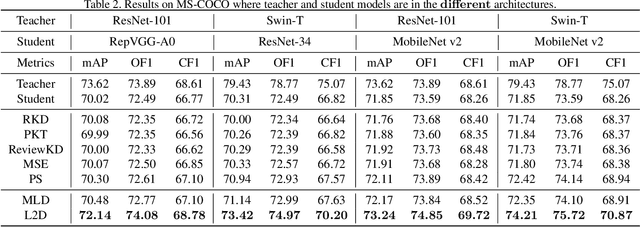

Multi-Label Knowledge Distillation

Aug 12, 2023

Abstract:Existing knowledge distillation methods typically work by imparting the knowledge of output logits or intermediate feature maps from the teacher network to the student network, which is very successful in multi-class single-label learning. However, these methods can hardly be extended to the multi-label learning scenario, where each instance is associated with multiple semantic labels, because the prediction probabilities do not sum to one and feature maps of the whole example may ignore minor classes in such a scenario. In this paper, we propose a novel multi-label knowledge distillation method. On one hand, it exploits the informative semantic knowledge from the logits by dividing the multi-label learning problem into a set of binary classification problems; on the other hand, it enhances the distinctiveness of the learned feature representations by leveraging the structural information of label-wise embeddings. Experimental results on multiple benchmark datasets validate that the proposed method can avoid knowledge counteraction among labels, thus achieving superior performance against diverse compared methods. Our code is available at: https://github.com/penghui-yang/L2D
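The decomposition into binary problems can be sketched as follows: each label's logit is passed through a sigmoid, and the student is penalized by the KL divergence between the teacher's and the student's Bernoulli distributions for that label. This is a minimal sketch under that assumption; the function names are hypothetical and the released code may differ in details such as temperature scaling or reduction.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bernoulli_kl(p, q, eps=1e-12):
    """KL(Bernoulli(p) || Bernoulli(q)), clipped for numerical safety."""
    p = min(max(p, eps), 1.0 - eps)
    q = min(max(q, eps), 1.0 - eps)
    return p * math.log(p / q) + (1.0 - p) * math.log((1.0 - p) / (1.0 - q))


def binary_decomposed_distillation(teacher_logits, student_logits):
    """Sum of per-label binary KL terms for one instance."""
    return sum(
        bernoulli_kl(sigmoid(t), sigmoid(s))
        for t, s in zip(teacher_logits, student_logits)
    )


teacher = [3.0, -2.0, 0.5]
loss_match = binary_decomposed_distillation(teacher, teacher)
loss_mismatch = binary_decomposed_distillation(teacher, [-3.0, 2.0, 0.5])
```

Because each label is handled as its own binary problem, the per-label probabilities do not need to sum to one, which is the obstacle the abstract identifies for softmax-based distillation.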

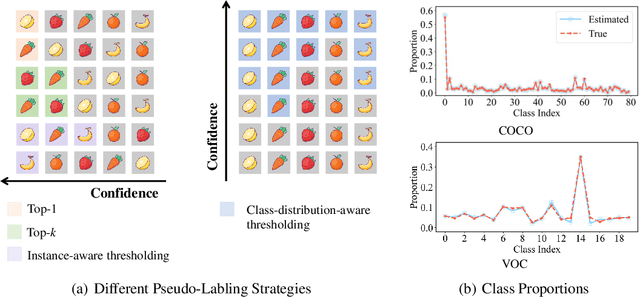

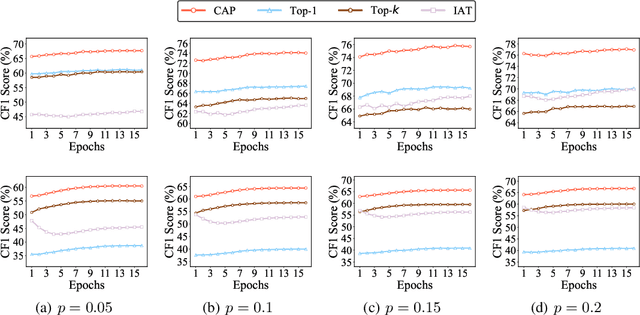

Class-Distribution-Aware Pseudo Labeling for Semi-Supervised Multi-Label Learning

May 04, 2023

Abstract:Pseudo labeling is a popular and effective method to leverage the information of unlabeled data. Conventional instance-aware pseudo labeling methods often assign each unlabeled instance a pseudo label based on its predicted probabilities. However, due to the unknown number of true labels, these methods cannot generalize well to semi-supervised multi-label learning (SSMLL) scenarios, since they would suffer from the risk of either introducing false positive labels or neglecting true positive ones. In this paper, we propose to solve the SSMLL problems by performing Class-distribution-Aware Pseudo labeling (CAP), which encourages the class distribution of pseudo labels to approximate the true one. Specifically, we design a regularized learning framework consisting of the class-aware thresholds to control the number of pseudo labels for each class. Given that the labeled and unlabeled examples are sampled according to the same distribution, we determine the thresholds by exploiting the empirical class distribution, which can be treated as a tight approximation to the true one. Theoretically, we show that the generalization performance of the proposed method is dependent on the pseudo labeling error, which can be significantly reduced by the CAP strategy. Extensive experimental results on multiple benchmark datasets validate that CAP can effectively solve the SSMLL problems.
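One way to read the class-aware thresholds is as per-class quantiles: for each class, the threshold is set so that the fraction of unlabeled instances pseudo-labeled positive matches the fraction of positives observed in the labeled data. The sketch below illustrates that reading only; the function names and the rounding rule are assumptions, and it omits the regularized learning framework described in the abstract.

```python
def class_aware_thresholds(labeled_targets, unlabeled_probs):
    """For each class k, pick the score of the n_k-th highest-scoring unlabeled
    instance, where n_k matches the empirical positive rate of class k."""
    n_labeled = len(labeled_targets)
    n_unlabeled = len(unlabeled_probs)
    n_classes = len(labeled_targets[0])
    thresholds = []
    for k in range(n_classes):
        prior = sum(row[k] for row in labeled_targets) / n_labeled
        n_pos = round(prior * n_unlabeled)  # assumed rounding rule
        ranked = sorted((row[k] for row in unlabeled_probs), reverse=True)
        # With no expected positives, no score can pass the threshold.
        thresholds.append(ranked[n_pos - 1] if n_pos > 0 else float("inf"))
    return thresholds


def pseudo_labels(unlabeled_probs, thresholds):
    """Assign label k to an instance when its score reaches threshold k."""
    return [[int(p >= t) for p, t in zip(row, thresholds)] for row in unlabeled_probs]


labeled_targets = [[1, 0], [1, 1], [0, 0], [1, 0]]  # class rates: 0.75, 0.25
unlabeled_probs = [[0.9, 0.2], [0.8, 0.7], [0.3, 0.1], [0.6, 0.4]]
thresholds = class_aware_thresholds(labeled_targets, unlabeled_probs)
labels = pseudo_labels(unlabeled_probs, thresholds)
```

Tied scores at the threshold can produce slightly more positives than `n_pos`; a real implementation would need to decide how to break such ties.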
