Michal Perdoch

Multiface: A Dataset for Neural Face Rendering

Jul 22, 2022

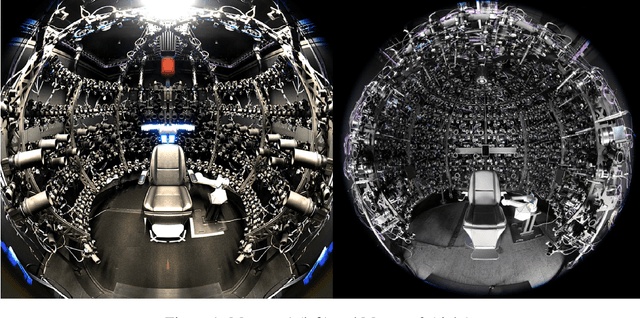

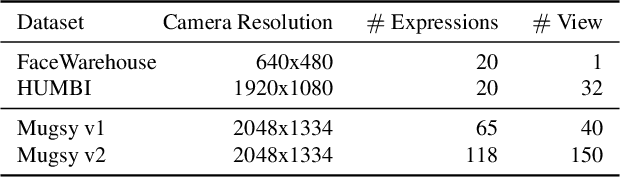

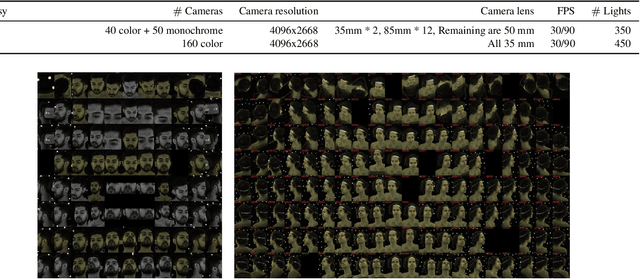

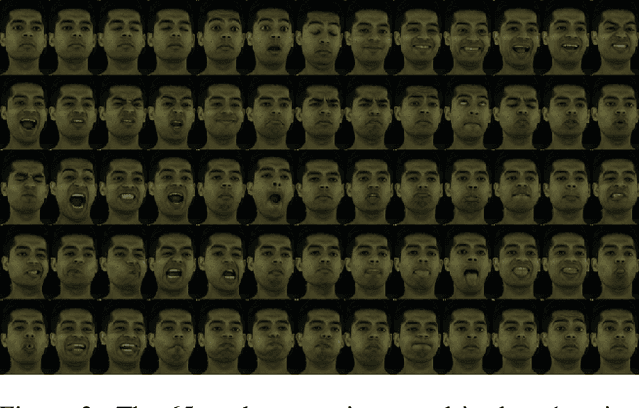

Abstract: Photorealistic avatars of human faces have come a long way in recent years, yet research in this area is limited by a lack of publicly available, high-quality datasets covering both dense multi-view camera captures and rich facial expressions of the captured subjects. In this work, we present Multiface, a new multi-view, high-resolution human face dataset collected from 13 identities at Reality Labs Research for neural face rendering. We introduce Mugsy, a large-scale multi-camera apparatus to capture high-resolution synchronized videos of a facial performance. The goal of Multiface is to close the gap in accessibility to high-quality data in the academic community and to enable research in VR telepresence. Along with the release of the dataset, we conduct ablation studies on the influence of different model architectures on the model's capacity to interpolate novel viewpoints and expressions. With a conditional VAE model serving as our baseline, we found that adding spatial bias, a texture warp field, and residual connections improves performance on novel view synthesis. Our code and data are available at: https://github.com/facebookresearch/multiface

BabelCalib: A Universal Approach to Calibrating Central Cameras

Sep 20, 2021

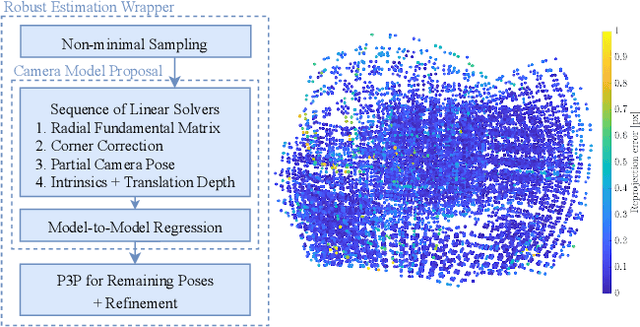

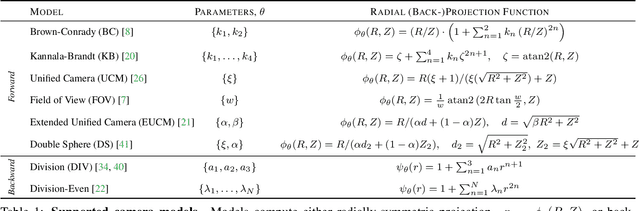

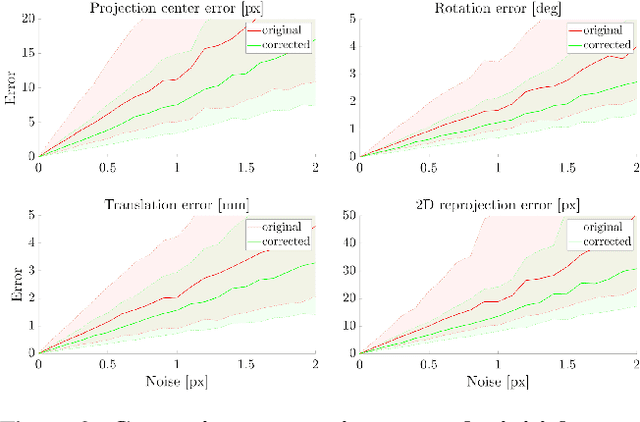

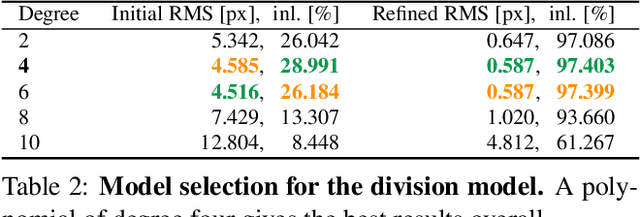

Abstract: Existing calibration methods occasionally fail for large field-of-view cameras due to the non-linearity of the underlying problem and the lack of good initial values for all parameters of the camera model used. This might occur because a simpler projection model is assumed in an initial step, or a poor initial guess for the internal parameters is pre-defined. Many of the difficulties of general camera calibration lie in the use of a forward projection model. We side-step these challenges by first proposing a solver to calibrate the parameters in terms of a back-projection model and then regressing the parameters for a target forward model. These steps are incorporated in a robust estimation framework to cope with outlying detections. Extensive experiments demonstrate that our approach is very reliable and returns the most accurate calibration parameters as measured on the downstream task of absolute pose estimation on test sets. The code is released at https://github.com/ylochman/babelcalib.
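The two-step idea in the abstract (calibrate a back-projection model, then regress a forward model from it) can be illustrated with a toy radial example. This is a minimal sketch, not the BabelCalib solver: it assumes a one-parameter division model as the back-projection model and a two-term odd polynomial in the ray angle as the target forward model, and all function names are hypothetical.

```python
import math

def backproject_angle(r, lam):
    """Angle between the optical axis and the ray through a pixel at radius r.

    Assumes a one-parameter division model: the ray direction in the
    (radial, z) plane is (r, 1 + lam * r**2).
    """
    return math.atan2(r, 1.0 + lam * r * r)

def fit_forward_poly(lam, r_max=1.0, n=50):
    """Least-squares fit of r ~ a1*theta + a2*theta**3 to samples of the
    back-projection model, solved through the 2x2 normal equations."""
    s11 = s12 = s22 = b1 = b2 = 0.0
    for i in range(1, n + 1):
        r = r_max * i / n
        theta = backproject_angle(r, lam)
        x1, x2 = theta, theta ** 3
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        b1 += x1 * r
        b2 += x2 * r
    det = s11 * s22 - s12 * s12
    a1 = (b1 * s22 - b2 * s12) / det
    a2 = (s11 * b2 - s12 * b1) / det
    return a1, a2

def forward_radius(theta, a1, a2):
    """Forward model: image radius of a ray at angle theta from the axis."""
    return a1 * theta + a2 * theta ** 3

if __name__ == "__main__":
    lam = -0.2  # assumed distortion value, chosen only for illustration
    a1, a2 = fit_forward_poly(lam)
    r = 0.7
    print(r, forward_radius(backproject_angle(r, lam), a1, a2))
```

The regression is linear in the forward-model coefficients, which is why no initial guess is needed in this toy setting.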

MODS: Fast and Robust Method for Two-View Matching

May 01, 2016

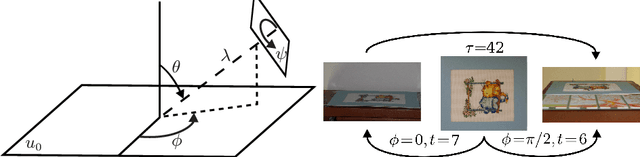

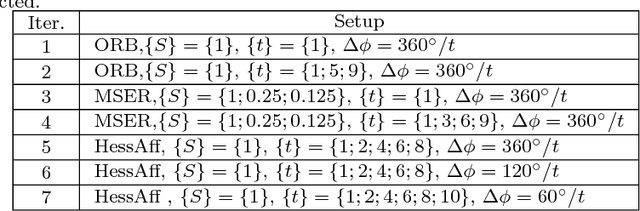

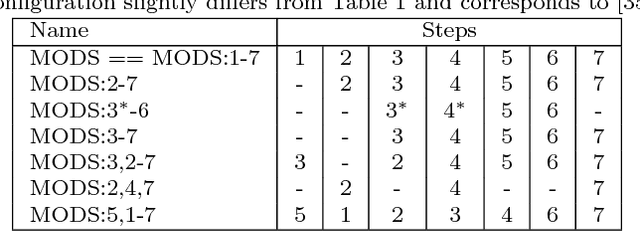

Abstract: A novel algorithm for wide-baseline matching called MODS - Matching On Demand with view Synthesis - is presented. The MODS algorithm is experimentally shown to solve a broader range of wide-baseline problems than the state of the art while being nearly as fast as standard matchers on simple problems. The apparent robustness vs. speed trade-off is finessed by the use of progressively more time-consuming feature detectors and by on-demand generation of synthesized images, which continues until a reliable estimate of geometry is obtained. We introduce an improved method for tentative correspondence selection, applicable both with and without view synthesis. A modification of the standard first to second nearest distance rule increases the number of correct matches by 5-20% at no additional computational cost. Performance of the MODS algorithm is evaluated on several standard publicly available datasets, and on a new set of geometrically challenging wide-baseline problems that is made public together with the ground truth. Experiments show that MODS outperforms the state of the art in robustness and speed. Moreover, MODS performs well on other classes of difficult two-view problems, such as matching of images from different modalities, with a wide temporal baseline, or with significant lighting changes.
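For reference, the standard first to second nearest distance rule that the abstract says MODS modifies can be sketched as follows. This shows only the baseline rule, not the MODS modification, which the abstract does not specify. The function name and the 0.8 threshold are assumptions chosen for illustration.

```python
import math

def ratio_test_matches(desc_a, desc_b, max_ratio=0.8):
    """Return (i, j) pairs where desc_b[j] is the nearest neighbour of
    desc_a[i] and is clearly closer than the second-nearest neighbour."""
    matches = []
    for i, d in enumerate(desc_a):
        # Distances from descriptor i to every descriptor in the other image.
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(desc_b))
        if len(dists) < 2:
            continue  # the rule needs a second-nearest neighbour
        (d1, j1), (d2, _) = dists[0], dists[1]
        # Keep the match only if the best distance is distinctive enough.
        if d1 < max_ratio * d2:
            matches.append((i, j1))
    return matches

if __name__ == "__main__":
    a = [(0.0, 0.0), (10.0, 10.0)]
    b = [(0.0, 1.0), (5.0, 5.0), (10.0, 10.5)]
    print(ratio_test_matches(a, b))
```

The brute-force search is quadratic in the number of descriptors; it is kept here only to make the rule itself easy to read.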

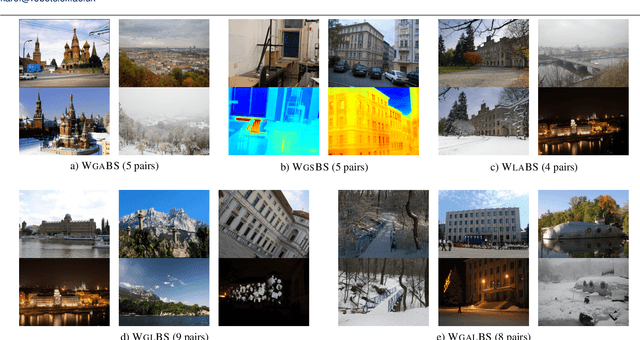

WxBS: Wide Baseline Stereo Generalizations

May 12, 2015

Abstract: We have presented a new problem -- the wide multiple baseline stereo (WxBS) -- which considers matching of images that simultaneously differ in more than one image acquisition factor such as viewpoint, illumination, sensor type or where object appearance changes significantly, e.g. over time. A new dataset with the ground truth for evaluation of matching algorithms has been introduced and will be made public. We have extensively tested a large set of popular and recent detectors and descriptors and show that the combination of RootSIFT and HalfRootSIFT as descriptors with MSER and Hessian-Affine detectors works best for many different nuisance factors. We show that simple adaptive thresholding improves Hessian-Affine, DoG, MSER (and possibly other) detectors and allows them to be used on infrared and low-contrast images. A novel matching algorithm for addressing the WxBS problem has been introduced. We have shown experimentally that the WxBS-M matcher dominates the state-of-the-art methods on both the new and existing datasets.
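The RootSIFT descriptor mentioned above is commonly defined as an L1 normalization of a SIFT descriptor followed by an element-wise square root. A minimal sketch of that transform is given below, assuming a descriptor with non-negative entries (as SIFT histograms are). The function name and the `eps` guard are illustrative choices, and HalfRootSIFT is not covered.

```python
import math

def root_sift(descriptor, eps=1e-12):
    """L1-normalize a non-negative descriptor, then take element-wise
    square roots. The result has (approximately) unit L2 norm."""
    total = sum(descriptor) + eps  # eps avoids division by zero
    return [math.sqrt(v / total) for v in descriptor]

if __name__ == "__main__":
    d = root_sift([1.0, 3.0, 0.0, 0.0])
    print(d, sum(v * v for v in d))
```

After this transform, comparing descriptors with the Euclidean distance corresponds to comparing the original L1-normalized histograms with the Hellinger kernel.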

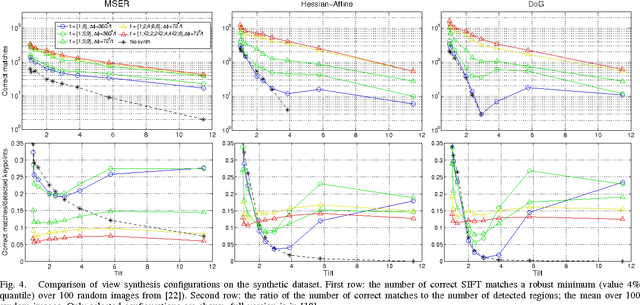

Two-View Matching with View Synthesis Revisited

Nov 11, 2013

Abstract: Wide-baseline matching focussing on problems with extreme viewpoint change is considered. We introduce the use of view synthesis with affine-covariant detectors to solve such problems and show that matching with the Hessian-Affine or MSER detectors outperforms the state-of-the-art ASIFT. To minimise the loss of speed caused by view synthesis, we propose the Matching On Demand with view Synthesis algorithm (MODS) that uses progressively more synthesized images and more (time-consuming) detectors until reliable estimation of geometry is possible. We show experimentally that the MODS algorithm solves problems beyond the state of the art and yet is comparable in speed to standard wide-baseline matchers on simpler problems. Minor contributions include an improved method for tentative correspondence selection, applicable both with and without view synthesis, and a view synthesis setup that greatly improves MSER robustness to blur and scale change while increasing its running time by only 10%.
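The on-demand control flow described in the abstract can be sketched as a loop over progressively more expensive stages that stops once the geometry estimate is deemed reliable. This is a rough sketch under stated assumptions, not the authors' implementation: the callables `detect_and_match` and `estimate_geometry`, the accumulation of correspondences across stages, and the `min_inliers` stopping criterion are all hypothetical.

```python
def match_on_demand(image_pair, stages, detect_and_match, estimate_geometry,
                    min_inliers=15):
    """Run stages in order of increasing cost and stop at the first one
    whose accumulated correspondences yield a reliable geometry estimate.

    stages            -- configurations ordered from cheapest to costliest
                         (e.g. detector choice plus set of synthesized views)
    detect_and_match  -- hypothetical callable: (image_pair, stage) -> list
                         of tentative correspondences
    estimate_geometry -- hypothetical callable: correspondences ->
                         (model or None, list of inliers)
    """
    correspondences = []
    for stage in stages:
        # Assumption: correspondences from earlier stages are reused.
        correspondences.extend(detect_and_match(image_pair, stage))
        model, inliers = estimate_geometry(correspondences)
        # Assumption: "reliable" means enough inliers support the model.
        if model is not None and len(inliers) >= min_inliers:
            return model, inliers, stage
    return None, [], None
```

Easy image pairs exit after the first cheap stage, which is how such a scheme can stay close to the speed of a standard matcher on simple problems while still handling harder ones.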
