Michaela Vollmer

Adaptive Fusion of Multi-view Remote Sensing data for Optimal Sub-field Crop Yield Prediction

Jan 22, 2024

Abstract: Accurate crop yield prediction is of utmost importance for informed decision-making in agriculture, aiding farmers and industry stakeholders. However, this task is complex and depends on multiple factors, such as environmental conditions, soil properties, and management practices. Combining heterogeneous data views poses fusion challenges, such as identifying each view's contribution to the predictive task. We present a novel multi-view learning approach to predict crop yield for different crops (soybean, wheat, rapeseed) and regions (Argentina, Uruguay, and Germany). Our multi-view input data includes multi-spectral optical images from Sentinel-2 satellites and weather data as dynamic features during the crop growing season, complemented by static features like soil properties and topographic information. To effectively fuse the data, we introduce a Multi-view Gated Fusion (MVGF) model, comprising dedicated view-encoders and a Gated Unit (GU) module. The view-encoders handle the heterogeneity of data sources with varying temporal resolutions by learning a view-specific representation. These representations are adaptively fused via a weighted sum. The fusion weights are computed for each sample by the GU using a concatenation of the view-representations. The MVGF model is trained at sub-field level with 10 m resolution pixels. Our evaluations show that the MVGF outperforms conventional models on the same task, achieving the best results by incorporating all the data sources, unlike typical fusion results reported in the literature. For Argentina, the MVGF model achieves an R² value of 0.68 at sub-field yield prediction, while at field level evaluation (comparing field averages), it reaches around 0.80 across different countries. The GU module learned different weights based on the country and crop type, aligning with the variable significance of each data source to the prediction task.
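
The gated fusion step described in this abstract (per-sample weights computed from the concatenated view representations, followed by a weighted sum) can be sketched roughly as follows. This is a minimal NumPy illustration, not the authors' implementation: the linear gate, the softmax normalisation, the function names, and the random stand-in representations are all assumptions made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def gated_fusion(view_reps, gate_w, gate_b):
    """Fuse V view representations of shape (N, D) with per-sample weights.

    gate_w has shape (V * D, V) and gate_b has shape (V,). Both are
    hypothetical parameters of an assumed linear gate.
    """
    stacked = np.stack(view_reps, axis=1)                  # (N, V, D)
    concat = stacked.reshape(stacked.shape[0], -1)         # (N, V * D)
    weights = softmax(concat @ gate_w + gate_b, axis=1)    # (N, V), rows sum to 1
    fused = (weights[:, :, None] * stacked).sum(axis=1)    # (N, D) weighted sum
    return fused, weights

# Stand-ins for encoder outputs (e.g. optical, weather, soil, topography).
rng = np.random.default_rng(0)
n_samples, n_views, dim = 5, 4, 8
views = [rng.normal(size=(n_samples, dim)) for _ in range(n_views)]
gate_w = rng.normal(scale=0.1, size=(n_views * dim, n_views))
gate_b = np.zeros(n_views)

fused, weights = gated_fusion(views, gate_w, gate_b)
```

Because the weights are returned alongside the fused representation, they can be averaged per country or crop to inspect how much each view contributes, which is the kind of analysis the abstract reports for the GU module.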

Predicting Crop Yield With Machine Learning: An Extensive Analysis Of Input Modalities And Models On a Field and sub-field Level

Aug 17, 2023

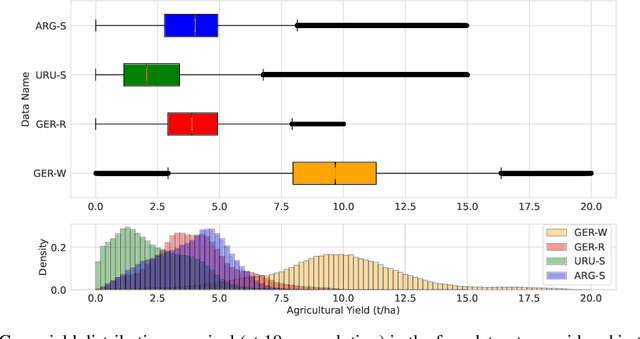

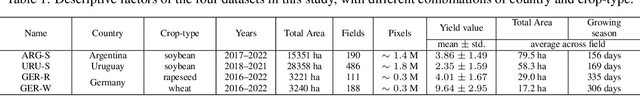

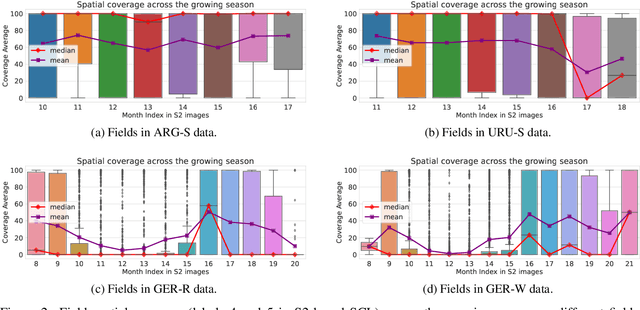

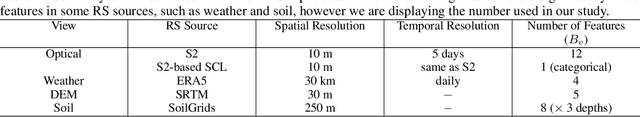

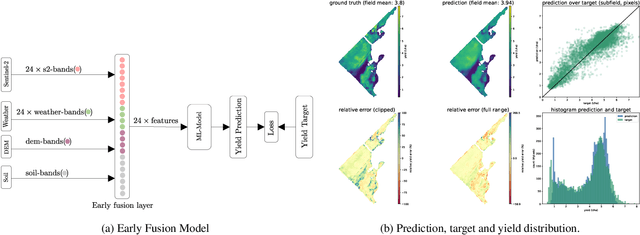

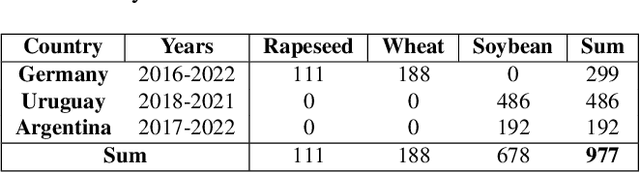

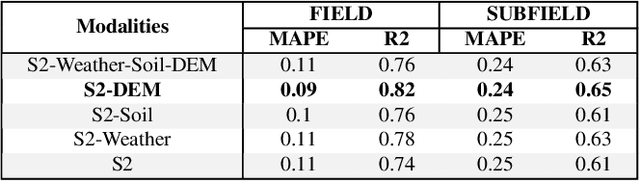

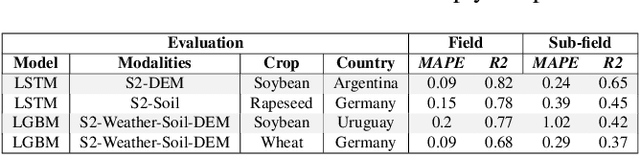

Abstract: We introduce a simple yet effective early fusion method for crop yield prediction that handles multiple input modalities with different temporal and spatial resolutions. We use high-resolution crop yield maps as ground truth data to train methods at the sub-field level that are agnostic to both the crop and the machine learning model. We use Sentinel-2 satellite imagery as the primary input modality, with other complementary modalities including weather, soil, and DEM data. The proposed method uses input modalities available with global coverage, making the framework globally scalable. We explicitly highlight the importance of input modalities for crop yield prediction and emphasize that the best-performing combination of input modalities depends on region, crop, and chosen model.
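
One way to picture early fusion across modalities with different temporal resolutions is to bring each time series to a fixed number of temporal bins and concatenate everything into a single feature vector per pixel. The sketch below assumes this binning strategy; the abstract does not specify the alignment procedure, and the function names, array shapes, and bin count are hypothetical.

```python
import numpy as np

def temporal_bins(series, n_bins):
    """Average a (T, F) time series into n_bins consecutive chunks -> (n_bins, F).

    Assumes T >= n_bins so that no chunk is empty.
    """
    chunks = np.array_split(series, n_bins, axis=0)
    return np.stack([c.mean(axis=0) for c in chunks])

def early_fuse(s2, weather, soil, dem, n_bins=4):
    """Concatenate all modalities for one pixel into a flat feature vector."""
    parts = [
        temporal_bins(s2, n_bins).ravel(),        # optical time series
        temporal_bins(weather, n_bins).ravel(),   # weather time series
        np.asarray(soil, dtype=float).ravel(),    # static soil properties
        np.asarray(dem, dtype=float).ravel(),     # static elevation features
    ]
    return np.concatenate(parts)

# Hypothetical inputs for a single pixel.
rng = np.random.default_rng(1)
s2 = rng.random((12, 10))        # 12 acquisitions x 10 spectral bands
weather = rng.random((120, 3))   # 120 daily records x 3 variables
soil = rng.random(5)             # 5 soil properties
dem = rng.random(2)              # e.g. elevation and slope

features = early_fuse(s2, weather, soil, dem)
```

The resulting vector has the same length for every pixel regardless of how many observations each modality provides, so it can be passed to any tabular regressor, which is consistent with the model-agnostic framing in the abstract.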

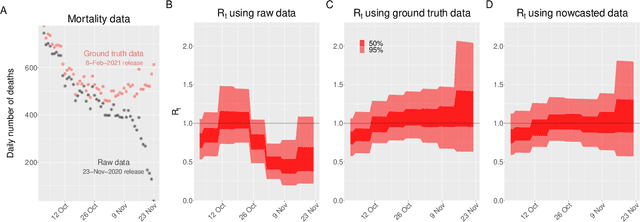

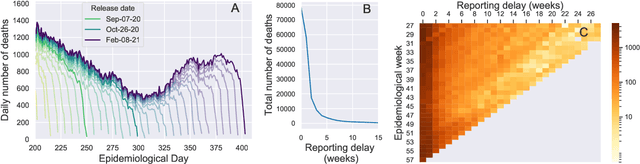

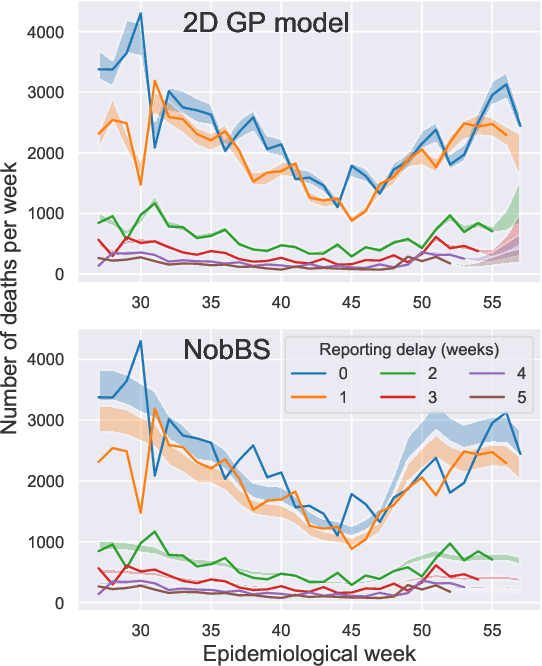

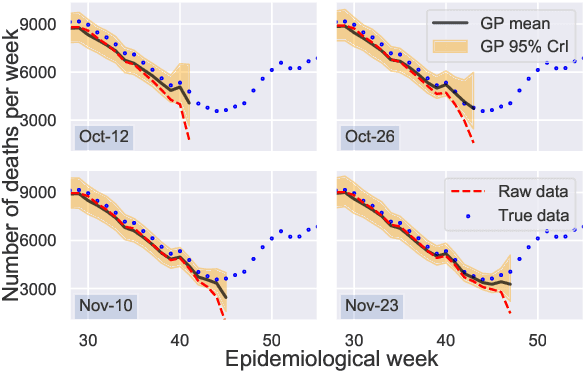

Gaussian Process Nowcasting: Application to COVID-19 Mortality Reporting

Feb 22, 2021

Abstract: Updating observations of a signal due to delays in the measurement process is a common problem in signal processing, with prominent examples in a wide range of fields. An important example of this problem is the nowcasting of COVID-19 mortality: given a stream of reported counts of daily deaths, can we correct for the delays in reporting to paint an accurate picture of the present, with uncertainty? Without this correction, raw data will often mislead by suggesting an improving situation. We present a flexible approach using a latent Gaussian process that is capable of describing the changing auto-correlation structure present in the reporting time-delay surface. This approach also yields robust estimates of uncertainty for the nowcasted numbers of deaths. We test assumptions in model specification, such as the choice of kernel or hyperpriors, and evaluate model performance on a challenging real dataset from Brazil. Our experiments show that Gaussian process nowcasting performs favourably against both comparable methods and a small sample of expert human predictions. Our approach has substantial practical utility in disease modelling: by applying it to COVID-19 mortality data from Brazil, where reporting delays are large, we can make informative predictions on important epidemiological quantities such as the current effective reproduction number.
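
To see why uncorrected counts can suggest an improving situation, consider a toy example with a constant true death count and a fixed reporting-delay distribution. The sketch below is only an illustration of the reporting-delay problem using a naive completeness correction; it is not the latent Gaussian process model from the paper, and the delay distribution and counts are invented for the example.

```python
import numpy as np

# Hypothetical fraction of deaths reported d days after they occur.
delay_pmf = np.array([0.2, 0.3, 0.2, 0.15, 0.1, 0.05])
completeness = np.cumsum(delay_pmf)   # fraction reported within d days

# Assumed flat epidemic: 100 deaths per day for 10 days.
true_deaths = np.full(10, 100.0)

def reported_so_far(true_counts, completeness):
    """Counts visible today, given how long ago each day was."""
    n = len(true_counts)
    age = np.arange(n)[::-1]          # days elapsed; the latest day has age 0
    frac = completeness[np.minimum(age, len(completeness) - 1)]
    return true_counts * frac, frac

reported, frac = reported_so_far(true_deaths, completeness)

# Naive correction: divide by the known completeness fraction.
nowcast = reported / frac
```

Here `reported` drops sharply over the most recent days even though `true_deaths` is flat, which is the misleading pattern the abstract describes. The naive division recovers the truth only because the delay distribution is known exactly and noise-free; in practice the delay structure changes over time and must be estimated with uncertainty, which is the gap the Gaussian process approach addresses.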
