Michael Penwarden

Kolmogorov n-Widths for Multitask Physics-Informed Machine Learning (PIML) Methods: Towards Robust Metrics

Feb 16, 2024

Abstract:Physics-informed machine learning (PIML) as a means of solving partial differential equations (PDE) has garnered much attention in the Computational Science and Engineering (CS&E) world. This topic encompasses a broad array of methods and models aimed at solving a single or a collection of PDE problems, called multitask learning. PIML is characterized by the incorporation of physical laws into the training process of machine learning models in lieu of large data when solving PDE problems. Despite the overall success of this collection of methods, it remains incredibly difficult to analyze, benchmark, and generally compare one approach to another. Using Kolmogorov n-widths as a measure of effectiveness of approximating functions, we judiciously apply this metric in the comparison of various multitask PIML architectures. We compute lower accuracy bounds and analyze the model's learned basis functions on various PDE problems. This is the first objective metric for comparing multitask PIML architectures and helps remove uncertainty in model validation from selective sampling and overfitting. We also identify avenues of improvement for model architectures, such as the choice of activation function, which can drastically affect model generalization to "worst-case" scenarios, which is not observed when reporting task-specific errors. We also incorporate this metric into the optimization process through regularization, which improves the models' generalizability over the multitask PDE problem.

Neural Operator Learning for Ultrasound Tomography Inversion

Apr 06, 2023

Abstract:Neural operator learning as a means of mapping between complex function spaces has garnered significant attention in the field of computational science and engineering (CS&E). In this paper, we apply Neural operator learning to the time-of-flight ultrasound computed tomography (USCT) problem. We learn the mapping between time-of-flight (TOF) data and the heterogeneous sound speed field using a full-wave solver to generate the training data. This novel application of operator learning circumnavigates the need to solve the computationally intensive iterative inverse problem. The operator learns the non-linear mapping offline and predicts the heterogeneous sound field with a single forward pass through the model. This is the first time operator learning has been used for ultrasound tomography and is the first step in potential real-time predictions of soft tissue distribution for tumor identification in beast imaging.

A unified scalable framework for causal sweeping strategies for Physics-Informed Neural Networks (PINNs) and their temporal decompositions

Feb 28, 2023

Abstract:Physics-informed neural networks (PINNs) as a means of solving partial differential equations (PDE) have garnered much attention in Computational Science and Engineering (CS&E). However, a recent topic of interest is exploring various training (i.e., optimization) challenges - in particular, arriving at poor local minima in the optimization landscape results in a PINN approximation giving an inferior, and sometimes trivial, solution when solving forward time-dependent PDEs with no data. This problem is also found in, and in some sense more difficult, with domain decomposition strategies such as temporal decomposition using XPINNs. To address this problem, we first enable a general categorization for previous causality methods, from which we identify a gap in the previous approaches. We then furnish examples and explanations for different training challenges, their cause, and how they relate to information propagation and temporal decomposition. We propose a solution to fill this gap by reframing these causality concepts into a generalized information propagation framework in which any prior method or combination of methods can be described. Our unified framework moves toward reducing the number of PINN methods to consider and the implementation and retuning cost for thorough comparisons. We propose a new stacked-decomposition method that bridges the gap between time-marching PINNs and XPINNs. We also introduce significant computational speed-ups by using transfer learning concepts to initialize subnetworks in the domain and loss tolerance-based propagation for the subdomains. We formulate a new time-sweeping collocation point algorithm inspired by the previous PINNs causality literature, which our framework can still describe, and provides a significant computational speed-up via reduced-cost collocation point segmentation. Finally, we provide numerical results on baseline PDE problems.

Deep neural operators can serve as accurate surrogates for shape optimization: A case study for airfoils

Feb 02, 2023

Abstract:Deep neural operators, such as DeepONets, have changed the paradigm in high-dimensional nonlinear regression from function regression to (differential) operator regression, paving the way for significant changes in computational engineering applications. Here, we investigate the use of DeepONets to infer flow fields around unseen airfoils with the aim of shape optimization, an important design problem in aerodynamics that typically taxes computational resources heavily. We present results which display little to no degradation in prediction accuracy, while reducing the online optimization cost by orders of magnitude. We consider NACA airfoils as a test case for our proposed approach, as their shape can be easily defined by the four-digit parametrization. We successfully optimize the constrained NACA four-digit problem with respect to maximizing the lift-to-drag ratio and validate all results by comparing them to a high-order CFD solver. We find that DeepONets have low generalization error, making them ideal for generating solutions of unseen shapes. Specifically, pressure, density, and velocity fields are accurately inferred at a fraction of a second, hence enabling the use of general objective functions beyond the maximization of the lift-to-drag ratio considered in the current work.

Meta Learning of Interface Conditions for Multi-Domain Physics-Informed Neural Networks

Oct 23, 2022

Abstract:Physics-informed neural networks (PINNs) are emerging as popular mesh-free solvers for partial differential equations (PDEs). Recent extensions decompose the domain, applying different PINNs to solve the equation in each subdomain and aligning the solution at the interface of the subdomains. Hence, they can further alleviate the problem complexity, reduce the computational cost, and allow parallelization. However, the performance of the multi-domain PINNs is sensitive to the choice of the interface conditions for solution alignment. While quite a few conditions have been proposed, there is no suggestion about how to select the conditions according to specific problems. To address this gap, we propose META Learning of Interface Conditions (METALIC), a simple, efficient yet powerful approach to dynamically determine the optimal interface conditions for solving a family of parametric PDEs. Specifically, we develop two contextual multi-arm bandit models. The first one applies to the entire training procedure, and online updates a Gaussian process (GP) reward surrogate that given the PDE parameters and interface conditions predicts the solution error. The second one partitions the training into two stages, one is the stochastic phase and the other deterministic phase; we update a GP surrogate for each phase to enable different condition selections at the two stages so as to further bolster the flexibility and performance. We have shown the advantage of METALIC on four bench-mark PDE families.

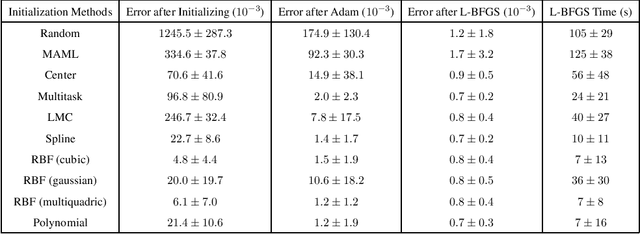

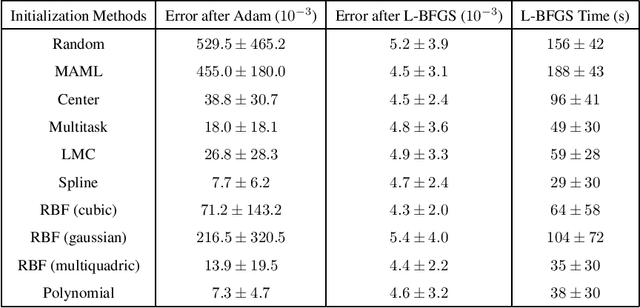

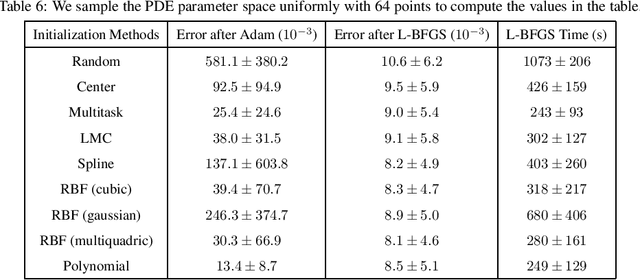

Physics-Informed Neural Networks for Parameterized PDEs: A Metalearning Approach

Oct 26, 2021

Abstract:Physics-informed neural networks (PINNs) as a means of discretizing partial differential equations (PDEs) are garnering much attention in the Computational Science and Engineering (CS&E) world. At least two challenges exist for PINNs at present: an understanding of accuracy and convergence characteristics with respect to tunable parameters and identification of optimization strategies that make PINNs as efficient as other computational science tools. The cost of PINNs training remains a major challenge of Physics-informed Machine Learning (PiML) -- and, in fact, machine learning (ML) in general. This paper is meant to move towards addressing the latter through the study of PINNs for parameterized PDEs. Following the ML world, we introduce metalearning of PINNs for parameterized PDEs. By introducing metalearning and transfer learning concepts, we can greatly accelerate the PINNs optimization process. We present a survey of model-agnostic metalearning, and then discuss our model-aware metalearning applied to PINNs. We provide theoretically motivated and empirically backed assumptions that make our metalearning approach possible. We then test our approach on various canonical forward parameterized PDEs that have been presented in the emerging PINNs literature.

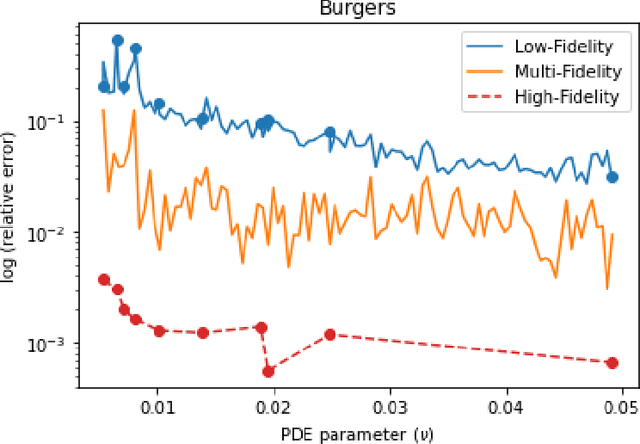

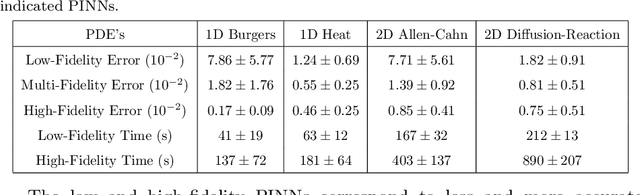

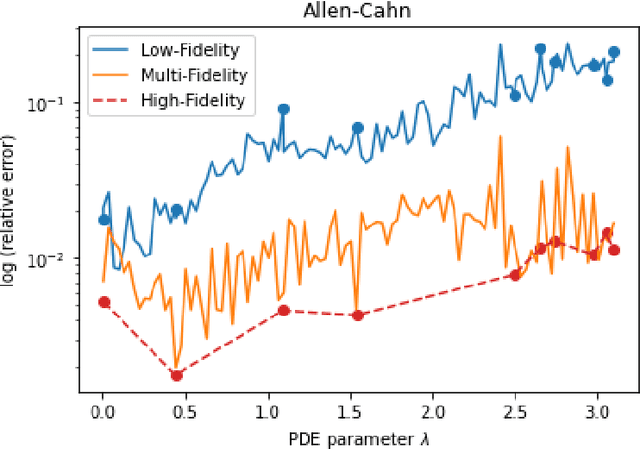

Multifidelity Modeling for Physics-Informed Neural Networks (PINNs)

Jun 25, 2021

Abstract:Multifidelity simulation methodologies are often used in an attempt to judiciously combine low-fidelity and high-fidelity simulation results in an accuracy-increasing, cost-saving way. Candidates for this approach are simulation methodologies for which there are fidelity differences connected with significant computational cost differences. Physics-informed Neural Networks (PINNs) are candidates for these types of approaches due to the significant difference in training times required when different fidelities (expressed in terms of architecture width and depth as well as optimization criteria) are employed. In this paper, we propose a particular multifidelity approach applied to PINNs that exploits low-rank structure. We demonstrate that width, depth, and optimization criteria can be used as parameters related to model fidelity, and show numerical justification of cost differences in training due to fidelity parameter choices. We test our multifidelity scheme on various canonical forward PDE models that have been presented in the emerging PINNs literature.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge