Mhafuzul Islam

Improving the Environmental Perception of Autonomous Vehicles using Deep Learning-based Audio Classification

Sep 09, 2022

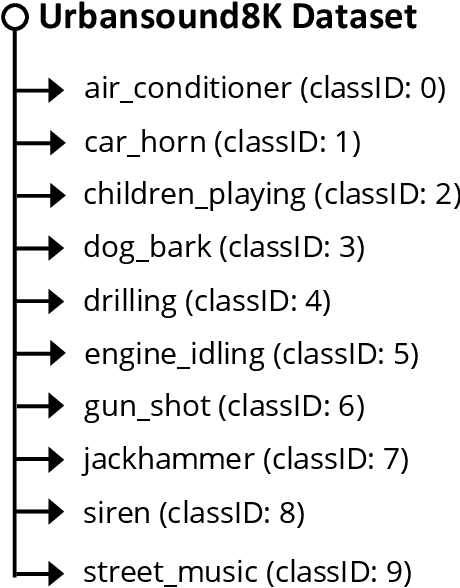

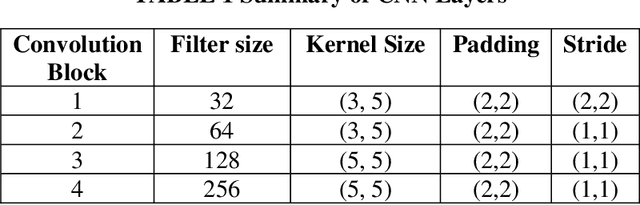

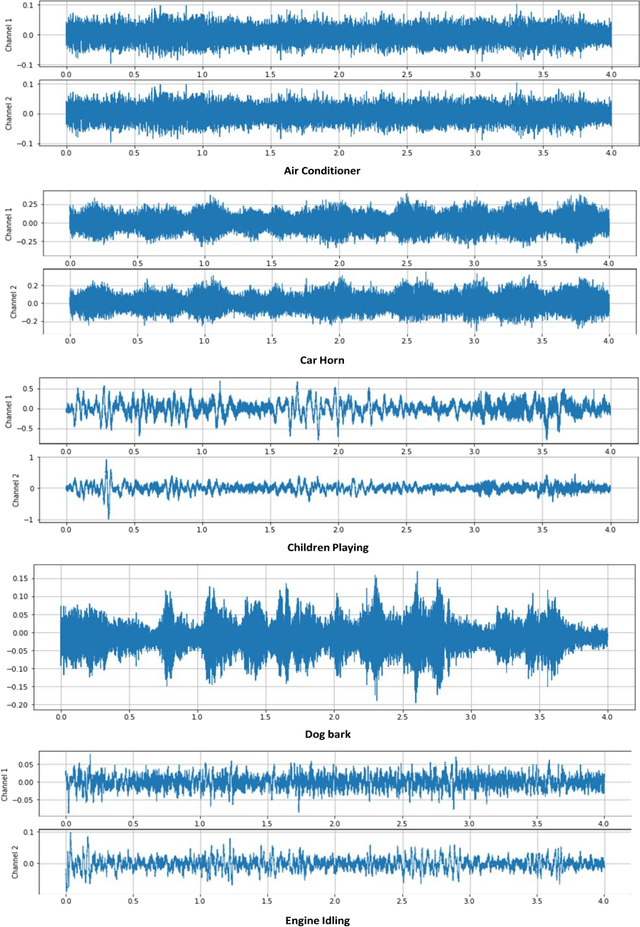

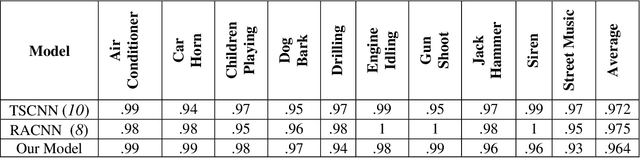

Abstract: A sense of hearing is crucial for autonomous vehicles (AVs) to better perceive their surrounding environment. Although the visual sensors of an AV, such as camera, lidar, and radar, help it see its surroundings, an AV cannot see beyond those sensors' line of sight. An AV's sense of hearing, on the other hand, is not obstructed by line of sight. For example, an AV can identify an emergency vehicle's siren through audio classification even when the emergency vehicle is not within the AV's line of sight. Thus, auditory perception is complementary to the camera, lidar, and radar-based perception systems. This paper presents a robust deep learning-based audio classification framework that aims to improve environmental perception for AVs. The framework leverages a deep Convolutional Neural Network (CNN) to classify different audio classes. UrbanSound8k, an urban environment dataset, is used to train and test the framework. Seven audio classes, i.e., air conditioner, car horn, children playing, dog bark, engine idling, gunshot, and siren, are selected from the UrbanSound8k dataset because of their relevance to AVs. Our framework can classify different audio classes with 97.82% accuracy. Moreover, the audio classification accuracies with all ten classes are presented, which shows that our framework performed better on AV-related sounds than the existing audio classification frameworks.

Hybrid Quantum-Classical Neural Network for Cloud-supported In-Vehicle Cyberattack Detection

Oct 14, 2021

Abstract: A classical computer works with ones and zeros, whereas a quantum computer uses ones, zeros, and superpositions of ones and zeros, which enables quantum computers to perform a vast number of calculations simultaneously compared to classical computers. In a cloud-supported cyber-physical system environment, running a machine learning application on quantum computers is often difficult because of the limitations of current quantum devices. However, in a hybrid quantum-classical neural network (NN), the classical NN can reduce complex, high-dimensional features to a smaller but more informative feature space that existing quantum computers can process. In this study, we develop a hybrid quantum-classical NN to detect an amplitude shift cyber-attack on an in-vehicle controller area network (CAN) dataset. We show that with the hybrid quantum-classical NN it is possible to achieve an attack detection accuracy of 94%, which is higher than that of a long short-term memory (LSTM) NN (87%) or a quantum NN alone (62%).

A Sensor Fusion-based GNSS Spoofing Attack Detection Framework for Autonomous Vehicles

Aug 19, 2021

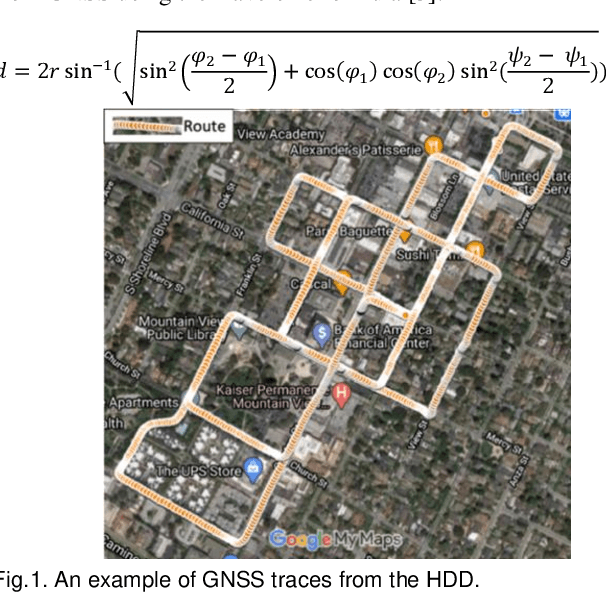

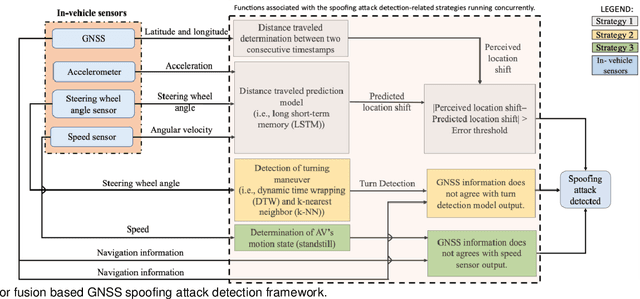

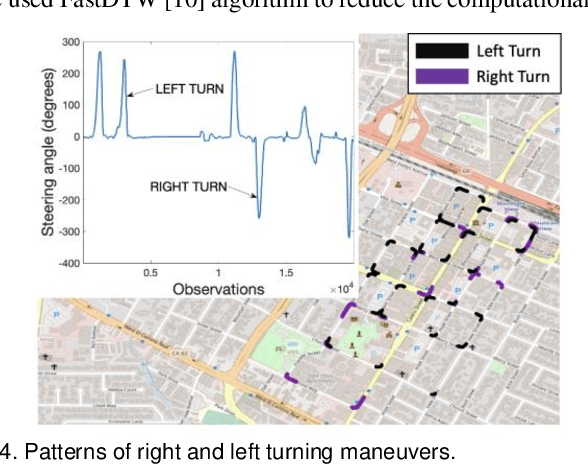

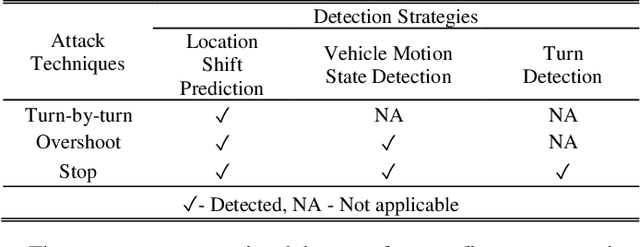

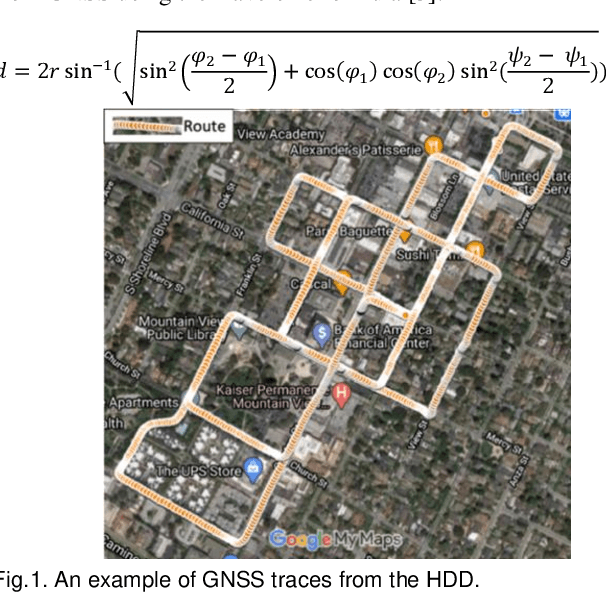

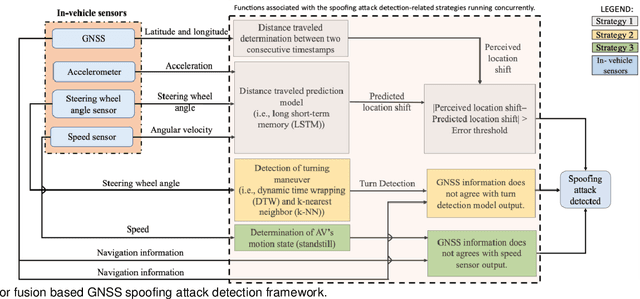

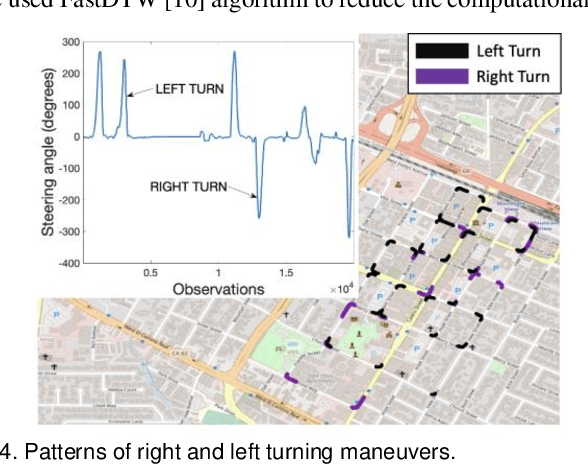

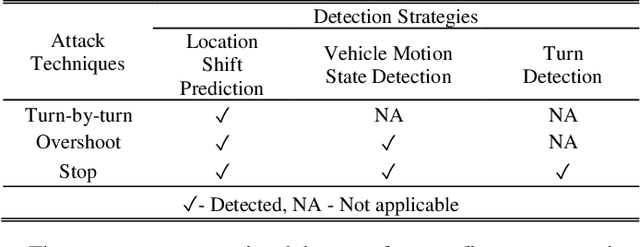

Abstract: This paper presents a sensor fusion-based Global Navigation Satellite System (GNSS) spoofing attack detection framework for autonomous vehicles (AVs) that consists of two concurrent strategies: (i) detection of vehicle state using the predicted location shift (i.e., the distance traveled between two consecutive timestamps) and monitoring of the vehicle motion state (i.e., standstill or in motion); and (ii) detection and classification of turns (i.e., left or right). Data from multiple low-cost in-vehicle sensors (i.e., accelerometer, steering angle sensor, speed sensor, and GNSS) are fused and fed into a recurrent neural network model, specifically a long short-term memory (LSTM) network, to predict the location shift, i.e., the distance that an AV travels between two consecutive timestamps. This location shift is then compared with the GNSS-based location shift to detect an attack. We then combine the k-Nearest Neighbors (k-NN) and Dynamic Time Warping (DTW) algorithms to detect and classify left and right turns using data from the steering angle sensor. To demonstrate the efficacy of the sensor fusion-based attack detection framework, attack datasets are created for four unique and sophisticated spoofing attacks (turn-by-turn, overshoot, wrong turn, and stop) using the publicly available real-world Honda Research Institute Driving Dataset (HDD). Our analysis reveals that the sensor fusion-based detection framework successfully detects all four types of spoofing attacks within the required computational latency threshold.
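As a rough illustration of how k-NN and DTW can be combined to classify turns from a steering-angle trace, the sketch below uses a textbook DTW distance and a majority vote over the nearest labelled templates. It is not the authors' implementation: the function names, the template traces, and the sign convention (positive angle meaning a left turn) are all assumptions made for the example.

```python
from collections import Counter


def dtw_distance(a, b):
    """Textbook dynamic time warping distance with an absolute-difference cost."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify_turn(window, templates, k=1):
    """Label a steering-angle window by majority vote of its k DTW-nearest templates.

    templates is a list of (label, sequence) pairs.
    """
    ranked = sorted(templates, key=lambda t: dtw_distance(window, t[1]))
    votes = Counter(label for label, _ in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical steering-angle templates in degrees (assumed: positive = left).
TEMPLATES = [
    ("left", [0, 10, 30, 60, 30, 10, 0]),
    ("right", [0, -10, -30, -60, -30, -10, 0]),
    ("straight", [0, 1, -1, 0, 1, 0, 0]),
]

label = classify_turn([0, 5, 20, 45, 55, 25, 5, 0], TEMPLATES)
```

DTW is useful here because two turns of the same direction rarely take the same number of samples; the warping lets traces of different lengths and speeds be compared directly.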

Sensor Fusion-based GNSS Spoofing Attack Detection Framework for Autonomous Vehicles

Jun 05, 2021

Abstract: In this study, a sensor fusion-based GNSS spoofing attack detection framework is presented that consists of three concurrent strategies for an autonomous vehicle (AV): (i) prediction of location shift, (ii) detection of turns (left or right), and (iii) recognition of motion state (including the standstill state). Data from multiple low-cost in-vehicle sensors (i.e., accelerometer, steering angle sensor, speed sensor, and GNSS) are fused and fed into a recurrent neural network model, specifically a long short-term memory (LSTM) network, to predict the location shift, i.e., the distance that an AV travels between two consecutive timestamps. We then combine the k-Nearest Neighbors (k-NN) and Dynamic Time Warping (DTW) algorithms to detect turns using data from the steering angle sensor. In addition, data from an AV's speed sensor are used to recognize the AV's motion state, including the standstill state. To demonstrate the efficacy of the sensor fusion-based attack detection framework, attack datasets are created for three unique and sophisticated spoofing attacks (turn-by-turn, overshoot, and stop) using the publicly available real-world Honda Research Institute Driving Dataset (HDD). Our analysis reveals that the sensor fusion-based detection framework successfully detects all three types of spoofing attacks within the required computational latency threshold.

Prediction-Based GNSS Spoofing Attack Detection for Autonomous Vehicles

Oct 16, 2020

Abstract: The Global Navigation Satellite System (GNSS) provides Positioning, Navigation, and Timing (PNT) services for autonomous vehicles (AVs) using satellites and radio communications. Because of the lack of encryption, the open access of the coarse acquisition (C/A) codes, and the low strength of the signal, GNSS is vulnerable to spoofing attacks that compromise the navigational capability of the AV. A spoofing attack is difficult to detect because a spoofer (an attacker who performs a spoofing attack) can mimic the GNSS signal and transmit inaccurate location coordinates to an AV. In this study, we have developed a prediction-based spoofing attack detection strategy using the long short-term memory (LSTM) model, a recurrent neural network model. The LSTM model is used to predict the distance traveled between two consecutive locations of an autonomous vehicle. To develop the LSTM prediction model, we have used the publicly available real-world comma2k19 driving dataset. The training dataset contains different features (i.e., acceleration, steering wheel angle, speed, and distance traveled between two consecutive locations) extracted from the controller area network (CAN), GNSS, and inertial measurement unit (IMU) sensors of AVs. Based on the predicted distance traveled between the current location and the immediate future location of an autonomous vehicle, a threshold value is established using the positioning error of the GNSS device and the prediction error (i.e., maximum absolute error) of the distance traveled between the current location and the immediate future location. Our analysis revealed that the prediction-based spoofing attack detection strategy can successfully detect the attack in real-time.
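The threshold check described in this abstract can be sketched as follows. This is a minimal illustration, not the paper's code: the abstract does not state how the GNSS positioning error and the maximum absolute prediction error are combined, so simply adding them is an assumption, as are the function names, the use of the haversine formula for the GNSS-based distance, and the numeric values.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius used by the haversine formula


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two GNSS fixes given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def spoofing_threshold(gnss_position_error_m, max_abs_prediction_error_m):
    """Assumed combination rule: the two error sources are added."""
    return gnss_position_error_m + max_abs_prediction_error_m


def is_spoofed(predicted_shift_m, gnss_shift_m, threshold_m):
    """Flag an attack when the GNSS-reported shift deviates from the
    model-predicted shift by more than the threshold."""
    return abs(gnss_shift_m - predicted_shift_m) > threshold_m


# Hypothetical values: 3.0 m GNSS error, 0.5 m maximum absolute prediction error.
threshold = spoofing_threshold(3.0, 0.5)
normal = is_spoofed(predicted_shift_m=13.4, gnss_shift_m=14.0, threshold_m=threshold)
attack = is_spoofed(predicted_shift_m=13.4, gnss_shift_m=25.0, threshold_m=threshold)
```

In a real pipeline `predicted_shift_m` would come from the trained LSTM, and `gnss_shift_m` from `haversine_m` applied to two consecutive GNSS fixes.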

Change Point Models for Real-time Cyber Attack Detection in Connected Vehicle Environment

Mar 05, 2020

Abstract: Connected vehicle (CV) systems are vulnerable to potential cyber attacks because of increasing connectivity between their different components, such as vehicles, roadside infrastructure, and traffic management centers. However, it is a challenge to detect security threats in real-time and develop appropriate or effective countermeasures for a CV system because of the dynamic behavior of such attacks, the high computational power requirement, and the historical data requirement for training detection models. To address these challenges, statistical models, especially change point models, have potential for real-time anomaly detection. Thus, the objective of this study is to investigate the efficacy of two change point models, Expectation Maximization (EM) and two forms of Cumulative Summation (CUSUM) algorithms (i.e., typical and adaptive), for real-time V2I cyber attack detection in a CV environment. To demonstrate the efficacy of these models, we evaluated them for three different types of cyber attacks, denial of service (DOS), impersonation, and false information, using basic safety messages (BSMs) generated from CVs through simulation. Results from numerical analysis revealed that EM, CUSUM, and adaptive CUSUM could detect these cyber attacks, DOS, impersonation, and false information, with an accuracy of (99%, 100%, 100%), (98%, 10%, 100%), and (100%, 98%, 100%) respectively.
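For readers unfamiliar with change point detection, the following is a minimal sketch of the textbook one-sided CUSUM test, which accumulates positive deviations from a baseline and raises an alarm once the sum exceeds a threshold. It is not the typical or adaptive CUSUM variant evaluated in the paper; the function name, the parameter names, and the sample message rates are assumptions made for the example.

```python
def cusum_alarm_index(samples, target_mean, slack, threshold):
    """Return the index of the first sample at which the one-sided CUSUM
    statistic exceeds `threshold`, or None if no alarm is raised.

    The statistic is S_t = max(0, S_{t-1} + x_t - target_mean - slack).
    """
    s = 0.0
    for i, x in enumerate(samples):
        s = max(0.0, s + (x - target_mean - slack))
        if s > threshold:
            return i
    return None


# Hypothetical message counts per interval; the last three values mimic a flood.
normal_traffic = [10, 9, 11, 10, 10, 11, 9, 10]
flooded_traffic = [10, 9, 11, 10, 10, 18, 19, 20]

no_alarm = cusum_alarm_index(normal_traffic, target_mean=10, slack=1, threshold=10)
alarm_at = cusum_alarm_index(flooded_traffic, target_mean=10, slack=1, threshold=10)
```

The slack term keeps ordinary fluctuations from accumulating, and the threshold trades detection delay against false alarms; the test needs only a baseline mean rather than a labelled training set.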

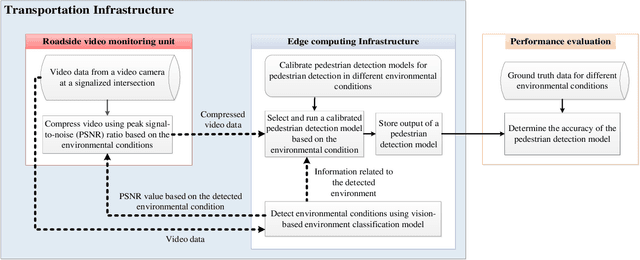

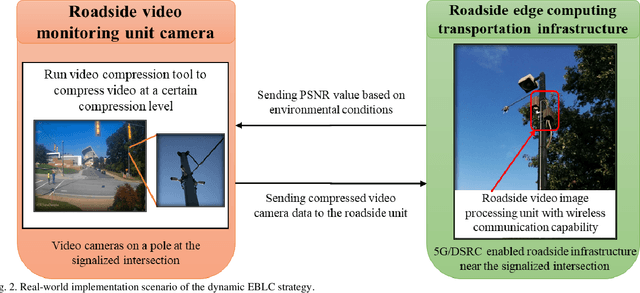

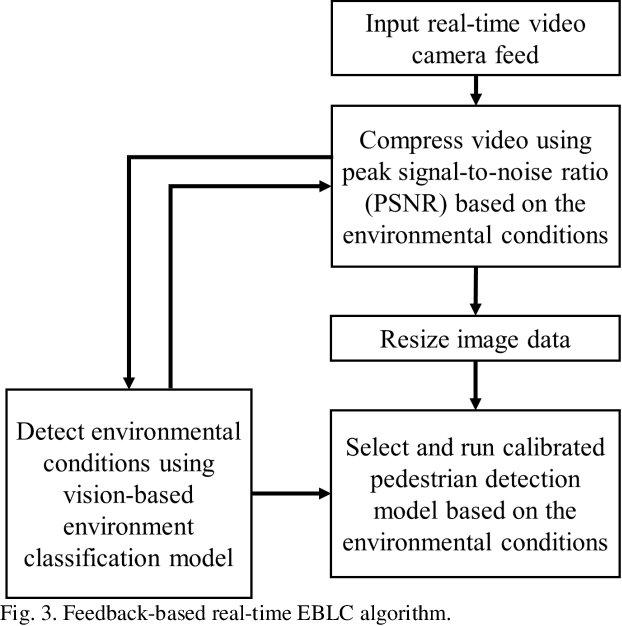

Dynamic Error-bounded Lossy Compression (EBLC) to Reduce the Bandwidth Requirement for Real-time Vision-based Pedestrian Safety Applications

Jan 29, 2020

Abstract: As camera quality improves and camera deployment moves to areas with limited bandwidth, communication bottlenecks can impair the real-time constraints of an ITS application, such as video-based real-time pedestrian detection. Video compression reduces the bandwidth required to transmit the video but degrades the video quality. As the quality level of the video decreases, the accuracy of the vision-based pedestrian detection model decreases correspondingly. Furthermore, environmental conditions (e.g., rain and darkness) alter the compression ratio and can make it more difficult to maintain a high pedestrian detection accuracy. The objective of this study is to develop a real-time error-bounded lossy compression (EBLC) strategy that dynamically changes the video compression level depending on environmental conditions in order to maintain a high pedestrian detection accuracy. We conduct a case study to show the efficacy of our dynamic EBLC strategy for real-time vision-based pedestrian detection under adverse environmental conditions. Our strategy dynamically selects error tolerances for lossy compression that can maintain a high detection accuracy across a representative set of environmental conditions. Analyses reveal that our strategy increases pedestrian detection accuracy by up to 14% and reduces the communication bandwidth by up to 14x for adverse environmental conditions compared to the same conditions without our dynamic EBLC strategy. Our dynamic EBLC strategy is independent of detection models and environmental conditions, allowing other detection models and environmental conditions to be easily incorporated into our strategy.

Vision-based Pedestrian Alert Safety System (PASS) for Signalized Intersections

Jul 02, 2019

Abstract: Although Vehicle-to-Pedestrian (V2P) communication can significantly improve pedestrian safety at a signalized intersection, this safety is hindered because pedestrians often do not carry hand-held devices (e.g., dedicated short-range communication (DSRC) and 5G-enabled cell phones) to communicate with connected vehicles nearby. To overcome this limitation, in this study, traffic cameras at a signalized intersection were used to accurately detect and locate pedestrians via a vision-based deep learning technique to generate safety alerts in real-time about possible conflicts between vehicles and pedestrians. The contribution of this paper lies in the development of a system using a vision-based deep learning model that is able to generate personal safety messages (PSMs) in real-time (every 100 milliseconds). We develop a pedestrian alert safety system (PASS) that uses the generated PSMs to issue a safety alert of an imminent pedestrian-vehicle crash and thereby improve pedestrian safety at a signalized intersection. Our approach estimates the location and velocity of a pedestrian more accurately than existing DSRC-enabled pedestrian hand-held devices. A connected vehicle application, the Pedestrian in Signalized Crosswalk Warning (PSCW), was developed to evaluate the vision-based PASS. Numerical analyses show that our vision-based PASS is able to satisfy the accuracy and latency requirements of pedestrian safety applications in a connected vehicle environment.

In-Vehicle False Information Attack Detection and Mitigation Framework using Machine Learning and Software Defined Networking

Jun 24, 2019

Abstract: A modern vehicle contains many electronic control units (ECUs), which communicate with each other through the Controller Area Network (CAN) bus to ensure vehicle safety and performance. Emerging Connected and Automated Vehicles (CAVs) will have more ECUs and more coupling between them because of the vast array of additional sensors, advanced driving features (such as lane keeping and navigation), and Vehicle-to-Everything (V2X) connectivity. As a result, CAVs will have more vulnerabilities within the in-vehicle network. In this study, we develop a software-defined networking (SDN) based in-vehicle networking framework for security against false information attacks on CAN frames. We then create an attack model and attack datasets for false information attacks on brake-related ECUs in an SDN-based in-vehicle network. We subsequently develop a machine learning-based false information attack/anomaly detection model for the real-time detection of anomalies within the in-vehicle network. Specifically, we use the concept of time-series classification and develop a Long Short-Term Memory (LSTM) based model that detects false information within the CAN data traffic. Additionally, based on our research, we highlight policies for mitigating the effect of cyber-attacks using the SDN framework. The SDN-based attack detection model can detect false information with an accuracy, precision, and recall of 95%, 95%, and 87%, respectively, while satisfying the real-time communication and computational requirements.

Vision-based Navigation of Autonomous Vehicle in Roadway Environments with Unexpected Hazards

Sep 27, 2018

Abstract: Vision-based navigation of modern autonomous vehicles primarily depends on Deep Neural Network (DNN) based systems in which the controller obtains input from sensors/detectors such as cameras and produces an output, such as a steering wheel angle, to navigate the vehicle safely in roadway traffic. Typically, these DNN-based systems are trained through supervised and/or transfer learning; however, recent studies show that these systems can be compromised by perturbations or adversarial input features applied to the trained DNN-based models. Similarly, such perturbations can be introduced into the autonomous vehicle's DNN-based system by roadway hazards such as debris and roadblocks. In this study, we first introduce a hazardous roadway environment (both intentional and unintentional) that can compromise the DNN-based system of an autonomous vehicle, producing an incorrect vehicle navigational output, such as a steering wheel angle, which can cause crashes resulting in fatality and injury. Then, we develop an approach based on object detection and semantic segmentation to mitigate the adverse effect of this hazardous environment, one that helps the autonomous vehicle navigate safely around such hazards. This study finds that the DNN-based model with hazardous object detection and semantic segmentation improves the ability of an autonomous vehicle to avoid potential crashes by 21% compared to the traditional DNN-based autonomous driving system.
