Meihua Zhou

DCL-SE: Dynamic Curriculum Learning for Spatiotemporal Encoding of Brain Imaging

Nov 19, 2025

Abstract: High-dimensional neuroimaging analyses for clinical diagnosis are often constrained by compromises in spatiotemporal fidelity and by the limited adaptability of large-scale, general-purpose models. To address these challenges, we introduce Dynamic Curriculum Learning for Spatiotemporal Encoding (DCL-SE), an end-to-end framework centered on data-driven spatiotemporal encoding (DaSE). We leverage Approximate Rank Pooling (ARP) to efficiently encode three-dimensional volumetric brain data into information-rich, two-dimensional dynamic representations, and then employ a dynamic curriculum learning strategy, guided by a Dynamic Group Mechanism (DGM), to progressively train the decoder, refining feature extraction from global anatomical structures to fine pathological details. Evaluated across six publicly available datasets, including Alzheimer's disease and brain tumor classification, cerebral artery segmentation, and brain age prediction, DCL-SE consistently outperforms existing methods in accuracy, robustness, and interpretability. These findings underscore the critical importance of compact, task-specific architectures in the era of large-scale pretrained networks.
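As a rough illustration of the ARP step mentioned in the abstract, the sketch below collapses a stack of 2D slices into a single 2D map using the closed-form approximate rank pooling weights known from the dynamic-image literature, alpha_t = 2(T - t + 1) - (T + 1)(H_T - H_{t-1}), where H_t is the t-th harmonic number. The abstract does not say which axis is pooled or which ARP variant DCL-SE uses, so the slice ordering, the function names `arp_coefficients` and `arp_encode`, and the plain nested-list data layout are all assumptions made for illustration.

```python
from typing import List

Slice = List[List[float]]  # one 2D slice as rows of pixel values


def arp_coefficients(num_slices: int) -> List[float]:
    """Closed-form approximate rank pooling weights for t = 1..T."""
    # harmonic[t] holds H_t = 1 + 1/2 + ... + 1/t, with H_0 = 0.
    harmonic = [0.0]
    for t in range(1, num_slices + 1):
        harmonic.append(harmonic[-1] + 1.0 / t)
    total = harmonic[num_slices]
    return [
        2 * (num_slices - t + 1) - (num_slices + 1) * (total - harmonic[t - 1])
        for t in range(1, num_slices + 1)
    ]


def arp_encode(volume: List[Slice]) -> Slice:
    """Weighted sum of equally sized slices (assumed ordered along one axis)."""
    weights = arp_coefficients(len(volume))
    rows, cols = len(volume[0]), len(volume[0][0])
    encoded = [[0.0] * cols for _ in range(rows)]
    for weight, current in zip(weights, volume):
        for r in range(rows):
            for c in range(cols):
                encoded[r][c] += weight * current[r][c]
    return encoded


if __name__ == "__main__":
    # Hypothetical two-slice volume with a single pixel per slice.
    print(arp_encode([[[0.0]], [[1.0]]]))
```

Because the weights sum to zero, a volume whose slices are all identical encodes to a zero map; only variation along the pooled axis survives in the 2D representation.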

MIPD: A Multi-sensory Interactive Perception Dataset for Embodied Intelligent Driving

Nov 08, 2024

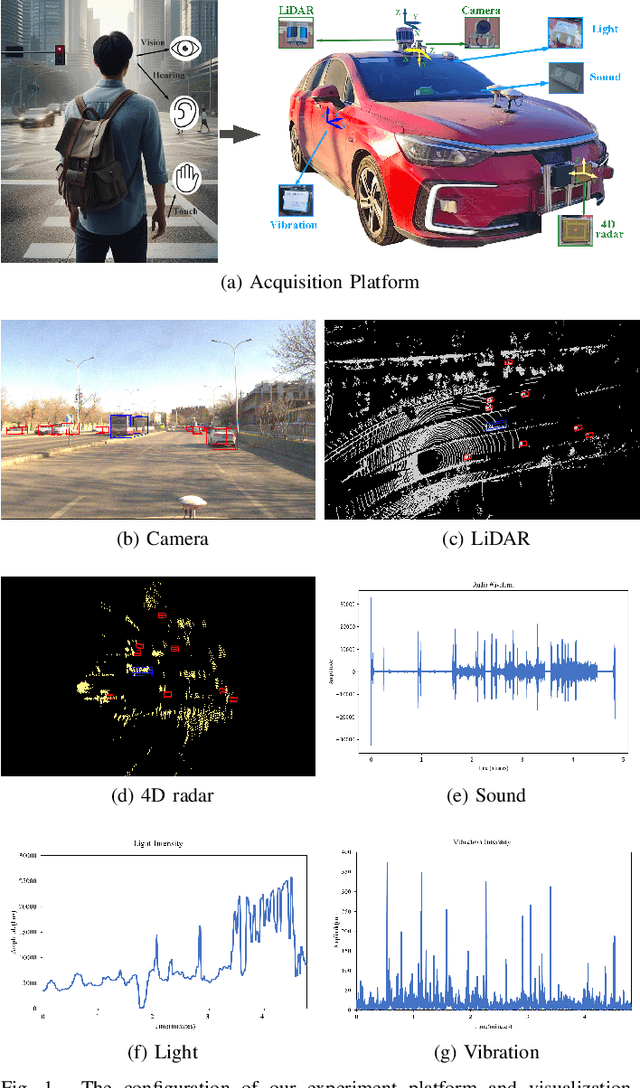

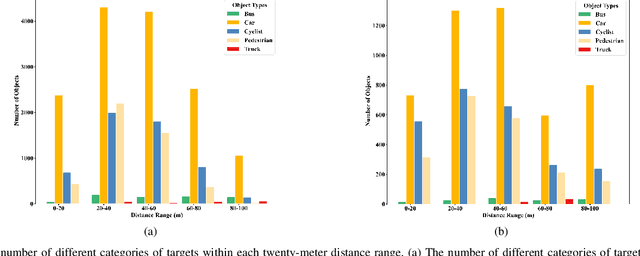

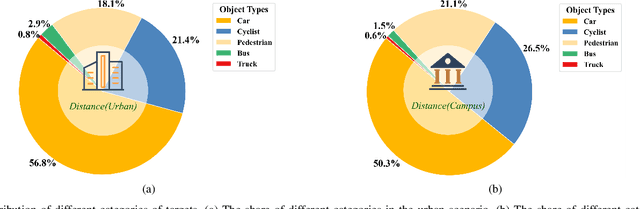

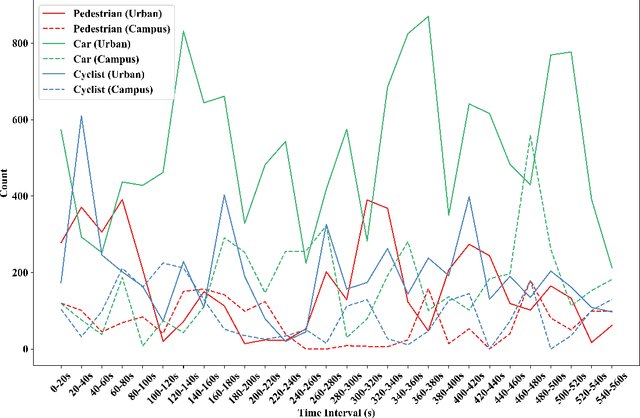

Abstract: While driving, humans usually rely on multiple senses to gather information and make decisions. Analogously, achieving embodied intelligence in autonomous driving requires integrating multidimensional sensory information to facilitate interaction with the environment. However, current multi-modal fusion sensing schemes often neglect these additional sensory inputs, hindering the realization of fully autonomous driving. This paper considers multi-sensory information and proposes a multi-modal interactive perception dataset named MIPD, which enables extending the current autonomous driving algorithm framework and supports research on embodied intelligent driving. In addition to conventional camera, lidar, and 4D radar data, the dataset incorporates further sensor inputs, including sound, light intensity, vibration intensity, and vehicle speed, to make the dataset more comprehensive. Comprising 126 consecutive sequences, many exceeding twenty seconds, MIPD features over 8,500 meticulously synchronized and annotated frames. Moreover, it encompasses many challenging scenarios, covering various road and lighting conditions. The dataset has undergone thorough experimental validation, producing valuable insights for the exploration of next-generation autonomous driving frameworks.

LostNet: A smart way for lost and find

Jan 05, 2023

Abstract: Due to the enormous population growth of cities in recent years, objects are frequently lost and left unclaimed on public transportation, in restaurants, and in other public areas. While services like Find My iPhone can easily locate lost electronic devices, other valuable objects cannot be tracked in an intelligent manner, making it impossible for administrators to return a large number of lost-and-found items in a timely manner. We present a method that significantly reduces the complexity of searching by comparing earlier images of the lost item, provided by the owner, with photos taken when lost-and-found items are received and registered. In this research, we design a photo-matching network by combining fine-tuning of MobileNetV2 with CBAM attention, and use an Internet framework to develop an online lost-and-found image identification system. Our implementation achieves a testing accuracy of 96.8% using only 665.12M GLFOPs and 3.5M training parameters. It can recognize practice images and can be run on a regular laptop.
