Mayank Goswami

Psychological stress during Examination and its estimation by handwriting in answer script

Nov 08, 2025

Abstract: This research explores the fusion of graphology and artificial intelligence to quantify psychological stress levels in students by analyzing their handwritten examination scripts. By leveraging Optical Character Recognition (OCR) and transformer-based sentiment analysis models, we present a data-driven approach that transcends traditional grading systems, offering deeper insights into cognitive and emotional states during examinations. The system integrates high-resolution image processing, TrOCR, and sentiment entropy fusion using RoBERTa-based models to generate a numerical Stress Index. Our method achieves robustness through a five-model voting mechanism and unsupervised anomaly detection, making it an innovative framework in academic forensics.
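The abstract does not give the formula behind the Stress Index, so the following is only a minimal sketch of the two ingredients it names: an entropy computed over sentiment probabilities and a vote across five models. The function names, the three sentiment classes, the sample probabilities, and the fusion rule (a plain mean of normalized entropies) are all assumptions made for illustration, not the authors' method.

```python
import math
from collections import Counter

def normalized_entropy(probs):
    """Shannon entropy of a probability vector, scaled to the range [0, 1]."""
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))

def majority_vote(labels):
    """Label predicted by the most models (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]

def fused_entropy(model_probs):
    """HYPOTHETICAL fusion rule: mean normalized entropy across all models."""
    return sum(normalized_entropy(p) for p in model_probs) / len(model_probs)

# Hypothetical outputs of five sentiment models for one transcribed answer,
# each as (negative, neutral, positive) probabilities.
CLASSES = ("negative", "neutral", "positive")
model_probs = [
    (0.70, 0.20, 0.10),
    (0.60, 0.30, 0.10),
    (0.20, 0.50, 0.30),
    (0.80, 0.10, 0.10),
    (0.55, 0.25, 0.20),
]

# Each model votes for its most probable class.
votes = [CLASSES[max(range(len(p)), key=p.__getitem__)] for p in model_probs]
consensus = majority_vote(votes)
score = fused_entropy(model_probs)
print(consensus, round(score, 3))
```

A score near 0 means the models are confident about the sentiment, while a score near 1 means their predictions are close to uniform; how such a quantity maps to stress is left to the paper itself.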

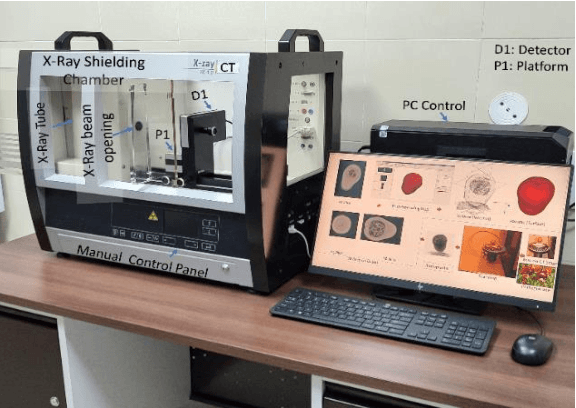

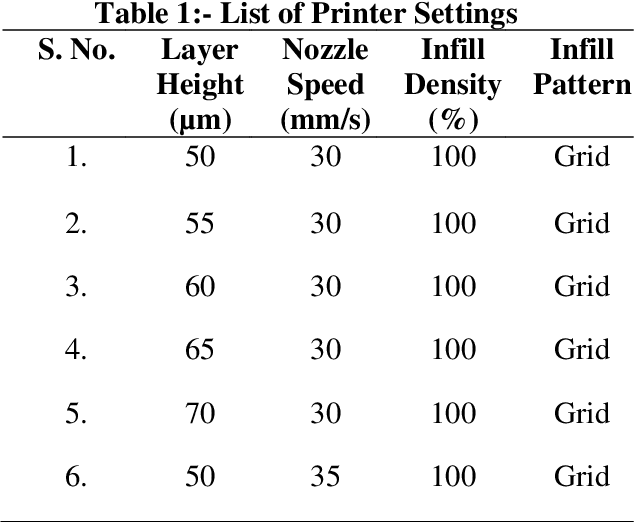

Additive Manufacturing Processes Protocol Prediction by Artificial Intelligence using X-ray Computed Tomography data

Jan 24, 2025

Abstract: The quality of a part fabricated by an Additive Manufacturing (AM) process depends upon the process parameters used, and therefore optimization is required for apt quality. A methodology is proposed to set these parameters non-iteratively without human intervention. It utilizes Artificial Intelligence (AI) to fully automate the process, with the capability to self-train any apt AI model by further assimilating the training data. This study includes three commercially available 3D printers for soft material printing based on the Material Extrusion (MEX) AM process. The samples are 3D printed for six different AM process parameters obtained by varying layer height and nozzle speed. The novelty of the methodology lies in incorporating an AI-based image segmentation step in the decision-making stage that uses quality-inspected training data from the Non-Destructive Testing (NDT) method. The performance of the trained AI model is compared with two software tools based on the classical thresholding method. The AI-based Artificial Neural Network (ANN) model is trained on NDT-assessed and AI-segmented data to automate the selection of optimized process parameters. The AI-based model is 99.3% accurate, while the best available commercial classical image method is 83.44% accurate. The best value of overall R for training the ANN is 0.82. The MEX process gives a 22.06% porosity error relative to the design. Two NDT-data-trained AI models, integrated into a series pipeline for selecting optimal process parameters, are proposed and verified by classical optimization and mechanical testing methods.
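As a rough illustration of what a porosity error relative to the design can mean, the sketch below computes porosity from a segmented binary volume and compares it with a design value. The abstract does not state the exact error formula, so the relative-error definition, the function names, and the tiny sample volume are assumptions.

```python
def porosity(volume):
    """Fraction of void voxels in a binary volume (1 = material, 0 = void).

    `volume` is a nested list indexed as volume[slice][row][column].
    """
    voxels = [v for plane in volume for row in plane for v in row]
    return voxels.count(0) / len(voxels)

def relative_error_percent(measured, design):
    """ASSUMED definition: |measured - design| / design, in percent."""
    return abs(measured - design) / design * 100.0

# Hypothetical 2 x 2 x 2 segmented volume with two void voxels.
segmented = [
    [[1, 0], [1, 1]],
    [[1, 1], [0, 1]],
]

measured = porosity(segmented)   # 2 void voxels out of 8
design = 0.20                    # hypothetical design porosity
print(measured, relative_error_percent(measured, design))
```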

Prescanning Assembly Optimization Criteria for Computed Tomography

Sep 29, 2023

Abstract: Computerized Tomography (CT) assembly and system configuration are optimized for enhanced invertibility in sparse data reconstruction. The assembly generating the maximum principal components/condition number of the weight matrix is designated as the best configuration. The gamma CT system is used for testing. Unoptimized sample location placement with 7.7% variation results in up to 50% root mean square error, a 16.5% loss of similarity index, and 40% scattering noise in the reconstructed image, relative to the optimized sample location obtained with the proposed criteria. The method can help to automate the CT assembly, resulting in relatively artifact-free recovery and reducing the iterations needed to find the best scanning configuration for a given sample size, thus saving time, dosage, and operational cost.
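The two quantities named in the criterion can be read off the singular values of a weight matrix. The sketch below, assuming NumPy is available, only reports them for a given matrix; how the paper combines or ranks them across candidate assemblies is not specified in the abstract, so no selection rule is implemented. The function name, the tolerance, and the toy matrices are hypothetical.

```python
import numpy as np

def weight_matrix_diagnostics(weights, rel_tol=1e-3):
    """Return (number of significant singular values, 2-norm condition number).

    A singular value counts as significant when it exceeds rel_tol times the
    largest one; rel_tol is an ASSUMED threshold, not taken from the paper.
    """
    s = np.linalg.svd(np.asarray(weights, dtype=float), compute_uv=False)
    significant = int(np.sum(s > rel_tol * s[0]))
    condition = float(s[0] / s[-1]) if s[-1] > 0 else float("inf")
    return significant, condition

# Two toy weight matrices standing in for two candidate assemblies.
well_posed = np.eye(3)
nearly_singular = np.array([[1.0, 1.0], [1.0, 1.0001]])

print(weight_matrix_diagnostics(well_posed))
print(weight_matrix_diagnostics(nearly_singular))
```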

Learning to Segment from Noisy Annotations: A Spatial Correction Approach

Jul 21, 2023

Abstract: Noisy labels can significantly affect the performance of deep neural networks (DNNs). In medical image segmentation tasks, annotations are error-prone due to the high demand in annotation time and in the annotators' expertise. Existing methods mostly assume that noisy labels in different pixels are i.i.d. However, segmentation label noise usually has strong spatial correlation and a prominent bias in its distribution. In this paper, we propose a novel Markov model for noisy segmentation annotations that encodes both spatial correlation and bias. Further, to mitigate such label noise, we propose a label correction method to recover the true labels progressively. We provide theoretical guarantees of the correctness of the proposed method. Experiments show that our approach outperforms current state-of-the-art methods on both synthetic and real-world noisy annotations.

AI pipeline for accurate retinal layer segmentation using OCT 3D images

Feb 15, 2023

Abstract: An image data set from a multi-spectral animal imaging system is used to address two issues: (a) registering the oscillation in optical coherence tomography (OCT) images due to mouse eye movement and (b) suppressing the shadow region under thick vessels/structures. Several classical and AI-based algorithms are tested in combination for each task to assess their compatibility with data from the combined animal imaging system. Hybridization of AI with optical flow, followed by a homography transformation, is shown to work for registration (correlation value > 0.7). A ResNet50 backbone is shown to work better than the well-known U-Net model for shadow region detection, with a loss value of 0.9. A simple-to-implement analytical equation is shown to work for brightness manipulation, with a 1% increment in mean pixel values and a 77% decrease in the number of zeros. The proposed equation allows formulating a constrained optimization problem using a controlling factor α for minimizing the number of zeros and the standard deviation of the pixel values while maximizing the mean pixel value. For layer segmentation, the standard U-Net model is used. The AI pipeline consists of CNN, optical flow, RCNN, pixel manipulation, and U-Net models in sequence. The thickness estimation process has a 6% error compared to manually annotated standard data.

Characterization of 3D Printers and X-Ray Computerized Tomography

May 27, 2022

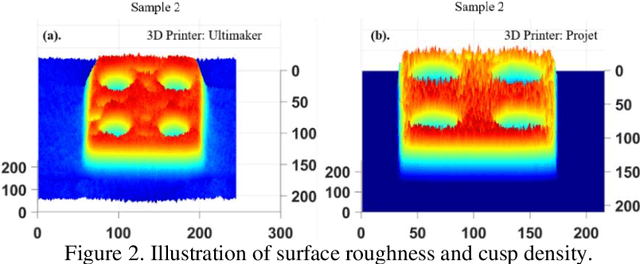

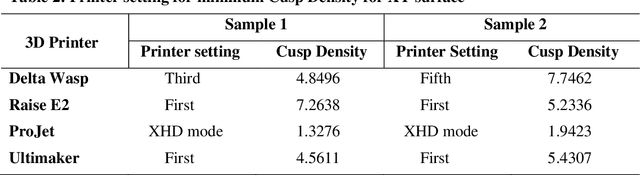

Abstract: The 3D printing process flow requires several inputs for the best printing quality. These settings may vary from sample to sample and printer to printer, and depend upon users' previous experience. The operational parameters involved in 3D printing are varied to test for optimality. Thirty-eight samples are printed using four commercially available 3D printers, namely: (a) Ultimaker 2 Extended+, (b) Delta Wasp, (c) Raise E2, and (d) ProJet MJP. The sample profiles contain uniform and non-uniform distributions of cubes and spheres of assorted sizes with a known amount of porosity. These samples are scanned using an X-Ray Computed Tomography system. Functional imaging analysis is performed using AI-based segmentation codes to (a) characterize these 3D printers and (b) find the three-dimensional surface roughness of three teeth and one sandstone pebble (from a riverbed) with naturally deposited layers, which is also compared with the printed sample values. The teeth have the best quality. It is found that ProJet MJP gives the best quality of printed samples, with the least amount of surface roughness and a porosity close to the actual value. As expected, a 100% infill density, the best spatial resolution for printing (layer height), and the minimum nozzle speed give the best quality of 3D printing.

LabVIEW is faster and C is economical interfacing tool for UCT automation

May 17, 2022

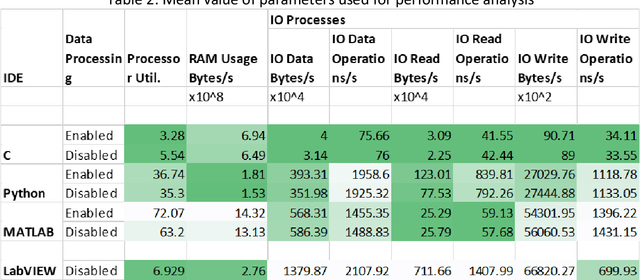

Abstract: An in-house developed 2D ultrasound computerized tomography system is fully automated. A performance analysis of instrument and software interfacing soft tools, namely LabVIEW, MATLAB, C, and Python, is presented. The instrument interfacing algorithms, hardware control algorithms, signal processing, and analysis codes are written using the above-mentioned soft tool platforms. A total of eight performance indices are used to compare the ease of (a) real-time control of the electromechanical assembly, (b) sensor and instrument integration, (c) synchronized data acquisition, and (d) simultaneous raw data processing. It is found that C utilizes the least processing power and runs fewer processes to perform the same task. In the runtime analysis (data acquisition and real-time control), LabVIEW performs best, taking 365.69 s to complete the experiment, in comparison to MATLAB (623.83 s), Python (1505.54 s), and C (1252.03 s). Python performs better in establishing faster interfacing and minimum RAM usage. LabVIEW is recommended for its fast process execution. C is recommended for the most economical implementation. Python is recommended for complex system automation involving a very large number of components. This article provides a methodology to select optimal soft tools for instrument automation-related aspects.

A Manifold View of Adversarial Risk

Apr 08, 2022

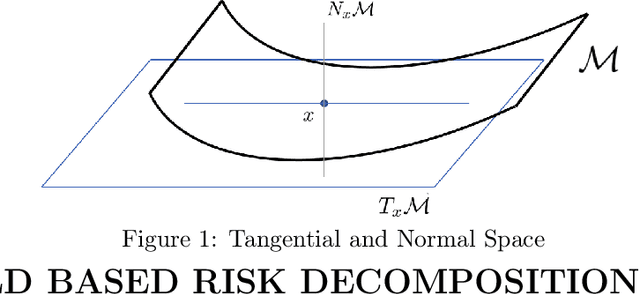

Abstract: The adversarial risk of a machine learning model has been widely studied. Most previous works assume that the data lies in the whole ambient space. We take a new angle by taking the manifold assumption into consideration. Assuming the data lies on a manifold, we investigate two new types of adversarial risk: the normal adversarial risk, due to perturbation along the normal direction, and the in-manifold adversarial risk, due to perturbation within the manifold. We prove that the classic adversarial risk can be bounded from both sides using the normal and in-manifold adversarial risks. We also show with a surprisingly pessimistic case that the standard adversarial risk can be nonzero even when both the normal and in-manifold risks are zero. We conclude the paper with empirical studies supporting our theoretical results. Our results suggest the possibility of improving the robustness of a classifier by focusing only on the normal adversarial risk.

AI and conventional methods for UCT projection data estimation

Aug 17, 2021

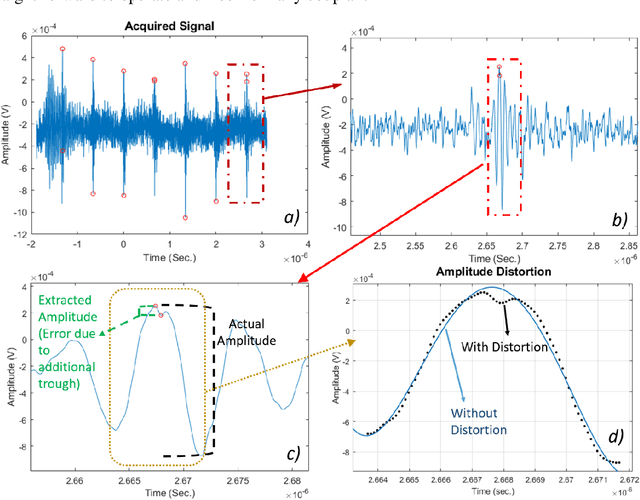

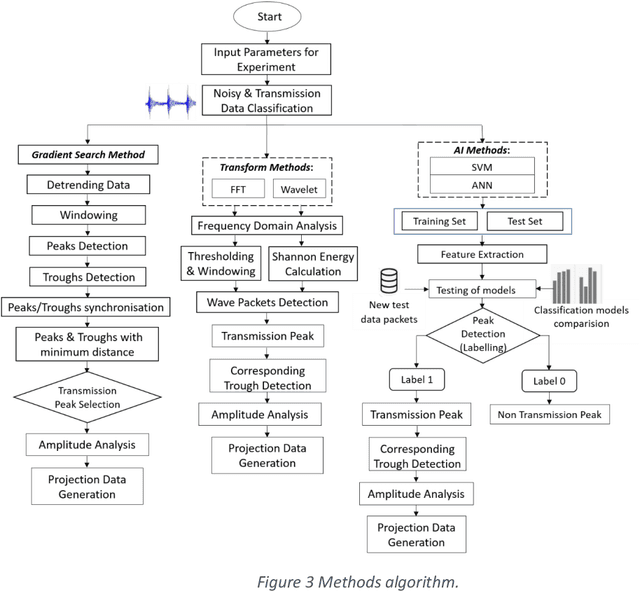

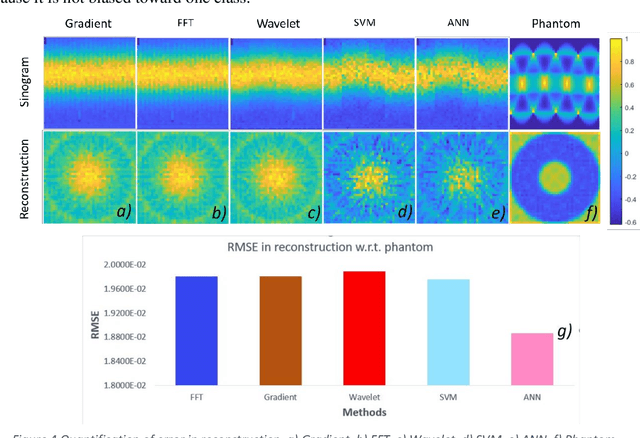

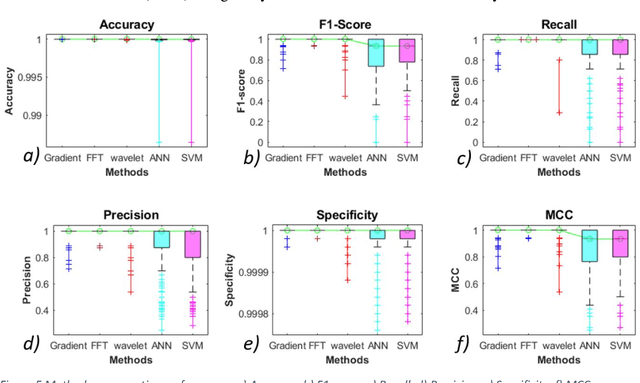

Abstract: A compact 2D ultrasound computerized tomography (UCT) system is developed. Fully automatic post-processing tools involving signal and image processing are developed as well. The squares of the amplitude values are used in transmission mode, with a natural frequency of 1.5 MHz, a rise time of 10.4 ns, a fall time of 8.4 ns, and a duty cycle of 4.32%. The highest peak to corresponding trough values are taken as the transmitted wave between transducers in direct line talk. A sensitivity analysis of the methods used to extract the peak to corresponding trough per transducer is discussed in this paper. A total of five methods are tested. These methods are taken from two broad categories: (a) conventional and (b) Artificial Intelligence (AI) based methods. The conventional methods, namely (a) simple gradient-based peak detection, (b) Fourier-based, and (c) wavelet transform, are compared with the AI-based methods: (a) support vector machine (SVM) and (b) artificial neural network (ANN). A classification step is performed as well to discard signals that do not contain a contribution from the transmission wave. It is found that the conventional methods have better performance. Reconstruction error, accuracy, F-Score, recall, precision, specificity, and MCC are measured for the 40 x 40 data (1600 data files). Each data file contains 50,002 data points. Ten such data files are used for training the neural network. Each data file has 7/8 wave packets, and each packet corresponds to one transmission amplitude value. The reconstruction error is found to be minimum for the ANN method. The other performance indices show that the FFT method processes the UCT signal with the best recovery.
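To make the simplest of the conventional methods concrete, here is a minimal sketch of gradient-based peak detection followed by a peak-to-trough measurement. It assumes that the "corresponding trough" is the first local minimum after the highest peak, which the abstract does not state; the function names and the toy signal are hypothetical, and the squaring of amplitudes mentioned in the abstract is omitted.

```python
def local_extrema(signal):
    """Find local peaks and troughs from sign changes of the first difference."""
    peaks, troughs = [], []
    for i in range(1, len(signal) - 1):
        left = signal[i] - signal[i - 1]     # slope entering sample i
        right = signal[i + 1] - signal[i]    # slope leaving sample i
        if left > 0 and right <= 0:
            peaks.append(i)
        elif left < 0 and right >= 0:
            troughs.append(i)
    return peaks, troughs

def peak_to_trough(signal):
    """Highest peak minus the next trough (ASSUMED pairing), or None."""
    peaks, troughs = local_extrema(signal)
    if not peaks:
        return None
    top = max(peaks, key=lambda i: signal[i])
    following = [t for t in troughs if t > top]
    if not following:
        return None
    return signal[top] - signal[following[0]]

# Toy wave packet: the highest peak (3) is followed by a trough at -2.
packet = [0, 1, 0, -1, 0, 3, 1, -2, 0, 1, 0]
print(peak_to_trough(packet))
```

On real transducer data a noise threshold or smoothing step would normally precede the extrema search, since a pure sign-change test reacts to every small ripple.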

AI Algorithm for Mode Classification of PCF SPR Sensor Design

Jul 19, 2021

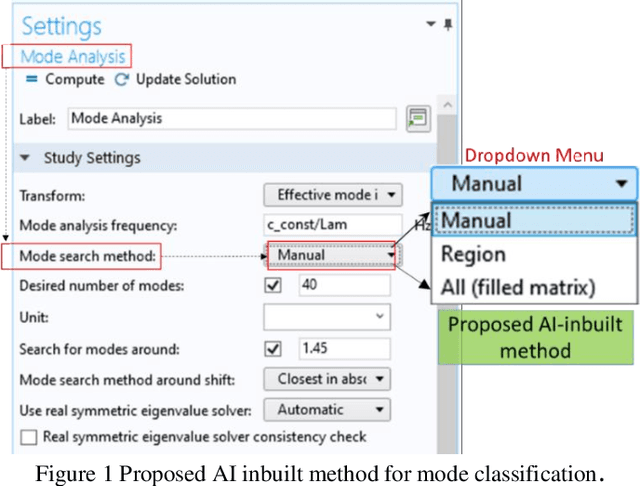

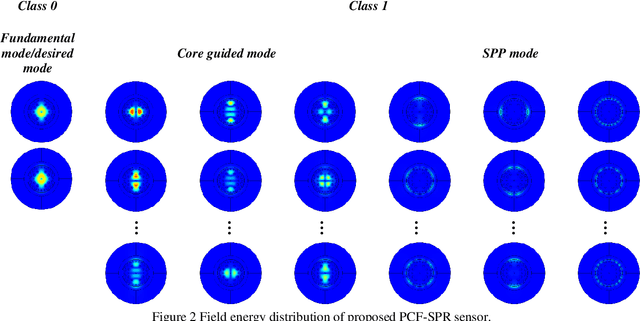

Abstract: A Photonic Crystal Fiber design based on the surface plasmon resonance phenomenon (PCF SPR) is optimized before it is fabricated for a particular application. An artificial intelligence algorithm is evaluated here to ease the simulation process for common users. COMSOL Multiphysics is used. The algorithm suggests the best among eight standard machine learning models and one deep learning model to automatically select the desired mode, which is otherwise chosen visually by experts. A total of seven performance indices, namely Precision, Recall, Accuracy, F1-Score, Specificity, and Matthews correlation coefficient (MCC), are utilized to make the optimal decision. Robustness towards variations in sensor geometry design is also considered as an optimal parameter. Several PCF-SPR-based photonic sensor designs are tested, and a large-range optimal (based on phase matching) design is proposed. For this design, the algorithm selected the Support Vector Machine (SVM) as the best option, with an accuracy of 96%, an F1-Score of 95.83%, and an MCC of 92.30%. The average sensitivity of the proposed sensor design with respect to a change in refractive index (1.37-1.41) is 5500 nm/RIU. The resolution is 2.0498x10^(-5) RIU^(-1). The algorithm can be integrated into commercial software as an add-on or as a module in academic codes. The proposed novel step saves approximately 75 minutes in the overall design process. The present work is equally applicable to mode selection for sensors other than PCF-SPR sensing geometries.
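The named performance indices all follow from the four counts of a binary confusion matrix. The sketch below uses the standard textbook definitions; the function name and the example counts are hypothetical and are not taken from the paper's data.

```python
import math

def classification_indices(tp, fp, tn, fn):
    """Standard binary-classification indices from confusion-matrix counts.

    Assumes every denominator is nonzero (both classes occur and both are
    predicted at least once); no zero-division handling is included.
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * recall / (precision + recall)
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "accuracy": accuracy,
        "f1": f1,
        "mcc": mcc,
    }

# Hypothetical counts for a "desired mode" vs "other mode" classifier.
indices = classification_indices(tp=45, fp=5, tn=40, fn=10)
for name, value in indices.items():
    print(f"{name}: {value:.4f}")
```

MCC is the most conservative of these indices on imbalanced data, because it is high only when the classifier does well on both the desired and the rejected modes.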
