Matthias König

Automated Design of Linear Bounding Functions for Sigmoidal Nonlinearities in Neural Networks

Jun 14, 2024

Abstract:The ubiquity of deep learning algorithms in various applications has amplified the need for assuring their robustness against small input perturbations such as those occurring in adversarial attacks. Existing complete verification techniques offer provable guarantees for all robustness queries but struggle to scale beyond small neural networks. To overcome this computational intractability, incomplete verification methods often rely on convex relaxation to over-approximate the nonlinearities in neural networks. Progress in tighter approximations has been achieved for piecewise linear functions. However, robustness verification of neural networks for general activation functions (e.g., Sigmoid, Tanh) remains under-explored and poses new challenges. Typically, these networks are verified using convex relaxation techniques, which involve computing linear upper and lower bounds of the nonlinear activation functions. In this work, we propose a novel parameter search method to improve the quality of these linear approximations. Specifically, we show that using a simple search method, carefully adapted to the given verification problem through state-of-the-art algorithm configuration techniques, improves the global lower bound by 25% on average over the current state of the art on several commonly used local robustness verification benchmarks.

Rediscovering Argumentation Principles Utilizing Collective Attacks

May 06, 2022

Abstract:Argumentation Frameworks (AFs) are a key formalism in AI research. Their semantics have been investigated in terms of principles, which define characteristic properties in order to deliver guidance for analysing established and developing new semantics. Because of the simple structure of AFs, many desired properties hold almost trivially, at the same time hiding interesting concepts behind syntactic notions. We extend the principle-based approach to Argumentation Frameworks with Collective Attacks (SETAFs) and provide a comprehensive overview of common principles for their semantics. Our analysis shows that investigating principles based on decomposing the given SETAF (e.g. directionality or SCC-recursiveness) poses additional challenges in comparison to usual AFs. We introduce the notion of the reduct as well as the modularization principle for SETAFs which will prove beneficial for this kind of investigation. We then demonstrate how our findings can be utilized for incremental computation of extensions and give a novel parameterized tractability result for verifying preferred extensions.

Aspartix-V21

Sep 07, 2021

Abstract:In this solver description we present ASPARTIX-V, in its 2021 edition, which participates in the International Competition on Computational Models of Argumentation (ICCMA) 2021. ASPARTIX-V is capable of solving all classical (static) reasoning tasks that are part of ICCMA'21 and extends the ASPARTIX system suite by incorporating recent ASP language constructs (e.g. conditional literals), domain heuristics within ASP, and multi-shot methods. In this light, ASPARTIX-V deviates from the traditional focus of ASPARTIX on monolithic approaches (i.e., one-shot solving via a single ASP encoding) to further enhance performance.

This Far, No Further: Introducing Virtual Borders to Mobile Robots Using a Laser Pointer

Mar 04, 2019

Abstract:We address the problem of controlling the workspace of a 3-DoF mobile robot. In a human-robot shared space, robots should navigate in a human-acceptable way according to the users' demands. For this purpose, we employ virtual borders, i.e. non-physical borders, that allow a user to restrict the robot's workspace. To this end, we propose an interaction method based on a laser pointer to intuitively define virtual borders. This interaction method uses a previously developed framework based on robot guidance to change the robot's navigational behavior. Furthermore, we extend this framework to increase its flexibility by considering different types of virtual borders, i.e. polygons and curves separating an area. We evaluated our method with 15 non-expert users concerning correctness, accuracy and teaching time. The experimental results revealed high accuracy and a teaching time that is linear with respect to the border length, while the borders were correctly incorporated into the robot's navigational map. Finally, our user study showed that non-expert users can employ our interaction method.

Virtual Border Teaching Using a Network Robot System

Feb 19, 2019

Abstract:Virtual borders are employed to allow users the flexible and interactive definition of their mobile robots' workspaces and to ensure socially aware navigation in human-centered environments. They have been successfully defined using methods from human-robot interaction where a user directly interacts with the robot. However, since we have recently witnessed the emergence of network robot systems (NRS) that enhance the perceptual and interaction abilities of a robot, we investigate the effect of such an NRS on the teaching of virtual borders and answer the question of whether an intelligent environment can improve the teaching process of virtual borders. For this purpose, we propose an interaction method based on an NRS and a laser pointer as the interaction device. This interaction method comprises an architecture that integrates robots into intelligent environments with the purpose of supporting the teaching process in terms of interaction and feedback, the cooperation between stationary and mobile cameras to perceive laser spots, and an algorithm allowing the extraction of virtual borders from multiple camera observations. Our experimental results, acquired from the performances of 15 participants, show that our system is equally successful and accurate while featuring a significantly lower teaching time and a higher user experience compared to an approach without the support of an NRS.

Virtual Borders: Accurate Definition of a Mobile Robot's Workspace Using Augmented Reality

Oct 08, 2018

Abstract:We address the problem of interactively controlling the workspace of a mobile robot to ensure human-aware navigation. This is especially relevant for non-expert users living in human-robot shared spaces, e.g. home environments, since they want to keep control of their mobile robots, such as vacuum cleaning or companion robots. Therefore, we introduce virtual borders that are respected by a robot while performing its tasks. For this purpose, we employ an RGB-D Google Tango tablet as the human-robot interface in combination with an augmented reality application to flexibly define virtual borders. We evaluated our system with 15 non-expert users concerning accuracy, teaching time and correctness and compared the results with other baseline methods based on visual markers and a laser pointer. The experimental results show that our method features an equally high accuracy while reducing the teaching time significantly compared to the baseline methods. This holds for different border lengths, shapes and variations in the teaching process. Finally, we demonstrated the correctness of the approach, i.e. the mobile robot changes its navigational behavior according to the user-defined virtual borders.

A Joint Motion Model for Human-Like Robot-Human Handover

Aug 28, 2018

Abstract:In the future, robots will be present in everyday life. The development of these supporting robots is a challenge. A fundamental task for assistance robots is to pick up and hand over objects to humans. When robots interact with users, soft factors such as predictability, safety and reliability become important for development. Previous works show that collaboration with robots is more acceptable when robots behave and move in a human-like way. In this paper, we present a motion model based on the motion profiles of individual joints. These motion profiles are based on observations and measurements of joint movements in human-human handover. We implemented this joint motion model (JMM) on a humanoid and a non-humanoid industrial robot to show the movements to subjects. Particular attention was paid to the recognizability and human similarity of the movements. The results show that people are able to recognize human-like movements and perceive the movements of the JMM as more human-like compared to a traditional model. Furthermore, it turns out that the differences between a linear joint space trajectory and the JMM are more noticeable in an industrial robot than in a humanoid robot.

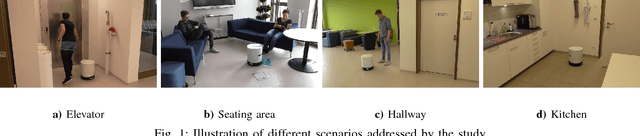

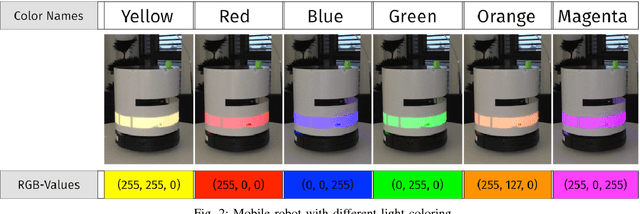

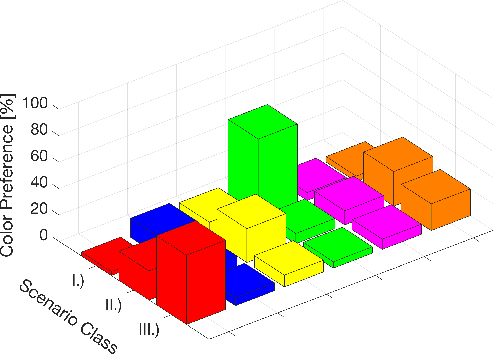

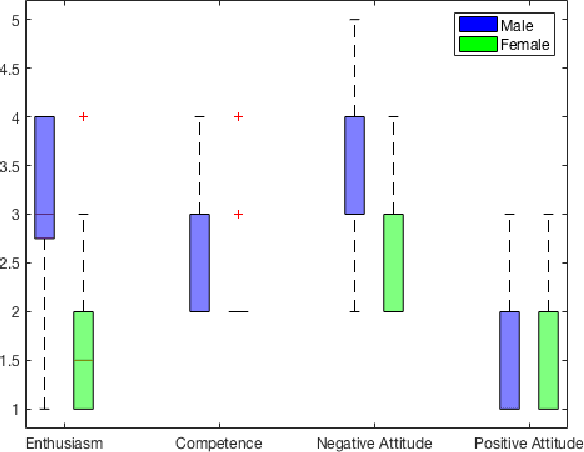

The Power of Color: A Study on the Effective Use of Colored Light in Human-Robot Interaction

Feb 21, 2018

Abstract:In times of increasingly complex interaction techniques, we point out the power of colored light as a simple and cheap feedback mechanism. Since it is visible over a distance and does not interfere with other modalities, it is especially interesting for mobile robots. In an online survey, we asked 56 participants to choose the most appropriate colors for scenarios that were presented in the form of videos. In these scenarios, a mobile robot attempted tasks: in some it succeeded, in others it failed because the task was not feasible, and in others it stopped to wait for help. We analyze in what way the color preferences differ between these three categories. The results show a connection between colors and meanings, and that the clarity of the color preference for a certain category depends on the participants' technical affinity, experience with robots and gender. Finally, we found that the participants' favorite color is not related to their color preferences.

SwarmRob: A Toolkit for Reproducibility and Sharing of Experimental Artifacts in Robotics Research

Jan 25, 2018

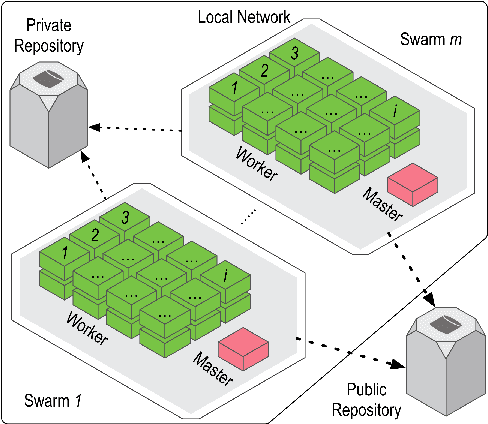

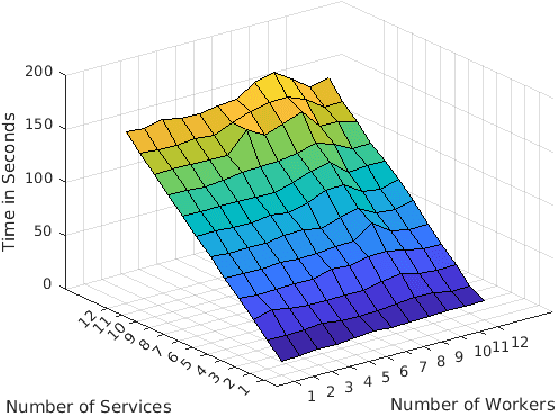

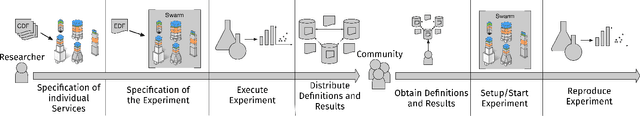

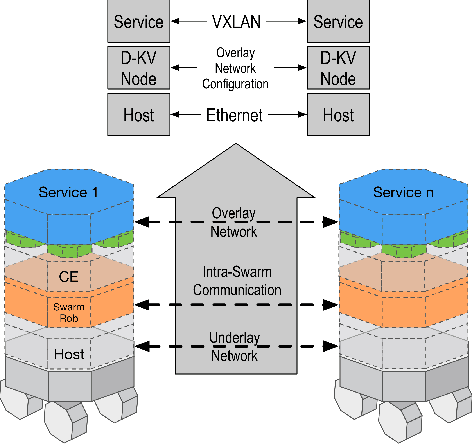

Abstract:Due to the complexity of robotics, the reproducibility of results and experiments is one of the fundamental problems in robotics research. While the problem has been identified by the community, the approaches that address the problem appropriately are limited. The toolkit proposed in this paper tries to deal with the problem of reproducibility and sharing of experimental artifacts in robotics research by a holistic approach based on operating-system-level virtualization. The experimental artifacts of an experiment are isolated in "containers" that can be distributed to other researchers. Based on this, this paper presents a novel experimental workflow to describe, execute and distribute experimental software-artifacts to heterogeneous robots dynamically. As a result, the proposed solution supports researchers in executing and reproducing experimental evaluations.

A Framework for Interactive Teaching of Virtual Borders to Mobile Robots

Feb 16, 2017

Abstract:The increasing number of robots in home environments leads to an emerging coexistence between humans and robots. Robots undertake common tasks and support the residents in their everyday life. People appreciate the presence of robots in their environment as long as they keep control over them. One important aspect is the control of a robot's workspace. Therefore, we introduce virtual borders to precisely and flexibly define the workspace of mobile robots. First, we propose a novel framework that allows a person to interactively restrict a mobile robot's workspace. To show the validity of this framework, we provide a concrete implementation based on visual markers. Afterwards, the mobile robot is capable of performing its tasks while respecting the new virtual borders. The approach is accurate, flexible and less time-consuming than explicit robot programming. Hence, even non-experts are able to teach virtual borders to their robots, which is especially interesting in domains like vacuuming or service robots in home environments.
