Klaus Tönnies

Learning Multi-Modal Volumetric Prostate Registration with Weak Inter-Subject Spatial Correspondence

Feb 09, 2021

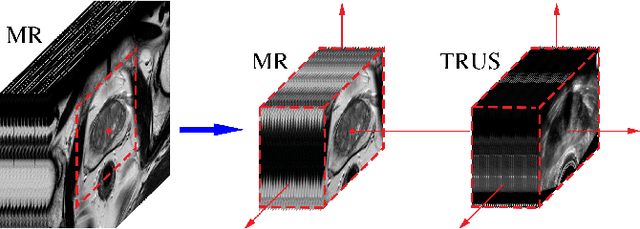

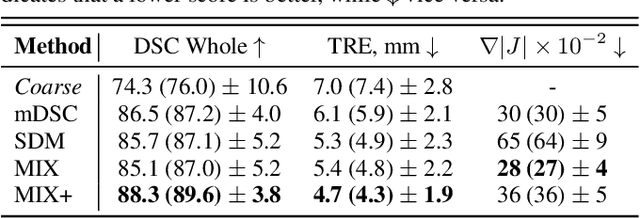

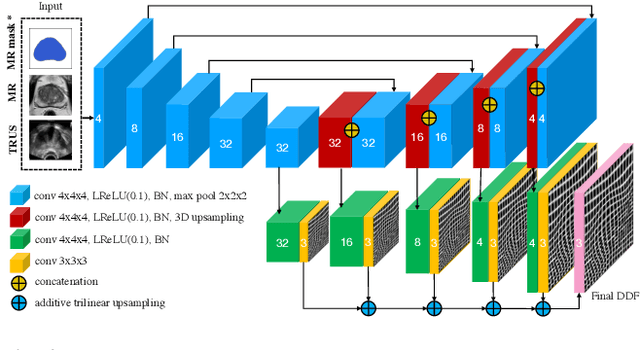

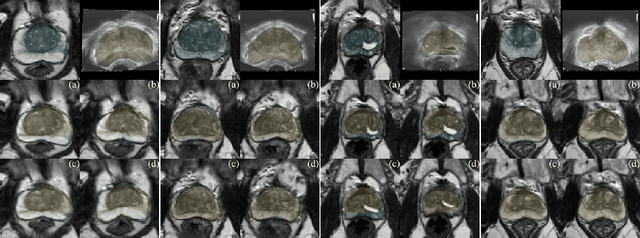

Abstract: Recent studies have demonstrated the suitability of convolutional neural networks (CNNs) for solving the image registration problem. CNNs enable faster transformation estimation and greater generalization capability, both of which are needed for better support during medical interventions. Conventional fully-supervised training requires a large amount of high-quality ground truth data, such as voxel-to-voxel transformations, which are typically tedious and error-prone to obtain. In our work, we use weakly-supervised learning, which optimizes the model only indirectly via segmentation masks, a ground truth that is more accessible than deformation fields. For the weak supervision, we investigate two segmentation similarity measures: the multiscale Dice similarity coefficient (mDSC) and the similarity between segmentation-derived signed distance maps (SDMs). We show that combining the mDSC and SDM similarity measures results in a more accurate and natural transformation pattern together with stronger gradient coverage. Furthermore, we introduce an auxiliary network input that carries prior information about the prostate location in the MR sequence, which is usually available preoperatively. This approach significantly outperforms the standard two-input models. On weakly labelled MR-TRUS prostate data, we show registration quality comparable to the state-of-the-art deep learning-based method.
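The two weak-supervision measures named above can be illustrated with a small NumPy sketch on binary 3-D masks. Everything here is an assumption for illustration, not the paper's implementation: the scales are produced by average pooling, the soft Dice uses a squared-sum denominator, the SDM is computed by brute force, SDM dissimilarity is taken as a mean squared difference, and `weak_supervision_loss` with its weight `lam` is a hypothetical combination. In an actual training pipeline these terms would be written with a deep learning framework's differentiable operations.

```python
import numpy as np


def soft_dice(a: np.ndarray, b: np.ndarray, eps: float = 1e-6) -> float:
    """Soft Dice with squared-sum denominator, so identical soft masks score 1."""
    inter = np.sum(a * b)
    denom = np.sum(a * a) + np.sum(b * b)
    return float((2.0 * inter + eps) / (denom + eps))


def avg_pool3d(x: np.ndarray, f: int) -> np.ndarray:
    """Average-pool a 3-D array by integer factor f (each dimension divisible by f)."""
    d, h, w = x.shape
    return x.reshape(d // f, f, h // f, f, w // f, f).mean(axis=(1, 3, 5))


def multiscale_dice(a: np.ndarray, b: np.ndarray, factors=(1, 2, 4)) -> float:
    """mDSC sketch: mean soft Dice over several pooled resolutions (assumed scales)."""
    return float(np.mean([soft_dice(avg_pool3d(a, f), avg_pool3d(b, f)) for f in factors]))


def signed_distance_map(mask: np.ndarray) -> np.ndarray:
    """Brute-force Euclidean SDM: negative inside, positive outside.

    Distances are measured between voxel centres, so voxels next to the
    boundary get +/-1 rather than 0. O(N^2) memory: small volumes only.
    """
    inside = mask.astype(bool).reshape(-1)
    if inside.all() or not inside.any():
        raise ValueError("mask must contain both foreground and background")
    coords = np.indices(mask.shape).reshape(mask.ndim, -1).T.astype(float)
    # Pairwise distances from every voxel to every foreground / background voxel.
    d_fg = np.linalg.norm(coords[:, None, :] - coords[inside][None, :, :], axis=-1).min(axis=1)
    d_bg = np.linalg.norm(coords[:, None, :] - coords[~inside][None, :, :], axis=-1).min(axis=1)
    return np.where(inside, -d_bg, d_fg).reshape(mask.shape)


def sdm_loss(a: np.ndarray, b: np.ndarray) -> float:
    """Assumed SDM dissimilarity: mean squared difference of the two SDMs."""
    return float(np.mean((signed_distance_map(a) - signed_distance_map(b)) ** 2))


def weak_supervision_loss(warped: np.ndarray, fixed: np.ndarray, lam: float = 0.1) -> float:
    """Hypothetical combination of both terms; lam is an illustrative weight."""
    return (1.0 - multiscale_dice(warped, fixed)) + lam * sdm_loss(warped, fixed)


# Usage: a cube-shaped "prostate" mask and a copy shifted by one voxel.
fixed = np.zeros((8, 8, 8))
fixed[2:6, 2:6, 2:6] = 1.0
warped = np.roll(fixed, 1, axis=0)
print(multiscale_dice(fixed, warped), sdm_loss(fixed, warped))
```

Pooling the masks before computing Dice gives coarse scales a say in the loss, which is one common way to obtain non-zero gradients even when the fine-scale overlap is small.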

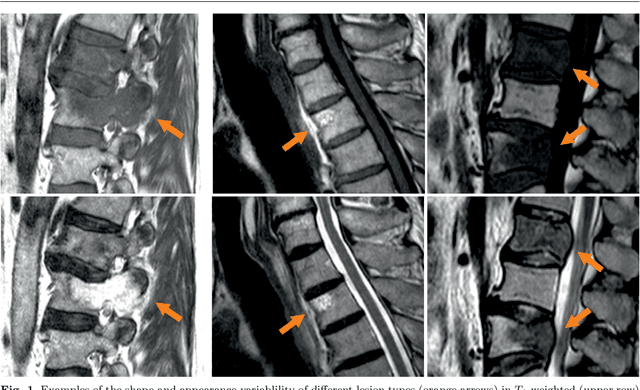

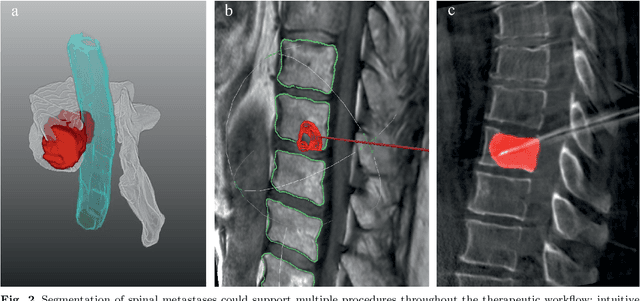

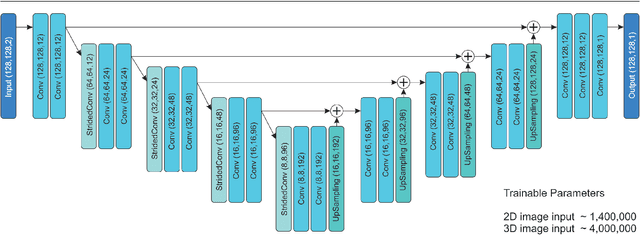

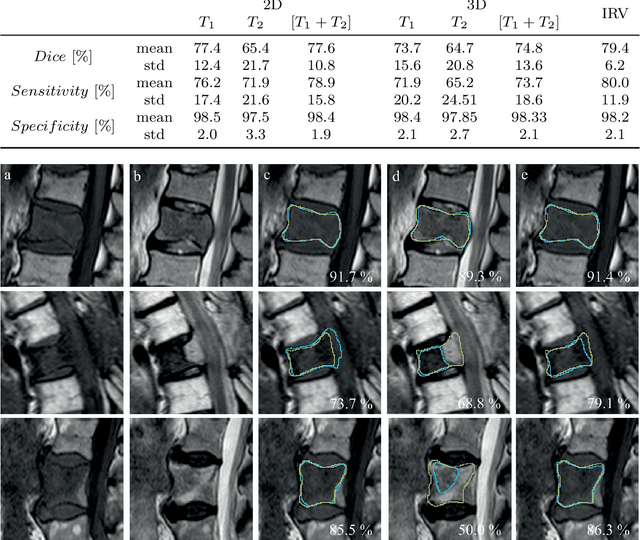

Spinal Metastases Segmentation in MR Imaging using Deep Convolutional Neural Networks

Jan 28, 2020

Abstract: The objective of this study was to segment spinal metastases in diagnostic MR images using a deep learning-based approach. Segmentation of such lesions can be a pivotal step towards enhanced therapy planning and validation, as well as intervention support during minimally invasive, image-guided procedures such as radiofrequency ablation. For this purpose, we used a U-Net-like architecture trained with 40 clinical cases that include both lytic and sclerotic lesion types and various MR sequences. We evaluated the proposed method with regard to various factors influencing segmentation quality, e.g. the MR sequences used and the input dimension. We quantitatively assessed our experiments using Dice coefficients, sensitivity and specificity rates. Compared to expert-annotated lesion segmentations, the experiments yielded promising results, with average Dice scores of up to 77.6% and mean sensitivity rates of up to 78.9%. To the best of our knowledge, this study is one of the first to tackle this particular problem, which limits direct comparability with related work. Compared with similar deep learning-based lesion segmentations, e.g. in liver MR images or spinal CT images, our experiments showed similar or, in some respects, superior segmentation quality. Overall, our automatic approach can provide almost expert-like segmentation accuracy in this challenging task.
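The three evaluation metrics mentioned above follow directly from the voxel-wise confusion counts between a predicted mask and an expert mask. The following is a minimal sketch, assuming binary masks of equal shape. The function names and the convention of returning 1.0 when a denominator is zero are illustrative choices, not taken from the study.

```python
import numpy as np


def confusion_counts(pred, truth):
    """Voxel-wise true/false positives and negatives for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))
    return tp, fp, fn, tn


def segmentation_scores(pred, truth):
    """Dice, sensitivity (recall) and specificity; empty denominators give 1.0."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    dice = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 1.0
    sensitivity = tp / (tp + fn) if (tp + fn) else 1.0
    specificity = tn / (tn + fp) if (tn + fp) else 1.0
    return {"dice": dice, "sensitivity": sensitivity, "specificity": specificity}


# Usage on a flattened toy example (1 = lesion voxel).
truth = [1, 1, 1, 1, 0, 0, 0, 0]
pred = [1, 1, 0, 0, 1, 0, 0, 0]
scores = segmentation_scores(pred, truth)
print(scores)  # tp=2, fp=1, fn=2, tn=3
```

Reported "average" scores would then be means of these per-case values over the evaluated cases. Because lesions occupy few voxels, specificity is usually close to 1, so Dice and sensitivity are the more informative numbers.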

This Far, No Further: Introducing Virtual Borders to Mobile Robots Using a Laser Pointer

Mar 04, 2019

Abstract: We address the problem of controlling the workspace of a 3-DoF mobile robot. In a shared human-robot space, robots should navigate in a human-acceptable way according to the users' demands. For this purpose, we employ virtual borders, i.e. non-physical borders that allow a user to restrict the robot's workspace. To this end, we propose an interaction method based on a laser pointer for intuitively defining virtual borders. This interaction method uses a previously developed framework based on robot guidance to change the robot's navigational behavior. Furthermore, we extend this framework to increase its flexibility by considering different types of virtual borders, i.e. polygons and curves separating an area. We evaluated our method with 15 non-expert users with respect to correctness, accuracy and teaching time. The experimental results revealed high accuracy and a teaching time that grows linearly with the border length, while the borders were correctly incorporated into the robot's navigational map. Finally, our user study showed that non-expert users can employ our interaction method.
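One plausible way to incorporate a virtual border into a navigational map is to rasterize it into an occupancy grid, so that a path planner treats the border cells as obstacles it cannot cross. This is a hypothetical sketch, not the framework described in the paper. It assumes a 2-D NumPy grid indexed `[y, x]`, borders given as vertex lists in cell coordinates, and the 0/100 free/occupied values used by common occupancy-grid conventions such as ROS. Bresenham's line algorithm draws each edge. A polygon is closed back to its first vertex, while a curve stays open.

```python
import numpy as np

FREE, OCCUPIED = 0, 100  # assumed occupancy values (0 = free, 100 = occupied)


def bresenham(x0, y0, x1, y1):
    """Integer grid cells on the segment from (x0, y0) to (x1, y1), all octants."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy
    return cells


def add_virtual_border(grid, vertices, closed):
    """Return a copy of grid with the border's cells marked OCCUPIED.

    closed=True treats vertices as a polygon; closed=False as an open curve.
    Cells outside the grid are silently skipped.
    """
    out = grid.copy()
    pts = list(vertices) + ([vertices[0]] if closed else [])
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        for x, y in bresenham(x0, y0, x1, y1):
            if 0 <= y < out.shape[0] and 0 <= x < out.shape[1]:
                out[y, x] = OCCUPIED
    return out


# Usage: a square polygon border and a wall-to-wall curve on a 10 x 10 map.
grid = np.full((10, 10), FREE, dtype=int)
square = add_virtual_border(grid, [(2, 2), (6, 2), (6, 6), (2, 6)], closed=True)
curve = add_virtual_border(grid, [(0, 5), (9, 5)], closed=False)
```

Marking only the border line, rather than filling the polygon interior, keeps the enclosed area itself free. Whether the robot ends up inside or outside the restricted area then depends on which side of the border it starts on.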

Virtual Border Teaching Using a Network Robot System

Feb 19, 2019

Abstract: Virtual borders are employed to allow users to flexibly and interactively define their mobile robots' workspaces and to ensure socially aware navigation in human-centered environments. They have been successfully defined using methods from human-robot interaction in which a user directly interacts with the robot. However, given the recent emergence of network robot systems (NRS) that enhance the perceptual and interaction abilities of a robot, we investigate the effect of such an NRS on the teaching of virtual borders and address the question of whether an intelligent environment can improve the teaching process. For this purpose, we propose an interaction method based on an NRS with a laser pointer as interaction device. This interaction method comprises an architecture that integrates robots into intelligent environments to support the teaching process in terms of interaction and feedback, the cooperation between stationary and mobile cameras to perceive laser spots, and an algorithm that extracts virtual borders from multiple camera observations. Our experimental results, acquired from 15 participants, show that our system is equally successful and accurate while featuring a significantly lower teaching time and a better user experience compared to an approach without NRS support.

Virtual Borders: Accurate Definition of a Mobile Robot's Workspace Using Augmented Reality

Oct 08, 2018

Abstract: We address the problem of interactively controlling the workspace of a mobile robot to ensure human-aware navigation. This is especially relevant for non-expert users living in shared human-robot spaces, e.g. home environments, since they want to keep control of their mobile robots, such as vacuum cleaning or companion robots. Therefore, we introduce virtual borders that a robot respects while performing its tasks. For this purpose, we employ an RGB-D Google Tango tablet as human-robot interface in combination with an augmented reality application to flexibly define virtual borders. We evaluated our system with 15 non-expert users with respect to accuracy, teaching time and correctness, and compared the results with baseline methods based on visual markers and a laser pointer. The experimental results show that our method achieves equally high accuracy while significantly reducing the teaching time compared to the baseline methods. This holds for different border lengths, shapes and variations in the teaching process. Finally, we demonstrated the correctness of the approach, i.e. the mobile robot changes its navigational behavior according to the user-defined virtual borders.

A Framework for Interactive Teaching of Virtual Borders to Mobile Robots

Feb 16, 2017

Abstract: The increasing number of robots in home environments leads to an emerging coexistence between humans and robots. Robots undertake common tasks and support residents in their everyday lives. People appreciate the presence of robots in their environment as long as they keep control over them. One important aspect is control over a robot's workspace. Therefore, we introduce virtual borders to precisely and flexibly define the workspace of mobile robots. First, we propose a novel framework that allows a person to interactively restrict a mobile robot's workspace. To show the validity of this framework, we implement it concretely using visual markers. Afterwards, the mobile robot is capable of performing its tasks while respecting the new virtual borders. The approach is accurate, flexible and less time-consuming than explicit robot programming. Hence, even non-experts are able to teach virtual borders to their robots, which is especially interesting in domains like vacuuming or service robots in home environments.
