Matias Quiroz

A Scalable Gradient-Based Optimization Framework for Sparse Minimum-Variance Portfolio Selection

May 15, 2025

Abstract:Portfolio optimization involves selecting asset weights to minimize a risk-reward objective, such as the portfolio variance in the classical minimum-variance framework. Sparse portfolio selection extends this by imposing a cardinality constraint: only $k$ assets from a universe of $p$ may be included. The standard approach models this problem as a mixed-integer quadratic program and relies on commercial solvers to find the optimal solution. However, the computational costs of such methods increase exponentially with $k$ and $p$, making them too slow for problems of even moderate size. We propose a fast and scalable gradient-based approach that transforms the combinatorial sparse selection problem into a constrained continuous optimization task via Boolean relaxation, while preserving equivalence with the original problem on the set of binary points. Our algorithm employs a tunable parameter that transmutes the auxiliary objective from a convex to a concave function. This allows a stable convex starting point, followed by a controlled path toward a sparse binary solution as the tuning parameter increases and the objective moves toward concavity. In practice, our method matches commercial solvers in asset selection for most instances and, in rare instances, the solution differs by a few assets whilst showing a negligible error in portfolio variance.

Variance reduction properties of the reparameterization trick

Oct 05, 2018

Abstract:The reparameterization trick is widely used in variational inference as it yields more accurate estimates of the gradient of the variational objective than alternative approaches such as the score function method. Although there is overwhelming empirical evidence in the literature showing its success, there is relatively little research exploring why the reparameterization trick is so effective. We explore this under the idealized assumptions that the variational approximation is a mean-field Gaussian density and that the log of the joint density of the model parameters and the data is a quadratic function that depends on the variational mean. From this, we show that the marginal variances of the reparameterization gradient estimator are smaller than those of the score function gradient estimator. We apply the result of our idealized analysis to real-world examples.
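The variance gap described in this abstract can be illustrated numerically. The sketch below is not taken from the paper: it assumes a one-dimensional Gaussian approximation $N(\mu, \sigma^2)$ and a hypothetical quadratic log joint $-\tfrac{1}{2}(\theta - a)^2$, and it compares the two estimators of the gradient of the expected log joint with respect to $\mu$. The function names and parameter values are illustrative choices.

```python
import random
import statistics


def log_joint(theta, a=0.0):
    # Hypothetical quadratic log joint density (up to a constant).
    return -0.5 * (theta - a) ** 2


def grad_log_joint(theta, a=0.0):
    # Derivative of log_joint with respect to theta.
    return -(theta - a)


def gradient_samples(mu, sigma, n, seed=0):
    """Draw n single-sample estimates of d/dmu E_q[log_joint(theta)]
    with q = N(mu, sigma^2), using both estimators on the same draws."""
    rng = random.Random(seed)
    reparam, score = [], []
    for _ in range(n):
        eps = rng.gauss(0.0, 1.0)
        theta = mu + sigma * eps  # reparameterized draw from q
        # Reparameterization estimator: differentiate through theta(mu, eps).
        reparam.append(grad_log_joint(theta))
        # Score function estimator: log_joint times d/dmu log q(theta).
        score.append(log_joint(theta) * (theta - mu) / sigma ** 2)
    return reparam, score


reparam, score = gradient_samples(mu=1.0, sigma=1.0, n=20000)
print("reparam mean/var:", statistics.fmean(reparam), statistics.variance(reparam))
print("score   mean/var:", statistics.fmean(score), statistics.variance(score))
```

Both estimators target the same gradient (here $-(\mu - a) = -1$), but under these assumptions the empirical variance of the reparameterization estimates should come out markedly smaller than that of the score function estimates.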

Subsampling MCMC - An introduction for the survey statistician

Sep 20, 2018

Abstract:The rapid development of computing power and efficient Markov Chain Monte Carlo (MCMC) simulation algorithms have revolutionized Bayesian statistics, making it a highly practical inference method in applied work. However, MCMC algorithms tend to be computationally demanding, and are particularly slow for large datasets. Data subsampling has recently been suggested as a way to make MCMC methods scalable on massively large data, utilizing efficient sampling schemes and estimators from the survey sampling literature. These developments tend to be unknown to many survey statisticians, who traditionally work with non-Bayesian methods and rarely use MCMC. Our article explains the idea of data subsampling in MCMC by reviewing one strand of work, Subsampling MCMC, a so-called pseudo-marginal MCMC approach to speeding up MCMC through data subsampling. The review is written for a survey statistician without previous knowledge of MCMC methods, since our aim is to motivate survey sampling experts to contribute to the growing Subsampling MCMC literature.

Subsampling Sequential Monte Carlo for Static Bayesian Models

May 08, 2018

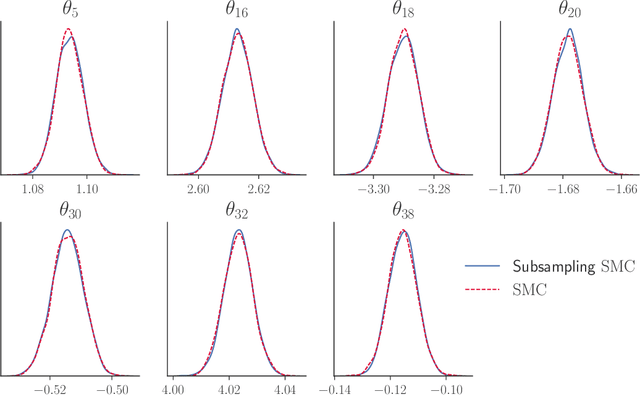

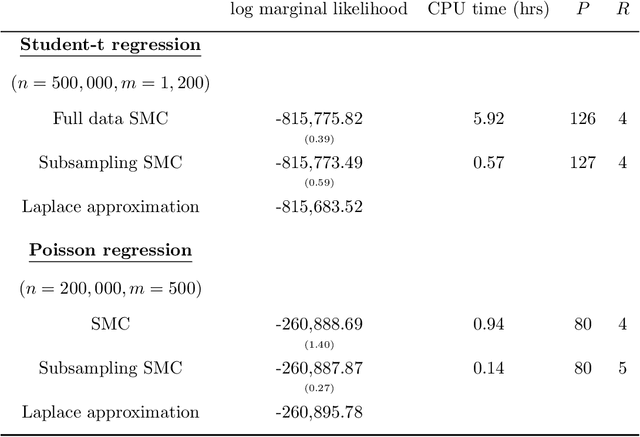

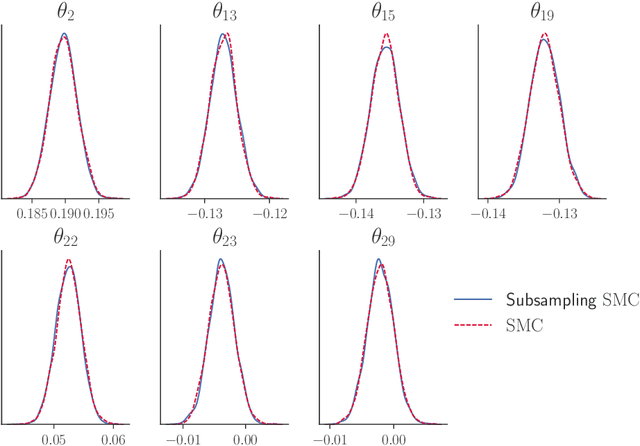

Abstract:Our article shows how to carry out Bayesian inference by combining data subsampling with Sequential Monte Carlo (SMC). This takes advantage of the attractive properties of SMC for Bayesian computations with the ability of subsampling to tackle big data problems. SMC sequentially updates a cloud of particles through a sequence of densities, beginning with a density that is easy to sample from such as the prior and ending with the posterior density. Each update of the particle cloud consists of three steps: reweighting, resampling, and moving. In the move step, each particle is moved using a Markov kernel and this is typically the most computationally expensive part, particularly when the dataset is large. It is crucial to have an efficient move step to ensure particle diversity. Our article makes two important contributions. First, in order to speed up the SMC computation, we use an approximately unbiased and efficient annealed likelihood estimator based on data subsampling. The subsampling approach is more memory efficient than the corresponding full data SMC, which is a great advantage for parallel computation. Second, we use a Metropolis within Gibbs kernel with two conditional updates. First, a Hamiltonian Monte Carlo update makes distant moves for the model parameters. Second, a block pseudo-marginal proposal is used for the particles corresponding to the auxiliary variables for the data subsampling. We demonstrate the usefulness of the methodology using two large datasets.
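The reweight, resample, and move cycle described in this abstract can be sketched for a toy problem. The code below is only an illustration under simplifying assumptions, not the paper's algorithm: it uses the full-data likelihood rather than a subsampled estimator, a random-walk Metropolis-Hastings move in place of Hamiltonian Monte Carlo, and a hypothetical model with a $N(0, 3^2)$ prior on the mean of unit-variance Gaussian data. All names (`smc`, `PRIOR_SD`, the tuning values) are made up for the example.

```python
import math
import random

PRIOR_SD = 3.0  # assumed prior standard deviation for the toy model


def log_lik(theta, data):
    # Gaussian log-likelihood with unit variance, up to an additive constant.
    return sum(-0.5 * (y - theta) ** 2 for y in data)


def log_prior(theta):
    return -0.5 * (theta / PRIOR_SD) ** 2


def smc(data, n_particles=500, n_stages=20, n_moves=2, step=0.3, rng=None):
    """Annealed SMC: move particles from the prior to the posterior through
    the densities prior(theta) * likelihood(theta)^a, a = 0, ..., 1."""
    rng = rng or random.Random(0)
    particles = [rng.gauss(0.0, PRIOR_SD) for _ in range(n_particles)]
    temps = [t / n_stages for t in range(n_stages + 1)]
    for a_prev, a in zip(temps, temps[1:]):
        # Reweight: incremental weight is likelihood^(a - a_prev).
        logw = [(a - a_prev) * log_lik(th, data) for th in particles]
        top = max(logw)
        weights = [math.exp(lw - top) for lw in logw]
        # Resample: multinomial resampling proportional to the weights.
        particles = rng.choices(particles, weights=weights, k=n_particles)

        # Move: random-walk Metropolis-Hastings steps targeting the
        # current annealed density, to restore particle diversity.
        def log_target(th, a=a):
            return log_prior(th) + a * log_lik(th, data)

        moved = []
        for th in particles:
            lt = log_target(th)
            for _ in range(n_moves):
                prop = th + rng.gauss(0.0, step)
                lp = log_target(prop)
                if rng.random() < math.exp(min(0.0, lp - lt)):
                    th, lt = prop, lp
            moved.append(th)
        particles = moved
    return particles


data_rng = random.Random(1)
data = [data_rng.gauss(1.0, 1.0) for _ in range(50)]
particles = smc(data, rng=random.Random(2))
print("SMC posterior mean estimate:", sum(particles) / len(particles))
```

For this conjugate toy model the posterior mean is available in closed form, `sum(data) / (len(data) + 1 / PRIOR_SD**2)`, which gives a simple check on the particle estimate.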

Gaussian variational approximation for high-dimensional state space models

Apr 25, 2018

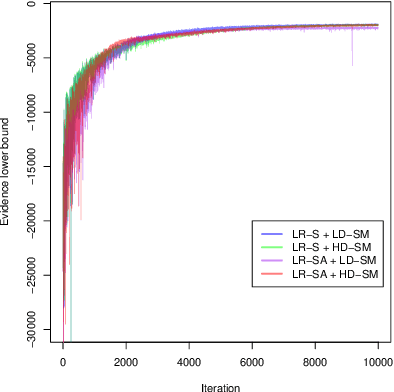

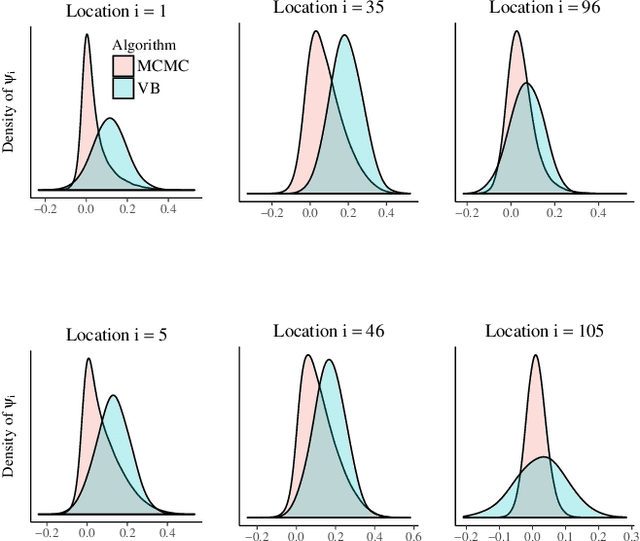

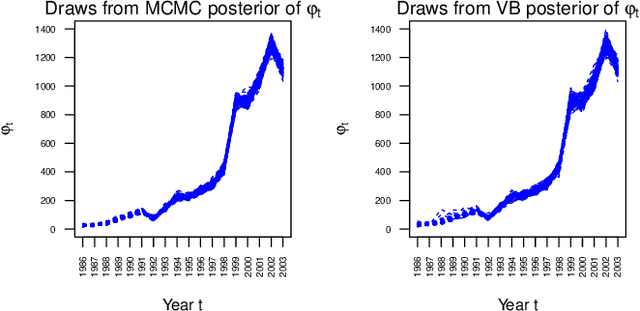

Abstract:This article considers variational approximations of the posterior distribution in a high-dimensional state space model. The variational approximation is a multivariate Gaussian density, in which the variational parameters to be optimized are a mean vector and a covariance matrix. The number of parameters in the covariance matrix grows as the square of the number of model parameters, so it is necessary to find simple yet effective parametrizations of the covariance structure when the number of model parameters is large. The joint posterior distribution over the high-dimensional state vectors is approximated using a dynamic factor model, with Markovian dependence in time and a factor covariance structure for the states. This gives a reduced dimension description of the dependence structure for the states, as well as a temporal conditional independence structure similar to that in the true posterior. We illustrate our approach in two high-dimensional applications which are challenging for Markov chain Monte Carlo sampling. The first is a spatio-temporal model for the spread of the Eurasian Collared-Dove across North America. The second is a multivariate stochastic volatility model for financial returns via a Wishart process.

The block-Poisson estimator for optimally tuned exact subsampling MCMC

Apr 10, 2018

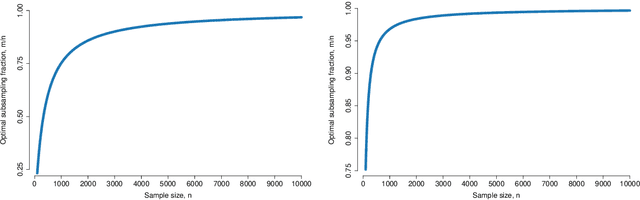

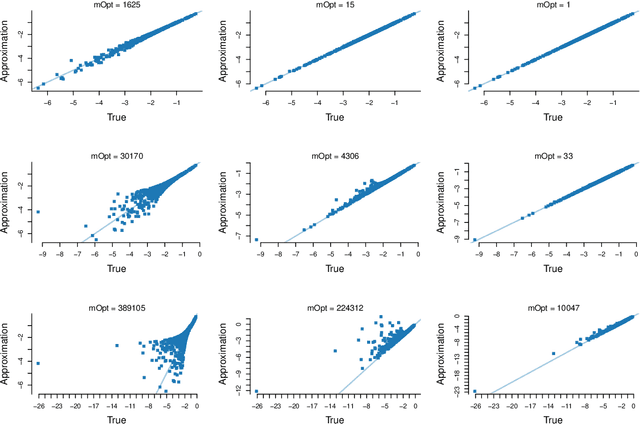

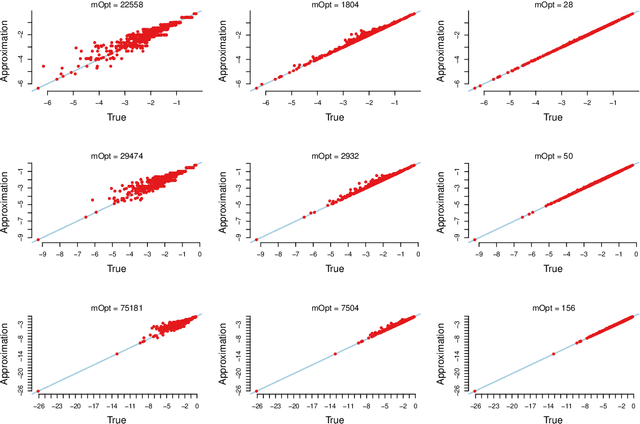

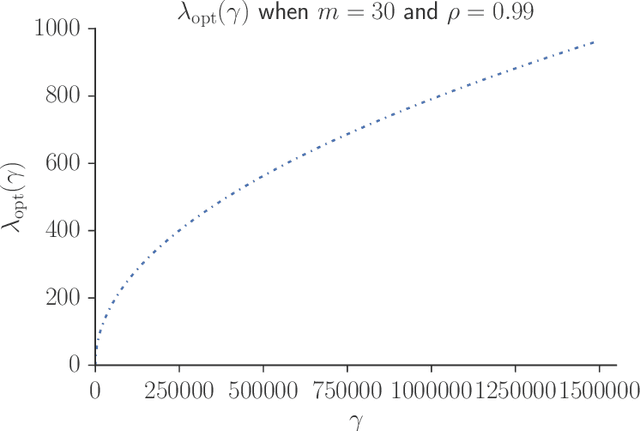

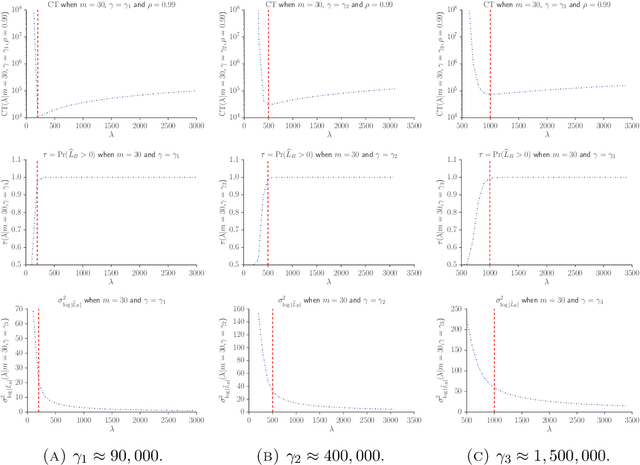

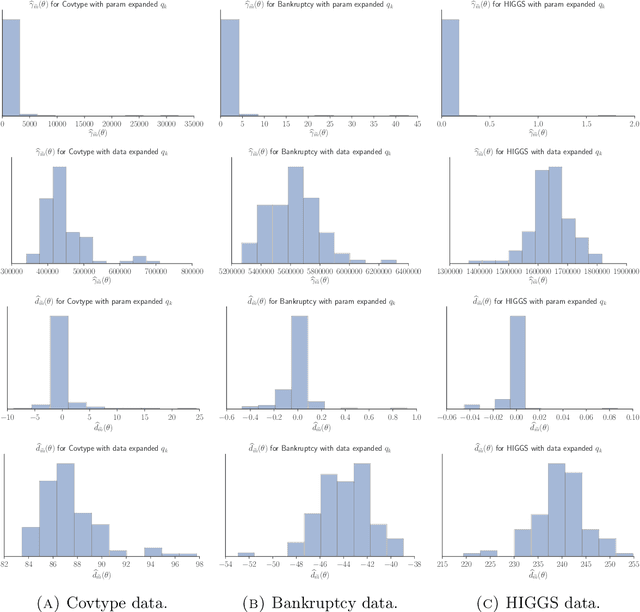

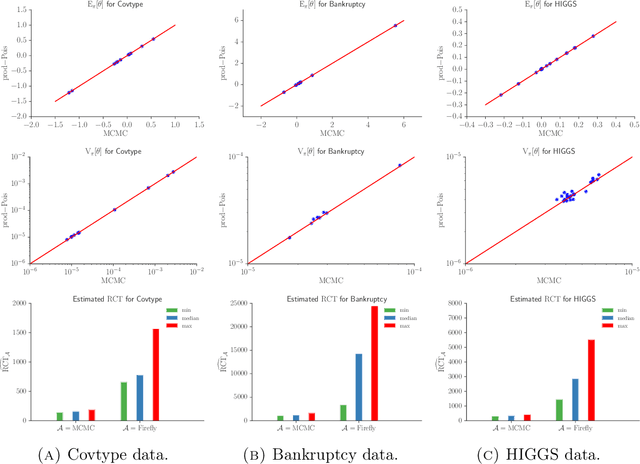

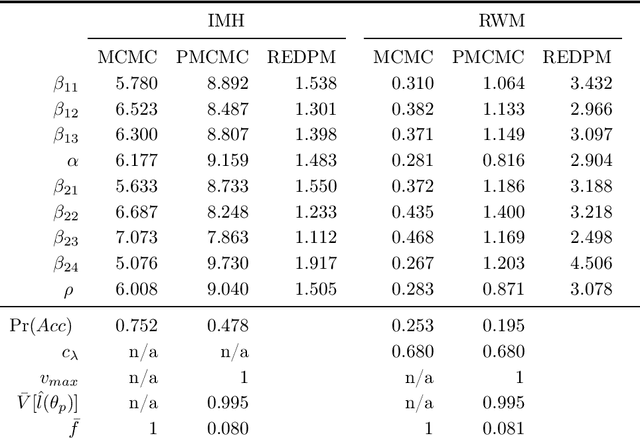

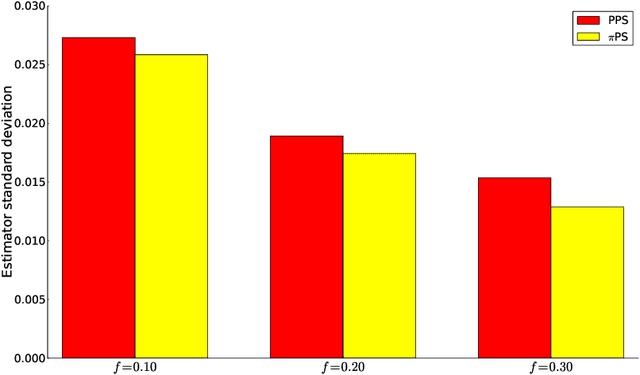

Abstract:Speeding up Markov Chain Monte Carlo (MCMC) for datasets with many observations by data subsampling has recently received considerable attention in the literature. The currently available methods are either approximate, highly inefficient or limited to small dimensional models. We propose a pseudo-marginal MCMC method that estimates the likelihood by data subsampling using a block-Poisson estimator. The estimator is a product of Poisson estimators, each based on an independent subset of the observations. The construction allows us to update a subset of the blocks in each MCMC iteration, thereby inducing a controllable correlation between the estimates at the current and proposed draw in the Metropolis-Hastings ratio. This makes it possible to use highly variable likelihood estimators without adversely affecting the sampling efficiency. Poisson estimators are unbiased but not necessarily positive. We therefore follow Lyne et al. (2015) and run the MCMC on the absolute value of the estimator and use an importance sampling correction for occasionally negative likelihood estimates to estimate expectations of any function of the parameters. We provide analytically derived guidelines to select the optimal tuning parameters for the algorithm by minimizing the variance of the importance sampling corrected estimator per unit of computing time. The guidelines are derived under idealized conditions, but are demonstrated to be quite accurate in empirical experiments. The guidelines apply to any pseudo-marginal algorithm if the likelihood is estimated by the block-Poisson estimator, including the class of doubly intractable problems in Lyne et al. (2015). We illustrate the method in a logistic regression example and find dramatic improvements compared to regular MCMC without subsampling and a popular exact subsampling approach recently proposed in the literature.

Speeding Up MCMC by Efficient Data Subsampling

Jan 01, 2018

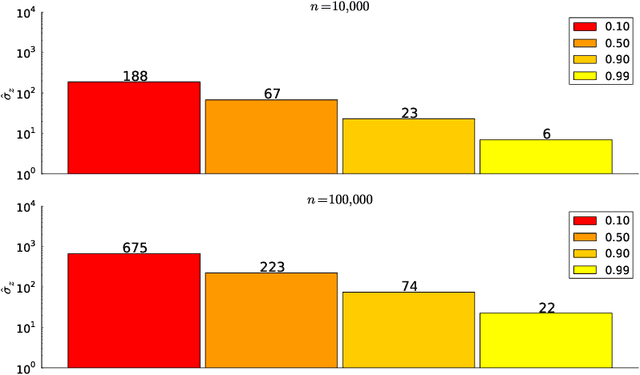

Abstract:We propose Subsampling MCMC, a Markov Chain Monte Carlo (MCMC) framework where the likelihood function for $n$ observations is estimated from a random subset of $m$ observations. We introduce a highly efficient unbiased estimator of the log-likelihood based on control variates, such that the computing cost is much smaller than that of the full log-likelihood in standard MCMC. The likelihood estimate is bias-corrected and used in two dependent pseudo-marginal algorithms to sample from a perturbed posterior, for which we derive the asymptotic error with respect to $n$ and $m$, respectively. We propose a practical estimator of the error and show that the error is negligible even for a very small $m$ in our applications. We demonstrate that Subsampling MCMC is substantially more efficient than standard MCMC in terms of sampling efficiency for a given computational budget, and that it outperforms other subsampling methods for MCMC proposed in the literature.

Hamiltonian Monte Carlo with Energy Conserving Subsampling

Aug 02, 2017

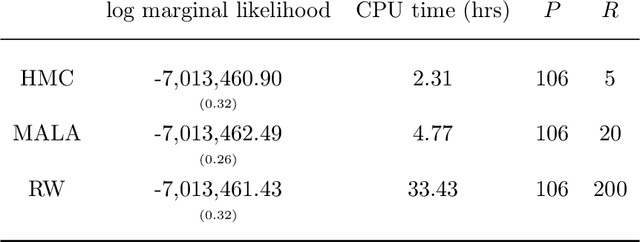

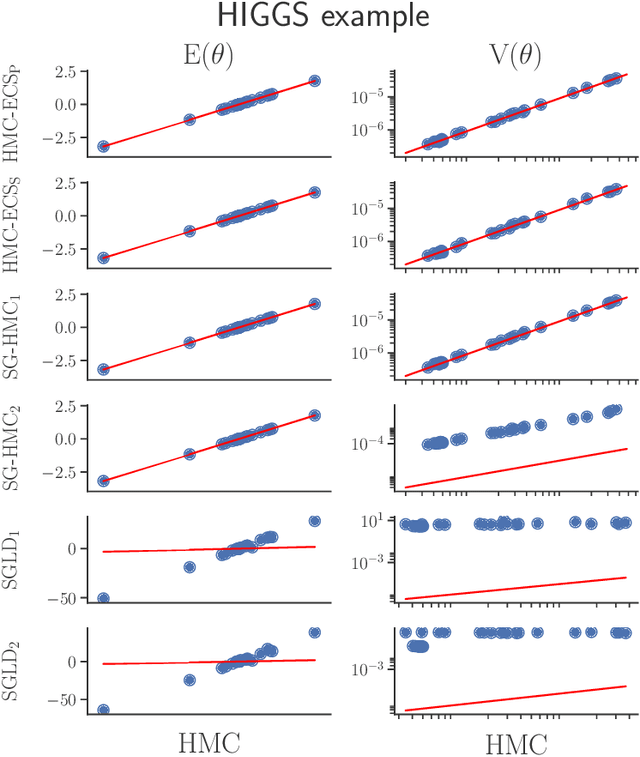

Abstract:Hamiltonian Monte Carlo (HMC) has recently received considerable attention in the literature due to its ability to overcome the slow exploration of the parameter space inherent in random walk proposals. In tandem, data subsampling has been extensively used to overcome the computational bottlenecks in posterior sampling algorithms that require evaluating the likelihood over the whole data set, or its gradient. However, while data subsampling has been successful in traditional MCMC algorithms such as Metropolis-Hastings, it has been demonstrated to be unsuccessful in the context of HMC, both in terms of poor sampling efficiency and in producing highly biased inferences. We propose an efficient HMC-within-Gibbs algorithm that utilizes data subsampling to speed up computations and simulates from a slightly perturbed target, which is within $O(m^{-2})$ of the true target, where $m$ is the size of the subsample. We also show how to modify the method to obtain exact inference on any function of the parameters. Contrary to previous unsuccessful approaches, we perform subsampling in a way that conserves energy but for a modified Hamiltonian. We can therefore maintain high acceptance rates even for distant proposals. We apply the method to simulate from the posterior distribution of a high-dimensional spline model for bankruptcy data, document speedups of several orders of magnitude compared to standard HMC, and demonstrate a negligible bias.

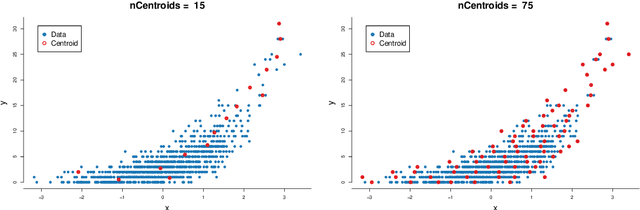

Scalable MCMC for Large Data Problems using Data Subsampling and the Difference Estimator

Aug 02, 2017

Abstract:We propose a generic Markov Chain Monte Carlo (MCMC) algorithm to speed up computations for datasets with many observations. A key feature of our approach is the use of the highly efficient difference estimator from the survey sampling literature to estimate the log-likelihood accurately using only a small fraction of the data. Our algorithm improves on the $O(n)$ complexity of regular MCMC by operating over local data clusters instead of the full sample when computing the likelihood. The likelihood estimate is used in a pseudo-marginal framework to sample from a perturbed posterior which is within $O(m^{-1/2})$ of the true posterior, where $m$ is the subsample size. The method is applied to a logistic regression model to predict firm bankruptcy for a large data set. We document a significant speedup in comparison to standard MCMC on the full dataset.
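A minimal sketch of the difference estimator idea for a log-likelihood follows. It makes assumptions that are not in the abstract: a one-parameter logistic regression, simple random sampling with replacement, and control variates built from a second-order Taylor expansion around a reference value `theta_star` (the abstract instead mentions local data clusters). All function names are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_lik_i(theta, x, y):
    # Log-likelihood contribution of one observation in logistic regression.
    z = x * theta
    return y * z - math.log1p(math.exp(z))


def control_variate_i(theta, theta_star, x, y):
    # Second-order Taylor expansion of log_lik_i around theta_star
    # (an assumed choice of control variate for this sketch).
    p = sigmoid(x * theta_star)
    d = theta - theta_star
    return (log_lik_i(theta_star, x, y)
            + (y - p) * x * d
            - 0.5 * p * (1.0 - p) * x * x * d * d)


def difference_estimate(theta, theta_star, xs, ys, m, rng):
    """Unbiased estimate of the full log-likelihood: the sum of all control
    variates plus a scaled sum of residuals over a random subsample of size m."""
    n = len(xs)
    q_sum = sum(control_variate_i(theta, theta_star, x, y)
                for x, y in zip(xs, ys))
    idx = rng.choices(range(n), k=m)  # sampling with replacement
    resid = sum(log_lik_i(theta, xs[i], ys[i])
                - control_variate_i(theta, theta_star, xs[i], ys[i])
                for i in idx)
    return q_sum + (n / m) * resid


rng = random.Random(0)
xs = [rng.gauss(0.0, 1.0) for _ in range(2000)]
ys = [1 if rng.random() < sigmoid(0.5 * x) else 0 for x in xs]
full = sum(log_lik_i(0.6, x, y) for x, y in zip(xs, ys))
est = difference_estimate(0.6, 0.5, xs, ys, m=100, rng=rng)
print("full log-likelihood:", full)
print("difference estimate:", est)
```

The sketch recomputes the control-variate sum over all observations for clarity. Because each control variate here is a quadratic in `theta - theta_star`, the three coefficient sums could instead be precomputed once, so that only the $m$ subsampled residuals need evaluating at each new `theta`.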
