Mariella Dreissig

Hierarchical Insights: Exploiting Structural Similarities for Reliable 3D Semantic Segmentation

Apr 09, 2024

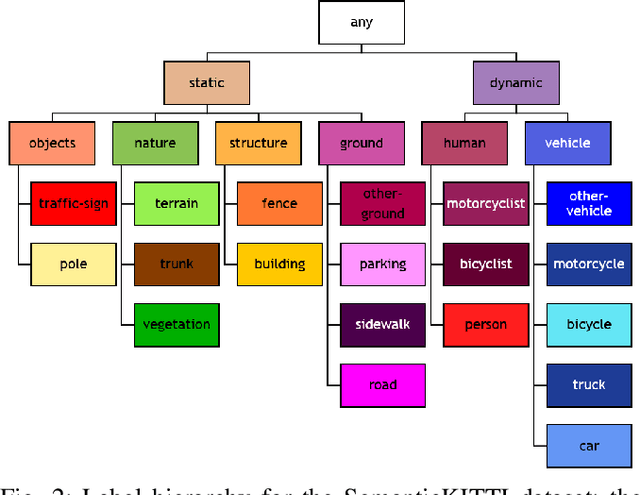

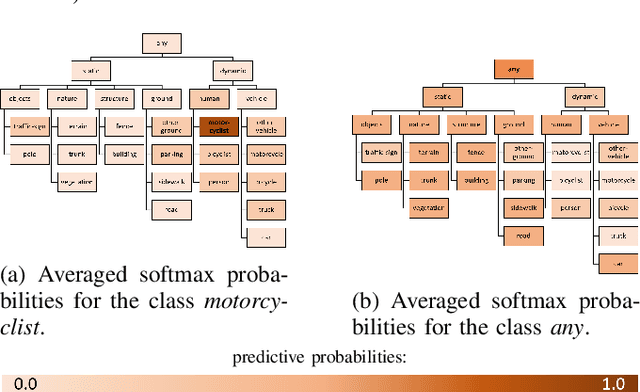

Abstract: Safety-critical applications like autonomous driving call for robust 3D environment perception algorithms which can withstand highly diverse and ambiguous surroundings. The predictive performance of any classification model strongly depends on the underlying dataset and the prior knowledge conveyed by the annotated labels. While the labels provide a basis for the learning process, they usually fail to represent inherent relations between the classes, representations which are a natural element of the human perception system. We propose a training strategy which enables a 3D LiDAR semantic segmentation model to learn structural relationships between the different classes through abstraction. We achieve this by implicitly modeling those relationships through a learning rule for hierarchical multi-label classification (HMC). With a detailed analysis, we show how this training strategy not only improves the model's confidence calibration, but also preserves additional information for downstream tasks like fusion, prediction and planning.
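To illustrate why hierarchical information can be useful downstream, the following is a minimal sketch that sums leaf-class probabilities into superclass probabilities. It is not the learning rule from the paper: the class names, the two-level `HIERARCHY`, and the `parent_probabilities` helper are all hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical two-level class hierarchy (not taken from the paper).
LEAVES = ["car", "truck", "pedestrian", "cyclist"]
HIERARCHY = {
    "vehicle": ["car", "truck"],
    "human": ["pedestrian", "cyclist"],
}


def parent_probabilities(leaf_probs):
    """Sum the leaf-class probabilities of one point into superclass probabilities."""
    leaf_probs = np.asarray(leaf_probs, dtype=float)
    return {
        parent: float(sum(leaf_probs[LEAVES.index(child)] for child in children))
        for parent, children in HIERARCHY.items()
    }


# A point that is ambiguous between "car" and "truck" at the leaf level
# still carries a strong signal at the superclass level.
ambiguous_point = [0.45, 0.45, 0.05, 0.05]
superclass_probs = parent_probabilities(ambiguous_point)
```

In this toy case the leaf-level prediction is undecided, while the aggregated "vehicle" probability remains high, which is the kind of additional information a planner or fusion stage could still use.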

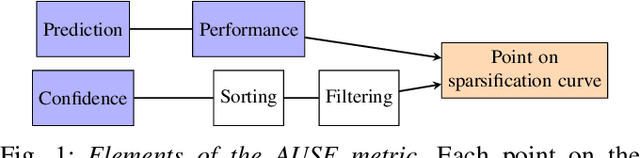

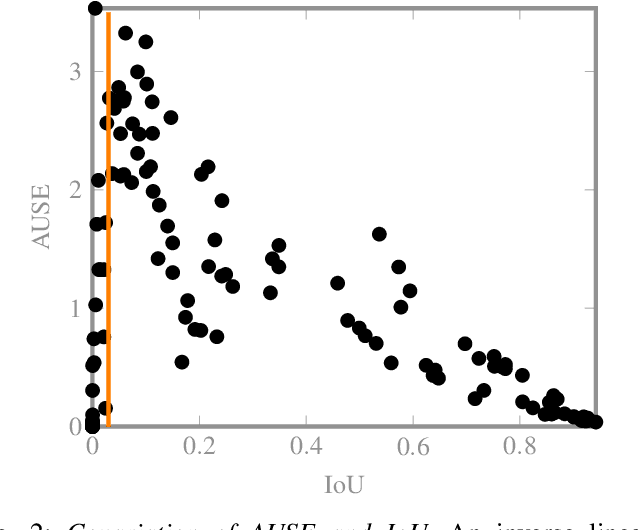

On the Calibration of Uncertainty Estimation in LiDAR-based Semantic Segmentation

Aug 04, 2023

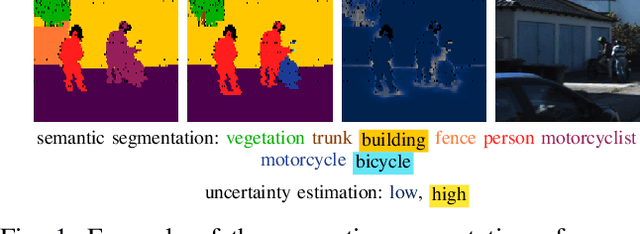

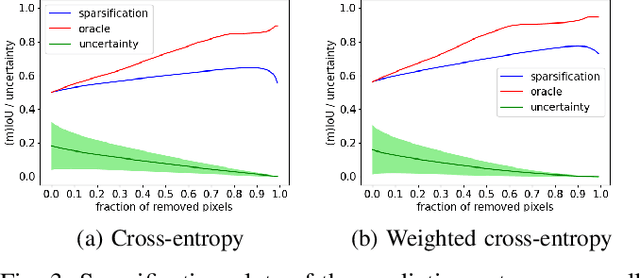

Abstract: The confidence calibration of deep learning-based perception models plays a crucial role in their reliability. Especially in the context of autonomous driving, downstream tasks like prediction and planning depend on accurate confidence estimates. In point-wise multiclass classification tasks like semantic segmentation, the model has to deal with heavy class imbalances. Due to their underrepresentation, the confidence calibration of classes with smaller instances is challenging but essential, not only for safety reasons. We propose a metric to measure the confidence calibration quality of a semantic segmentation model with respect to individual classes. It is calculated by computing sparsification curves for each class based on the uncertainty estimates. We use the classification calibration metric to evaluate uncertainty estimation methods with respect to their confidence calibration of underrepresented classes. We furthermore suggest a second use of the method: automatically finding label problems to improve the quality of hand- or auto-annotated datasets.
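As a rough illustration of a class-wise sparsification curve, the sketch below removes the most uncertain points of each class step by step and records the error rate of the remaining points. The exact metric in the paper is not specified in the abstract, so the function names, the step scheme, and the use of the misclassification rate as the error measure are assumptions.

```python
import numpy as np


def sparsification_curve(errors, uncertainty, num_steps=10):
    """Error rate of the remaining points after removing the most uncertain ones.

    Step i removes the i/num_steps most uncertain fraction of the points.
    """
    errors = np.asarray(errors, dtype=float)
    uncertainty = np.asarray(uncertainty, dtype=float)
    order = np.argsort(-uncertainty, kind="stable")  # most uncertain first
    sorted_errors = errors[order]
    n = len(sorted_errors)
    fractions = np.arange(num_steps) / num_steps
    # Integer arithmetic keeps the cut index exact and always below n.
    curve = np.array(
        [sorted_errors[(i * n) // num_steps:].mean() for i in range(num_steps)]
    )
    return fractions, curve


def classwise_sparsification(labels, preds, uncertainty, num_steps=10):
    """Compute one sparsification curve per ground-truth class."""
    labels = np.asarray(labels)
    preds = np.asarray(preds)
    uncertainty = np.asarray(uncertainty, dtype=float)
    errors = (labels != preds).astype(float)
    curves = {}
    for c in np.unique(labels):
        mask = labels == c
        curves[int(c)] = sparsification_curve(errors[mask], uncertainty[mask], num_steps)
    return curves


# Toy example with two classes; high uncertainty coincides with the errors.
labels = [0, 0, 0, 0, 1, 1]
preds = [0, 0, 1, 1, 1, 0]
uncertainty = [0.1, 0.2, 0.9, 0.8, 0.1, 0.7]
curves = classwise_sparsification(labels, preds, uncertainty, num_steps=4)
```

If the uncertainty estimates are informative for a class, its curve should fall as more points are removed; a flat or rising curve hints at poor calibration for that class.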

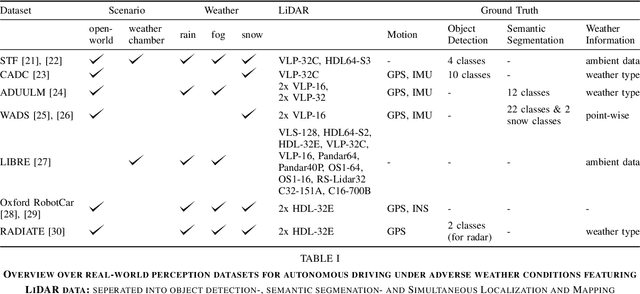

Survey on LiDAR Perception in Adverse Weather Conditions

Apr 13, 2023

Abstract: Autonomous vehicles rely on a variety of sensors to gather information about their surroundings. The vehicle's behavior is planned based on the environment perception, making its reliability crucial for safety reasons. The active LiDAR sensor is able to create an accurate 3D representation of a scene, making it a valuable addition for environment perception for autonomous vehicles. Due to light scattering and occlusion, the LiDAR's performance changes under adverse weather conditions like fog, snow or rain. This limitation recently fostered a large body of research on approaches to alleviate the decrease in perception performance. In this survey, we gathered, analyzed, and discussed different aspects of dealing with adverse weather conditions in LiDAR-based environment perception. We address topics such as the availability of appropriate data, raw point cloud processing and denoising, robust perception algorithms and sensor fusion to mitigate adverse weather induced shortcomings. We furthermore identify the most pressing gaps in the current literature and pinpoint promising research directions.

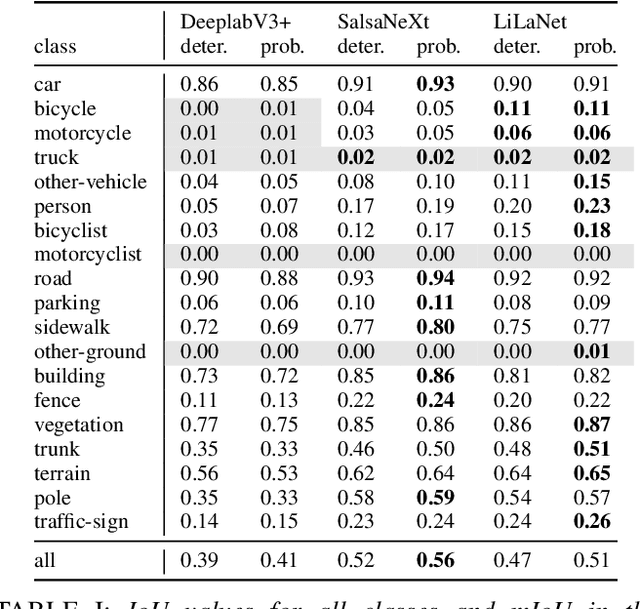

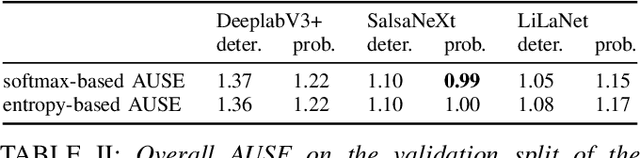

On the calibration of underrepresented classes in LiDAR-based semantic segmentation

Oct 13, 2022

Abstract: The calibration of deep learning-based perception models plays a crucial role in their reliability. Our work focuses on a class-wise evaluation of several models' confidence performance for LiDAR-based semantic segmentation with the aim of providing insights into the calibration of underrepresented classes. Those classes often include vulnerable road users (VRUs) and are thus of particular interest for safety reasons. With the help of a metric based on sparsification curves, we compare the calibration abilities of three semantic segmentation models with different architectural concepts, each in a deterministic and a probabilistic version. By identifying and describing the dependency between the predictive performance of a class and the respective calibration quality, we aim to facilitate the model selection and refinement for safety-critical applications.

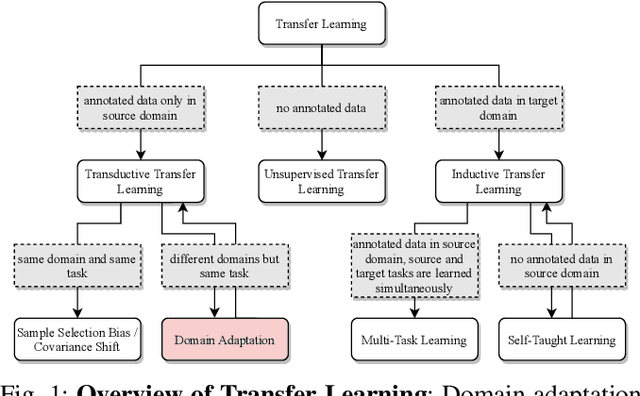

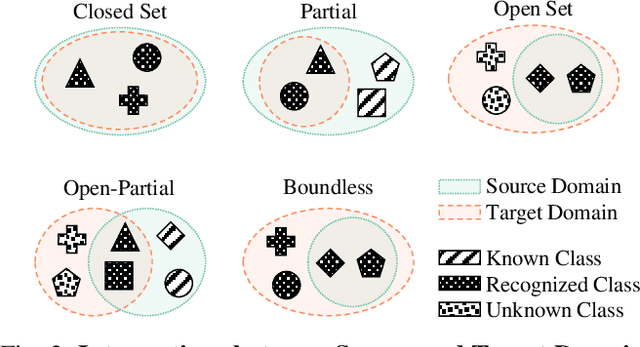

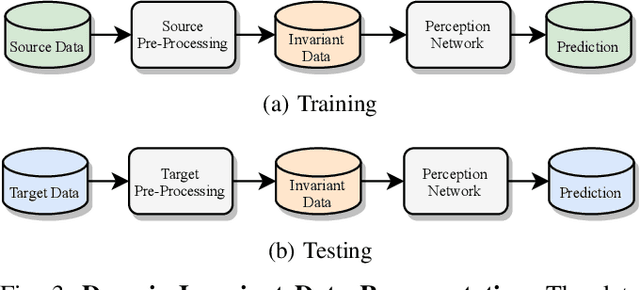

A Survey on Deep Domain Adaptation for LiDAR Perception

Jun 07, 2021

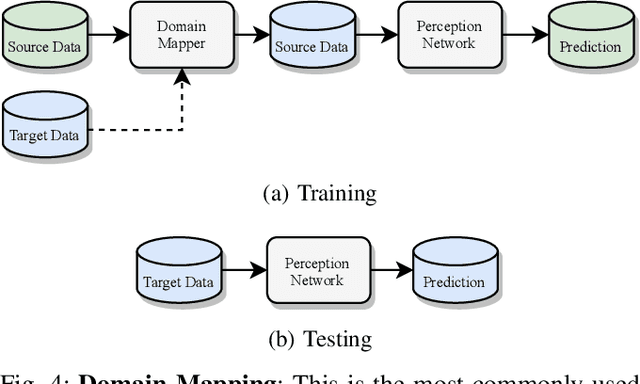

Abstract: Scalable systems for automated driving have to reliably cope with an open-world setting. This means that the perception systems are exposed to drastic domain shifts, like changes in weather conditions, time-dependent aspects, or geographic regions. Covering all domains with annotated data is impossible because of the endless variations of domains and the time-consuming and expensive annotation process. Furthermore, fast development cycles of the system additionally introduce hardware changes, such as sensor types and vehicle setups, and the required knowledge transfer from simulation. To enable scalable automated driving, it is therefore crucial to address these domain shifts in a robust and efficient manner. Over the last years, a vast number of different domain adaptation techniques have evolved. A number of survey papers already exist for domain adaptation on camera images; however, a survey for LiDAR perception is absent. Nevertheless, LiDAR is a vital sensor for automated driving that provides detailed 3D scans of the vehicle's surroundings. To stimulate future research, this paper presents a comprehensive review of recent progress in domain adaptation methods and formulates interesting research questions specifically targeted towards LiDAR perception.

Driver Drowsiness Classification Based on Eye Blink and Head Movement Features Using the k-NN Algorithm

Sep 28, 2020

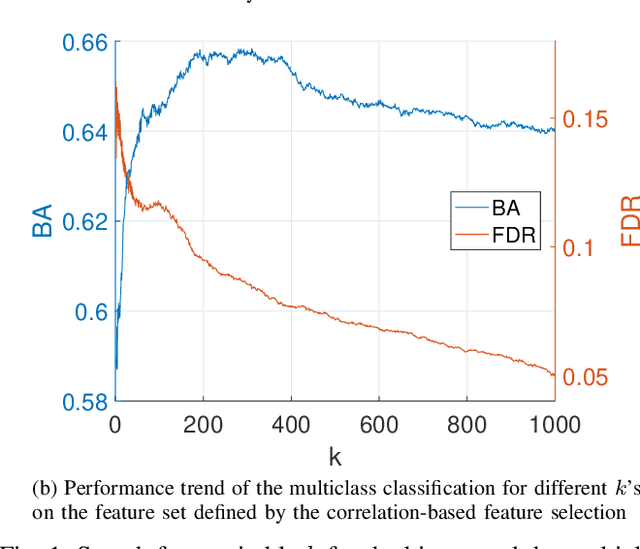

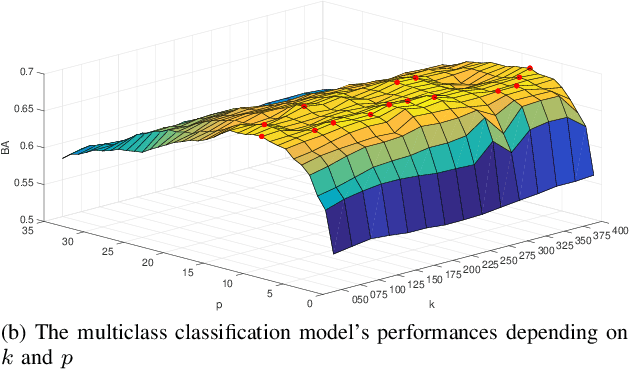

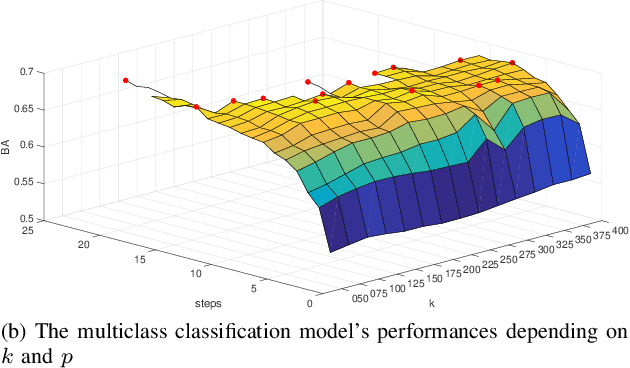

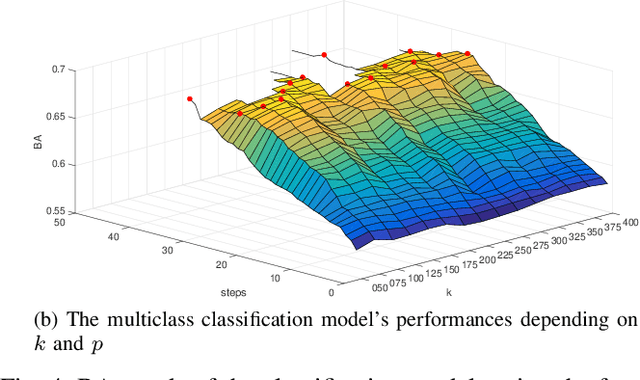

Abstract: Modern advanced driver-assistance systems analyze the driving performance to gather information about the driver's state. Such systems are able, for example, to detect signs of drowsiness by evaluating the steering or lane keeping behavior and to alert the driver when the drowsiness state reaches a critical level. However, these kinds of systems have no access to direct cues about the driver's state. Hence, the aim of this work is to extend the driver drowsiness detection in vehicles using signals of a driver monitoring camera. For this purpose, 35 features related to the driver's eye blinking behavior and head movements are extracted in driving simulator experiments. Based on that large dataset, we developed and evaluated a feature selection method based on the k-Nearest Neighbor algorithm for the driver's state classification. A concluding analysis of the best performing feature sets yields valuable insights about the influence of drowsiness on the driver's blink behavior and head movements. These findings will help in the future development of robust and reliable driver drowsiness monitoring systems to prevent fatigue-induced accidents.
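One common way to combine k-NN with feature selection is a greedy forward search scored by leave-one-out k-NN accuracy. The sketch below shows that generic wrapper approach on synthetic data; the abstract does not describe the paper's actual selection procedure, so the function names, the value of `k`, the stopping rule, and the toy data are all assumptions.

```python
import numpy as np


def knn_loo_accuracy(X, y, k=3):
    """Leave-one-out accuracy of a k-NN majority vote with Euclidean distance."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)  # a sample must not vote for itself
    neighbors = np.argsort(dist, axis=1, kind="stable")[:, :k]
    correct = 0
    for i, idx in enumerate(neighbors):
        values, counts = np.unique(y[idx], return_counts=True)
        correct += int(values[np.argmax(counts)] == y[i])
    return correct / len(y)


def forward_select(X, y, k=3, max_features=None):
    """Greedily add the feature that most improves leave-one-out k-NN accuracy."""
    X = np.asarray(X, dtype=float)
    remaining = list(range(X.shape[1]))
    selected, best_score = [], 0.0
    limit = max_features or X.shape[1]
    while remaining and len(selected) < limit:
        scores = [(knn_loo_accuracy(X[:, selected + [j]], y, k), j) for j in remaining]
        score, j = max(scores, key=lambda t: t[0])
        if score <= best_score:  # stop when no candidate improves the score
            break
        selected.append(j)
        remaining.remove(j)
        best_score = score
    return selected, best_score


# Synthetic data: feature 0 separates the two classes, feature 1 is pure noise.
rng = np.random.default_rng(0)
y = np.repeat([0, 1], 20)
X = np.column_stack([y * 10 + rng.normal(0, 0.1, 40), rng.normal(0, 5, 40)])
selected, score = forward_select(X, y, k=3)
```

On this toy data the search keeps only the informative feature, since adding the noise feature cannot improve an already perfect leave-one-out score.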
