Marco Micheletto

Deep Data Hiding for ICAO-Compliant Face Images: A Survey

Aug 26, 2025

Abstract:ICAO-compliant facial images, initially designed for secure biometric passports, are increasingly becoming central to identity verification in a wide range of application contexts, including border control, digital travel credentials, and financial services. While their standardization enables global interoperability, it also facilitates practices such as morphing and deepfakes, which can be exploited for harmful purposes like identity theft and illegal sharing of identity documents. Traditional countermeasures like Presentation Attack Detection (PAD) are limited to real-time capture and offer no post-capture protection. This survey paper investigates digital watermarking and steganography as complementary solutions that embed tamper-evident signals directly into the image, enabling persistent verification without compromising ICAO compliance. We provide the first comprehensive analysis of state-of-the-art techniques to evaluate the potential and drawbacks of the underlying approaches concerning the applications involving ICAO-compliant images and their suitability under standard constraints. We highlight key trade-offs, offering guidance for secure deployment in real-world identity systems.

Fragile Watermarking for Image Certification Using Deep Steganographic Embedding

Apr 18, 2025

Abstract:Modern identity verification systems increasingly rely on facial images embedded in biometric documents such as electronic passports. To ensure global interoperability and security, these images must comply with strict standards defined by the International Civil Aviation Organization (ICAO), which specify acquisition, quality, and format requirements. However, once issued, these images may undergo unintentional degradations (e.g., compression, resizing) or malicious manipulations (e.g., morphing) and deceive facial recognition systems. In this study, we explore fragile watermarking based on deep steganographic embedding as a proactive mechanism to certify the authenticity of ICAO-compliant facial images. By embedding a hidden image within the official photo at the time of issuance, we establish an integrity marker that becomes sensitive to any post-issuance modification. We assess how a range of image manipulations affects the recovered hidden image and show that degradation artifacts can serve as robust forensic cues. Furthermore, we propose a classification framework that analyzes the revealed content to detect and categorize the type of manipulation applied. Our experiments demonstrate high detection accuracy, including cross-method scenarios with multiple deep steganography-based models. These findings support the viability of fragile watermarking via steganographic embedding as a valuable tool for biometric document integrity verification.

Improving fingerprint presentation attack detection by an approach integrated into the personal verification stage

Apr 15, 2025

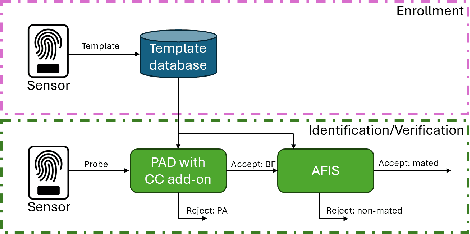

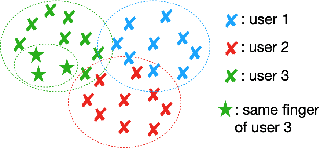

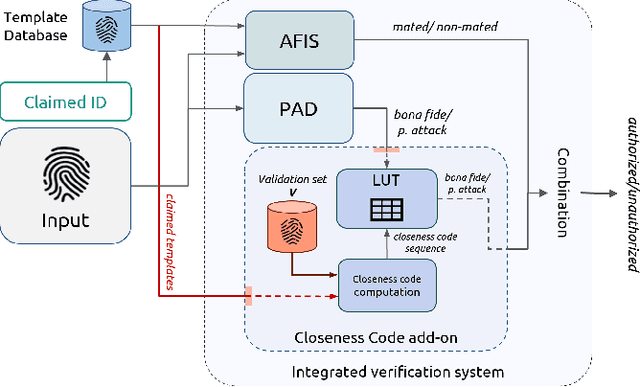

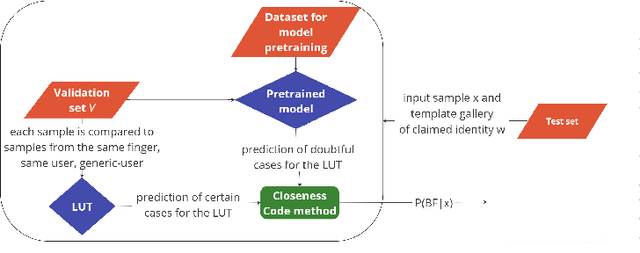

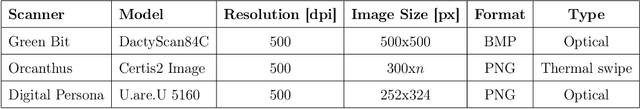

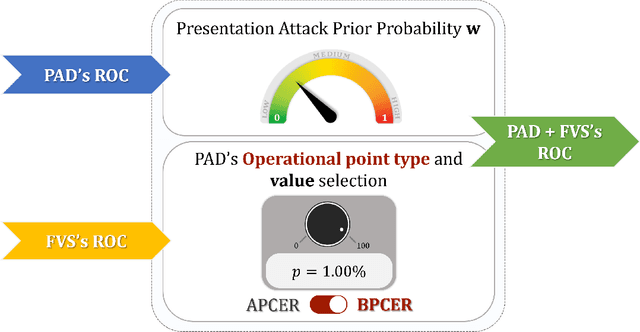

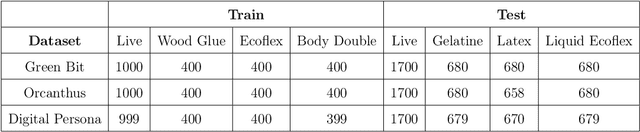

Abstract:Presentation Attack Detection (PAD) systems are usually designed independently of the fingerprint verification system. While this can be acceptable for use cases where specific user templates are not predetermined, it represents a missed opportunity to enhance security in scenarios where integrating PAD with the fingerprint verification system could significantly leverage users' templates, which are the real target of a potential presentation attack. This does not mean that a PAD should be specifically designed for such users; that would imply the availability of many enrolled users' PAIs and, consequently, increased complexity, time, and cost. On the contrary, we propose to equip a basic PAD, designed according to the state of the art, with an innovative add-on module called the Closeness Binary Code (CC) module. The term "closeness" refers to a peculiar property of the bona fide-related features: in a Euclidean feature space, genuine fingerprints tend to cluster in a specific pattern. Samples from the same finger are closest to each other, followed by samples from other fingers of the same user and, finally, samples from fingers of other users. This property was statistically verified in our previous publication and is further confirmed in this paper. It is independent of the user population and the feature set class, which can be handcrafted or deep network-based (embeddings). Therefore, the add-on can be designed without the need for the targeted user's samples; moreover, it exploits the "closeness" property of her/his samples during the verification stage. Extensive experiments on benchmark datasets and state-of-the-art PAD methods confirm the benefits of the proposed add-on, which can be easily coupled with the main PAD module integrated into the fingerprint verification system.

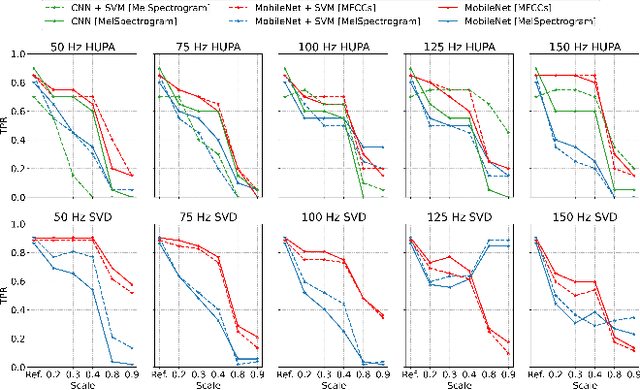

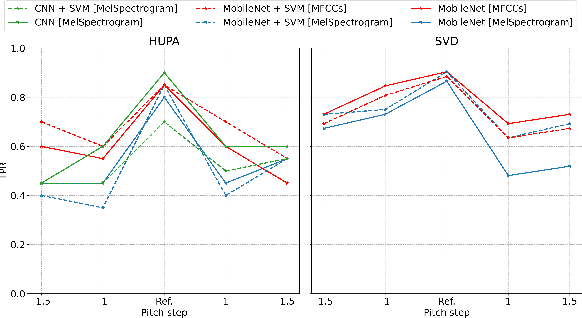

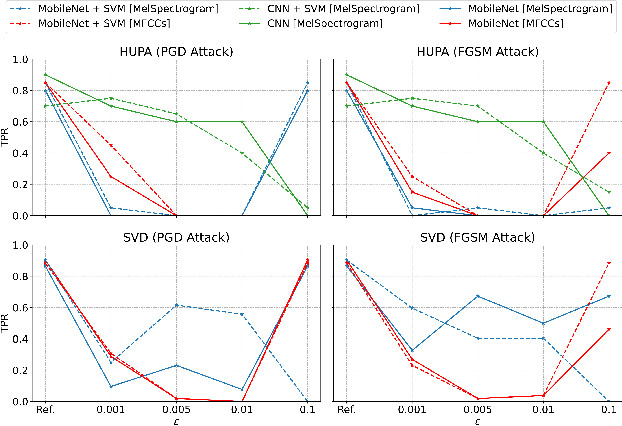

Vulnerabilities in Machine Learning-Based Voice Disorder Detection Systems

Oct 21, 2024

Abstract:The impact of voice disorders is becoming more widely acknowledged as a public health issue. Several machine learning-based classifiers with the potential to identify disorders have been used in recent studies to differentiate between normal and pathological voices and sounds. In this paper, we focus on analyzing the vulnerabilities of these systems by exploring the possibility of attacks that can reverse classification and compromise their reliability. Given the critical nature of personal health information, understanding which types of attacks are effective is a necessary first step toward improving the security of such systems. Starting from the original audio recordings, we implement various attack methods, including adversarial, evasion, and pitching techniques, and evaluate how state-of-the-art disorder detection models respond to them. Our findings identify the most effective attack strategies, underscoring the need to address these vulnerabilities in machine-learning systems used in the healthcare domain.

Deepfake Media Forensics: State of the Art and Challenges Ahead

Aug 01, 2024

Abstract:AI-generated synthetic media, also called Deepfakes, have significantly influenced many domains, from entertainment to cybersecurity. Generative Adversarial Networks (GANs) and Diffusion Models (DMs) are the main frameworks used to create Deepfakes, producing highly realistic yet fabricated content. While these technologies open up new creative possibilities, they also bring substantial ethical and security risks due to their potential misuse. The rise of such advanced media has led to the development of a cognitive bias known as Impostor Bias, where individuals doubt the authenticity of multimedia due to the awareness of AI's capabilities. As a result, Deepfake detection has become a vital area of research, focusing on identifying subtle inconsistencies and artifacts with machine learning techniques, especially Convolutional Neural Networks (CNNs). Research in forensic Deepfake technology encompasses five main areas: detection, attribution and recognition, passive authentication, detection in realistic scenarios, and active authentication. Each area tackles specific challenges, from tracing the origins of synthetic media to examining its inherent characteristics for authenticity. This paper reviews the primary algorithms that address these challenges, examining their advantages, limitations, and future prospects.

LivDet2023 -- Fingerprint Liveness Detection Competition: Advancing Generalization

Sep 27, 2023

Abstract:The International Fingerprint Liveness Detection Competition (LivDet) is a biennial event that invites academic and industry participants to demonstrate their advancements in Fingerprint Presentation Attack Detection (PAD). This edition, LivDet2023, proposed two challenges, Liveness Detection in Action and Fingerprint Representation, to evaluate the efficacy of PAD embedded in verification systems and the effectiveness and compactness of feature sets. A third, hidden challenge is the inclusion of two subsets in the training set whose sensor information is unknown, testing participants' ability to generalize their models. Only bona fide fingerprint samples were provided to participants, and the competition reports and assesses the performance of their algorithms under this limitation in data availability.

Review of the Fingerprint Liveness Detection (LivDet) competition series: from 2009 to 2021

Feb 15, 2022

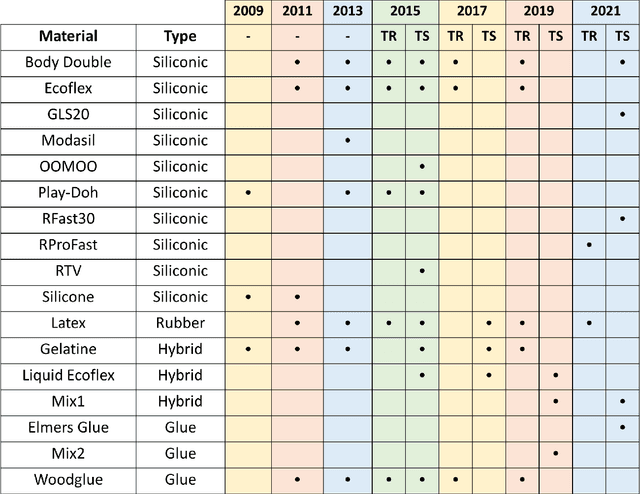

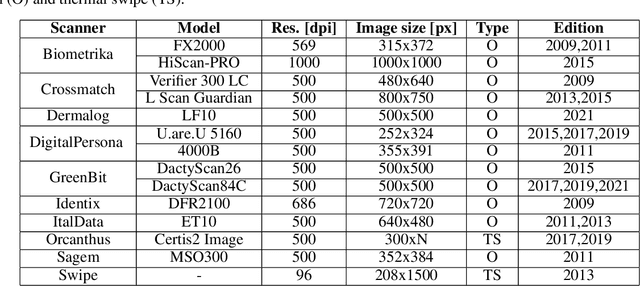

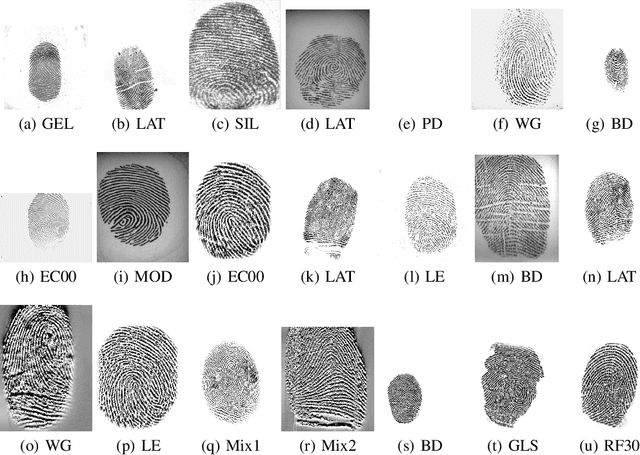

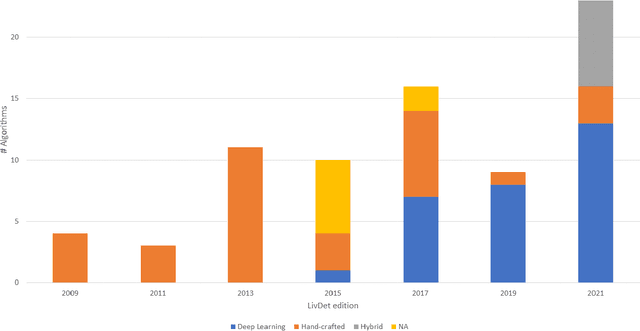

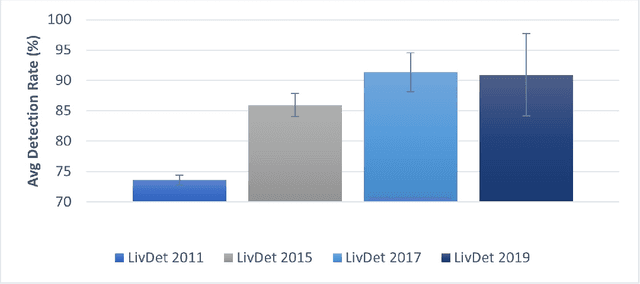

Abstract:Fingerprint authentication systems are highly vulnerable to artificial reproductions of fingerprints, called fingerprint presentation attacks. Detecting presentation attacks is not trivial because attackers refine their replication techniques from year to year. The International Fingerprint Liveness Detection Competition (LivDet), an open and well-acknowledged meeting point for academia and private companies that deal with the problem of presentation attack detection, aims to assess the performance of fingerprint presentation attack detection (FPAD) algorithms by using standard experimental protocols and data sets. Each LivDet edition, held biennially since 2009, is characterized by a different set of challenges with which competitors must deal. The continuous increase in the number of competitors and the noticeable decrease in error rates across competitions demonstrate a growing interest in the topic. This paper reviews the LivDet editions from 2009 to 2021 and points out their evolution over the years.

Fingerprint recognition with embedded presentation attacks detection: are we ready?

Oct 20, 2021

Abstract:The diffusion of fingerprint verification systems for security applications makes it urgent to investigate the embedding of software-based presentation attack detection (PAD) algorithms into such systems. Companies and institutions need to know whether such integration would make the system more "secure" and whether the available technology is ready and, if so, under what operational working conditions. Despite significant improvements, especially through the adoption of deep learning approaches to fingerprint PAD, current research has said little about their effectiveness when embedded in fingerprint verification systems. We believe that the lack of works is explained by the lack of instruments to investigate the problem, that is, to model the cause-effect relationships when two systems, neither of which is error-free, work together. Accordingly, this paper explores the fusion of PAD into verification systems by proposing a novel investigation instrument: a performance simulator based on the probabilistic modeling of the relationships among the Receiver Operating Characteristics (ROC) of the two individual systems when PAD and verification stages are implemented sequentially. This is the most straightforward, flexible, and widespread approach. We carry out simulations on the ROCs of the PAD algorithms submitted to the most recent editions of LivDet (2017-2019), the state-of-the-art NIST Bozorth3 matcher, and the top-level Verifinger 12 matcher. Reported experiments explore significant scenarios to identify the conditions under which fingerprint matching with embedded PAD can improve, rather than degrade, the overall personal verification performance.
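
To make the sequential arrangement concrete, the following is a minimal sketch of how the error rates of a PAD stage and a matcher might combine at a single operating point. It is not the simulator described in the abstract: it assumes, purely for illustration, that the two stages make statistically independent decisions, and the function name `sequential_rates` and the parameter `spoof_match_rate` are hypothetical.

```python
def sequential_rates(bpcer, apcer, fnmr, fmr, spoof_match_rate):
    """Combine one PAD operating point with one matcher operating point.

    A sample is accepted only if the PAD stage labels it bona fide AND
    the matcher then accepts it. Independence between the two decisions
    is an illustrative assumption, not a result from the paper.

    bpcer: fraction of bona fide samples the PAD wrongly rejects
    apcer: fraction of attack samples the PAD wrongly lets through
    fnmr:  fraction of genuine comparisons the matcher wrongly rejects
    fmr:   fraction of zero-effort impostor comparisons wrongly accepted
    spoof_match_rate: (hypothetical name) fraction of spoofs of the
        enrolled finger that the matcher alone would accept
    """
    for rate in (bpcer, apcer, fnmr, fmr, spoof_match_rate):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("all rates must lie in [0, 1]")
    return {
        # Genuine user: must pass the PAD and then match.
        "genuine_accept": (1.0 - bpcer) * (1.0 - fnmr),
        # Zero-effort impostor presenting a live finger.
        "impostor_accept": (1.0 - bpcer) * fmr,
        # Presentation attack: must fool the PAD and then match.
        "attack_accept": apcer * spoof_match_rate,
    }


if __name__ == "__main__":
    combined = sequential_rates(0.1, 0.2, 0.05, 0.01, 0.5)
    for name, value in combined.items():
        print(f"{name}: {value:.4f}")
```

Under these assumptions, adding the PAD stage always lowers the genuine acceptance rate by the factor `1 - bpcer`, which illustrates why an embedded PAD can degrade as well as improve overall verification performance.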

LivDet 2021 Fingerprint Liveness Detection Competition -- Into the unknown

Aug 23, 2021

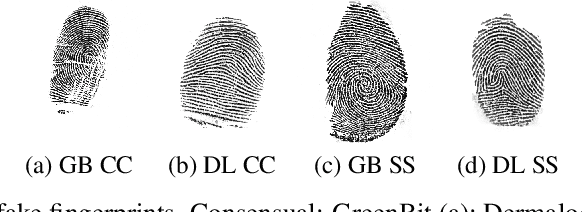

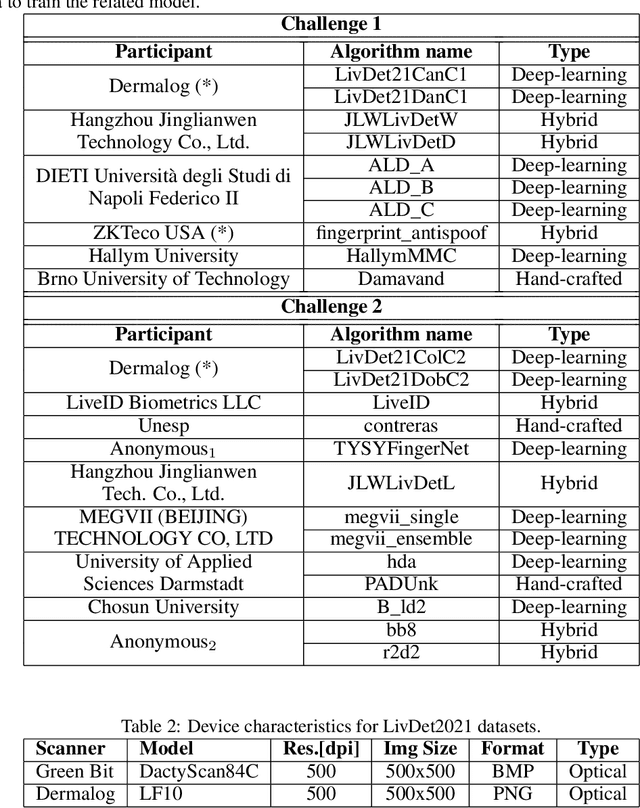

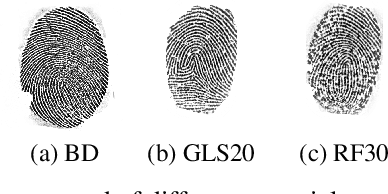

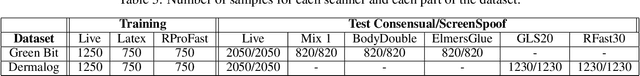

Abstract:The International Fingerprint Liveness Detection Competition is an international biennial competition open to academia and industry that aims to assess and report advances in Fingerprint Presentation Attack Detection. The proposed "Liveness Detection in Action" and "Fingerprint representation" challenges aimed to evaluate the impact of a PAD embedded into a verification system, and the effectiveness and compactness of feature sets for mobile applications. Furthermore, we experimented with a new spoof fabrication method that particularly affected the final results. Twenty-three algorithms were submitted to the competition, the maximum number ever achieved by LivDet.

* Preprint version of a paper accepted at IJCB 2021

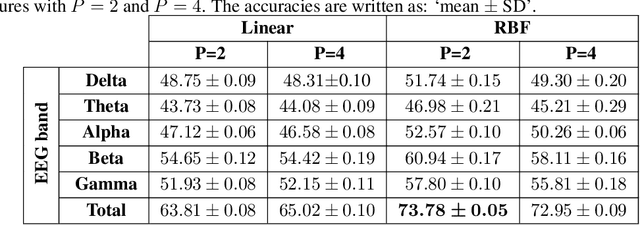

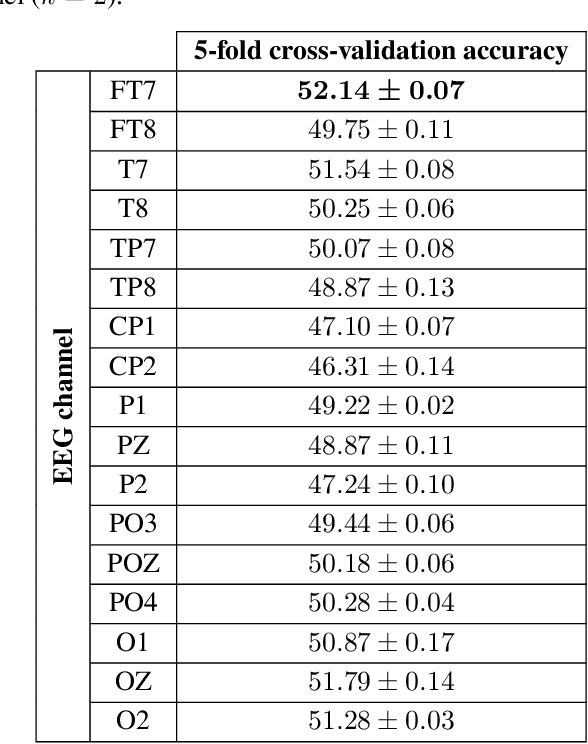

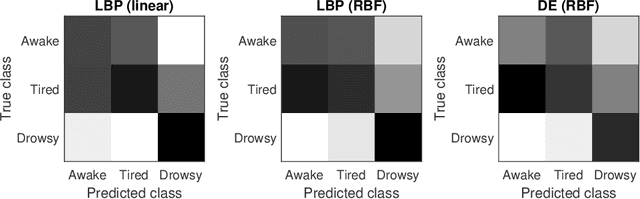

Electroencephalography signal processing based on textural features for monitoring the driver's state by a Brain-Computer Interface

Oct 17, 2020

Abstract:In this study, we investigate a textural processing method for electroencephalography (EEG) signals as an indicator to estimate the driver's vigilance in a hypothetical Brain-Computer Interface (BCI) system. The novelty of the proposed solution lies in employing the one-dimensional Local Binary Pattern (1D-LBP) algorithm for feature extraction from pre-processed EEG data. From the resulting feature vector, the classification is done according to three vigilance classes: awake, tired and drowsy. The claim is that the class transitions can be detected by describing the variations of the micro-patterns' occurrences along the EEG signal. The 1D-LBP is able to describe them by encoding mutual variations of temporally "close" signal samples as a short bit-code. Our analysis allows us to conclude that adopting the 1D-LBP has led to a significant performance improvement. Moreover, capturing the class transitions from the EEG signal is effective, although the overall performance is not yet good enough to develop a BCI for assessing the driver's vigilance in real environments.
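
As an illustration of the short bit-code idea, here is a minimal sketch of a generic 1D-LBP feature extractor. It follows the common formulation (each sample is compared with its neighbours on both sides, and the comparison results form a binary code), not necessarily the exact variant, window size, or pre-processing used in the paper; the function names and the `half_window` parameter are assumptions made for this example.

```python
def lbp_1d_codes(signal, half_window=4):
    """Return one 1D-LBP code per sample that has a full neighbourhood.

    Each centre sample is compared with `half_window` neighbours on each
    side; a neighbour that is >= the centre sets one bit of the code.
    """
    offsets = [o for o in range(-half_window, half_window + 1) if o != 0]
    codes = []
    for i in range(half_window, len(signal) - half_window):
        code = 0
        for bit, offset in enumerate(offsets):
            if signal[i + offset] >= signal[i]:
                code |= 1 << bit
        codes.append(code)
    return codes


def lbp_1d_histogram(signal, half_window=4):
    """Normalised histogram of code occurrences, usable as a feature vector."""
    codes = lbp_1d_codes(signal, half_window)
    if not codes:
        raise ValueError("signal is shorter than the neighbourhood window")
    histogram = [0.0] * (1 << (2 * half_window))
    for code in codes:
        histogram[code] += 1.0 / len(codes)
    return histogram


if __name__ == "__main__":
    # Toy signal standing in for a pre-processed EEG segment.
    toy_segment = [0.1, 0.4, 0.3, 0.9, 0.2, 0.5, 0.7, 0.6, 0.3, 0.8, 0.1]
    features = lbp_1d_histogram(toy_segment, half_window=2)
    print(len(features), "bins, sum =", round(sum(features), 6))
```

The histogram of code occurrences over a signal segment is what a downstream classifier would consume; changes in that histogram from one segment to the next are the kind of micro-pattern variation the abstract refers to.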
