Manuel Mazzara

Rtd.

Dynamic Memory-enhanced Transformer for Hyperspectral Image Classification

Apr 17, 2025

Abstract:Hyperspectral image (HSI) classification remains a challenging task due to the intricate spatial-spectral correlations. Existing transformer models excel in capturing long-range dependencies but often suffer from information redundancy and attention inefficiencies, limiting their ability to model fine-grained relationships crucial for HSI classification. To overcome these limitations, this work proposes MemFormer, a lightweight and memory-enhanced transformer. MemFormer introduces a memory-enhanced multi-head attention mechanism that iteratively refines a dynamic memory module, enhancing feature extraction while reducing redundancy across layers. Additionally, a dynamic memory enrichment strategy progressively captures complex spatial and spectral dependencies, leading to more expressive feature representations. To further improve structural consistency, we incorporate a spatial-spectral positional encoding (SSPE) tailored for HSI data, ensuring continuity without the computational burden of convolution-based approaches. Extensive experiments on benchmark datasets demonstrate that MemFormer achieves superior classification accuracy, outperforming state-of-the-art methods.

EnergyFormer: Energy Attention with Fourier Embedding for Hyperspectral Image Classification

Mar 11, 2025

Abstract:Hyperspectral imaging (HSI) provides rich spectral-spatial information across hundreds of contiguous bands, enabling precise material discrimination in applications such as environmental monitoring, agriculture, and urban analysis. However, the high dimensionality and spectral variability of HSI data pose significant challenges for feature extraction and classification. This paper presents EnergyFormer, a transformer-based framework designed to address these challenges through three key innovations: (1) Multi-Head Energy Attention (MHEA), which optimizes an energy function to selectively enhance critical spectral-spatial features, improving feature discrimination; (2) Fourier Position Embedding (FoPE), which adaptively encodes spectral and spatial dependencies to reinforce long-range interactions; and (3) Enhanced Convolutional Block Attention Module (ECBAM), which selectively amplifies informative wavelength bands and spatial structures, enhancing representation learning. Extensive experiments on the WHU-Hi-HanChuan, Salinas, and Pavia University datasets demonstrate that EnergyFormer achieves exceptional overall accuracies of 99.28%, 98.63%, and 98.72%, respectively, outperforming state-of-the-art CNN, transformer, and Mamba-based models. The source code will be made available at https://github.com/mahmad000.

Hybrid State-Space and GRU-based Graph Tokenization Mamba for Hyperspectral Image Classification

Feb 10, 2025

Abstract:Hyperspectral image (HSI) classification plays a pivotal role in domains such as environmental monitoring, agriculture, and urban planning. However, it faces significant challenges due to the high-dimensional nature of the data and the complex spectral-spatial relationships inherent in HSI. Traditional methods, including conventional machine learning and convolutional neural networks (CNNs), often struggle to effectively capture these intricate spectral-spatial features and global contextual information. Transformer-based models, while powerful in capturing long-range dependencies, often demand substantial computational resources, posing challenges in scenarios where labeled datasets are limited, as is commonly seen in HSI applications. To overcome these challenges, this work proposes GraphMamba, a hybrid model that combines spectral-spatial token generation, graph-based token prioritization, and cross-attention mechanisms. The model introduces a novel hybridization of state-space modeling and Gated Recurrent Units (GRU), capturing both linear and nonlinear spatial-spectral dynamics. GraphMamba enhances the ability to model complex spatial-spectral relationships while maintaining scalability and computational efficiency across diverse HSI datasets. Through comprehensive experiments, we demonstrate that GraphMamba outperforms existing state-of-the-art models, offering a scalable and robust solution for complex HSI classification tasks.

DiffFormer: a Differential Spatial-Spectral Transformer for Hyperspectral Image Classification

Dec 23, 2024

Abstract:Hyperspectral image classification (HSIC) has gained significant attention because of its potential in analyzing high-dimensional data with rich spectral and spatial information. In this work, we propose the Differential Spatial-Spectral Transformer (DiffFormer), a novel framework designed to address the inherent challenges of HSIC, such as spectral redundancy and spatial discontinuity. DiffFormer leverages a Differential Multi-Head Self-Attention (DMHSA) mechanism, which enhances local feature discrimination by introducing differential attention to accentuate subtle variations across neighboring spectral-spatial patches. The architecture integrates Spectral-Spatial Tokenization through three-dimensional (3D) convolution-based patch embeddings, positional encoding, and a stack of transformer layers equipped with the SwiGLU activation function for efficient feature extraction (SwiGLU is a variant of the Gated Linear Unit (GLU) activation function). A token-based classification head further ensures robust representation learning, enabling precise labeling of hyperspectral pixels. Extensive experiments on benchmark hyperspectral datasets demonstrate the superiority of DiffFormer in terms of classification accuracy, computational efficiency, and generalizability, compared to existing state-of-the-art (SOTA) methods. In addition, this work provides a detailed analysis of computational complexity, showcasing the scalability of the model for large-scale remote sensing applications. The source code will be made available at https://github.com/mahmad000/DiffFormer after the first round of revision.
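
For readers unfamiliar with the SwiGLU activation mentioned in the abstract above, the following is a minimal NumPy sketch of a SwiGLU feed-forward block in its commonly cited form, (Swish(xW) ⊙ xV)W2. It is an illustration only and not DiffFormer's implementation: the function names `swish` and `swiglu_ffn`, the weight names, and the layer sizes are all assumptions, and bias terms are omitted.

```python
import numpy as np


def swish(x):
    # Swish (SiLU) activation: x * sigmoid(x), written as x / (1 + exp(-x)).
    return x / (1.0 + np.exp(-x))


def swiglu_ffn(x, w_gate, w_value, w_out):
    # Gated feed-forward block: the Swish-activated "gate" projection
    # multiplies the linear "value" projection element-wise, and the
    # result is projected back to the model dimension.
    gate = swish(x @ w_gate)
    value = x @ w_value
    return (gate * value) @ w_out


# Hypothetical sizes: 4 tokens, model dimension 8, hidden dimension 16.
rng = np.random.default_rng(0)
tokens = rng.standard_normal((4, 8))
w_gate = rng.standard_normal((8, 16))
w_value = rng.standard_normal((8, 16))
w_out = rng.standard_normal((16, 8))
output = swiglu_ffn(tokens, w_gate, w_value, w_out)
```

The output keeps the input's token count and model dimension, so a block like this can stand in for the usual two-layer feed-forward sublayer of a transformer.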

Spectral-Spatial Transformer with Active Transfer Learning for Hyperspectral Image Classification

Nov 27, 2024

Abstract:The classification of hyperspectral images (HSI) is a challenging task due to the high spectral dimensionality and limited labeled data typically available for training. In this study, we propose a novel multi-stage active transfer learning (ATL) framework that integrates a Spatial-Spectral Transformer (SST) with an active learning process for efficient HSI classification. Our approach leverages a pre-trained (initially trained) SST model, fine-tuned iteratively on newly acquired labeled samples using an uncertainty-diversity (Spatial-Spectral Neighborhood Diversity) querying mechanism. This mechanism identifies the most informative and diverse samples, thereby optimizing the transfer learning process to reduce both labeling costs and model uncertainty. We further introduce a dynamic freezing strategy, selectively freezing layers of the SST model to minimize computational overhead while maintaining adaptability to spectral variations in new data. One of the key innovations in our work is the self-calibration of spectral and spatial attention weights, achieved through uncertainty-guided active learning. This not only enhances the model's robustness in handling dynamic and disjoint spectral profiles but also improves generalization across multiple HSI datasets. Additionally, we present a diversity-promoting sampling strategy that ensures the selected samples span distinct spectral regions, preventing overfitting to particular spectral classes. Experiments on benchmark HSI datasets demonstrate that the SST-ATL framework significantly outperforms existing CNN and SST-based methods, offering superior accuracy, efficiency, and computational performance. The source code can be accessed at https://github.com/mahmad000/ATL-SST.

A Comprehensive Survey for Hyperspectral Image Classification: The Evolution from Conventional to Transformers

May 09, 2024

Abstract:Hyperspectral Image Classification (HSC) is a challenging task due to the high dimensionality and complex nature of Hyperspectral (HS) data. Traditional Machine Learning approaches, while effective, face challenges in real-world data due to varying optimal feature sets, subjectivity in human-driven design, biases, and limitations. Traditional approaches encounter the curse of dimensionality, struggle with feature selection and extraction, lack spatial information consideration, exhibit limited robustness to noise, face scalability issues, and may not adapt well to complex data distributions. In recent years, Deep Learning (DL) techniques have emerged as powerful tools for addressing these challenges. This survey provides a comprehensive overview of the current trends and future prospects in HSC, focusing on the advancements from DL models to the emerging use of Transformers. We review the key concepts, methodologies, and state-of-the-art approaches in DL for HSC. We explore the potential of Transformer-based models in HSC, outlining their benefits and challenges. We also delve into emerging trends in HSC, with thorough discussions of Explainable AI and Interoperability concepts along with Diffusion Models (image denoising, feature extraction, and image fusion). Lastly, we address several open challenges and research questions pertinent to HSC. Comprehensive experiments have been conducted on three HS datasets to verify the efficacy of various conventional DL models and Transformers. Finally, we outline future research directions and potential applications that can further enhance the accuracy and efficiency of HSC. The source code is available at https://github.com/mahmad00/Conventional-to-Transformer-for-Hyperspectral-Image-Classification-Survey-2024.

Quranic Audio Dataset: Crowdsourced and Labeled Recitation from Non-Arabic Speakers

May 04, 2024

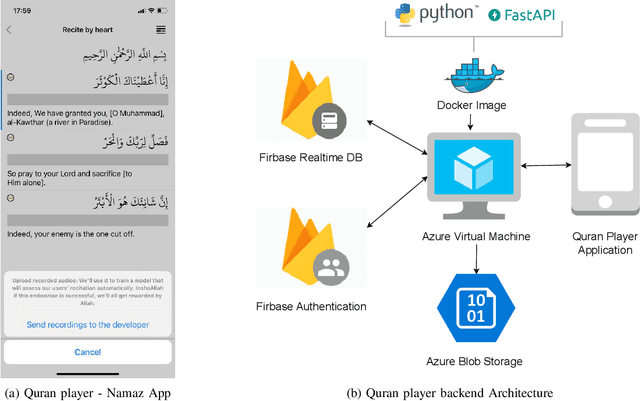

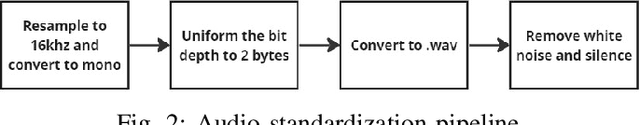

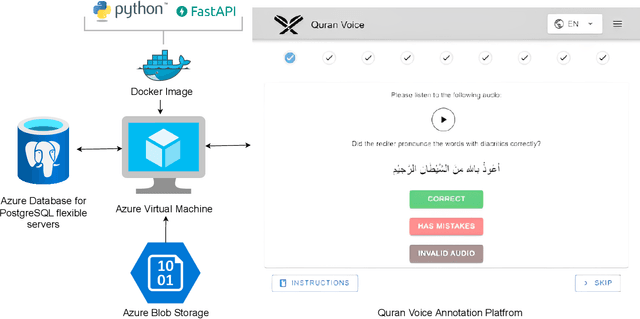

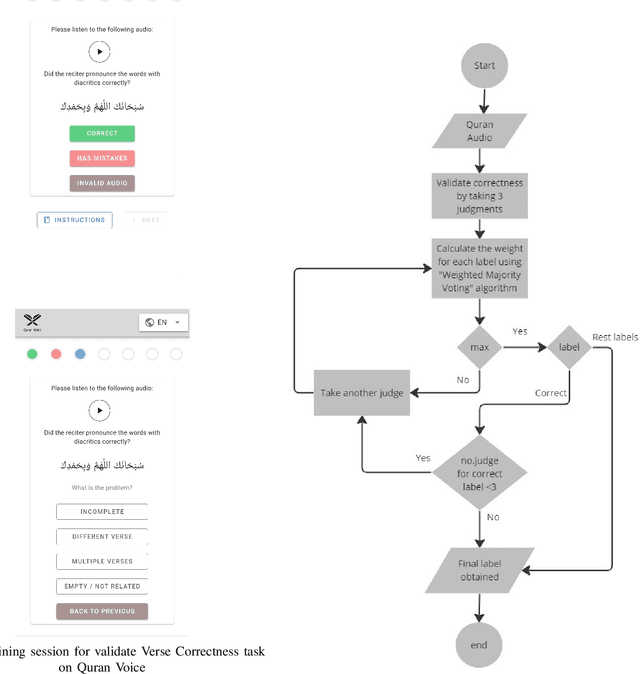

Abstract:This paper addresses the challenge of learning to recite the Quran for non-Arabic speakers. We explore the possibility of crowdsourcing a carefully annotated Quranic dataset, on top of which AI models can be built to simplify the learning process. In particular, we use the volunteer-based crowdsourcing genre and implement a crowdsourcing API to gather audio assets. We integrated the API into an existing mobile application called NamazApp to collect audio recitations. We developed a crowdsourcing platform called Quran Voice for annotating the gathered audio assets. As a result, we have collected around 7000 Quranic recitations from a pool of 1287 participants across more than 11 non-Arabic countries, and we have annotated 1166 recitations from the dataset in six categories. We have achieved a crowd accuracy of 0.77, an inter-rater agreement of 0.63 between the annotators, and 0.89 between the labels assigned by the algorithm and the expert judgments.

Transformers Fusion across Disjoint Samples for Hyperspectral Image Classification

May 02, 2024

Abstract:The 3D Swin Transformer (3D-ST), known for its hierarchical attention and window-based processing, excels in capturing intricate spatial relationships within images. The Spatial-spectral Transformer (SST), meanwhile, specializes in modeling long-range dependencies through self-attention mechanisms. Therefore, this paper introduces a novel method: an attentional fusion of these two transformers to significantly enhance the classification performance of Hyperspectral Images (HSIs). What sets this approach apart is its emphasis on the integration of attentional mechanisms from both architectures. This integration not only refines the modeling of spatial and spectral information but also contributes to achieving more precise and accurate classification results. The experimentation and evaluation on benchmark HSI datasets underscore the importance of employing disjoint training, validation, and test samples. The results demonstrate the effectiveness of the fusion approach, showcasing its superiority over traditional methods and individual transformers. Incorporating disjoint samples enhances the robustness and reliability of the proposed methodology, emphasizing its potential for advancing hyperspectral image classification.

Importance of Disjoint Sampling in Conventional and Transformer Models for Hyperspectral Image Classification

Apr 23, 2024

Abstract:Disjoint sampling is critical for rigorous and unbiased evaluation of state-of-the-art (SOTA) models. When training, validation, and test sets overlap or share data, it introduces a bias that inflates performance metrics and prevents accurate assessment of a model's true ability to generalize to new examples. This paper presents an innovative disjoint sampling approach for training SOTA models on Hyperspectral image classification (HSIC) tasks. By separating training, validation, and test data without overlap, the proposed method facilitates a fairer evaluation of how well a model can classify pixels it was not exposed to during training or validation. Experiments demonstrate the approach significantly improves a model's generalization compared to alternatives that include training and validation data in test data. By eliminating data leakage between sets, disjoint sampling provides reliable metrics for benchmarking progress in HSIC. Researchers can have confidence that reported performance truly reflects a model's capabilities for classifying new scenes, not just memorized pixels. This rigorous methodology is critical for advancing SOTA models and their real-world application to large-scale land mapping with Hyperspectral sensors. The source code is available at https://github.com/mahmad00/Disjoint-Sampling-for-Hyperspectral-Image-Classification.
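
The idea of non-overlapping training, validation, and test sets described in the abstract above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's actual procedure (see the linked repository for that): the function name `disjoint_split`, the convention that label 0 marks unlabeled background, and the split fractions are all hypothetical, and the split here is a plain random partition rather than a per-class one.

```python
import numpy as np


def disjoint_split(labels, train_frac=0.1, val_frac=0.1, seed=0):
    # Flatten the ground-truth map and keep only labeled pixels
    # (assumption: label 0 means "unlabeled background").
    flat = np.asarray(labels).ravel()
    labeled = np.flatnonzero(flat > 0)

    # Shuffle the labeled pixel indices once, then cut the shuffled
    # array into three consecutive slices. Because each index appears
    # exactly once in the permutation, the slices cannot overlap.
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(labeled)
    n_train = int(round(train_frac * shuffled.size))
    n_val = int(round(val_frac * shuffled.size))

    train_idx = shuffled[:n_train]
    val_idx = shuffled[n_train:n_train + n_val]
    test_idx = shuffled[n_train + n_val:]
    return train_idx, val_idx, test_idx


# Toy 3x4 ground-truth map with 10 labeled pixels and 2 background pixels.
toy_labels = np.array([[0, 1, 1, 2],
                       [2, 2, 0, 3],
                       [3, 3, 1, 1]])
train_idx, val_idx, test_idx = disjoint_split(toy_labels, 0.2, 0.2, seed=42)
```

The returned arrays index into the flattened label map, so patches for each subset would be extracted around those pixel positions. Note that pixel-level disjointness alone does not stop spatial patches around neighboring pixels from sharing content across subsets, which is a separate design question.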

Pyramid Hierarchical Transformer for Hyperspectral Image Classification

Apr 23, 2024

Abstract:The traditional Transformer model encounters challenges with variable-length input sequences, particularly in Hyperspectral Image Classification (HSIC), leading to efficiency and scalability concerns. To overcome this, we propose a pyramid-based hierarchical transformer (PyFormer). This innovative approach organizes input data hierarchically into segments, each representing distinct abstraction levels, thereby enhancing processing efficiency for lengthy sequences. At each level, a dedicated transformer module is applied, effectively capturing both local and global context. Spatial and spectral information flow within the hierarchy facilitates communication and abstraction propagation. Integration of outputs from different levels culminates in the final input representation. Experimental results underscore the superiority of the proposed method over traditional approaches. Additionally, the incorporation of disjoint samples augments robustness and reliability, thereby highlighting the potential of our approach in advancing HSIC. The source code is available at https://github.com/mahmad00/PyFormer.
