Manuel Del Verme

WorkArena: How Capable Are Web Agents at Solving Common Knowledge Work Tasks?

Mar 12, 2024

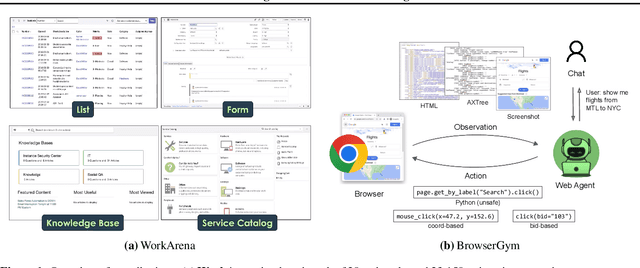

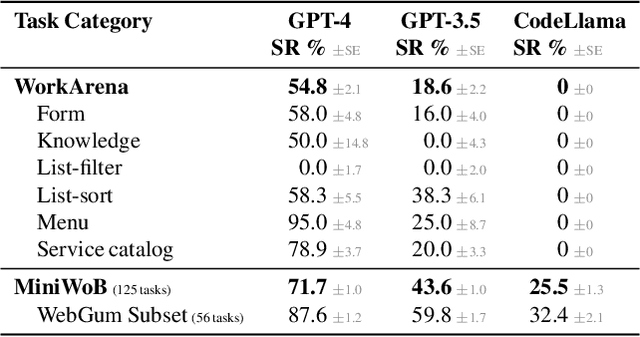

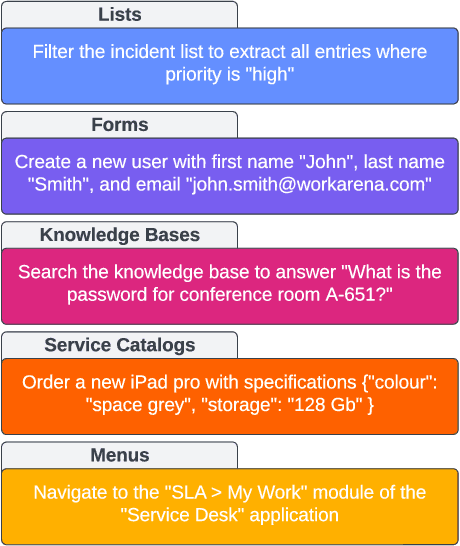

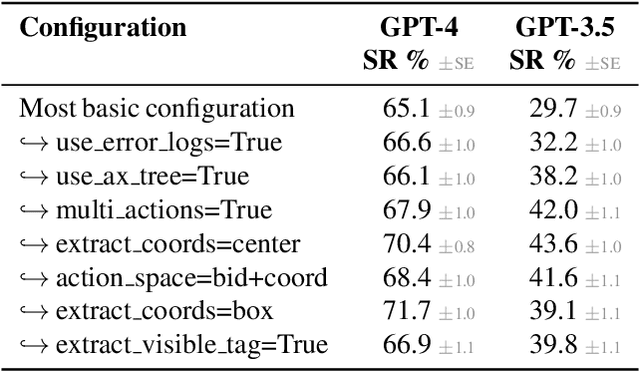

Abstract:We study the use of large language model-based agents for interacting with software via web browsers. Unlike prior work, we focus on measuring the agents' ability to perform tasks that span the typical daily work of knowledge workers utilizing enterprise software systems. To this end, we propose WorkArena, a remote-hosted benchmark of 29 tasks based on the widely-used ServiceNow platform. We also introduce BrowserGym, an environment for the design and evaluation of such agents, offering a rich set of actions as well as multimodal observations. Our empirical evaluation reveals that while current agents show promise on WorkArena, there remains a considerable gap towards achieving full task automation. Notably, our analysis uncovers a significant performance disparity between open and closed-source LLMs, highlighting a critical area for future exploration and development in the field.

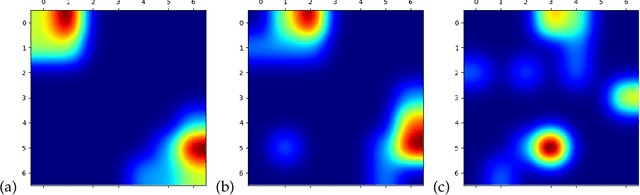

GrowSpace: Learning How to Shape Plants

Oct 15, 2021

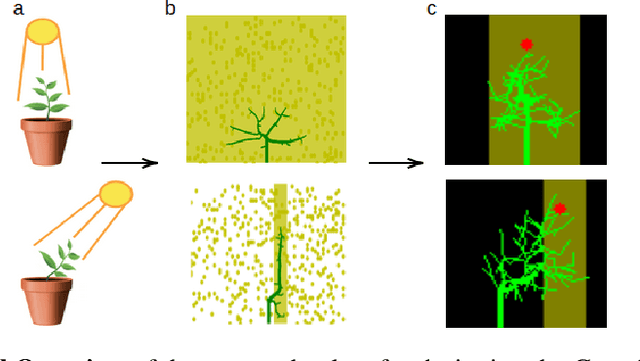

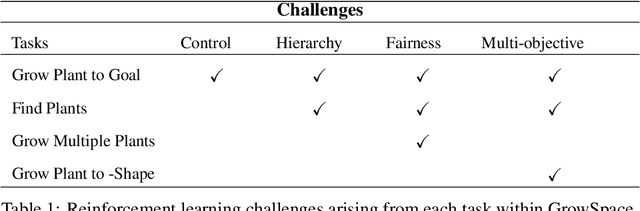

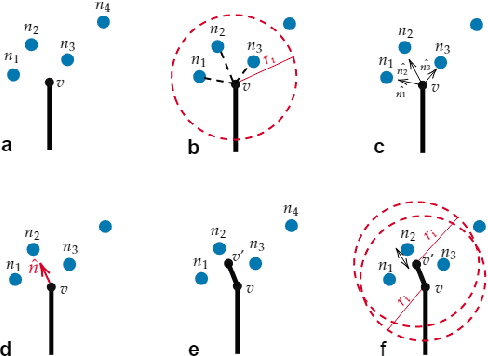

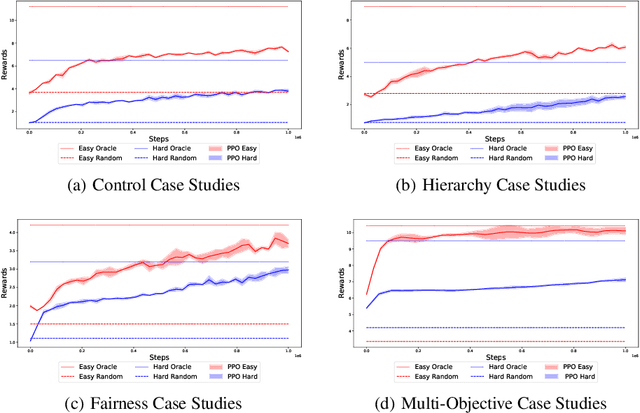

Abstract:Plants are dynamic systems that are integral to our existence and survival. Plants face environment changes and adapt over time to their surrounding conditions. We argue that plant responses to an environmental stimulus are a good example of a real-world problem that can be approached within a reinforcement learning (RL)framework. With the objective of controlling a plant by moving the light source, we propose GrowSpace, as a new RL benchmark. The back-end of the simulator is implemented using the Space Colonisation Algorithm, a plant growing model based on competition for space. Compared to video game RL environments, this simulator addresses a real-world problem and serves as a test bed to visualize plant growth and movement in a faster way than physical experiments. GrowSpace is composed of a suite of challenges that tackle several problems such as control, multi-stage learning,fairness and multi-objective learning. We provide agent baselines alongside case studies to demonstrate the difficulty of the proposed benchmark.

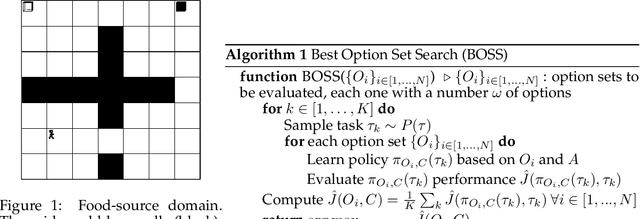

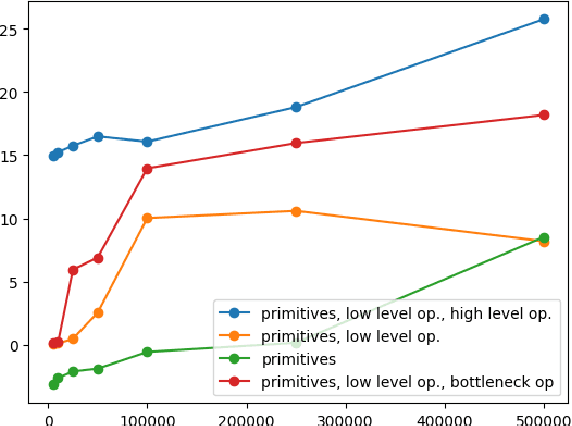

Optimal Options for Multi-Task Reinforcement Learning Under Time Constraints

Jan 06, 2020

Abstract:Reinforcement learning can greatly benefit from the use of options as a way of encoding recurring behaviours and to foster exploration. An important open problem is how can an agent autonomously learn useful options when solving particular distributions of related tasks. We investigate some of the conditions that influence optimality of options, in settings where agents have a limited time budget for learning each task and the task distribution might involve problems with different levels of similarity. We directly search for optimal option sets and show that the discovered options significantly differ depending on factors such as the available learning time budget and that the found options outperform popular option-generation heuristics.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge