Manolis Chiou

An Exploratory Study on Human-Robot Interaction using Semantics-based Situational Awareness

Jul 23, 2025

Abstract:In this paper, we investigate the impact of high-level semantics (evaluation of the environment) on Human-Robot Teams (HRT) and Human-Robot Interaction (HRI) in the context of mobile robot deployments. Although semantics has been widely researched in AI, how high-level semantics can benefit the HRT paradigm is underexplored, often fuzzy, and intractable. We applied a semantics-based framework that could reveal different indicators of the environment (i.e. how much semantic information exists) in a mock-up disaster response mission. In such missions, semantics are crucial as the HRT should handle complex situations and respond quickly with correct decisions, where humans might have a high workload and stress. Especially when human operators need to shift their attention between robots and other tasks, they will struggle to build Situational Awareness (SA) quickly. The experiment suggests that the presented semantics: 1) alleviate the perceived workload of human operators; 2) increase the operator's trust in the SA; and 3) help to reduce the reaction time in switching the level of autonomy when needed. Additionally, we find that participants with higher trust in the system are encouraged by high-level semantics to use teleoperation mode more.

A Framework for Semantics-based Situational Awareness during Mobile Robot Deployments

Feb 19, 2025

Abstract:Deployment of robots into hazardous environments typically involves a "Human-Robot Teaming" (HRT) paradigm, in which a human supervisor interacts with a remotely operating robot inside the hazardous zone. Situational Awareness (SA) is vital for enabling HRT, to support navigation, planning, and decision-making. This paper explores issues of higher-level "semantic" information and understanding in SA. In semi-autonomous, or variable-autonomy paradigms, different types of semantic information may be important, in different ways, for both the human operator and an autonomous agent controlling the robot. We propose a generalizable framework for acquiring and combining multiple modalities of semantic-level SA during remote deployments of mobile robots. We demonstrate the framework with an example application of search and rescue (SAR) in disaster response robotics. We propose a set of "environment semantic indicators" that can reflect a variety of different types of semantic information, e.g. indicators of risk, or signs of human activity, as the robot encounters different scenes. Based on these indicators, we propose a metric to describe the overall situation of the environment called "Situational Semantic Richness (SSR)". This metric combines multiple semantic indicators to summarise the overall situation. The SSR indicates if an information-rich and complex situation has been encountered, which may require advanced reasoning for robots and humans and hence the attention of the expert human operator. The framework is tested on a Jackal robot in a mock-up disaster response environment. Experimental results demonstrate that the proposed semantic indicators are sensitive to changes in different modalities of semantic information in different scenes, and the SSR metric reflects overall semantic changes in the situations encountered.

The ATTUNE model for Artificial Trust Towards Human Operators

Nov 29, 2024

Abstract:This paper presents a novel method to quantify Trust in HRI. It proposes an HRI framework for estimating the Robot Trust towards the Human in the context of a narrow and specified task. The framework produces a real-time estimation of an AI agent's Artificial Trust towards a Human partner interacting with a mobile teleoperation robot. The approach for the framework is based on principles drawn from Theory of Mind, including information about the human state, action, and intent. The framework creates the ATTUNE model for Artificial Trust Towards Human Operators. The model uses metrics on the operator's state of attention, navigational intent, actions, and performance to quantify the Trust towards them. The model is tested on a pre-existing dataset that includes recordings (ROSbags) of a human trial in a simulated disaster response scenario. The performance of ATTUNE is evaluated through a qualitative and quantitative analysis. The results of the analyses provide insight into the next stages of the research and help refine the proposed approach.

* Published in IEEE SMC 2024

A Mini-Review on Mobile Manipulators with Variable Autonomy

Aug 20, 2024

Abstract:This paper presents a mini-review of the current state of research in mobile manipulators with variable levels of autonomy, emphasizing their associated challenges and application environments. The need for mobile manipulators in different environments is evident due to the unique challenges and risks each presents. Many systems deployed in these environments are not fully autonomous, requiring human-robot teaming to ensure safe and reliable operations under uncertainties. Through this analysis, we identify gaps and challenges in the literature on Variable Autonomy, including cognitive workload and communication delays, and propose future directions, including whole-body Variable Autonomy for mobile manipulators, virtual reality frameworks, and large language models to reduce operators' complexity and cognitive load in some challenging and uncertain scenarios.

Negotiating Control: Neurosymbolic Variable Autonomy

Jul 23, 2024

Abstract:Variable autonomy equips a system, such as a robot, with mixed initiatives such that it can adjust its independence level based on the task's complexity and the surrounding environment. Variable autonomy solves two main problems in robotic planning: the first is the problem of humans being unable to keep focus in monitoring and intervening during robotic tasks without appropriate human factor indicators, and the second is achieving mission success in unforeseen and uncertain environments in the face of static reward structures. An open problem in variable autonomy is developing robust methods to dynamically balance autonomy and human intervention in real-time, ensuring optimal performance and safety in unpredictable and evolving environments. We posit that addressing unpredictable and evolving environments through an addition of rule-based symbolic logic has the potential to make autonomy adjustments more contextually reliable and adding feedback to reinforcement learning through data from mixed-initiative control further increases efficacy and safety of autonomous behaviour.

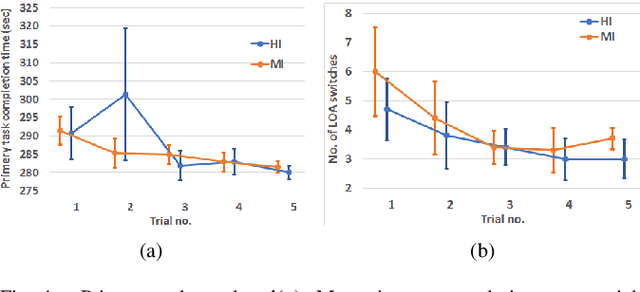

Learning effects in variable autonomy human-robot systems: how much training is enough?

Nov 16, 2023

Abstract:This paper investigates learning effects and human operator training practices in variable autonomy robotic systems. These factors are known to affect performance of a human-robot system and are frequently overlooked. We present the results from an experiment inspired by a search and rescue scenario in which operators remotely controlled a mobile robot with either Human-Initiative (HI) or Mixed-Initiative (MI) control. Evidence suggests learning in terms of primary navigation task and secondary (distractor) task performance. Further evidence is provided that MI and HI performance in a pure navigation task is equal. Lastly, guidelines are proposed for experimental design and operator training practices.

* This paper is a preprint of the paper published at the IEEE International Conference on Systems, Man and Cybernetics (SMC) 2019

A Supervised Machine Learning Approach to Operator Intent Recognition for Teleoperated Mobile Robot Navigation

Apr 27, 2023

Abstract:In applications that involve human-robot interaction (HRI), human-robot teaming (HRT), and cooperative human-machine systems, the inference of the human partner's intent is of critical importance. This paper presents a method for the inference of the human operator's navigational intent, in the context of mobile robots that provide full or partial (e.g., shared control) teleoperation. We propose the Machine Learning Operator Intent Inference (MLOII) method, which a) processes spatial data collected by the robot's sensors; b) utilizes a supervised machine learning algorithm to estimate the operator's most probable navigational goal online. The proposed method's ability to reliably and efficiently infer the intent of the human operator is experimentally evaluated in realistically simulated exploration and remote inspection scenarios. The results in terms of accuracy and uncertainty indicate that the proposed method is comparable to another state-of-the-art method found in the literature.

Robot Health Indicator: A Visual Cue to Improve Level of Autonomy Switching Systems

Mar 12, 2023

Abstract:Using different Levels of Autonomy (LoA), a human operator can vary the extent of control they have over a robot's actions. LoAs enable operators to mitigate a robot's performance degradation or limitations in its autonomous capabilities. However, LoA regulation and other tasks may often overload an operator's cognitive abilities. Inspired by video game user interfaces, we study if adding a 'Robot Health Bar' to the robot control UI can reduce the cognitive demand and perceptual effort required for LoA regulation while promoting trust and transparency. This Health Bar uses the robot vitals and robot health framework to quantify and present runtime performance degradation in robots. Results from our pilot study indicate that when using a health bar, operators used manual control more to minimise the risk of robot failure during high performance degradation. It also gave us insights and lessons to inform subsequent experiments on human-robot teaming.

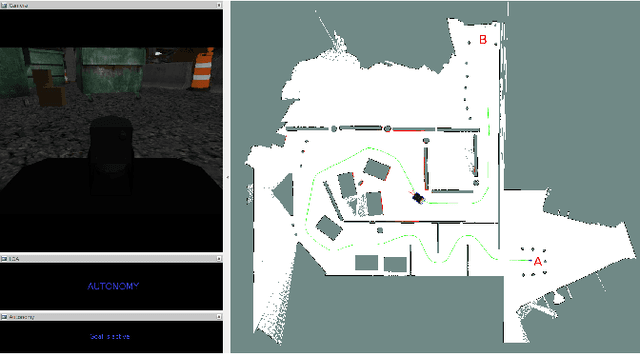

A Hierarchical Variable Autonomy Mixed-Initiative Framework for Human-Robot Teaming in Mobile Robotics

Nov 25, 2022

Abstract:This paper presents a Mixed-Initiative (MI) framework for addressing the problem of control authority transfer between a remote human operator and an AI agent when cooperatively controlling a mobile robot. Our Hierarchical Expert-guided Mixed-Initiative Control Switcher (HierEMICS) leverages information on the human operator's state and intent. The control switching policies are based on a criticality hierarchy. An experimental evaluation was conducted in a high-fidelity simulated disaster response and remote inspection scenario, comparing HierEMICS with a state-of-the-art Expert-guided Mixed-Initiative Control Switcher (EMICS) in the context of mobile robot navigation. Results suggest that HierEMICS reduces conflicts for control between the human and the AI agent, which is a fundamental challenge in both the MI control paradigm and the related shared control paradigm. Additionally, we provide statistically significant evidence of improved navigational safety (i.e., fewer collisions), LoA switching efficiency, and conflict for control reduction.

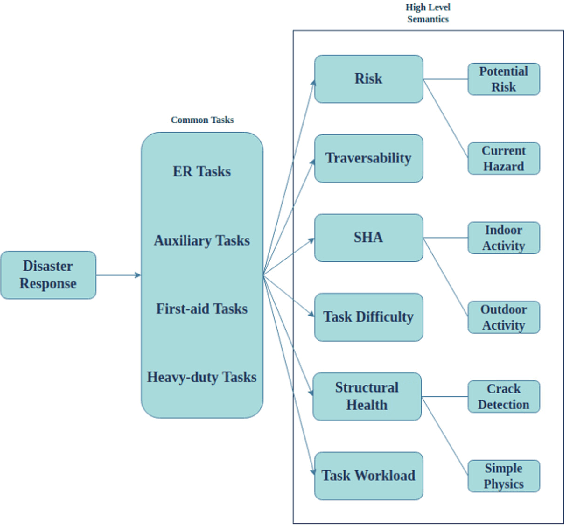

A Taxonomy of Semantic Information in Robot-Assisted Disaster Response

Sep 30, 2022

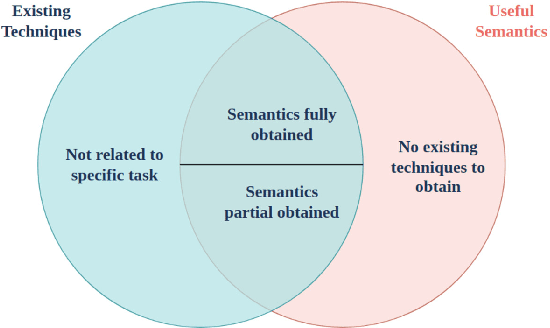

Abstract:This paper proposes a taxonomy of semantic information in robot-assisted disaster response. Robots are increasingly being used in hazardous environment industries and emergency response teams to perform various tasks. Operational decision-making in such applications requires a complex semantic understanding of environments that are remote from the human operator. Low-level sensory data from the robot is transformed into perception and informative cognition. Currently, such cognition is predominantly performed by a human expert, who monitors remote sensor data such as robot video feeds. This engenders a need for AI-generated semantic understanding capabilities on the robot itself. Current work on semantics and AI lies towards the relatively academic end of the research spectrum, hence relatively removed from the practical realities of first responder teams. We aim for this paper to be a step towards bridging this divide. We first review common robot tasks in disaster response and the types of information such robots must collect. We then organize the types of semantic features and understanding that may be useful in disaster operations into a taxonomy of semantic information. We also briefly review the current state-of-the-art semantic understanding techniques. We highlight potential synergies, but we also identify gaps that need to be bridged to apply these ideas. We aim to stimulate the research that is needed to adapt, robustify, and implement state-of-the-art AI semantics methods in the challenging conditions of disasters and first responder scenarios.
