Ludwig Hoegner

Mind the Domain Gap: Measuring the Domain Gap Between Real-World and Synthetic Point Clouds for Automated Driving Development

May 23, 2025

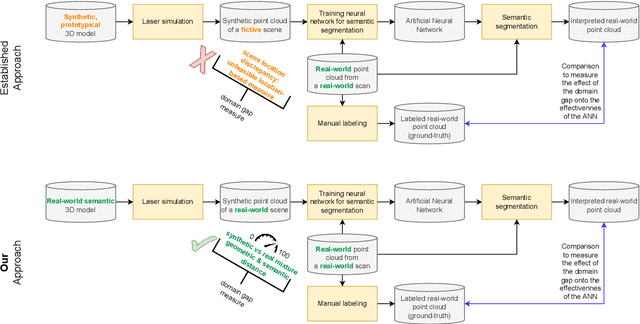

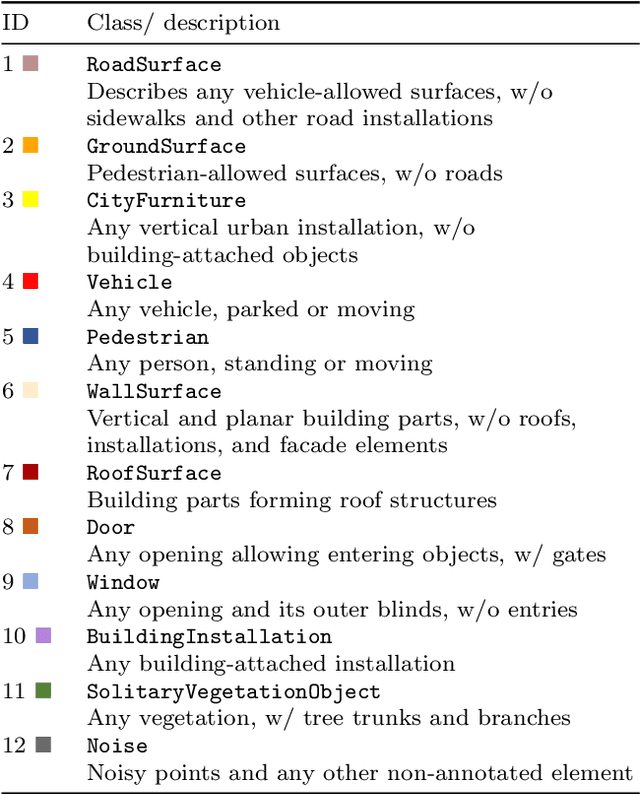

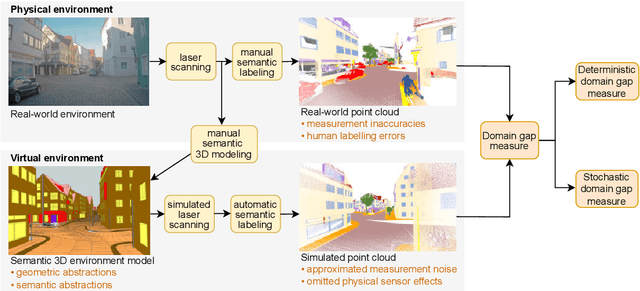

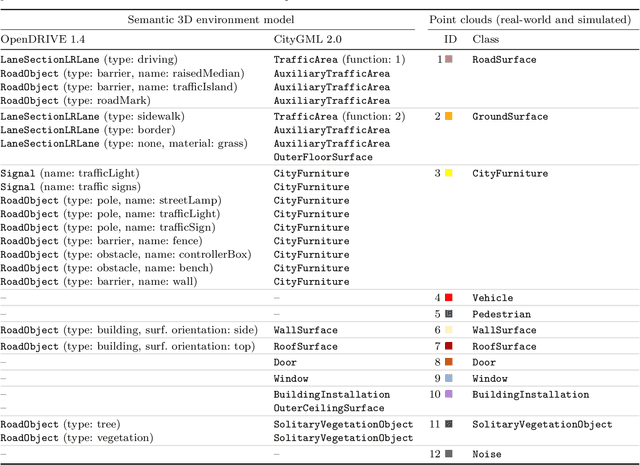

Abstract: Owing to the typical long-tail data distribution issues, simulating domain-gap-free synthetic data is crucial in robotics, photogrammetry, and computer vision research. The fundamental challenge pertains to credibly measuring the difference between real and simulated data. Such a measure is vital for safety-critical applications, such as automated driving, where out-of-domain samples may impact a car's perception and cause fatal accidents. Previous work has commonly focused on simulating data on one scene and analyzing performance on a different, real-world scene, hampering the disjoint analysis of domain gap coming from networks' deficiencies, class definitions, and object representation. In this paper, we propose a novel approach to measuring the domain gap between real-world sensor observations and simulated data representing the same location, enabling comprehensive domain gap analysis. To measure such a domain gap, we introduce a novel metric, DoGSS-PCL, and an evaluation assessing the geometric and semantic quality of the simulated point cloud. Our experiments corroborate that the introduced approach can be used to measure the domain gap. The tests also reveal that synthetic semantic point clouds may be used for training deep neural networks, maintaining the performance at the 50/50 real-to-synthetic ratio. We strongly believe that this work will facilitate research on credible data simulation and allow for at-scale deployment in automated driving testing and digital twinning.

TUM2TWIN: Introducing the Large-Scale Multimodal Urban Digital Twin Benchmark Dataset

May 13, 2025

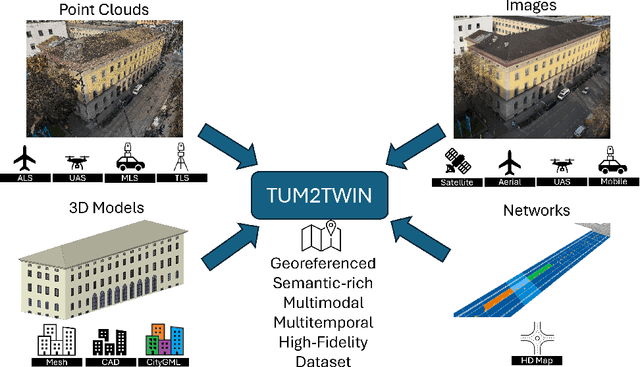

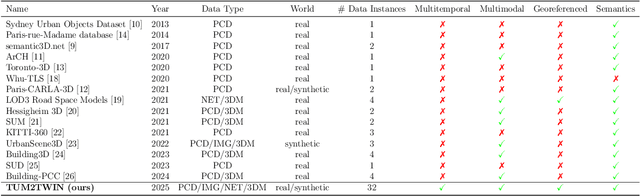

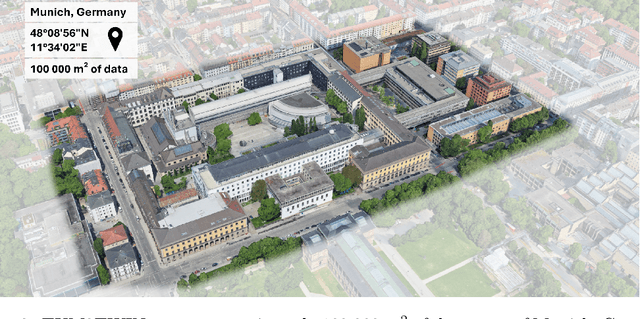

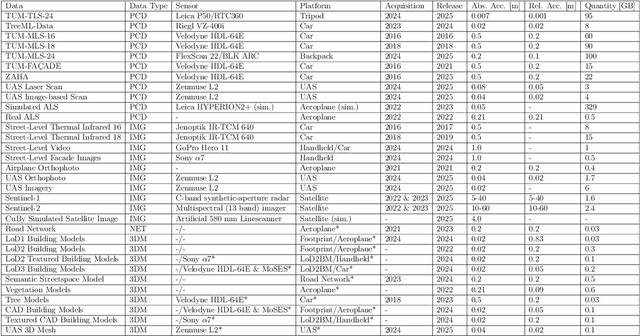

Abstract: Urban Digital Twins (UDTs) have become essential for managing cities and integrating complex, heterogeneous data from diverse sources. Creating UDTs involves challenges at multiple process stages, including acquiring accurate 3D source data, reconstructing high-fidelity 3D models, maintaining models' updates, and ensuring seamless interoperability to downstream tasks. Current datasets are usually limited to one part of the processing chain, hampering comprehensive UDT validation. To address these challenges, we introduce the first comprehensive multimodal Urban Digital Twin benchmark dataset: TUM2TWIN. This dataset includes georeferenced, semantically aligned 3D models and networks along with various terrestrial, mobile, aerial, and satellite observations boasting 32 data subsets over roughly 100,000 $m^2$ and currently 767 GB of data. By ensuring georeferenced indoor-outdoor acquisition, high accuracy, and multimodal data integration, the benchmark supports robust analysis of sensors and the development of advanced reconstruction methods. Additionally, we explore downstream tasks demonstrating the potential of TUM2TWIN, including novel view synthesis of NeRF and Gaussian Splatting, solar potential analysis, point cloud semantic segmentation, and LoD3 building reconstruction. We are convinced this contribution lays a foundation for overcoming current limitations in UDT creation, fostering new research directions and practical solutions for smarter, data-driven urban environments. The project is available under: https://tum2t.win

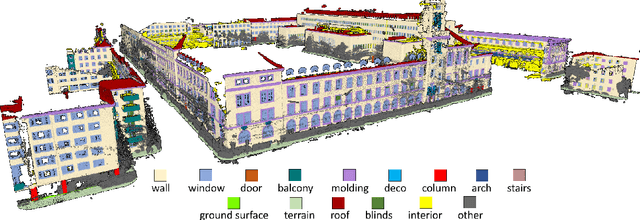

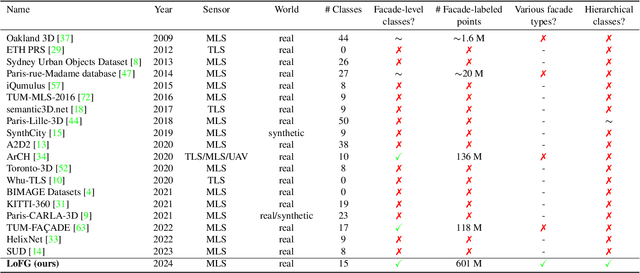

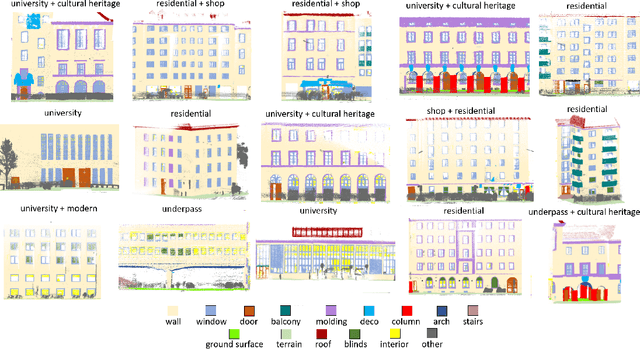

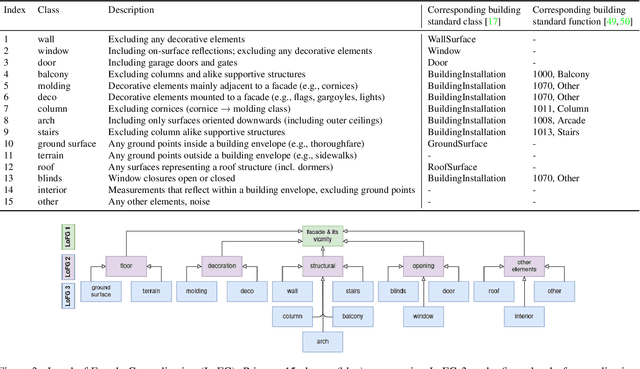

ZAHA: Introducing the Level of Facade Generalization and the Large-Scale Point Cloud Facade Semantic Segmentation Benchmark Dataset

Nov 07, 2024

Abstract: Facade semantic segmentation is a long-standing challenge in photogrammetry and computer vision. Although the last decades have witnessed the influx of facade segmentation methods, there is a lack of comprehensive facade classes and data covering the architectural variability. In ZAHA, we introduce Level of Facade Generalization (LoFG), novel hierarchical facade classes designed based on international urban modeling standards, ensuring compatibility with real-world challenging classes and uniform methods' comparison. Realizing the LoFG, we present to date the largest semantic 3D facade segmentation dataset, providing 601 million annotated points at five and 15 classes of LoFG2 and LoFG3, respectively. Moreover, we analyze the performance of baseline semantic segmentation methods on our introduced LoFG classes and data, complementing it with a discussion on the unresolved challenges for facade segmentation. We firmly believe that ZAHA shall facilitate further development of 3D facade semantic segmentation methods, enabling robust segmentation indispensable in creating urban digital twins.

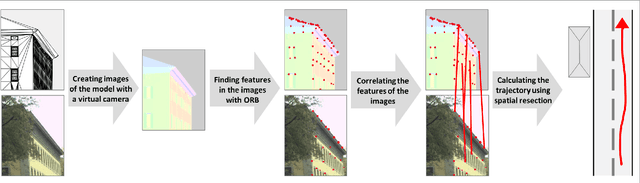

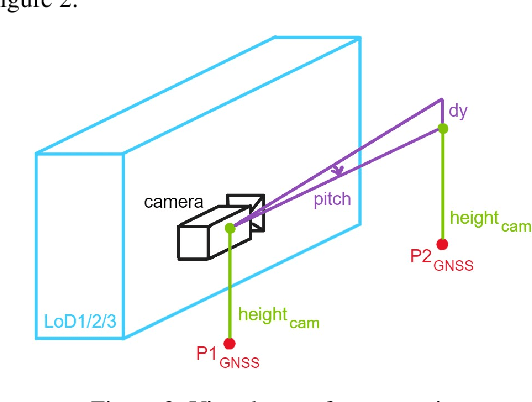

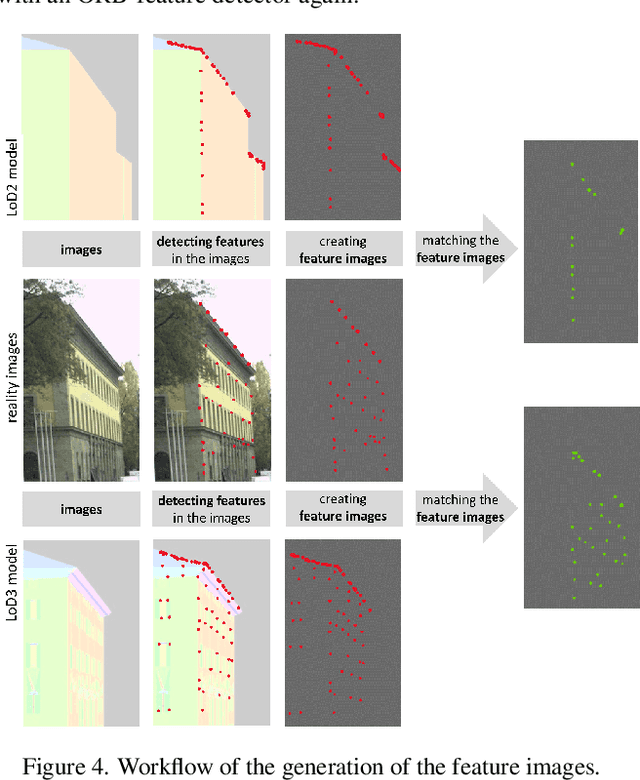

Analyzing the impact of semantic LoD3 building models on image-based vehicle localization

Jul 31, 2024

Abstract: Numerous navigation applications rely on data from global navigation satellite systems (GNSS), even though their accuracy is compromised in urban areas, posing a significant challenge, particularly for precise autonomous car localization. Extensive research has focused on enhancing localization accuracy by integrating various sensor types to address this issue. This paper introduces a novel approach for car localization, leveraging image features that correspond with highly detailed semantic 3D building models. The core concept involves augmenting positioning accuracy by incorporating prior geometric and semantic knowledge into calculations. The work assesses outcomes using Level of Detail 2 (LoD2) and Level of Detail 3 (LoD3) models, analyzing whether facade-enriched models yield superior accuracy. This comprehensive analysis encompasses diverse methods, including off-the-shelf feature matching and deep learning, facilitating thorough discussion. Our experiments corroborate that LoD3 enables detecting up to 69% more features than using LoD2 models. We believe that this study will contribute to the research of enhancing positioning accuracy in GNSS-denied urban canyons. It also shows a practical application of under-explored LoD3 building models on map-based car positioning.

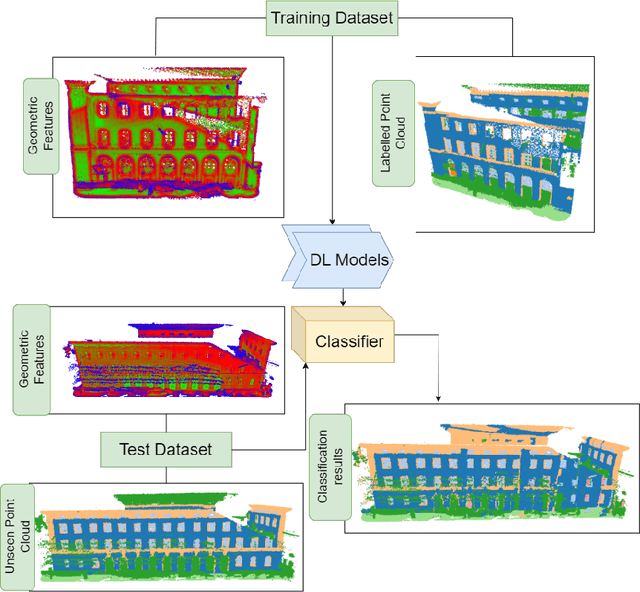

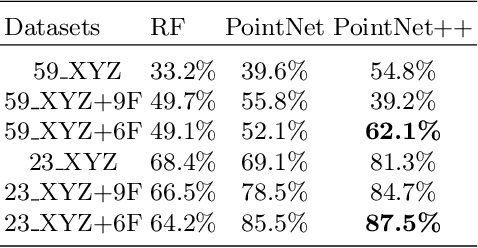

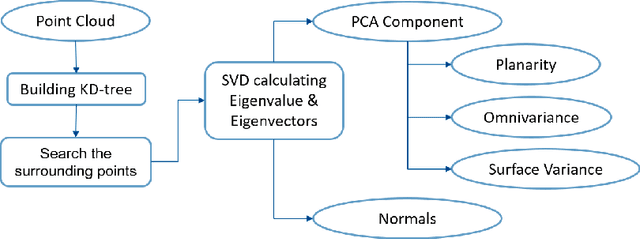

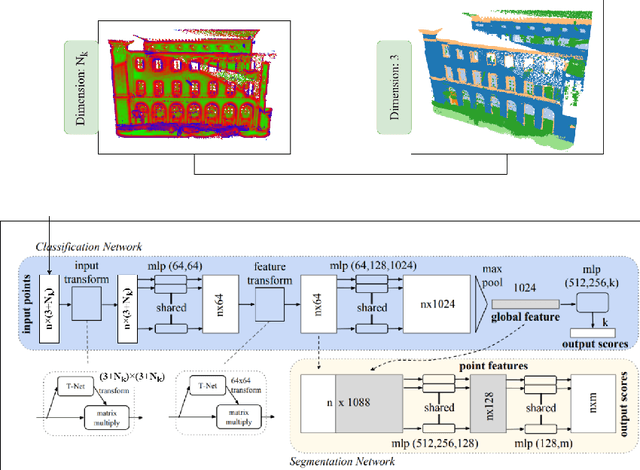

Classifying point clouds at the facade-level using geometric features and deep learning networks

Feb 09, 2024

Abstract: 3D building models with facade details now play an important role in many applications. Classifying point clouds at facade-level is key to creating such digital replicas of the real world. However, few studies have focused on such detailed classification with deep neural networks. We propose a method fusing geometric features with deep learning networks for point cloud classification at facade-level. Our experiments conclude that such early-fused features improve deep learning methods' performance. This method can be applied for compensating deep learning networks' ability in capturing local geometric information and promoting the advancement of semantic segmentation.

Transferring facade labels between point clouds with semantic octrees while considering change detection

Feb 09, 2024

Abstract: Point clouds and high-resolution 3D data have become increasingly important in various fields, including surveying, construction, and virtual reality. However, simply having this data is not enough; to extract useful information, semantic labeling is crucial. In this context, we propose a method to transfer annotations from a labeled to an unlabeled point cloud using an octree structure. The structure also analyses changes between the point clouds. Our experiments confirm that our method effectively transfers annotations while addressing changes. The primary contribution of this project is the development of the method for automatic label transfer between two different point clouds that represent the same real-world object. The proposed method can be of great importance for data-driven deep learning algorithms as it can also allow circumventing stochastic transfer learning by deterministic label transfer between datasets depicting the same objects.

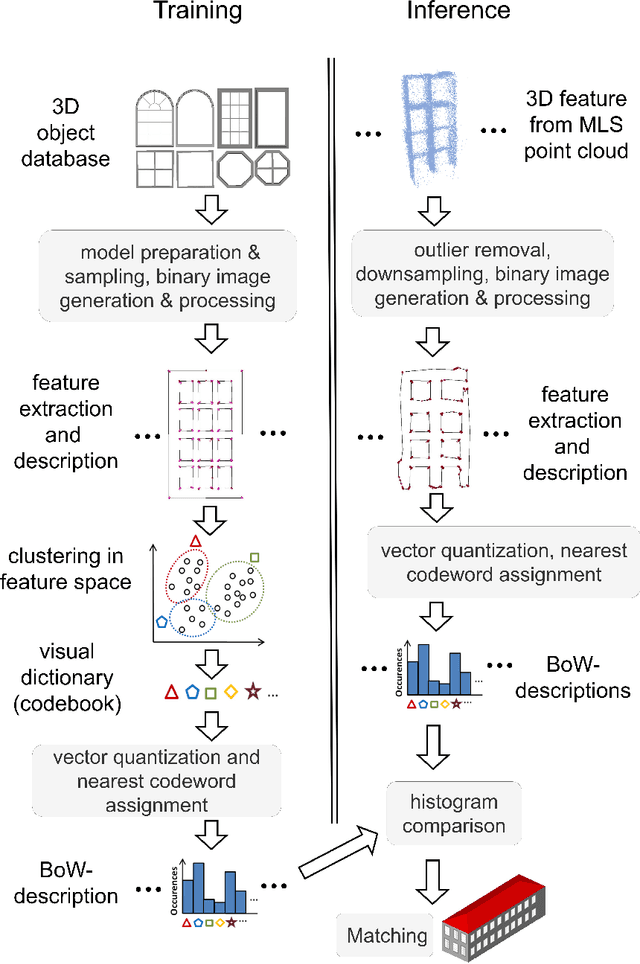

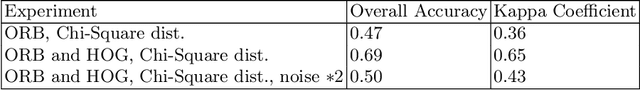

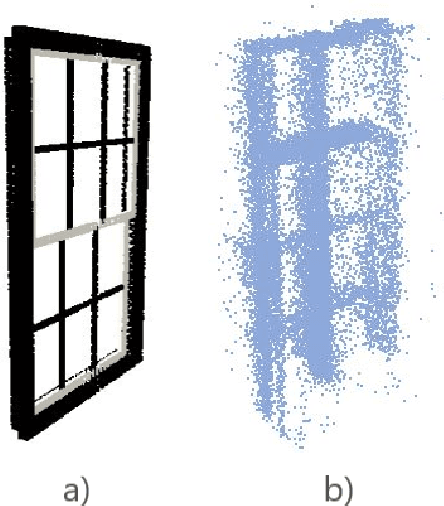

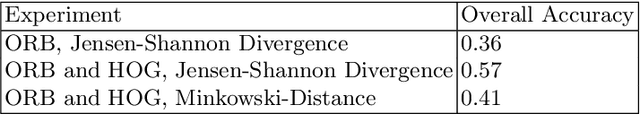

Reconstructing facade details using MLS point clouds and Bag-of-Words approach

Feb 09, 2024

Abstract: In the reconstruction of façade elements, the identification of specific object types remains challenging and is often circumvented by rectangularity assumptions or the use of bounding boxes. We propose a new approach for the reconstruction of 3D façade details. We combine MLS point clouds and a pre-defined 3D model library using a BoW concept, which we augment by incorporating semi-global features. We conduct experiments on the models superimposed with random noise and on the TUM-FAÇADE dataset. Our method demonstrates promising results, improving the conventional BoW approach. It holds the potential to be utilized for more realistic façade reconstruction without rectangularity assumptions, which can be used in applications such as testing automated driving functions or estimating façade solar potential.

MLS2LoD3: Refining low LoDs building models with MLS point clouds to reconstruct semantic LoD3 building models

Feb 09, 2024

Abstract: Although highly-detailed LoD3 building models reveal great potential in various applications, they have yet to be available. The primary challenges in creating such models concern not only automatic detection and reconstruction but also standard-consistent modeling. In this paper, we introduce a novel refinement strategy enabling LoD3 reconstruction by leveraging the ubiquity of lower LoD building models and the accuracy of MLS point clouds. Such a strategy promises at-scale LoD3 reconstruction and unlocks LoD3 applications, which we also describe and illustrate in this paper. Additionally, we present guidelines for reconstructing LoD3 facade elements and their embedding into the CityGML standard model, disseminating gained knowledge to academics and professionals. We believe that our method can foster development of LoD3 reconstruction algorithms and subsequently enable their wider adoption.

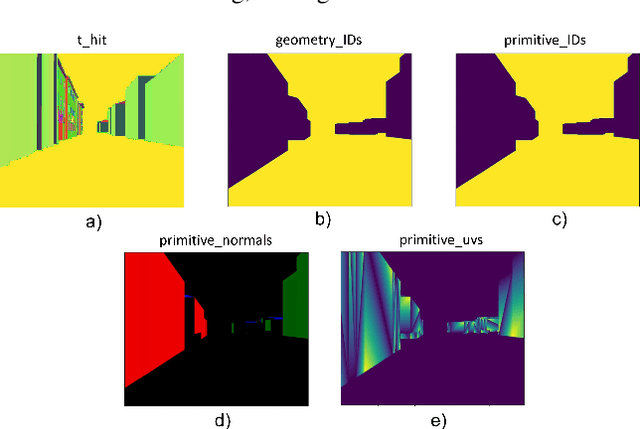

Scan2LoD3: Reconstructing semantic 3D building models at LoD3 using ray casting and Bayesian networks

May 10, 2023

Abstract: Reconstructing semantic 3D building models at the level of detail (LoD) 3 is a long-standing challenge. Unlike mesh-based models, they require watertight geometry and object-wise semantics at the façade level. The principal challenge of such demanding semantic 3D reconstruction is reliable façade-level semantic segmentation of 3D input data. We present a novel method, called Scan2LoD3, that accurately reconstructs semantic LoD3 building models by improving façade-level semantic 3D segmentation. To this end, we leverage laser physics and 3D building model priors to probabilistically identify model conflicts. These probabilistic physical conflicts propose locations of model openings: Their final semantics and shapes are inferred in a Bayesian network fusing multimodal probabilistic maps of conflicts, 3D point clouds, and 2D images. To fulfill demanding LoD3 requirements, we use the estimated shapes to cut openings in 3D building priors and fit semantic 3D objects from a library of façade objects. Extensive experiments on the TUM city campus datasets demonstrate the superior performance of the proposed Scan2LoD3 over the state-of-the-art methods in façade-level detection, semantic segmentation, and LoD3 building model reconstruction. We believe our method can foster the development of probability-driven semantic 3D reconstruction at LoD3 since not only the high-definition reconstruction but also reconstruction confidence becomes pivotal for various applications such as autonomous driving and urban simulations.

TUM-FAÇADE: Reviewing and enriching point cloud benchmarks for façade segmentation

Apr 14, 2023

Abstract: Point clouds are widely regarded as one of the best dataset types for urban mapping purposes. Hence, point cloud datasets are commonly investigated as benchmark types for various urban interpretation methods. Yet, few researchers have addressed the use of point cloud benchmarks for façade segmentation. Robust façade segmentation is becoming a key factor in various applications ranging from simulating autonomous driving functions to preserving cultural heritage. In this work, we present a method of enriching existing point cloud datasets with façade-related classes that have been designed to facilitate façade segmentation testing. We propose how to efficiently extend existing datasets and comprehensively assess their potential for façade segmentation. We use the method to create the TUM-FAÇADE dataset, which extends the capabilities of TUM-MLS-2016. Not only can TUM-FAÇADE facilitate the development of point-cloud-based façade segmentation tasks, but our procedure can also be applied to enrich further datasets.

* 3D-ARCH 2022, Mantova, Italy, 2022, ISPRS conference
