Ludovica Pannitto

Constraining constructions with WordNet: pros and cons for the semantic annotation of fillers in the Italian Constructicon

Jan 10, 2025

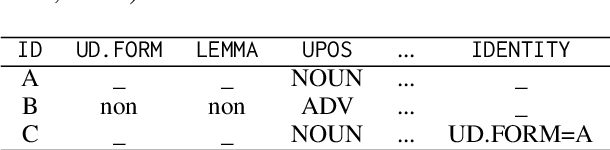

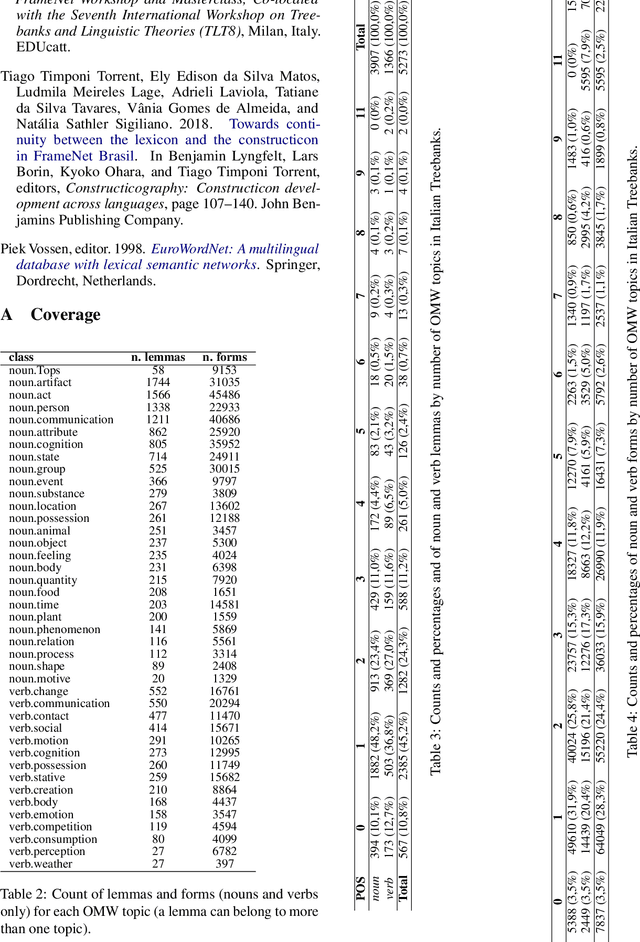

Abstract: The paper discusses the role of WordNet-based semantic classification in the formalization of constructions, and more specifically in the semantic annotation of schematic fillers, in the Italian Constructicon. We outline how the Italian Constructicon project uses Open Multilingual WordNet topics to represent semantic features and constraints of constructions.

Annotating Constructions with UD: the experience of the Italian Constructicon

Nov 12, 2024

Abstract: The paper describes a first attempt at linking the Italian Constructicon to UD resources.

The KIPARLA Forest treebank of spoken Italian: an overview of initial design choices

Nov 10, 2024

Abstract: The paper presents an overview of initial design choices discussed towards the creation of a treebank for the Italian KIParla corpus.

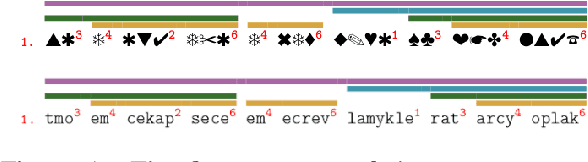

Did somebody say "Gest-IT"? A pilot exploration of multimodal data management

Oct 21, 2024

Abstract: The paper presents a pilot exploration of the construction, management and analysis of a multimodal corpus. Through a three-layer annotation that provides orthographic, prosodic, and gestural transcriptions, the Gest-IT resource makes it possible to investigate the variation of gesture-making patterns in conversations between sighted people and people with visual impairment. After discussing the transcription methods and technical procedures employed in our study, we propose a unified CoNLL-U corpus and indicate our future steps.

Towards the first UD Treebank of Spoken Italian: the KIParla forest

Oct 06, 2024

Abstract: The present project endeavors to enrich the linguistic resources available for Italian by constructing a Universal Dependencies treebank for the KIParla corpus (Mauri et al., 2019; Ballarè et al., 2020), an existing and well-known resource for spoken Italian.

CALaMo: a Constructionist Assessment of Language Models

Feb 07, 2023

Abstract: This paper presents a novel framework for evaluating Neural Language Models' linguistic abilities using a constructionist approach. Not only is the usage-based model in line with the underlying stochastic philosophy of neural architectures, but it also allows the linguist to keep meaning as a determinant factor in the analysis. We outline the framework and present two possible scenarios for its application.

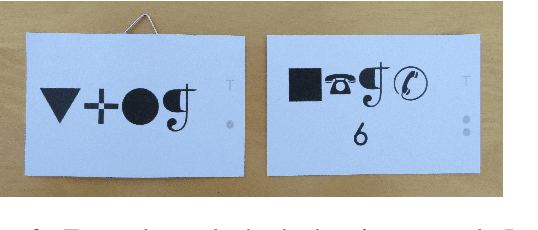

A dissemination workshop for introducing young Italian students to NLP

May 14, 2021

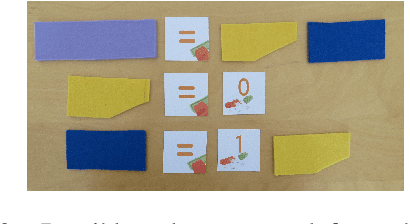

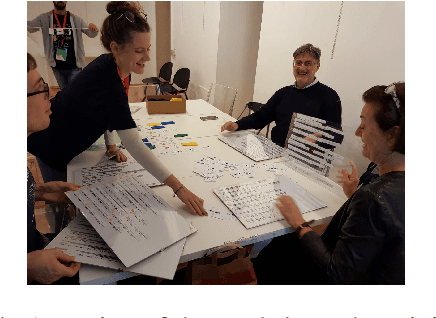

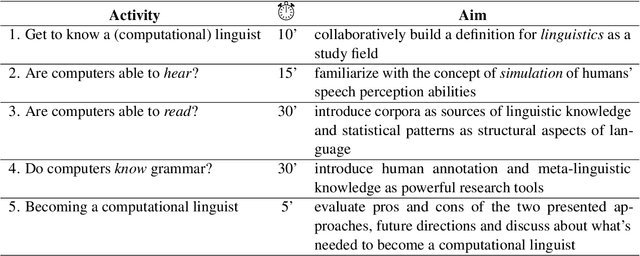

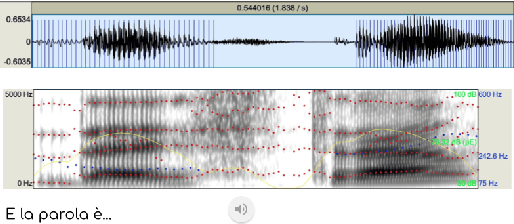

Abstract: We describe and make available the game-based material developed for a laboratory run at several Italian science festivals to popularize NLP among young students.

Teaching NLP with Bracelets and Restaurant Menus: An Interactive Workshop for Italian Students

May 14, 2021

Abstract: Although Natural Language Processing (NLP) is at the core of many tools young people use in their everyday life, high school curricula (in Italy) do not include any computational linguistics education. This lack of exposure makes the use of such tools less responsible than it could be and makes choosing computational linguistics as a university degree unlikely. To raise awareness, curiosity, and longer-term interest in young people, we have developed an interactive workshop designed to illustrate the basic principles of NLP and computational linguistics to Italian high school students aged between 13 and 18 years. The workshop takes the form of a game in which participants play the role of machines needing to solve some of the most common problems a computer faces in understanding language: from voice recognition to Markov chains to syntactic parsing. Participants are guided through the workshop with the help of instructors, who present the activities and explain core concepts from computational linguistics. The workshop was presented at numerous outlets in Italy between 2019 and 2021, both face-to-face and online.

Recurrent babbling: evaluating the acquisition of grammar from limited input data

Oct 09, 2020

Abstract: Recurrent Neural Networks (RNNs) have been shown to capture various aspects of syntax from raw linguistic input. In most previous experiments, however, learning happens over unrealistic corpora, which do not reflect the type and amount of data a child would be exposed to. This paper remedies this state of affairs by training a Long Short-Term Memory network (LSTM) over a realistically sized subset of child-directed input. The behaviour of the network is analysed over time using a novel methodology which consists in quantifying the level of grammatical abstraction in the model's generated output (its "babbling"), compared to the language it has been exposed to. We show that the LSTM indeed abstracts new structures as learning proceeds.

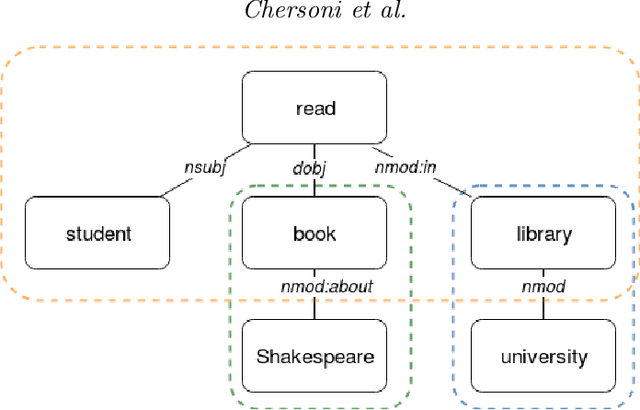

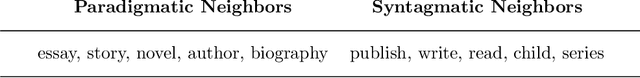

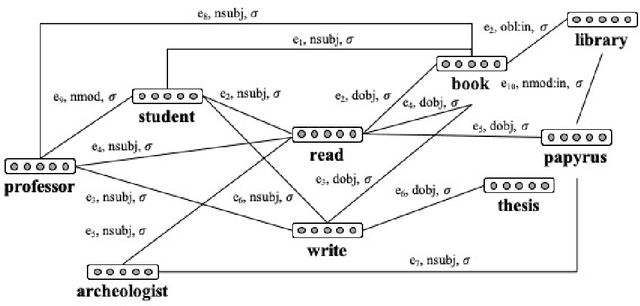

A Structured Distributional Model of Sentence Meaning and Processing

Jun 17, 2019

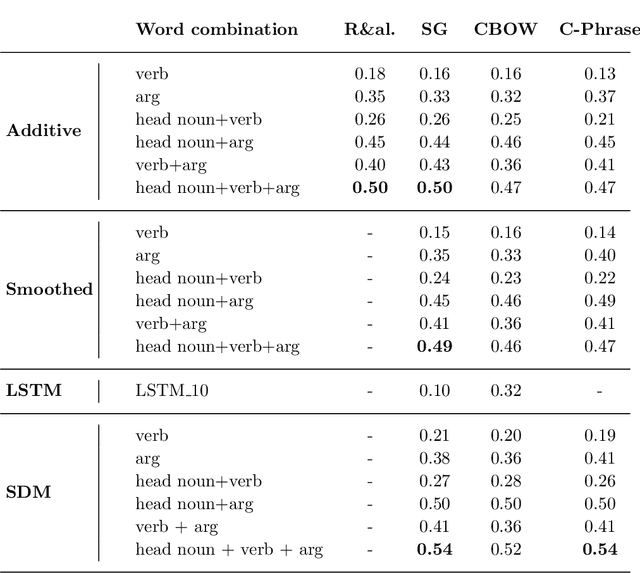

Abstract: Most compositional distributional semantic models represent sentence meaning with a single vector. In this paper, we propose a Structured Distributional Model (SDM) that combines word embeddings with formal semantics and is based on the assumption that sentences represent events and situations. The semantic representation of a sentence is a formal structure derived from Discourse Representation Theory and containing distributional vectors. This structure is dynamically and incrementally built by integrating knowledge about events and their typical participants, as they are activated by lexical items. Event knowledge is modeled as a graph extracted from parsed corpora and encoding roles and relationships between participants that are represented as distributional vectors. SDM is grounded on extensive psycholinguistic research showing that generalized knowledge about events stored in semantic memory plays a key role in sentence comprehension. We evaluate SDM on two recently introduced compositionality datasets, and our results show that combining a simple compositional model with event knowledge consistently improves performance, even with different types of word embeddings.
