Luca Bortolussi

University of Trieste, Italy

Guided by Stars: Interpretable Concept Learning Over Time Series via Temporal Logic Semantics

Nov 06, 2025

Abstract: Time series classification is a task of paramount importance, as this kind of data often arises in safety-critical applications. However, it is typically tackled with black-box deep learning methods, making it hard for humans to understand the rationale behind their output. To take on this challenge, we propose a novel approach, STELLE (Signal Temporal logic Embedding for Logically-grounded Learning and Explanation), a neuro-symbolic framework that unifies classification and explanation through direct embedding of trajectories into a space of temporal logic concepts. By introducing a novel STL-inspired kernel that maps raw time series to their alignment with predefined STL formulae, our model jointly optimises accuracy and interpretability, as each prediction is accompanied by the most relevant logical concepts that characterise it. This yields (i) local explanations as human-readable STL conditions justifying individual predictions, and (ii) global explanations as class-characterising formulae. Experiments demonstrate that STELLE achieves competitive accuracy while providing logically faithful explanations, validated on diverse real-world benchmarks.
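To make the idea of mapping a time series to its alignment with predefined STL formulae concrete, here is a minimal sketch. It is not STELLE's kernel, which the abstract does not specify; it only uses the standard quantitative (robustness) semantics of STL on a discrete-time scalar signal. All names (`atom_gt`, `eventually`, `always`, `embed`) are hypothetical, and windows are assumed to start inside the signal.

```python
from typing import Callable, List, Sequence

# A formula maps (signal, time index) to a real-valued robustness score:
# positive means satisfied, negative means violated.
Formula = Callable[[Sequence[float], int], float]


def atom_gt(threshold: float) -> Formula:
    """Atomic predicate x(t) > threshold; robustness is the signed margin."""
    return lambda x, t: x[t] - threshold


def neg(phi: Formula) -> Formula:
    """Negation flips the sign of the robustness."""
    return lambda x, t: -phi(x, t)


def eventually(phi: Formula, a: int, b: int) -> Formula:
    """F_[a,b] phi: best robustness of phi over the (truncated) window."""
    def rho(x: Sequence[float], t: int) -> float:
        last = min(t + b, len(x) - 1)  # truncate the window at the signal end
        return max(phi(x, k) for k in range(t + a, last + 1))
    return rho


def always(phi: Formula, a: int, b: int) -> Formula:
    """G_[a,b] phi: worst robustness of phi over the (truncated) window."""
    def rho(x: Sequence[float], t: int) -> float:
        last = min(t + b, len(x) - 1)
        return min(phi(x, k) for k in range(t + a, last + 1))
    return rho


def embed(x: Sequence[float], formulae: Sequence[Formula]) -> List[float]:
    """Represent a trajectory by its robustness w.r.t. each formula at time 0."""
    return [phi(x, 0) for phi in formulae]


signal = [0.0, 1.0, 3.0, 2.0]
concepts = [
    always(atom_gt(-1.0), 0, 3),     # "x stays above -1"
    eventually(atom_gt(2.5), 0, 3),  # "x eventually exceeds 2.5"
    always(atom_gt(0.5), 1, 3),      # "from step 1 on, x stays above 0.5"
]
print(embed(signal, concepts))  # [1.0, 0.5, 0.5]
```

Each coordinate of the resulting vector is tied to a readable formula, which is the kind of property that lets a prediction be explained by the concepts with the largest influence.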

CoCAI: Copula-based Conformal Anomaly Identification for Multivariate Time-Series

Jul 23, 2025

Abstract: We propose a novel framework that harnesses the power of generative artificial intelligence and copula-based modeling to address two critical challenges in multivariate time-series analysis: delivering accurate predictions and enabling robust anomaly detection. Our method, Copula-based Conformal Anomaly Identification for Multivariate Time-Series (CoCAI), leverages a diffusion-based model to capture complex dependencies within the data, enabling high-quality forecasting. The model's outputs are further calibrated using a conformal prediction technique, yielding predictive regions which are statistically valid, i.e., cover the true target values with a desired confidence level. Starting from these calibrated forecasts, robust outlier detection is performed by combining dimensionality reduction techniques with copula-based modeling, providing a statistically grounded anomaly score. CoCAI benefits from an offline calibration phase that allows for minimal overhead during deployment and delivers actionable results rooted in established theoretical foundations. Empirical tests conducted on real operational data derived from water distribution and sewerage systems confirm CoCAI's effectiveness in accurately forecasting target sequences of data and in identifying anomalous segments within them.

Bridging Logic and Learning: Decoding Temporal Logic Embeddings via Transformers

Jul 10, 2025

Abstract: Continuous representations of logic formulae allow us to integrate symbolic knowledge into data-driven learning algorithms. If such embeddings are semantically consistent, i.e. if similar specifications are mapped into nearby vectors, they enable continuous learning and optimization directly in the semantic space of formulae. However, to translate the optimal continuous representation into a concrete requirement, such embeddings must be invertible. We tackle this issue by training a Transformer-based decoder-only model to invert semantic embeddings of Signal Temporal Logic (STL) formulae. STL is a powerful formalism that allows us to describe properties of signals varying over time in an expressive yet concise way. By constructing a small vocabulary from STL syntax, we demonstrate that our proposed model is able to generate valid formulae after only 1 epoch and to generalize to the semantics of the logic in about 10 epochs. Additionally, the model is able to decode a given embedding into formulae that are often simpler in terms of length and nesting while remaining semantically close (or equivalent) to gold references. We show the effectiveness of our methodology across various levels of training formulae complexity to assess the impact of training data on the model's ability to effectively capture the semantic information contained in the embeddings and generalize out-of-distribution. Finally, we deploy our model for solving a requirement mining task, i.e. inferring STL specifications that solve a classification task on trajectories, performing the optimization directly in the semantic space.

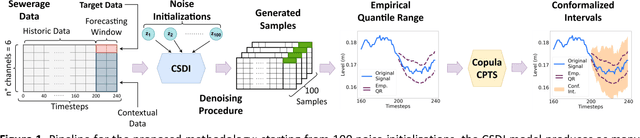

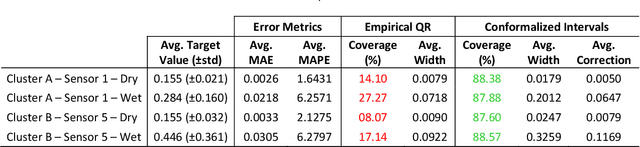

Diffusion-based Time Series Forecasting for Sewerage Systems

Jun 10, 2025

Abstract: We introduce a novel deep learning approach that harnesses the power of generative artificial intelligence to enhance the accuracy of contextual forecasting in sewerage systems. By developing a diffusion-based model that processes multivariate time series data, our system excels at capturing complex correlations across diverse environmental signals, enabling robust predictions even during extreme weather events. To strengthen the model's reliability, we further calibrate its predictions with a conformal inference technique, tailored for probabilistic time series data, ensuring that the resulting prediction intervals are statistically reliable and cover the true target values with a desired confidence level. Our empirical tests on real sewerage system data confirm the model's exceptional capability to deliver reliable contextual predictions, maintaining accuracy even under severe weather conditions.
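As a rough illustration of how conformal calibration yields intervals with a target coverage level, the sketch below implements plain split conformal prediction for a scalar point forecast. This is an assumption made for illustration: the abstract refers to a technique tailored to probabilistic time series, which is not reproduced here, and the function names and toy numbers are hypothetical. The coverage guarantee of this simple variant relies on exchangeability between calibration and test points.

```python
import math
from typing import List, Tuple


def conformal_quantile(scores: List[float], alpha: float) -> float:
    """Return the ceil((n + 1) * (1 - alpha))-th smallest calibration score.

    If that rank exceeds n, no finite radius gives the guarantee,
    so the interval is unbounded.
    """
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf
    return sorted(scores)[k - 1]


def prediction_interval(point_forecast: float, q: float) -> Tuple[float, float]:
    """Symmetric interval around the forecast with conformal radius q."""
    return (point_forecast - q, point_forecast + q)


# Held-out calibration set: forecasts and the values actually observed (toy data).
cal_pred = [10.0, 12.0, 11.0, 13.0, 9.0, 10.0, 12.0]
cal_true = [10.5, 11.0, 11.25, 15.0, 9.0, 8.5, 12.75]

# Nonconformity score: absolute forecast error.
scores = [abs(y - p) for p, y in zip(cal_pred, cal_true)]

q = conformal_quantile(scores, alpha=0.25)  # target coverage of 75%
print(q)                             # 1.5
print(prediction_interval(11.0, q))  # (9.5, 12.5)
```

The calibration scores are computed once offline; at deployment only the stored radius `q` is added to and subtracted from each new forecast.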

Graph Conditional Flow Matching for Relational Data Generation

May 21, 2025

Abstract: Data synthesis is gaining momentum as a privacy-enhancing technology. While single-table tabular data generation has seen considerable progress, current methods for multi-table data often lack the flexibility and expressiveness needed to capture complex relational structures. In particular, they struggle with long-range dependencies and complex foreign-key relationships, such as tables with multiple parent tables or multiple types of links between the same pair of tables. We propose a generative model for relational data that generates the content of a relational dataset given the graph formed by the foreign-key relationships. We do this by learning a deep generative model of the content of the whole relational database by flow matching, where the neural network trained to denoise records leverages a graph neural network to obtain information from connected records. Our method is flexible, as it can support relational datasets with complex structures, and expressive, as the generation of each record can be influenced by any other record within the same connected component. We evaluate our method on several benchmark datasets and show that it achieves state-of-the-art performance in terms of synthetic data fidelity.

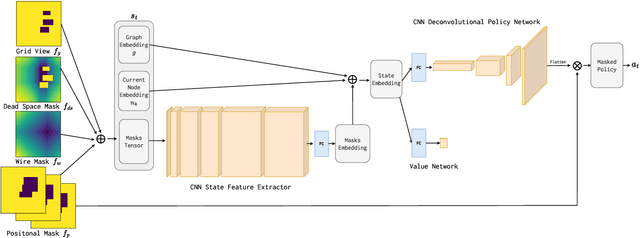

Enhancing Reinforcement Learning for the Floorplanning of Analog ICs with Beam Search

May 08, 2025

Abstract: The layout of analog ICs requires making complex trade-offs, while addressing device physics and variability of the circuits. This makes full automation with learning-based solutions hard to achieve. However, reinforcement learning (RL) has recently achieved significant results, particularly in solving the floorplanning problem. This paper presents a hybrid method that combines RL with a beam search (BS) strategy. The BS algorithm enhances the agent's inference process, allowing for the generation of flexible floorplans by accommodating various objective weightings, and addressing congestion without the need for policy retraining or fine-tuning. Moreover, the RL agent's generalization ability stays intact, along with its efficient handling of circuit features and constraints. Experimental results show approximately 5-85% improvement in area, dead space and half-perimeter wire length compared to a standard RL application, along with higher rewards for the agent. Moreover, performance and efficiency align closely with those of existing state-of-the-art techniques.

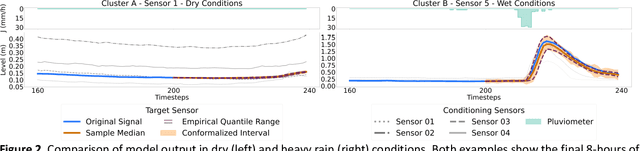

Contrastive Learning-Based privacy metrics in Tabular Synthetic Datasets

Feb 19, 2025

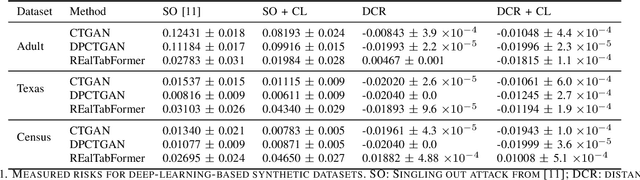

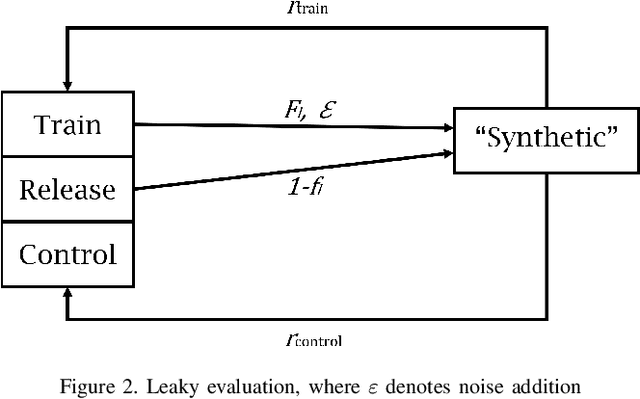

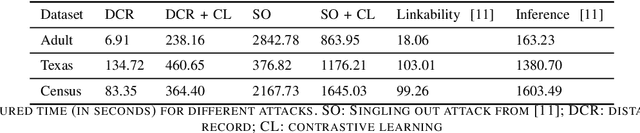

Abstract: Synthetic data has garnered attention as a Privacy Enhancing Technology (PET) in sectors such as healthcare and finance. When using synthetic data in practical applications, it is important to provide protection guarantees. In the literature, two families of approaches are proposed for tabular data: on the one hand, Similarity-based methods aim at finding the level of similarity between training and synthetic data. Indeed, a privacy breach can occur if the generated data is consistently too similar or even identical to the training data. On the other hand, Attack-based methods conduct deliberate attacks on synthetic datasets. The success rates of these attacks reveal how secure the synthetic datasets are. In this paper, we introduce a contrastive method that improves privacy assessment of synthetic datasets by embedding the data in a more representative space. This overcomes obstacles surrounding the multitude of data types and attributes. It also makes the use of intuitive distance metrics possible for similarity measurements and as an attack vector. In a series of experiments with publicly available datasets, we compare the performances of similarity-based and attack-based methods, both with and without use of the contrastive learning-based embeddings. Our results show that relatively efficient, easy to implement privacy metrics can perform as well as more advanced metrics explicitly modeling conditions for privacy referred to by the GDPR.

Scaling Combinatorial Optimization Neural Improvement Heuristics with Online Search and Adaptation

Dec 13, 2024

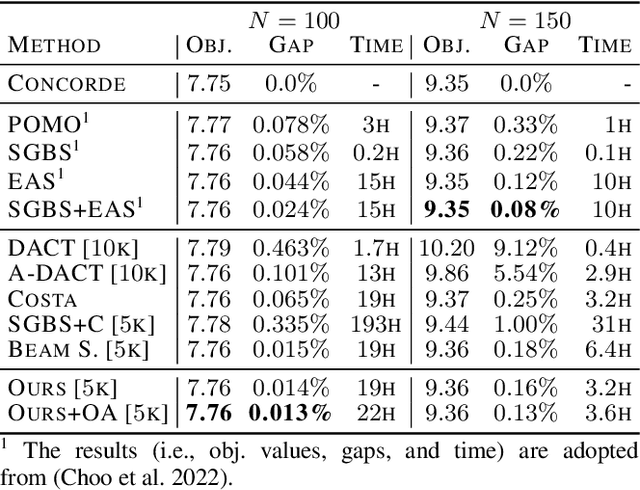

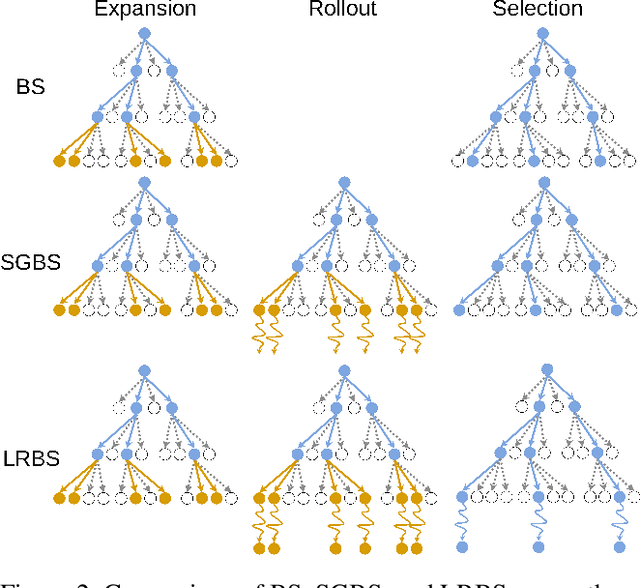

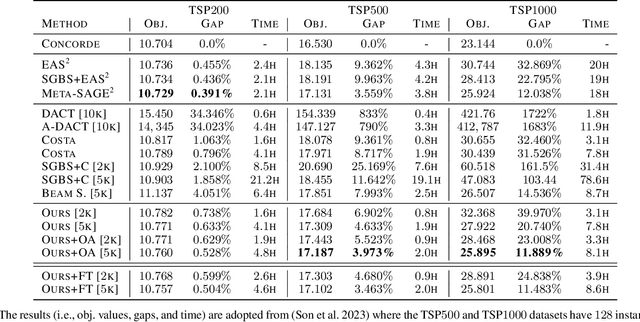

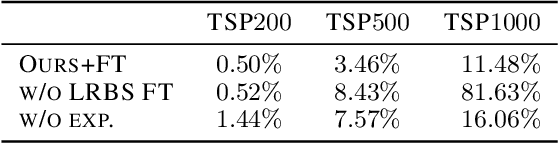

Abstract: We introduce Limited Rollout Beam Search (LRBS), a beam search strategy for deep reinforcement learning (DRL) based combinatorial optimization improvement heuristics. Utilizing pre-trained models on the Euclidean Traveling Salesperson Problem, LRBS significantly enhances both in-distribution performance and generalization to larger problem instances, achieving optimality gaps that outperform existing improvement heuristics and narrowing the gap with state-of-the-art constructive methods. We also extend our analysis to two pickup and delivery TSP variants to validate our results. Finally, we employ our search strategy for offline and online adaptation of the pre-trained improvement policy, leading to improved search performance and surpassing recent adaptive methods for constructive heuristics.
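For readers unfamiliar with beam search applied to improvement heuristics, the sketch below runs a plain beam search over 2-opt moves on a small Euclidean TSP instance. It is not LRBS: there is no learned policy and no limited rollout, and candidates are simply enumerated exhaustively and ranked by tour length. Function names and the toy instance are hypothetical.

```python
import math
from itertools import combinations
from typing import Iterator, List, Sequence, Tuple

Point = Tuple[float, float]
Tour = Tuple[int, ...]


def tour_length(tour: Sequence[int], pts: Sequence[Point]) -> float:
    """Length of the closed tour visiting pts in the given order."""
    n = len(tour)
    return sum(math.dist(pts[tour[i]], pts[tour[(i + 1) % n]]) for i in range(n))


def two_opt_neighbours(tour: Tour) -> Iterator[Tour]:
    """All tours obtained by reversing one segment (first city kept fixed)."""
    for i, j in combinations(range(1, len(tour)), 2):
        yield tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]


def beam_search_improve(tour: Sequence[int], pts: Sequence[Point],
                        beam_width: int = 3, steps: int = 5) -> List[int]:
    """Keep the beam_width shortest tours at each step; return the best seen."""
    beam: List[Tour] = [tuple(tour)]
    best: Tour = tuple(tour)
    for _ in range(steps):
        candidates = {nb for t in beam for nb in two_opt_neighbours(t)}
        if not candidates:  # fewer than three cities: nothing to reverse
            break
        beam = sorted(candidates, key=lambda t: tour_length(t, pts))[:beam_width]
        if tour_length(beam[0], pts) < tour_length(best, pts):
            best = beam[0]
    return list(best)


# Unit square visited in a self-crossing order (toy instance).
pts = [(0.0, 0.0), (1.0, 1.0), (1.0, 0.0), (0.0, 1.0)]
start = [0, 1, 2, 3]
improved = beam_search_improve(start, pts)
print(tour_length(start, pts), tour_length(improved, pts))
```

Unlike greedy local search, the beam keeps several candidate tours alive at each step, so a move that looks second-best now can still lead to the best tour later.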

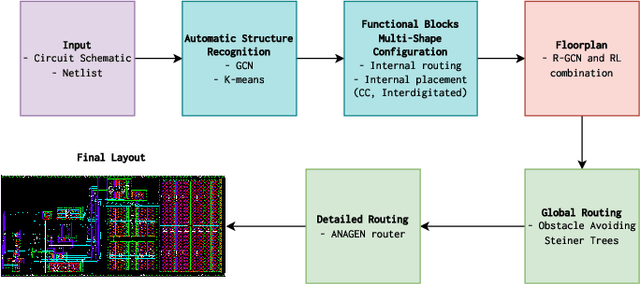

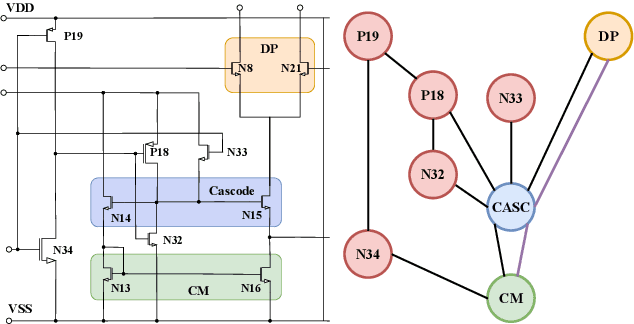

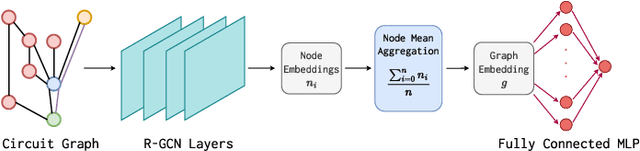

Effective Analog ICs Floorplanning with Relational Graph Neural Networks and Reinforcement Learning

Nov 20, 2024

Abstract: Analog integrated circuit (IC) floorplanning is typically a manual process with the placement of components (devices and modules) planned by a layout engineer. This process is further complicated by the interdependence of floorplanning and routing steps, numerous electric and layout-dependent constraints, as well as the high level of customization expected in analog design. This paper presents a novel automatic floorplanning algorithm based on reinforcement learning. It is augmented by a relational graph convolutional neural network model for encoding circuit features and positional constraints. The combination of these two machine learning methods enables knowledge transfer across different circuit designs with distinct topologies and constraints, increasing the generalization ability of the solution. Applied to 6 industrial circuits, our approach surpassed established floorplanning techniques in terms of speed, area and half-perimeter wire length. When integrated into a procedural generator for layout completion, overall layout time was reduced by 67.3% with an 8.3% mean area reduction compared to manual layout.
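Half-perimeter wire length (HPWL), one of the metrics mentioned above, is a standard wirelength estimate: for each net, take the bounding box of its pins and add the box's width and height. The sketch below is a minimal illustration under that standard definition; the data layout (a net as a list of pin coordinates) and the toy values are assumptions, not taken from the paper.

```python
from typing import List, Tuple

Pin = Tuple[float, float]


def hpwl(nets: List[List[Pin]]) -> float:
    """Sum over nets of (bounding-box width + bounding-box height)."""
    total = 0.0
    for pins in nets:
        xs = [x for x, _ in pins]
        ys = [y for _, y in pins]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


# Two toy nets: a two-pin net and a three-pin net.
nets = [
    [(0.0, 0.0), (3.0, 4.0)],              # box 3 x 4 -> 7
    [(1.0, 1.0), (2.0, 5.0), (4.0, 2.0)],  # box 3 x 4 -> 7
]
print(hpwl(nets))  # 14.0
```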

ResiDual Transformer Alignment with Spectral Decomposition

Oct 31, 2024

Abstract: When examined through the lens of their residual streams, a puzzling property emerges in transformer networks: residual contributions (e.g., attention heads) sometimes specialize in specific tasks or input attributes. In this paper, we analyze this phenomenon in vision transformers, focusing on the spectral geometry of residuals, and explore its implications for modality alignment in vision-language models. First, we link it to the intrinsically low-dimensional structure of visual head representations, zooming into their principal components and showing that they encode specialized roles across a wide variety of input data distributions. Then, we analyze the effect of head specialization in multimodal models, focusing on how improved alignment between text and specialized heads impacts zero-shot classification performance. This specialization-performance link consistently holds across diverse pre-training data, network sizes, and objectives, demonstrating a powerful new mechanism for boosting zero-shot classification through targeted alignment. Ultimately, we translate these insights into actionable terms by introducing ResiDual, a technique for spectral alignment of the residual stream. Much like panning for gold, it lets the noise from irrelevant unit principal components (i.e., attributes) wash away to amplify task-relevant ones. Remarkably, this dual perspective on modality alignment yields fine-tuning level performances on different data distributions while modeling an extremely interpretable and parameter-efficient transformation, as we extensively show on more than 50 (pre-trained network, dataset) pairs.
