Liangfan Zheng

ECLIPSE: Semantic Entropy-LCS for Cross-Lingual Industrial Log Parsing

May 22, 2024

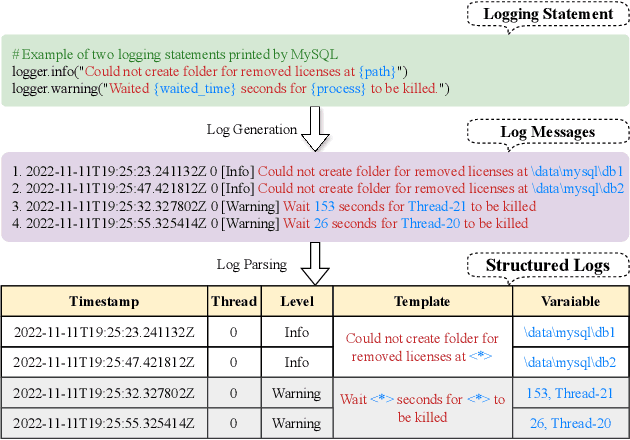

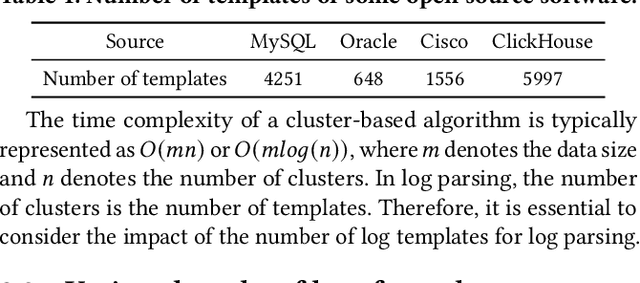

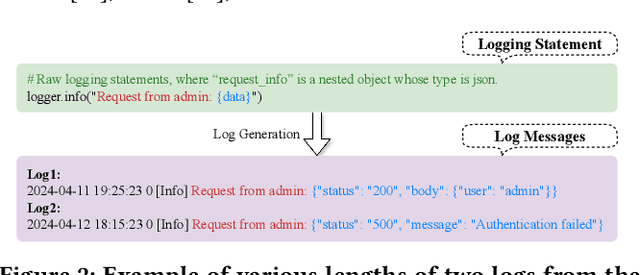

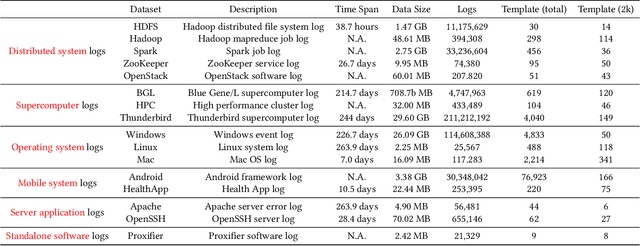

Abstract: Log parsing, a vital task for interpreting the vast and complex data produced within software architectures, faces significant challenges in the transition from academic benchmarks to the industrial domain. Existing log parsers, while highly effective on standardized public datasets, struggle to maintain performance and efficiency when confronted with the sheer scale and diversity of real-world industrial logs. These challenges are two-fold: 1) Massive log templates: the performance and efficiency of most existing parsers drop significantly as logs grow in quantity and vary in length; 2) Complex and changeable semantics: traditional template-matching algorithms cannot accurately match the templates of complicated industrial logs because they cannot exploit cross-language logs with similar semantics. To address these issues, we propose ECLIPSE, Enhanced Cross-Lingual Industrial log Parsing with Semantic Entropy-LCS, which uses cross-language logs to parse industrial logs robustly. On the one hand, it integrates two efficient data-driven template-matching algorithms and Faiss indexing. On the other hand, driven by the powerful semantic understanding ability of Large Language Models (LLMs), it accurately extracts the semantics of log keywords and effectively reduces the retrieval space. We also introduce ECLIPSE-Bench, a Chinese and English cross-platform industrial log parsing benchmark, to evaluate the performance of mainstream parsers in industrial scenarios. Our experimental results, conducted across public benchmarks and the proprietary ECLIPSE-Bench dataset, underscore the superior performance and robustness of ECLIPSE. Notably, ECLIPSE delivers state-of-the-art performance compared to strong baselines on diverse datasets and preserves a significant edge in processing efficiency.
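For readers unfamiliar with the "LCS" in the title, the following is a minimal sketch of generic token-level longest-common-subsequence template matching. It is not the ECLIPSE algorithm, which the abstract does not specify; the function names `lcs_tokens` and `merge_template`, the whitespace tokenization, and the `<*>` wildcard are all assumptions made for illustration.

```python
from typing import List


def lcs_tokens(a: List[str], b: List[str]) -> List[str]:
    """Longest common subsequence of two token lists (classic DP)."""
    m, n = len(a), len(b)
    # dp[i][j] = LCS length of a[i:] and b[j:]
    dp = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(m - 1, -1, -1):
        for j in range(n - 1, -1, -1):
            if a[i] == b[j]:
                dp[i][j] = dp[i + 1][j + 1] + 1
            else:
                dp[i][j] = max(dp[i + 1][j], dp[i][j + 1])
    # Walk the table forward to recover one LCS.
    out, i, j = [], 0, 0
    while i < m and j < n:
        if a[i] == b[j]:
            out.append(a[i])
            i += 1
            j += 1
        elif dp[i + 1][j] >= dp[i][j + 1]:
            i += 1
        else:
            j += 1
    return out


def merge_template(a: List[str], b: List[str], wildcard: str = "<*>") -> List[str]:
    """Keep tokens shared via the LCS; collapse each gap in `a` into one wildcard.

    Simplification (assumption): gaps that exist only in `b` are not marked.
    """
    common = lcs_tokens(a, b)
    template, k = [], 0
    for tok in a:
        if k < len(common) and tok == common[k]:
            template.append(tok)
            k += 1
        elif not template or template[-1] != wildcard:
            template.append(wildcard)
    return template


log_a = "Connected to 10.0.0.1 port 22".split()
log_b = "Connected to 10.0.0.2 port 8080".split()
template = merge_template(log_a, log_b)
print(" ".join(template))  # Connected to <*> port <*>
```

The quadratic table is fine for single log lines; at industrial scale a parser would need to prune candidate templates before running LCS, which is presumably where the indexing mentioned in the abstract comes in.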

Lemur: Log Parsing with Entropy Sampling and Chain-of-Thought Merging

Mar 02, 2024

Abstract: Logs produced by extensive software systems are integral to monitoring system behaviors. Advanced log analysis facilitates the detection, alerting, and diagnosis of system faults. Log parsing, which entails transforming raw log messages into structured templates, constitutes a critical phase in the automation of log analytics. Existing log parsers fail to identify the correct templates due to their reliance on human-made rules. Besides, these methods focus on statistical features while ignoring semantic information in log messages. To address these challenges, we introduce a cutting-edge Log parsing framework with Entropy sampling and Chain-of-Thought Merging (Lemur). Specifically, to discard the tedious manual rules, we propose a novel sampling method inspired by information entropy, which efficiently clusters typical logs. Furthermore, to enhance the merging of log templates, we design a chain-of-thought method for large language models (LLMs). LLMs exhibit exceptional semantic comprehension, deftly distinguishing between parameters and invariant tokens. We have conducted experiments on large-scale public datasets. Extensive evaluation demonstrates that Lemur achieves state-of-the-art performance and impressive efficiency.
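As background on the information-entropy idea the abstract mentions, here is a small sketch that computes the Shannon entropy of the tokens at each position across a batch of logs. It is not Lemur's sampling method, which the abstract does not detail; the helper name `position_entropy`, the whitespace tokenization, and the truncation to the shortest line are assumptions for illustration. Positions with near-zero entropy tend to hold constant template tokens, while high-entropy positions tend to hold parameters.

```python
import math
from collections import Counter
from typing import List


def position_entropy(logs: List[str]) -> List[float]:
    """Shannon entropy (in bits) of the token distribution at each position.

    Assumes `logs` is non-empty; only positions present in every line are scored.
    """
    rows = [line.split() for line in logs]
    width = min(len(r) for r in rows)
    entropies = []
    for pos in range(width):
        counts = Counter(r[pos] for r in rows)
        total = sum(counts.values())
        h = -sum((c / total) * math.log2(c / total) for c in counts.values())
        entropies.append(h)
    return entropies


sample = ["open file a", "open file b", "open file a", "open file b"]
ents = position_entropy(sample)
# The first two positions are constant; the third splits evenly between two values.
```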

MLAD: A Unified Model for Multi-system Log Anomaly Detection

Jan 15, 2024

Abstract: In spite of the rapid advancements in unsupervised log anomaly detection techniques, current mainstream models still require specific training on each individual system dataset, resulting in costly procedures, limited scalability due to dataset size, and performance bottlenecks. Furthermore, numerous models lack cognitive reasoning capabilities, which makes it difficult to transfer them directly to similar systems for effective anomaly detection. Additionally, akin to reconstruction networks, these models often encounter the "identical shortcut" predicament, wherein the majority of system logs are classified as normal, erroneously predicting normal classes when confronted with rare anomaly logs due to reconstruction errors. To address the aforementioned issues, we propose MLAD, a novel anomaly detection model that incorporates semantic relational reasoning across multiple systems. Specifically, we employ Sentence-BERT to capture the similarities between log sequences and convert them into high-dimensional learnable semantic vectors. Subsequently, we revamp the formulas of the Attention layer to discern the significance of each keyword in the sequence and model the overall distribution of the multi-system dataset through appropriate vector space diffusion. Lastly, we employ a Gaussian mixture model to highlight the uncertainty of rare words pertaining to the "identical shortcut" problem, optimizing the vector space of the samples using the maximum expectation model. Experiments on three real-world datasets demonstrate the superiority of MLAD.

OWL: A Large Language Model for IT Operations

Sep 17, 2023

Abstract: With the rapid development of IT operations, it has become increasingly crucial to efficiently manage and analyze large volumes of data for practical applications. Natural Language Processing (NLP) techniques have shown remarkable capabilities for various tasks, including named entity recognition, machine translation, and dialogue systems. Recently, Large Language Models (LLMs) have achieved significant improvements across various NLP downstream tasks. However, there is a lack of specialized LLMs for IT operations. In this paper, we introduce OWL, a large language model trained on our collected OWL-Instruct dataset with a wide range of IT-related information, where a mixture-of-adapter strategy is proposed to improve parameter-efficient tuning across different domains or tasks. Furthermore, we evaluate the performance of OWL on the OWL-Bench that we established and on open IT-related benchmarks. OWL demonstrates superior performance on IT tasks, outperforming existing models by significant margins. Moreover, we hope that the findings of our work will provide more insights to revolutionize the techniques of IT operations with specialized LLMs.
