Kurt Gray

WorldValuesBench: A Large-Scale Benchmark Dataset for Multi-Cultural Value Awareness of Language Models

Apr 25, 2024

Abstract: The awareness of multi-cultural human values is critical to the ability of language models (LMs) to generate safe and personalized responses. However, this awareness of LMs has been insufficiently studied, since the computer science community lacks access to large-scale real-world data about multi-cultural values. In this paper, we present WorldValuesBench, a globally diverse, large-scale benchmark dataset for the multi-cultural value prediction task, which requires a model to generate a rating response to a value question based on demographic contexts. Our dataset is derived from an influential social science project, the World Values Survey (WVS), which has collected answers to hundreds of value questions (e.g., social, economic, ethical) from 94,728 participants worldwide. We have constructed more than 20 million examples of the type "(demographic attributes, value question) $\rightarrow$ answer" from the WVS responses. We perform a case study using our dataset and show that the task is challenging for strong open and closed-source models. On merely $11.1\%$, $25.0\%$, $72.2\%$, and $75.0\%$ of the questions, Alpaca-7B, Vicuna-7B-v1.5, Mixtral-8x7B-Instruct-v0.1, and GPT-3.5 Turbo can respectively achieve $<0.2$ Wasserstein 1-distance from the human normalized answer distributions. WorldValuesBench opens up new research avenues in studying limitations and opportunities in multi-cultural value awareness of LMs.
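The metric named in the abstract, the Wasserstein 1-distance between a model's and the human answer distribution, can be illustrated with a minimal sketch. This is not the authors' evaluation code: the helper names `normalize` and `wasserstein_1`, the 1-to-4 rating scale, and the min-max normalization to [0, 1] are assumptions made for illustration only. The toy ratings are made up.

```python
from collections import Counter
from typing import Sequence


def normalize(rating: int, low: int, high: int) -> float:
    """Map a rating on the integer scale [low, high] to [0, 1] (assumed scheme)."""
    return (rating - low) / (high - low)


def wasserstein_1(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Wasserstein 1-distance between two empirical 1-D distributions.

    Uses the identity W1 = integral of |F_x(t) - F_y(t)| dt, where F_x and
    F_y are the empirical CDFs of the two samples.
    """
    if not xs or not ys:
        raise ValueError("both samples must be non-empty")
    count_x, count_y = Counter(xs), Counter(ys)
    support = sorted(set(count_x) | set(count_y))
    cdf_x = cdf_y = 0.0
    total = 0.0
    # Walk the merged support; between consecutive points both CDFs are flat.
    for left, right in zip(support, support[1:]):
        cdf_x += count_x[left] / len(xs)
        cdf_y += count_y[left] / len(ys)
        total += abs(cdf_x - cdf_y) * (right - left)
    return total


# Toy example: hypothetical answers to one question on a 1-4 scale.
human = [normalize(r, 1, 4) for r in [1, 1, 4, 4]]
model = [normalize(r, 1, 4) for r in [1, 4, 4, 4]]
distance = wasserstein_1(human, model)
# The abstract counts a question as matched when the distance is below 0.2.
is_close = distance < 0.2
```

Under these assumptions, a question would count toward the reported percentages only when `is_close` is true for it.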

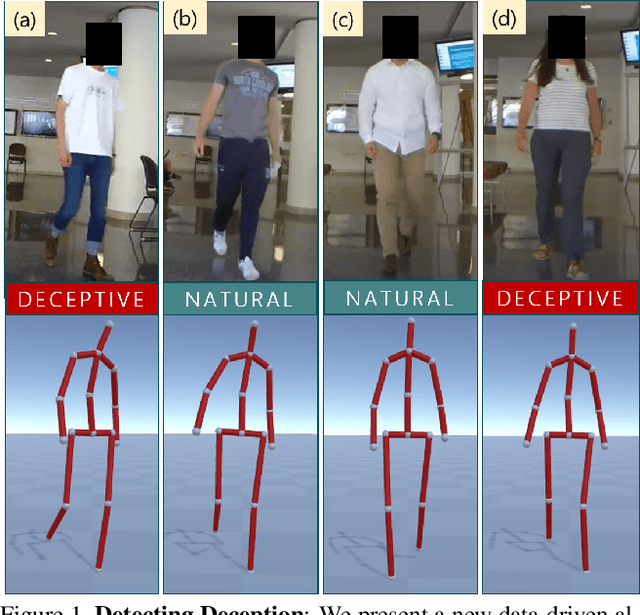

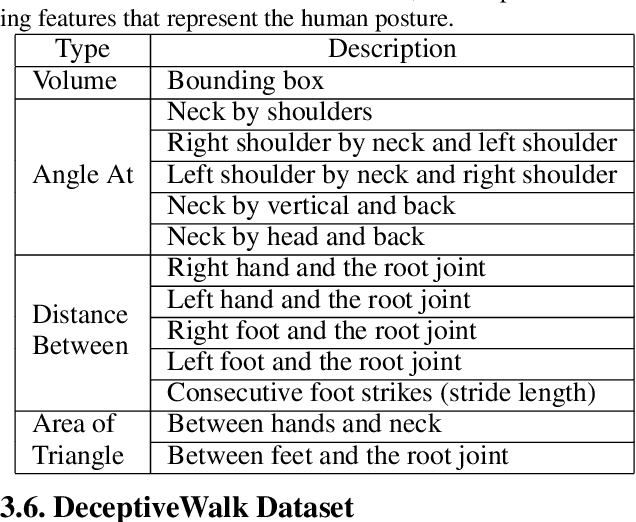

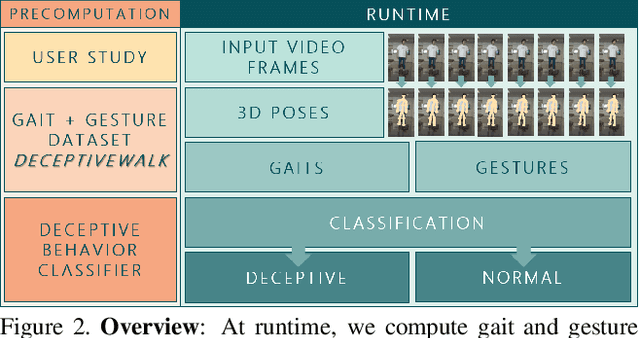

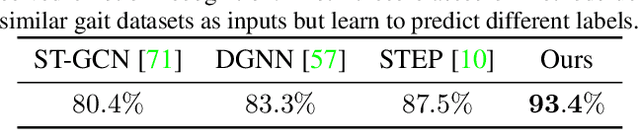

The Liar's Walk: Detecting Deception with Gait and Gesture

Dec 20, 2019

Abstract: We present a data-driven deep neural algorithm for detecting deceptive walking behavior using nonverbal cues like gaits and gestures. We conducted an elaborate user study, where we recorded many participants performing tasks involving deceptive walking. We extract the participants' walking gaits as a series of 3D poses. We annotate various gestures performed by participants during their tasks. Based on the gait and gesture data, we train an LSTM-based deep neural network to obtain deep features. Finally, we use a combination of psychology-based gait, gesture, and deep features to detect deceptive walking with an accuracy of 93.4%. This is an improvement of 16.1% over handcrafted gait and gesture features and an improvement of 5.9% and 10.1% over classifiers based on the state-of-the-art emotion and action classification algorithms, respectively. Additionally, we present a novel dataset, DeceptiveWalk, that contains gaits and gestures with their associated deception labels. To the best of our knowledge, ours is the first algorithm to detect deceptive behavior using nonverbal cues of gait and gesture.

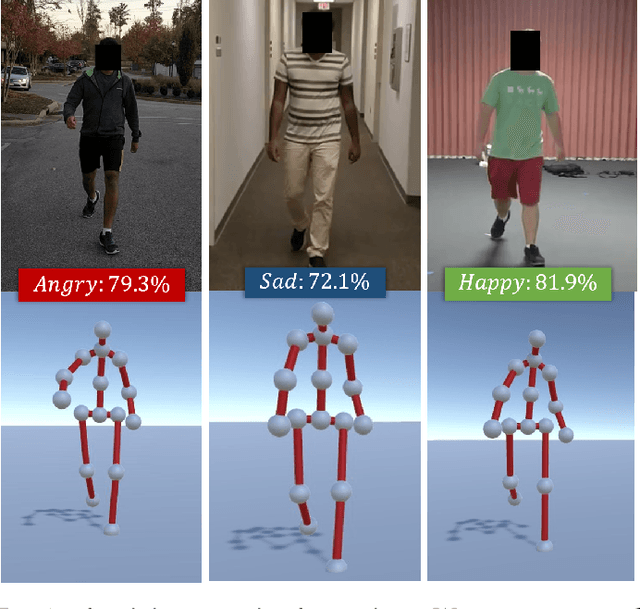

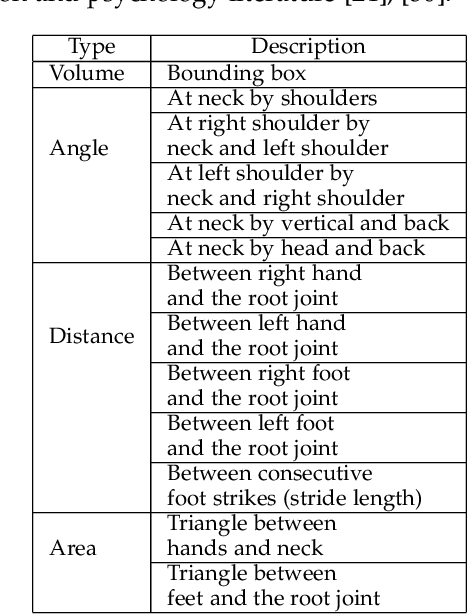

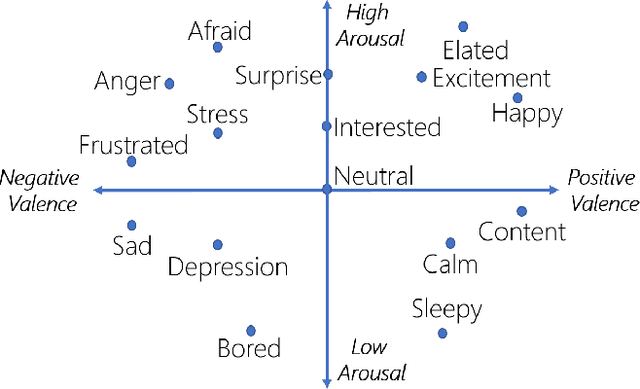

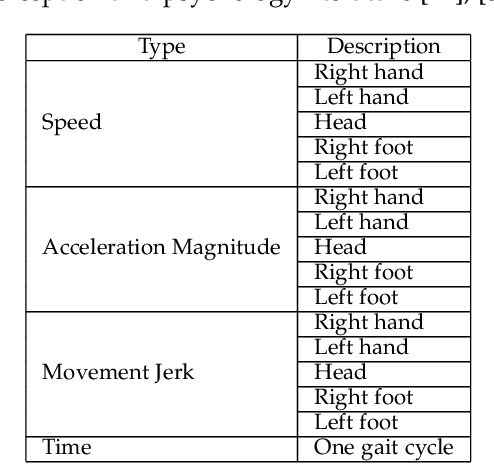

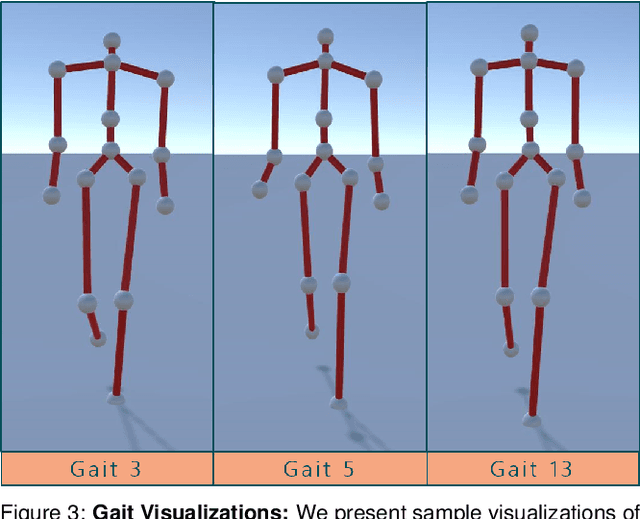

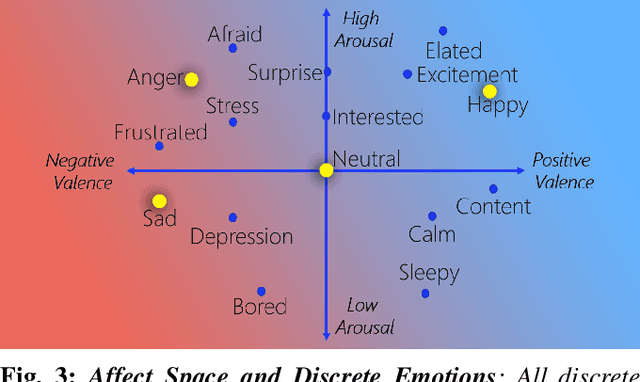

Identifying Emotions from Walking using Affective and Deep Features

Jul 21, 2019

Abstract: We present a new data-driven model and algorithm to identify the perceived emotions of individuals based on their walking styles. Given an RGB video of an individual walking, we extract his/her walking gait in the form of a series of 3D poses. Our goal is to exploit the gait features to classify the emotional state of the human into one of four emotions: happy, sad, angry, or neutral. Our perceived emotion recognition approach uses deep features learned via LSTM on labeled emotion datasets. Furthermore, we combine these features with affective features computed from gaits using posture and movement cues. These features are classified using a Random Forest Classifier. We show that our mapping between the combined feature space and the perceived emotional state provides 80.07% accuracy in identifying the perceived emotions. In addition to classifying discrete categories of emotions, our algorithm also predicts the values of perceived valence and arousal from gaits. We also present an EWalk (Emotion Walk) dataset that consists of videos of walking individuals with gaits and labeled emotions. To the best of our knowledge, this is the first gait-based model to identify perceived emotions from videos of walking individuals.

FVA: Modeling Perceived Friendliness of Virtual Agents Using Movement Characteristics

Jun 30, 2019

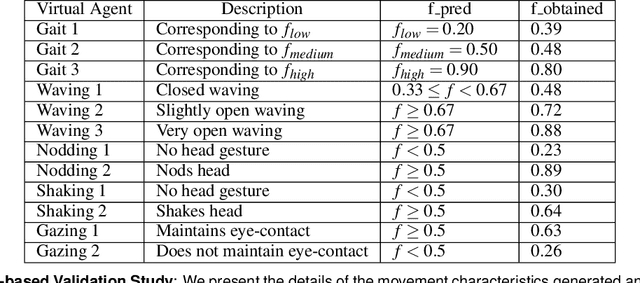

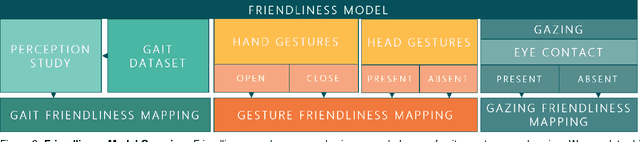

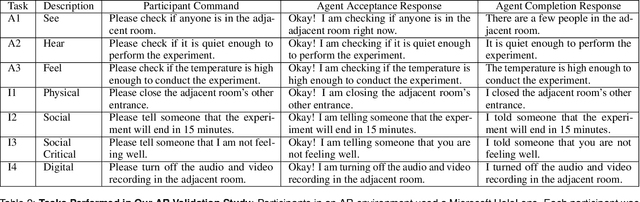

Abstract: We present a new approach for improving the friendliness and warmth of a virtual agent in an AR environment by generating appropriate movement characteristics. Our algorithm is based on a novel data-driven friendliness model that is computed using a user study and psychological characteristics. We use our model to control the movements corresponding to the gaits, gestures, and gazing of friendly virtual agents (FVAs) as they interact with the user's avatar and other agents in the environment. We have integrated FVAs with an AR environment using a Microsoft HoloLens. Our algorithm can generate plausible movements at interactive rates to increase the social presence. We also investigate the perception of a user in an AR setting and observe that an FVA yields a statistically significant improvement in the friendliness and social presence perceived by the user compared to an agent without the friendliness modeling. We observe an increment of 5.71% in the mean responses to a friendliness measure and an improvement of 4.03% in the mean responses to a social presence measure.

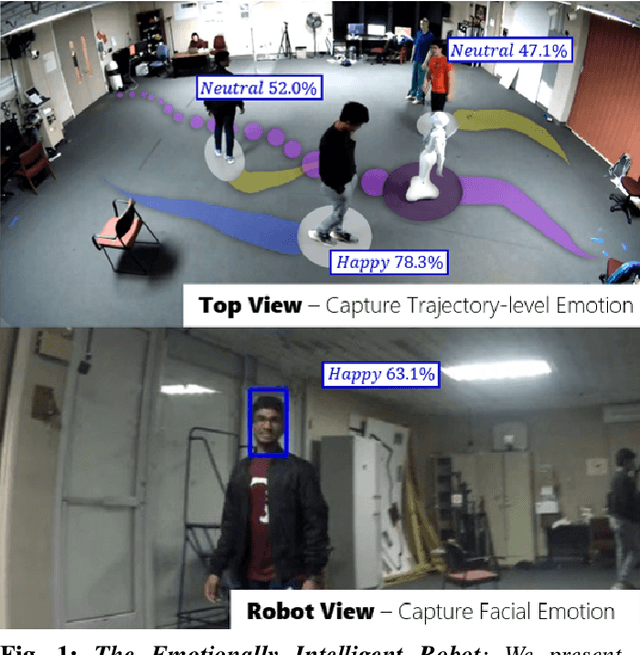

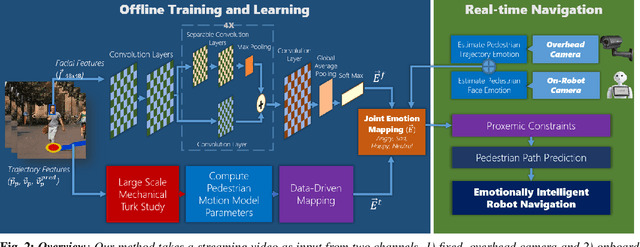

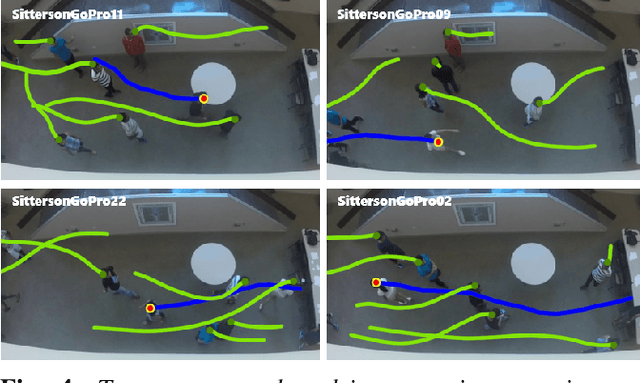

The Emotionally Intelligent Robot: Improving Social Navigation in Crowded Environments

Mar 07, 2019

Abstract: We present a real-time algorithm for emotion-aware navigation of a robot among pedestrians. Our approach estimates time-varying emotional behaviors of pedestrians from their faces and trajectories using a combination of Bayesian inference, CNN-based learning, and the PAD (Pleasure-Arousal-Dominance) model from psychology. These PAD characteristics are used for long-term path prediction and generating proxemic constraints for each pedestrian. We use a multi-channel model to classify pedestrian characteristics into four emotion categories (happy, sad, angry, neutral). In our validation results, we observe an emotion detection accuracy of 85.33%. We formulate emotion-based proxemic constraints to perform socially-aware robot navigation in low- to medium-density environments. We demonstrate the benefits of our algorithm in simulated environments with tens of pedestrians as well as in a real-world setting with Pepper, a social humanoid robot.

Pedestrian Dominance Modeling for Socially-Aware Robot Navigation

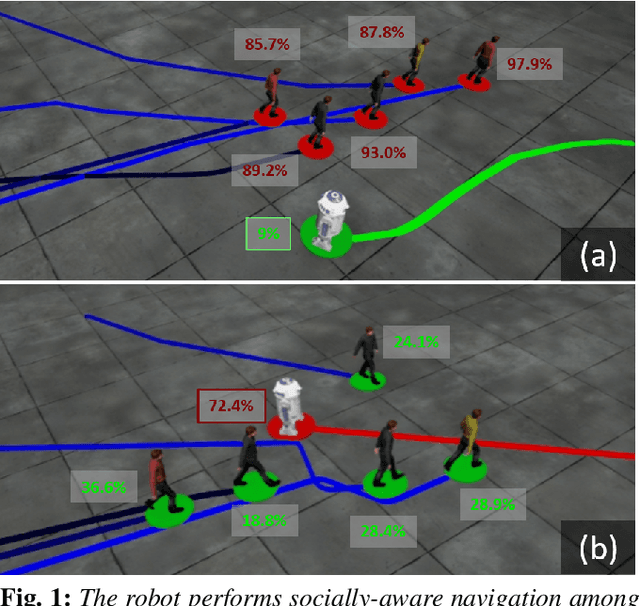

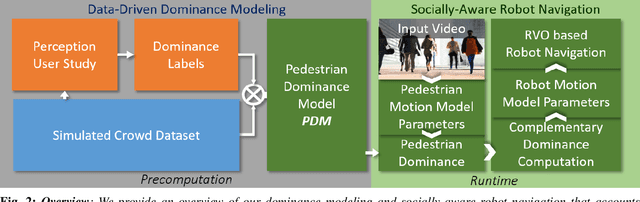

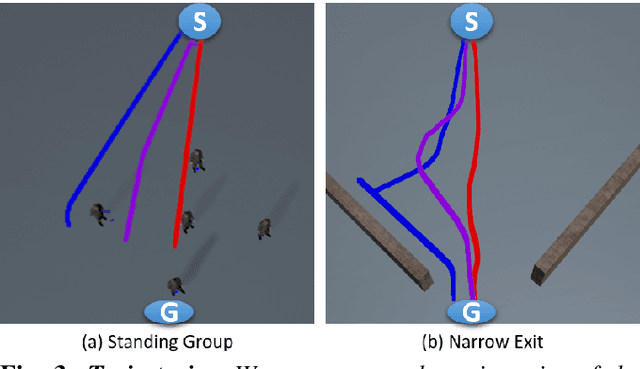

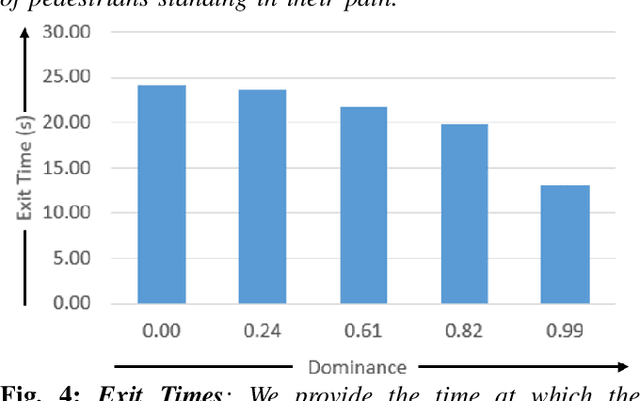

Feb 13, 2019

Abstract: We present a Pedestrian Dominance Model (PDM) to identify the dominance characteristics of pedestrians for robot navigation. Through a perception study on a simulated dataset of pedestrians, PDM models the perceived dominance levels of pedestrians with varying motion behaviors corresponding to trajectory, speed, and personal space. At runtime, we use PDM to identify the dominance levels of pedestrians to facilitate socially-aware navigation for the robots. PDM can predict dominance levels from trajectories with ~85% accuracy. Prior studies in the psychology literature indicate that, when interacting with humans, people are more comfortable around others who exhibit complementary movement behaviors. Our algorithm leverages this by enabling the robots to exhibit complementary responses to pedestrian dominance. We also present an application of PDM for generating dominance-based collision-avoidance behaviors in the navigation of autonomous vehicles among pedestrians. We demonstrate the benefits of our algorithm for robots navigating among tens of pedestrians in simulated environments.

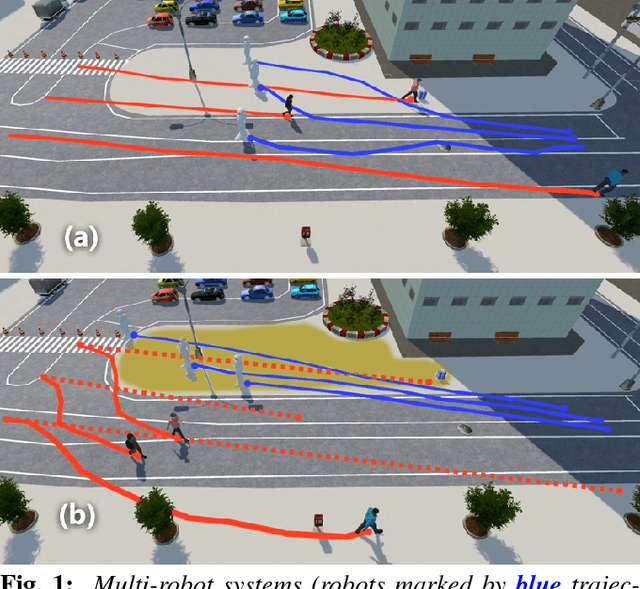

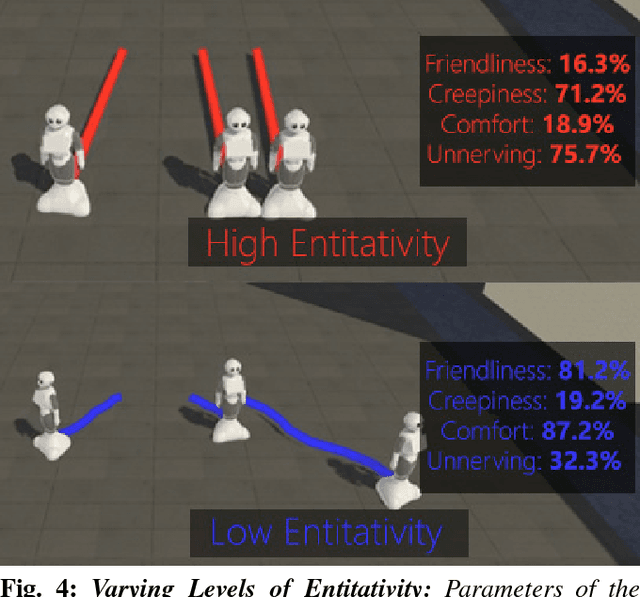

The Socially Invisible Robot: Navigation in the Social World using Robot Entitativity

Jul 18, 2018

Abstract: We present a real-time, data-driven algorithm to enhance the social invisibility of robots within crowds. Our approach is based on prior psychological research, which reveals that people notice and, importantly, react negatively to groups of social actors when they have high entitativity, moving in a tight group with similar appearances and trajectories. In order to evaluate that behavior, we performed a user study to develop navigational algorithms that minimize entitativity. This study establishes a mapping between emotional reactions and multi-robot trajectories and appearances and further generalizes the finding across various environmental conditions. We demonstrate the applicability of our entitativity modeling for trajectory computation for active surveillance and dynamic intervention in simulated robot-human interaction scenarios. Our approach empirically shows that various levels of entitative robots can be used to both avoid and influence pedestrians while not eliciting strong emotional reactions, giving multi-robot systems social invisibility.

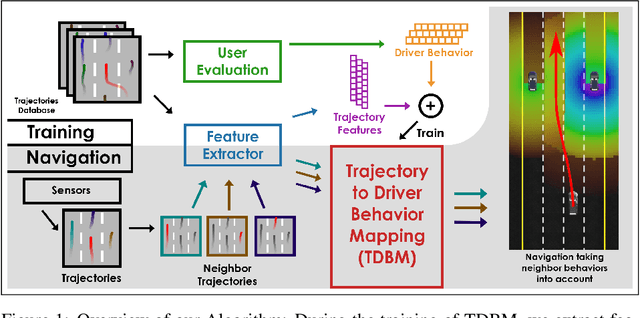

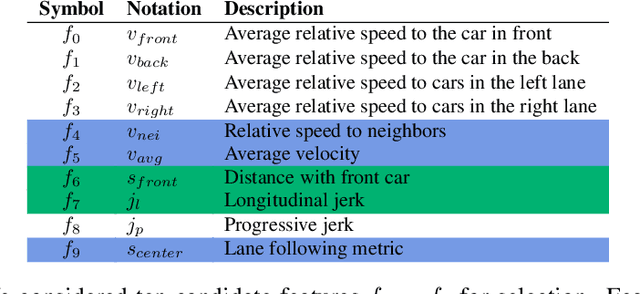

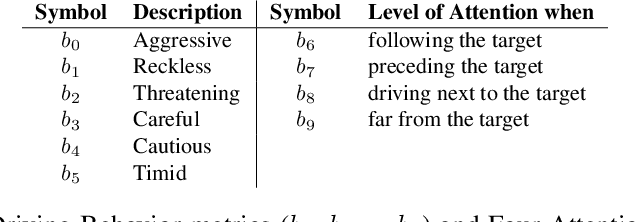

Identifying Driver Behaviors using Trajectory Features for Vehicle Navigation

Mar 16, 2018

Abstract: We present a novel approach to automatically identify driver behaviors from vehicle trajectories and use them for safe navigation of autonomous vehicles. We propose a novel set of features that can be easily extracted from car trajectories. We derive a data-driven mapping between these features and six driver behaviors using an elaborate web-based user study. We also compute a summarized score indicating the level of awareness that is needed while driving next to other vehicles. Finally, we incorporate our algorithm into a vehicle navigation simulation system and demonstrate its benefits in terms of safer real-time navigation while driving next to aggressive or dangerous drivers.
