Kurinchi Gurusamy

Voice-assisted Image Labelling for Endoscopic Ultrasound Classification using Neural Networks

Oct 12, 2021

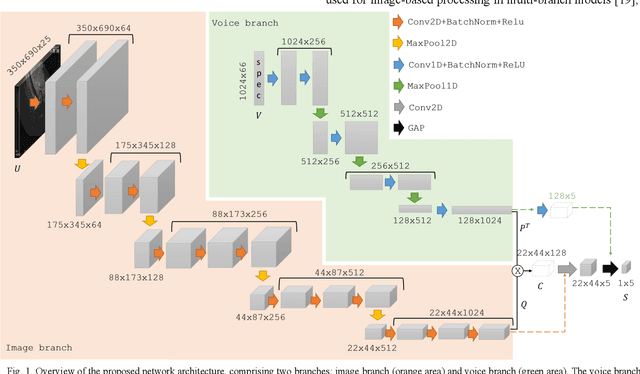

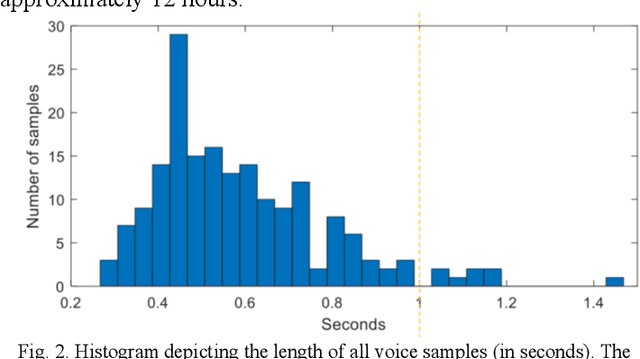

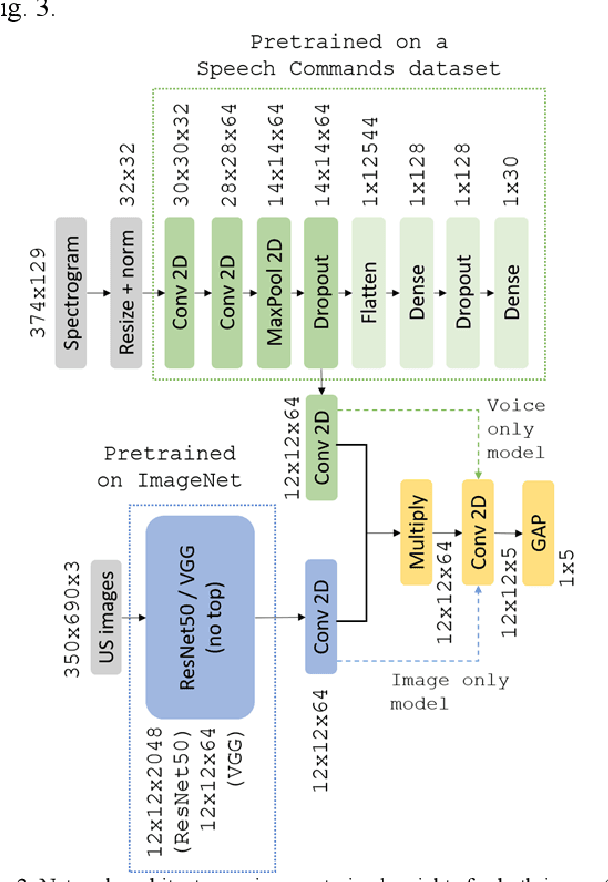

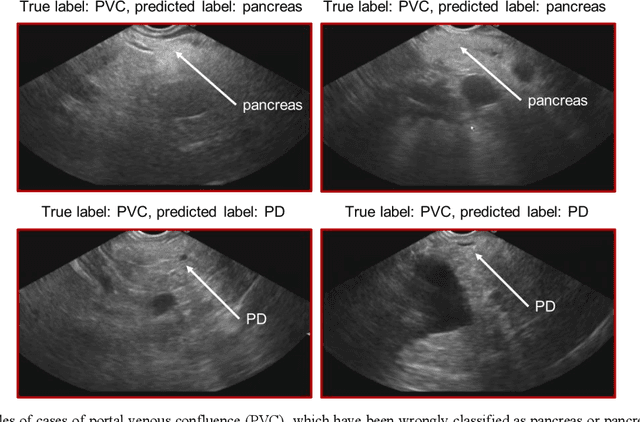

Abstract: Ultrasound imaging is a commonly used technology for visualising patient anatomy in real-time during diagnostic and therapeutic procedures. High operator dependency and low reproducibility make ultrasound imaging and interpretation challenging, with a steep learning curve. Automatic image classification using deep learning has the potential to overcome some of these challenges by supporting ultrasound training in novices, as well as aiding ultrasound image interpretation in patients with complex pathology for more experienced practitioners. However, the use of deep learning methods requires a large amount of data in order to provide accurate results. Labelling large ultrasound datasets is a challenging task because labels are retrospectively assigned to 2D images without the 3D spatial context available in vivo or that would be inferred while visually tracking structures between frames during the procedure. In this work, we propose a multi-modal convolutional neural network (CNN) architecture that labels endoscopic ultrasound (EUS) images from raw verbal comments provided by a clinician during the procedure. We use a CNN composed of two branches, one for voice data and another for image data, which are joined to predict image labels from the spoken names of anatomical landmarks. The network was trained using recorded verbal comments from expert operators. Our results show a prediction accuracy of 76% at image level on a dataset with 5 different labels. We conclude that the addition of spoken commentaries can increase the performance of ultrasound image classification, and eliminate the burden of manually labelling large EUS datasets necessary for deep learning applications.

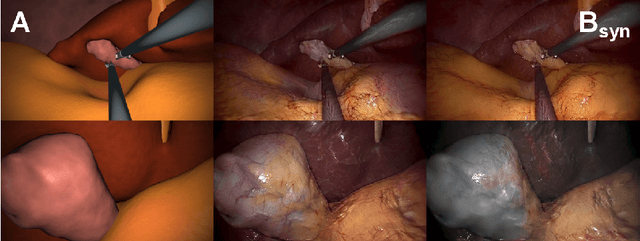

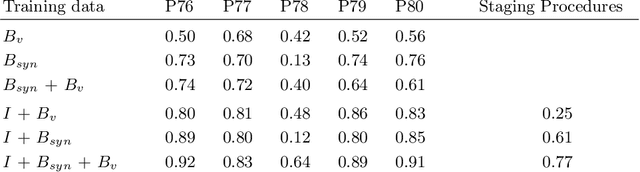

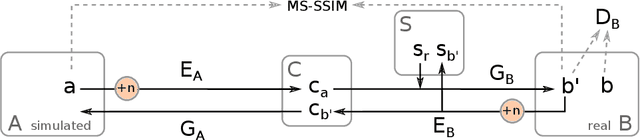

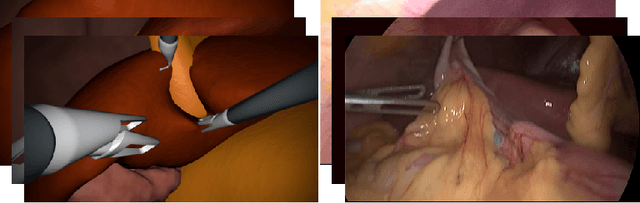

Generating large labeled data sets for laparoscopic image processing tasks using unpaired image-to-image translation

Jul 05, 2019

Abstract: In the medical domain, the lack of large training data sets and benchmarks is often a limiting factor for training deep neural networks. In contrast to expensive manual labeling, computer simulations can generate large and fully labeled data sets with a minimum of manual effort. However, models that are trained on simulated data usually do not translate well to real scenarios. To bridge the domain gap between simulated and real laparoscopic images, we exploit recent advances in unpaired image-to-image translation. We extend an image-to-image translation method to generate a diverse multitude of realistic-looking synthetic images based on images from a simple laparoscopy simulation. By incorporating means to ensure that the image content is preserved during the translation process, we ensure that the labels given for the simulated images remain valid for their realistic-looking translations. This way, we are able to generate a large, fully labeled synthetic data set of laparoscopic images with realistic appearance. We show that this data set can be used to train models for the task of liver segmentation of laparoscopic images. We achieve average Dice scores of up to 0.89 in some patients without manually labeling a single laparoscopic image and show that using our synthetic data to pre-train models can greatly improve their performance. The synthetic data set will be made publicly available, fully labeled with segmentation maps, depth maps, normal maps, and positions of tools and camera (http://opencas.dkfz.de/image2image).
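For readers unfamiliar with the Dice score reported in this abstract, the following is a minimal sketch of how the metric is commonly computed for a pair of binary segmentation masks. It is not the authors' evaluation code: the function name `dice_score`, the nested-list mask format, and the convention for two empty masks are all assumptions made for illustration.

```python
def dice_score(pred, target):
    """Dice coefficient 2*|A ∩ B| / (|A| + |B|) for two binary masks.

    Masks are nested lists of 0/1 values (a hypothetical format chosen
    to keep this sketch free of third-party dependencies).
    """
    flat_pred = [int(bool(v)) for row in pred for v in row]
    flat_target = [int(bool(v)) for row in target for v in row]
    if len(flat_pred) != len(flat_target):
        raise ValueError("masks must have the same number of pixels")

    # Pixels marked as foreground in both masks.
    intersection = sum(p & t for p, t in zip(flat_pred, flat_target))
    total = sum(flat_pred) + sum(flat_target)

    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy 2x3 masks: 3 foreground pixels each, 2 of them shared.
pred = [[0, 1, 1],
        [0, 1, 0]]
target = [[0, 1, 0],
          [0, 1, 1]]
score = dice_score(pred, target)
print(f"Dice: {score:.3f}")
```

A score of 1.0 means the predicted and reference masks overlap exactly, and 0.0 means they share no foreground pixels.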

Augmented Reality needle ablation guidance tool for Irreversible Electroporation in the pancreas

Feb 09, 2018

Abstract: Irreversible electroporation (IRE) is a soft tissue ablation technique suitable for treatment of inoperable tumours in the pancreas. The process involves applying a high voltage electric field to the tissue containing the mass using needle electrodes, leaving cancerous cells irreversibly damaged and vulnerable to apoptosis. Efficacy of the treatment depends heavily on the accuracy of needle placement and requires a high degree of skill from the operator. In this paper, we describe an Augmented Reality (AR) system designed to overcome the challenges associated with planning and guiding the needle insertion process. Our solution, based on the HoloLens (Microsoft, USA) platform, tracks the position of the headset, needle electrodes and ultrasound (US) probe in space. The proof-of-concept implementation of the system uses this tracking data to render real-time holographic guides on the HoloLens, giving the user insight into the current progress of needle insertion and an indication of the target needle trajectory. The operator's field of view is augmented using visual guides and real-time US feed rendered on a holographic plane, eliminating the need to consult external monitors. Based on these early prototypes, we are aiming to develop a system that will lower the skill level required for IRE while increasing overall accuracy of needle insertion and, hence, the likelihood of successful treatment.
