Konstantina Nikita

A modular framework for automated evaluation of procedural content generation in serious games with deep reinforcement learning agents

May 22, 2025

Abstract:Serious Games (SGs) are nowadays shifting focus to include procedural content generation (PCG) in the development process as a means of offering personalized and enhanced player experience. However, the development of a framework to assess the impact of PCG techniques when integrated into SGs remains particularly challenging. This study proposes a methodology for automated evaluation of PCG integration in SGs, incorporating deep reinforcement learning (DRL) game testing agents. To validate the proposed framework, a previously introduced SG featuring card game mechanics and incorporating three different versions of PCG for nonplayer character (NPC) creation has been deployed. Version 1 features random NPC creation, while versions 2 and 3 utilize a genetic algorithm approach. These versions are used to test the impact of different dynamic SG environments on the proposed framework's agents. The obtained results highlight the superiority of the DRL game testing agents trained on Versions 2 and 3 over those trained on Version 1 in terms of win rate (i.e. number of wins per played games) and training time. More specifically, within the execution of a test emulating regular gameplay, both Versions 2 and 3 peaked at a 97% win rate and achieved statistically significant higher (p=0009) win rates compared to those achieved in Version 1 that peaked at 94%. Overall, results advocate towards the proposed framework's capability to produce meaningful data for the evaluation of procedurally generated content in SGs.

A comprehensive interpretable machine learning framework for Mild Cognitive Impairment and Alzheimer's disease diagnosis

Dec 12, 2024

Abstract:An interpretable machine learning (ML) framework is introduced to enhance the diagnosis of Mild Cognitive Impairment (MCI) and Alzheimer's disease (AD) by ensuring robustness of the ML models' interpretations. The dataset used comprises volumetric measurements from brain MRI and genetic data from healthy individuals and patients with MCI/AD, obtained through the Alzheimer's Disease Neuroimaging Initiative. The existing class imbalance is addressed by an ensemble learning approach, while various attribution-based and counterfactual-based interpretability methods are leveraged towards producing diverse explanations related to the pathophysiology of MCI/AD. A unification method combining SHAP with counterfactual explanations assesses the interpretability techniques' robustness. The best performing model yielded 87.5% balanced accuracy and 90.8% F1-score. The attribution-based interpretability methods highlighted significant volumetric and genetic features related to MCI/AD risk. The unification method provided useful insights regarding those features' necessity and sufficiency, further showcasing their significance in MCI/AD diagnosis.

AIris: An AI-powered Wearable Assistive Device for the Visually Impaired

May 13, 2024

Abstract:Assistive technologies for the visually impaired have evolved to facilitate interaction with a complex and dynamic world. In this paper, we introduce AIris, an AI-powered wearable device that provides environmental awareness and interaction capabilities to visually impaired users. AIris combines a sophisticated camera mounted on eyewear with a natural language processing interface, enabling users to receive real-time auditory descriptions of their surroundings. We have created a functional prototype system that operates effectively in real-world conditions. AIris demonstrates the ability to accurately identify objects and interpret scenes, providing users with a sense of spatial awareness previously unattainable with traditional assistive devices. The system is designed to be cost-effective and user-friendly, supporting general and specialized tasks: face recognition, scene description, text reading, object recognition, money counting, note-taking, and barcode scanning. AIris marks a transformative step, bringing AI enhancements to assistive technology, enabling rich interactions with a human-like feel.

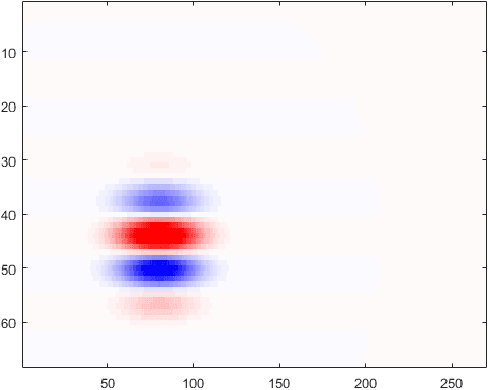

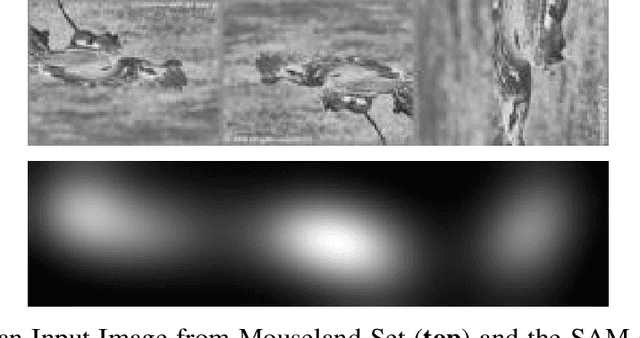

Visual attention information can be traced on cortical response but not on the retina: evidence from electrophysiological mouse data using natural images as stimuli

Aug 01, 2023

Abstract:Visual attention forms the basis of understanding the visual world. In this work we follow a computational approach to investigate the biological basis of visual attention. We analyze retinal and cortical electrophysiological data from mouse. The visual stimuli are natural images depicting real-world scenes. Our results show that in primary visual cortex (V1), a subset of around $10\%$ of the neurons responds differently to salient versus non-salient visual regions. Visual attention information was not traced in retinal response. It appears that the retina remains naive concerning visual attention; cortical response gets modulated to interpret visual attention information. Experimental animal studies may be designed to further explore the biological basis of visual attention we traced in this study. In applied and translational science, our study contributes to the design of improved visual prostheses systems -- systems that create artificial visual percepts for visually impaired individuals by electronic implants placed on either the retina or the cortex.

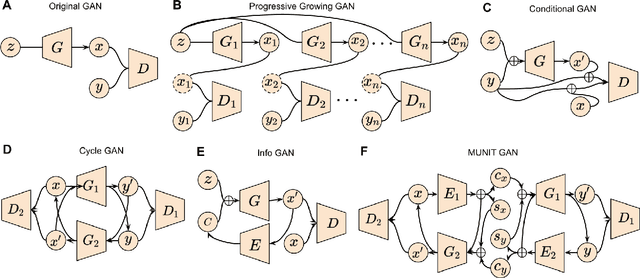

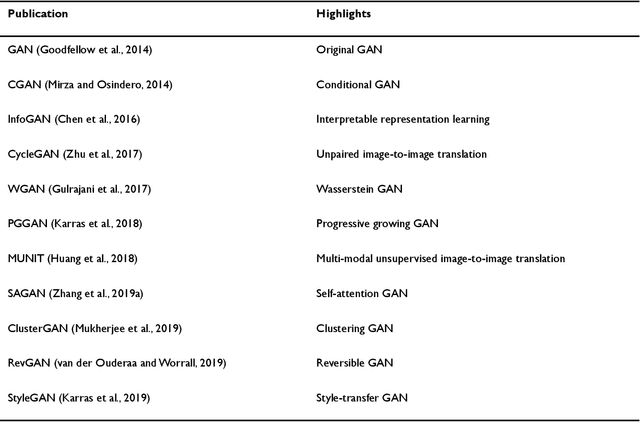

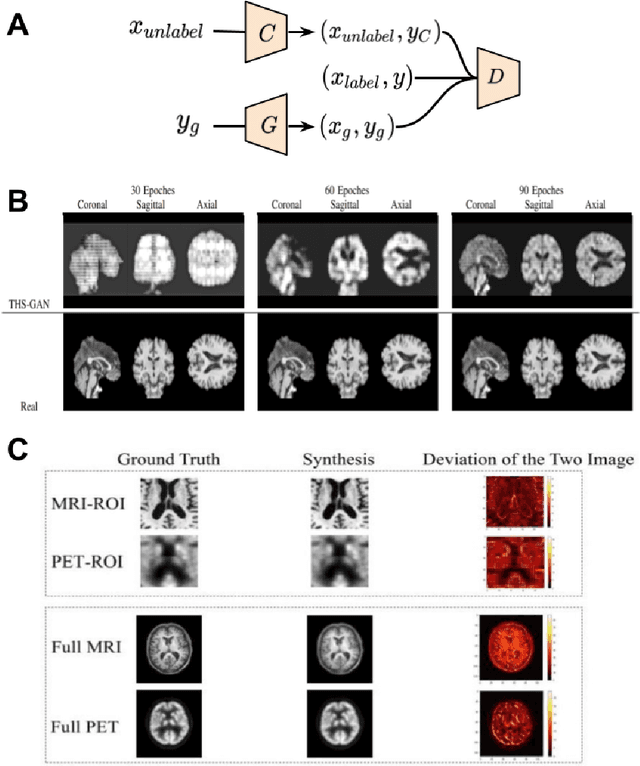

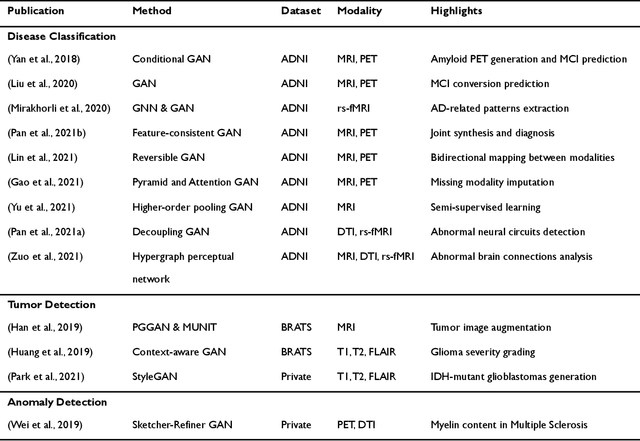

Applications of Generative Adversarial Networks in Neuroimaging and Clinical Neuroscience

Jun 14, 2022

Abstract:Generative adversarial networks (GANs) are one powerful type of deep learning models that have been successfully utilized in numerous fields. They belong to a broader family called generative methods, which generate new data with a probabilistic model by learning sample distribution from real examples. In the clinical context, GANs have shown enhanced capabilities in capturing spatially complex, nonlinear, and potentially subtle disease effects compared to traditional generative methods. This review appraises the existing literature on the applications of GANs in imaging studies of various neurological conditions, including Alzheimer's disease, brain tumors, brain aging, and multiple sclerosis. We provide an intuitive explanation of various GAN methods for each application and further discuss the main challenges, open questions, and promising future directions of leveraging GANs in neuroimaging. We aim to bridge the gap between advanced deep learning methods and neurology research by highlighting how GANs can be leveraged to support clinical decision making and contribute to a better understanding of the structural and functional patterns of brain diseases.
