Maria Athanasiou

Comparative assessment of fairness definitions and bias mitigation strategies in machine learning-based diagnosis of Alzheimer's disease from MR images

May 29, 2025

Abstract:The present study performs a comprehensive fairness analysis of machine learning (ML) models for the diagnosis of Mild Cognitive Impairment (MCI) and Alzheimer's disease (AD) from MRI-derived neuroimaging features. Biases associated with age, race, and gender in a multi-cohort dataset, as well as the influence of proxy features encoding these sensitive attributes, are investigated. The reliability of various fairness definitions and metrics in the identification of such biases is also assessed. Based on the most appropriate fairness measures, a comparative analysis of widely used pre-processing, in-processing, and post-processing bias mitigation strategies is performed. Moreover, a novel composite measure is introduced to quantify the trade-off between fairness and performance by considering the F1-score and the equalized odds ratio, making it appropriate for medical diagnostic applications. The obtained results reveal the existence of biases related to age and race, while no significant gender bias is observed. The deployed mitigation strategies yield varying improvements in terms of fairness across the different sensitive attributes and studied subproblems. For race and gender, Reject Option Classification improves equalized odds by 46% and 57%, respectively, and achieves harmonic mean scores of 0.75 and 0.80 in the MCI versus AD subproblem, whereas for age, in the same subproblem, adversarial debiasing yields the highest equalized odds improvement of 40% with a harmonic mean score of 0.69. Insights are provided into how variations in AD neuropathology and risk factors, associated with demographic characteristics, influence model fairness.
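The abstract describes the composite measure only as combining the F1-score with the equalized odds ratio and reports it as a harmonic mean. The sketch below is one possible reading, not the authors' implementation. It assumes binary labels, that every group contains both classes, and that the equalized odds ratio is the smaller of the between-group min/max ratios of the true positive rate and the false positive rate. All function names are hypothetical.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Return {group: (TPR, FPR)} for binary labels (1 = positive).

    Assumes every group contains at least one positive and one negative case.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        key = ("tp" if p else "fn") if t else ("fp" if p else "tn")
        counts[g][key] += 1
    rates = {}
    for g, c in counts.items():
        tpr = c["tp"] / (c["tp"] + c["fn"])
        fpr = c["fp"] / (c["fp"] + c["tn"])
        rates[g] = (tpr, fpr)
    return rates


def equalized_odds_ratio(y_true, y_pred, groups):
    """Smaller of the min/max between-group ratios of TPR and FPR (assumed definition)."""
    rates = group_rates(y_true, y_pred, groups)

    def ratio(values):
        # Convention chosen here: if every group has rate 0, treat them as equal.
        return 1.0 if max(values) == 0 else min(values) / max(values)

    tprs = [r[0] for r in rates.values()]
    fprs = [r[1] for r in rates.values()]
    return min(ratio(tprs), ratio(fprs))


def f1_score(y_true, y_pred):
    """F1-score of the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def harmonic_mean(a, b):
    """Harmonic mean of two scores in [0, 1]; 0 if both are 0."""
    return 2 * a * b / (a + b) if (a + b) else 0.0


def fairness_performance_score(y_true, y_pred, groups):
    """Composite score: harmonic mean of F1 and the equalized odds ratio."""
    return harmonic_mean(
        f1_score(y_true, y_pred),
        equalized_odds_ratio(y_true, y_pred, groups),
    )
```

Because the harmonic mean is dominated by the smaller of its two inputs, a model cannot reach a high composite score by excelling at only one of performance or fairness.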

A modular framework for automated evaluation of procedural content generation in serious games with deep reinforcement learning agents

May 22, 2025

Abstract:Serious Games (SGs) are nowadays shifting focus to include procedural content generation (PCG) in the development process as a means of offering personalized and enhanced player experience. However, the development of a framework to assess the impact of PCG techniques when integrated into SGs remains particularly challenging. This study proposes a methodology for automated evaluation of PCG integration in SGs, incorporating deep reinforcement learning (DRL) game testing agents. To validate the proposed framework, a previously introduced SG featuring card game mechanics and incorporating three different versions of PCG for nonplayer character (NPC) creation has been deployed. Version 1 features random NPC creation, while Versions 2 and 3 utilize a genetic algorithm approach. These versions are used to test the impact of different dynamic SG environments on the proposed framework's agents. The obtained results highlight the superiority of the DRL game testing agents trained on Versions 2 and 3 over those trained on Version 1 in terms of win rate (i.e., number of wins per games played) and training time. More specifically, within the execution of a test emulating regular gameplay, both Versions 2 and 3 peaked at a 97% win rate and achieved statistically significantly higher (p=0009) win rates compared to those achieved in Version 1, which peaked at 94%. Overall, the results support the proposed framework's capability to produce meaningful data for the evaluation of procedurally generated content in SGs.

A comprehensive interpretable machine learning framework for Mild Cognitive Impairment and Alzheimer's disease diagnosis

Dec 12, 2024

Abstract:An interpretable machine learning (ML) framework is introduced to enhance the diagnosis of Mild Cognitive Impairment (MCI) and Alzheimer's disease (AD) by ensuring robustness of the ML models' interpretations. The dataset used comprises volumetric measurements from brain MRI and genetic data from healthy individuals and patients with MCI/AD, obtained through the Alzheimer's Disease Neuroimaging Initiative. The existing class imbalance is addressed by an ensemble learning approach, while various attribution-based and counterfactual-based interpretability methods are leveraged towards producing diverse explanations related to the pathophysiology of MCI/AD. A unification method combining SHAP with counterfactual explanations assesses the interpretability techniques' robustness. The best performing model yielded 87.5% balanced accuracy and 90.8% F1-score. The attribution-based interpretability methods highlighted significant volumetric and genetic features related to MCI/AD risk. The unification method provided useful insights regarding those features' necessity and sufficiency, further showcasing their significance in MCI/AD diagnosis.

Sustaining model performance for covid-19 detection from dynamic audio data: Development and evaluation of a comprehensive drift-adaptive framework

Sep 28, 2024

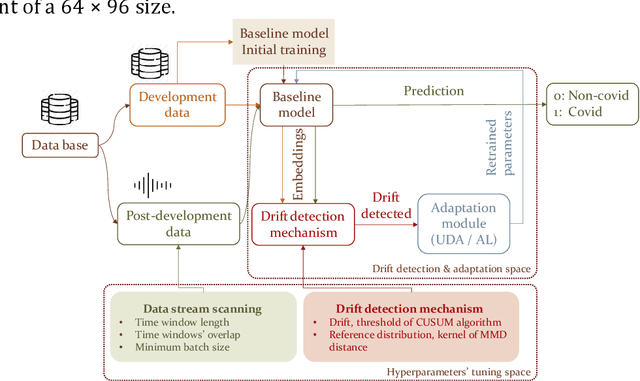

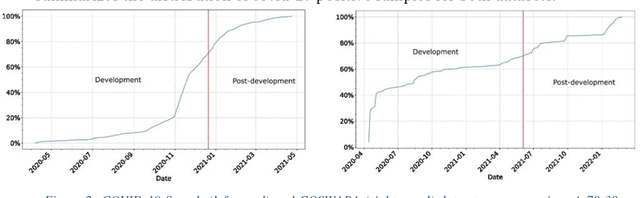

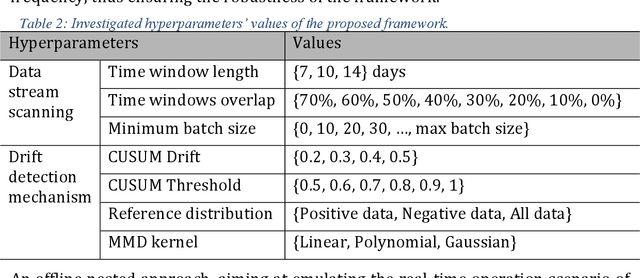

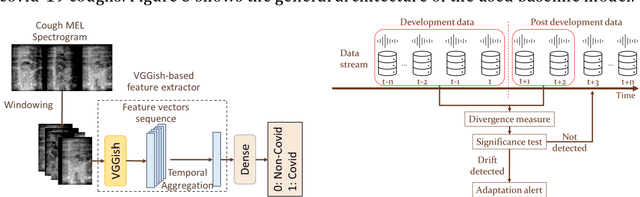

Abstract:Background: The COVID-19 pandemic has highlighted the need for robust diagnostic tools capable of detecting the disease from diverse and evolving data sources. Machine learning models, especially convolutional neural networks (CNNs), have shown promise. However, the dynamic nature of real-world data can lead to model drift, where performance degrades over time as the underlying data distribution changes. Addressing this challenge is crucial to maintaining accuracy and reliability in diagnostic applications. Objective: This study aims to develop a framework that monitors model drift and employs adaptation mechanisms to mitigate performance fluctuations in COVID-19 detection models trained on dynamic audio data. Methods: Two crowd-sourced COVID-19 audio datasets, COVID-19 Sounds and COSWARA, were used. Each was divided into development and post-development periods. A baseline CNN model was trained and evaluated using cough recordings from the development period. Maximum mean discrepancy (MMD) was used to detect changes in data distributions and model performance between periods. Upon detecting drift, retraining was triggered to update the baseline model. Two adaptation approaches were compared: unsupervised domain adaptation (UDA) and active learning (AL). Results: UDA improved balanced accuracy by up to 22% and 24% for the COVID-19 Sounds and COSWARA datasets, respectively. AL yielded even greater improvements, with increases of up to 30% and 60%, respectively. Conclusions: The proposed framework addresses model drift in COVID-19 detection, enabling continuous adaptation to evolving data. This approach ensures sustained model performance, contributing to robust diagnostic tools for COVID-19 and potentially other infectious diseases.
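The drift check described above can be illustrated with a minimal sketch of the maximum mean discrepancy between a development-period sample and a post-development sample. This is not the authors' code. It assumes feature vectors are already extracted from the recordings, uses an RBF kernel with a hand-picked `gamma`, and compares the biased MMD² estimate against a fixed, hypothetical `threshold`. In practice the decision would more likely rest on a permutation test.

```python
import math


def rbf_kernel(x, y, gamma):
    """Gaussian (RBF) kernel between two equal-length feature vectors."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


def mmd_squared(xs, ys, gamma=1.0):
    """Biased estimate of the squared MMD between samples xs and ys."""

    def mean_kernel(a, b):
        total = sum(rbf_kernel(u, v, gamma) for u in a for v in b)
        return total / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2 * mean_kernel(xs, ys)


def drift_detected(reference, incoming, threshold, gamma=1.0):
    """Flag drift when the MMD^2 estimate exceeds a (hypothetical) threshold."""
    return mmd_squared(reference, incoming, gamma) > threshold


if __name__ == "__main__":
    development = [(0.0,), (0.1,), (0.2,)]
    post_development = [(5.0,), (5.1,), (5.2,)]
    if drift_detected(development, post_development, threshold=0.5):
        print("Drift detected: trigger retraining or adaptation")
```

A flagged drift would then trigger one of the adaptation routes named in the abstract, unsupervised domain adaptation or active learning.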

Stratification of carotid atheromatous plaque using interpretable deep learning methods on B-mode ultrasound images

Feb 04, 2022

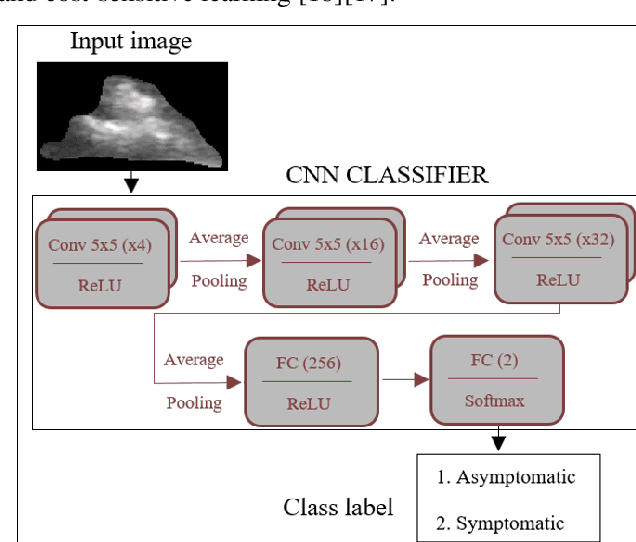

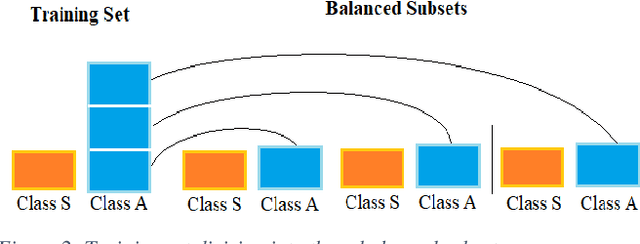

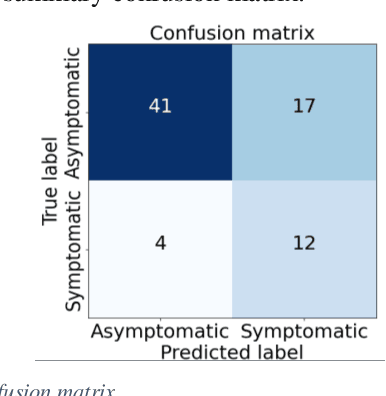

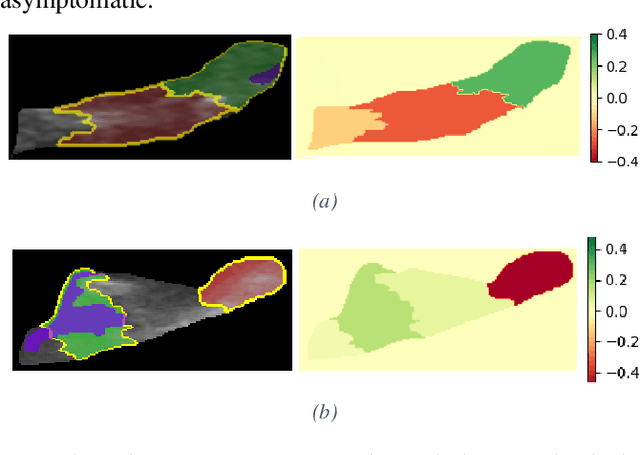

Abstract:Carotid atherosclerosis is the major cause of ischemic stroke resulting in significant rates of mortality and disability annually. Early diagnosis of such cases is of great importance, since it enables clinicians to apply a more effective treatment strategy. This paper introduces an interpretable classification approach of carotid ultrasound images for the risk assessment and stratification of patients with carotid atheromatous plaque. To address the highly imbalanced distribution of patients between the symptomatic and asymptomatic classes (16 vs 58, respectively), an ensemble learning scheme based on a sub-sampling approach was applied along with a two-phase, cost-sensitive learning strategy that uses the original and a resampled data set. Convolutional Neural Networks (CNNs) were utilized for building the primary models of the ensemble. A six-layer deep CNN was used to automatically extract features from the images, followed by a classification stage of two fully connected layers. The obtained results (Area Under the ROC Curve (AUC): 73%, sensitivity: 75%, specificity: 70%) indicate that the proposed approach achieved acceptable discrimination performance. Finally, interpretability methods were applied to the model's predictions in order to reveal insights into the model's decision process as well as to enable the identification of novel image biomarkers for the stratification of patients with carotid atheromatous plaque.

Clinical Relevance: The integration of interpretability methods with deep learning strategies can facilitate the identification of novel ultrasound image biomarkers for the stratification of patients with carotid atheromatous plaque.
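The abstract does not spell out how the sub-sampling ensemble is built, so the following is only a sketch of one common variant. The majority class is shuffled and split into roughly minority-sized parts, each part is paired with all minority cases to train one base model, and the base models' predicted probabilities are averaged. The function names and the averaging rule are assumptions, and the two-phase cost-sensitive step is not covered.

```python
import random


def balanced_subsets(minority, majority, seed=0):
    """Pair every minority case with a disjoint slice of the majority class.

    Returns one training subset per base model. The number of subsets is
    ceil(len(majority) / len(minority)), so each majority case is used once.
    """
    rng = random.Random(seed)
    shuffled = list(majority)
    rng.shuffle(shuffled)
    n_subsets = -(-len(shuffled) // len(minority))  # ceiling division
    # Striding keeps the slice sizes within one case of each other.
    slices = [shuffled[i::n_subsets] for i in range(n_subsets)]
    return [list(minority) + part for part in slices]


def ensemble_probability(base_probabilities):
    """Average the positive-class probabilities of the base models."""
    return sum(base_probabilities) / len(base_probabilities)


if __name__ == "__main__":
    # Class sizes taken from the abstract: 16 symptomatic vs 58 asymptomatic.
    symptomatic = [("symptomatic", i) for i in range(16)]
    asymptomatic = [("asymptomatic", i) for i in range(58)]
    subsets = balanced_subsets(symptomatic, asymptomatic)
    print(len(subsets), [len(s) for s in subsets])
```

With the class sizes reported in the abstract, this split gives four near-balanced training subsets, one per base CNN.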

Interpretability methods of machine learning algorithms with applications in breast cancer diagnosis

Feb 04, 2022

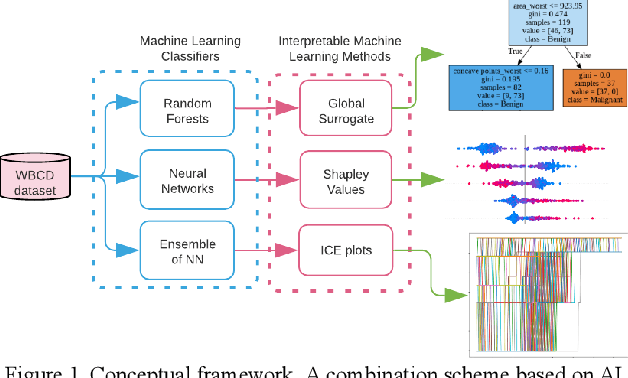

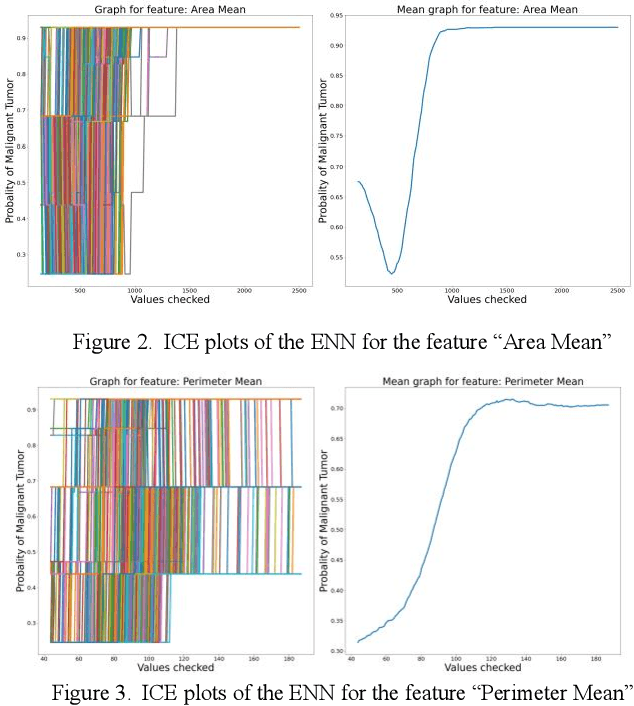

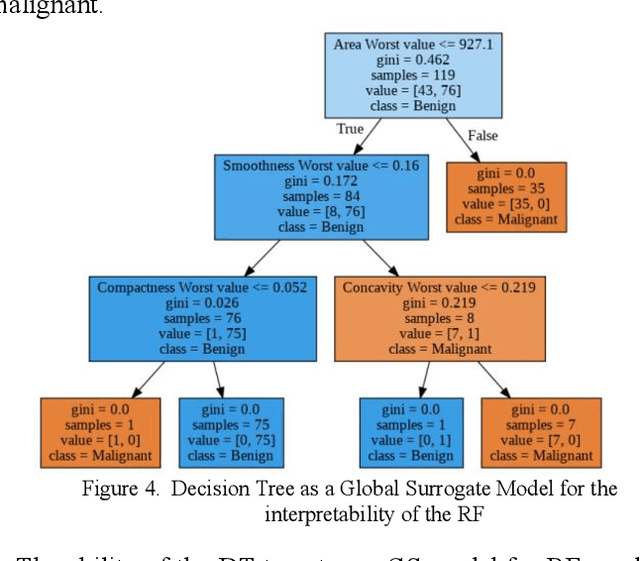

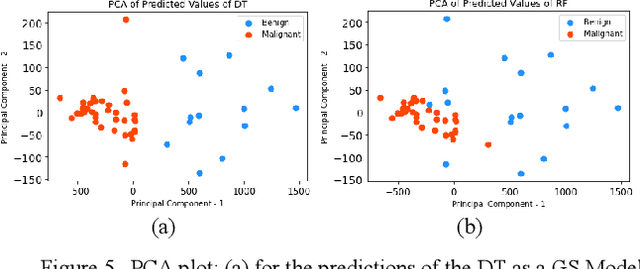

Abstract:Early detection of breast cancer is a powerful tool towards decreasing its socioeconomic burden. Although artificial intelligence (AI) methods have shown remarkable results towards this goal, their "black box" nature hinders their wide adoption in clinical practice. To address the need for AI-guided breast cancer diagnosis, interpretability methods can be utilized. In this study, we used AI methods, i.e., Random Forests (RF), Neural Networks (NN) and Ensembles of Neural Networks (ENN), towards this goal and explained and optimized their performance through interpretability techniques, such as the Global Surrogate (GS) method, the Individual Conditional Expectation (ICE) plots and the Shapley values (SV). The Wisconsin Diagnostic Breast Cancer (WDBC) dataset of the open UCI repository was used for the training and evaluation of the AI algorithms. The best performance for breast cancer diagnosis was achieved by the proposed ENN (96.6% accuracy and 0.96 area under the ROC curve), and its predictions were explained by ICE plots, indicating that its decisions were compliant with current medical knowledge and can be further utilized to gain new insights into the pathophysiological mechanisms of breast cancer. Feature selection based on features' importance according to the GS model improved the performance of the RF (raising the accuracy from 96.49% to 97.18% and the area under the ROC curve from 0.96 to 0.97) and feature selection based on features' importance according to SV improved the performance of the NN (raising the accuracy from 94.6% to 95.53% and the area under the ROC curve from 0.94 to 0.95). Compared to other approaches on the same dataset, our proposed models demonstrated state-of-the-art performance while being interpretable.
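To make the Shapley value idea concrete, the sketch below computes exact Shapley attributions for a single prediction by averaging each feature's marginal contribution over all feature orderings. It is an illustration only, since the abstract does not state which estimator was used. The sketch assumes that "absent" features are replaced by a fixed `baseline` vector, and `predict`, `instance`, and `baseline` are hypothetical names. Exact enumeration grows factorially with the number of features, so with the 30 features of WDBC an approximation would be needed in practice.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values of `predict` at `instance` relative to `baseline`.

    predict  : callable taking a list of feature values, returning a number
    instance : feature values of the case being explained
    baseline : reference values used for features not yet "switched on"
    """
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = predict(current)
        for i in order:
            current[i] = instance[i]      # switch feature i to its real value
            updated = predict(current)
            totals[i] += updated - previous  # marginal contribution of i
            previous = updated
    return [total / len(orderings) for total in totals]


if __name__ == "__main__":
    # Toy linear model: each attribution equals weight * (value - baseline).
    def toy_model(x):
        return 2 * x[0] + 3 * x[1] - x[2]

    print(shapley_values(toy_model, [1, 2, 3], [0, 0, 0]))
```

Ranking features by the mean absolute attribution across cases is one way such values can drive the importance-based feature selection the abstract mentions.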

An explainable XGBoost-based approach towards assessing the risk of cardiovascular disease in patients with Type 2 Diabetes Mellitus

Sep 14, 2020

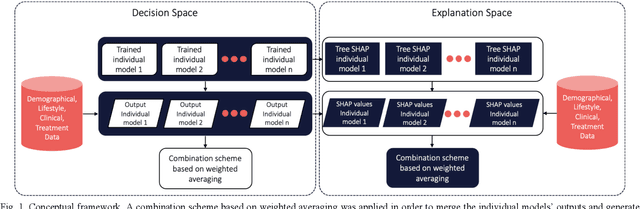

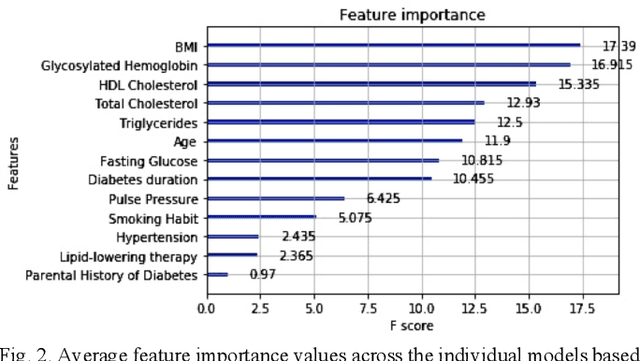

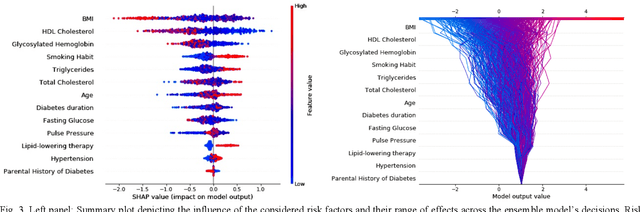

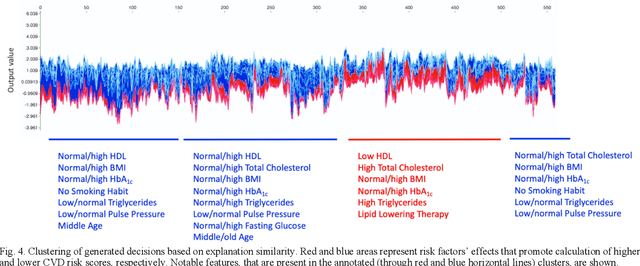

Abstract:Cardiovascular Disease (CVD) is an important cause of disability and death among individuals with Diabetes Mellitus (DM). International clinical guidelines for the management of Type 2 DM (T2DM) are founded on primary and secondary prevention and favor the evaluation of CVD-related risk factors towards appropriate treatment initiation. CVD risk prediction models can provide valuable tools for optimizing the frequency of medical visits and performing timely preventive and therapeutic interventions against CVD events. The integration of explainability modalities in these models can enhance human understanding of the reasoning process, maximize transparency and strengthen trust towards the models' adoption in clinical practice. The aim of the present study is to develop and evaluate an explainable personalized risk prediction model for the fatal or non-fatal CVD incidence in T2DM individuals. An explainable approach based on the eXtreme Gradient Boosting (XGBoost) and the Tree SHAP (SHapley Additive exPlanations) method is deployed for the calculation of the 5-year CVD risk and the generation of individual explanations of the model's decisions. Data from the 5-year follow-up of 560 patients with T2DM are used for development and evaluation purposes. The obtained results (AUC = 71.13%) indicate the potential of the proposed approach to handle the unbalanced nature of the used dataset, while providing clinically meaningful insights about the ensemble model's decision process.
