Theofanis Ganitidis

Sustaining model performance for covid-19 detection from dynamic audio data: Development and evaluation of a comprehensive drift-adaptive framework

Sep 28, 2024

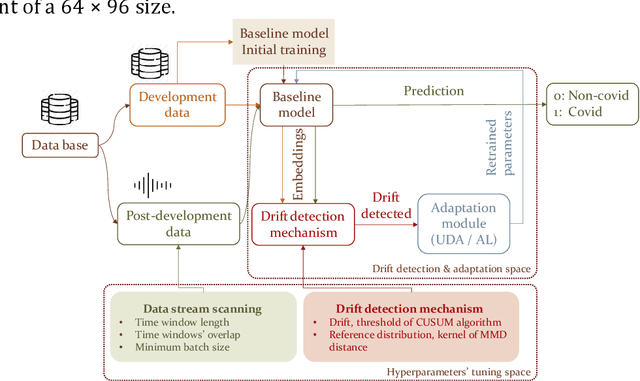

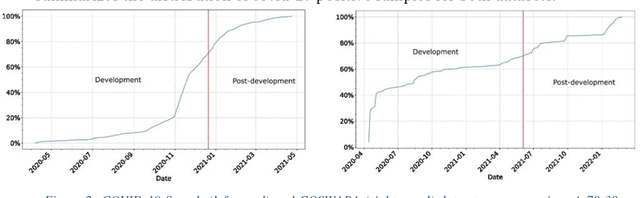

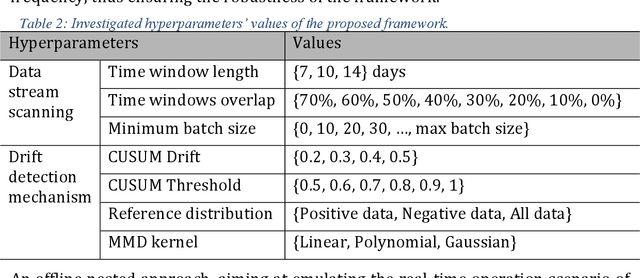

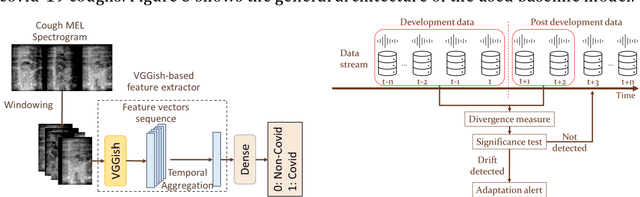

Abstract: Background: The COVID-19 pandemic has highlighted the need for robust diagnostic tools capable of detecting the disease from diverse and evolving data sources. Machine learning models, especially convolutional neural networks (CNNs), have shown promise. However, the dynamic nature of real-world data can lead to model drift, where performance degrades over time as the underlying data distribution changes. Addressing this challenge is crucial to maintaining accuracy and reliability in diagnostic applications. Objective: This study aims to develop a framework that monitors model drift and employs adaptation mechanisms to mitigate performance fluctuations in COVID-19 detection models trained on dynamic audio data. Methods: Two crowd-sourced COVID-19 audio datasets, COVID-19 Sounds and COSWARA, were used. Each was divided into development and post-development periods. A baseline CNN model was trained and evaluated using cough recordings from the development period. Maximum mean discrepancy (MMD) was used to detect changes in data distributions and model performance between periods. Upon detecting drift, retraining was triggered to update the baseline model. Two adaptation approaches were compared: unsupervised domain adaptation (UDA) and active learning (AL). Results: UDA improved balanced accuracy by up to 22% and 24% for the COVID-19 Sounds and COSWARA datasets, respectively. AL yielded even greater improvements, with increases of up to 30% and 60%, respectively. Conclusions: The proposed framework addresses model drift in COVID-19 detection, enabling continuous adaptation to evolving data. This approach ensures sustained model performance, contributing to robust diagnostic tools for COVID-19 and potentially other infectious diseases.
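To make the drift-detection step in the Methods more concrete, here is a minimal sketch of an MMD-based check between a development-period sample and a later sample. It is not the authors' implementation: the RBF kernel, the default `gamma`, the permutation test, the `alpha` threshold, and the function names are all assumptions chosen for illustration, and the inputs are assumed to be fixed-length feature vectors (for example, embeddings of cough recordings).

```python
import numpy as np


def rbf_kernel(a, b, gamma):
    """Pairwise RBF kernel values between the rows of a and the rows of b."""
    sq_dist = (
        np.sum(a ** 2, axis=1)[:, None]
        + np.sum(b ** 2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))


def mmd2(x, y, gamma=None):
    """Biased estimate of the squared maximum mean discrepancy."""
    if gamma is None:
        gamma = 1.0 / x.shape[1]  # assumed default bandwidth, not from the paper
    return (
        rbf_kernel(x, x, gamma).mean()
        + rbf_kernel(y, y, gamma).mean()
        - 2.0 * rbf_kernel(x, y, gamma).mean()
    )


def drift_detected(reference, current, n_permutations=200, alpha=0.05, seed=0):
    """Permutation test: is the observed MMD larger than expected by chance?"""
    rng = np.random.default_rng(seed)
    observed = mmd2(reference, current)
    pooled = np.vstack([reference, current])
    n_ref = len(reference)
    at_least_as_large = 0
    for _ in range(n_permutations):
        perm = rng.permutation(len(pooled))
        shuffled = mmd2(pooled[perm[:n_ref]], pooled[perm[n_ref:]])
        if shuffled >= observed:
            at_least_as_large += 1
    p_value = (at_least_as_large + 1) / (n_permutations + 1)
    return p_value < alpha, observed, p_value


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    development = rng.normal(0.0, 1.0, size=(100, 8))
    post_development = rng.normal(1.5, 1.0, size=(100, 8))  # shifted mean
    flag, stat, p = drift_detected(development, post_development)
    print(f"drift={flag} mmd2={stat:.4f} p={p:.4f}")
```

In a pipeline like the one described, a positive flag would be the trigger for retraining or adaptation; how the threshold and window sizes are chosen in the study itself is not stated in the abstract.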

The smarty4covid dataset and knowledge base: a framework enabling interpretable analysis of audio signals

Jul 11, 2023

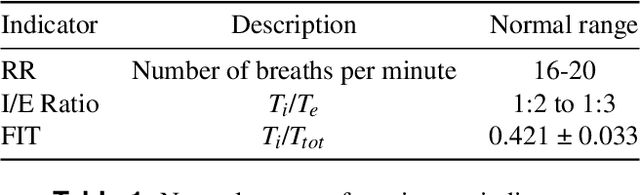

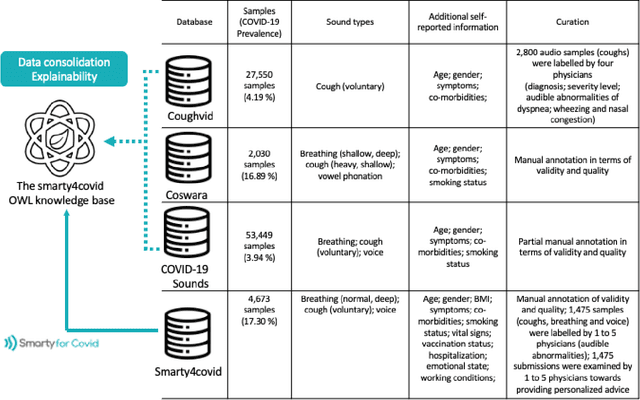

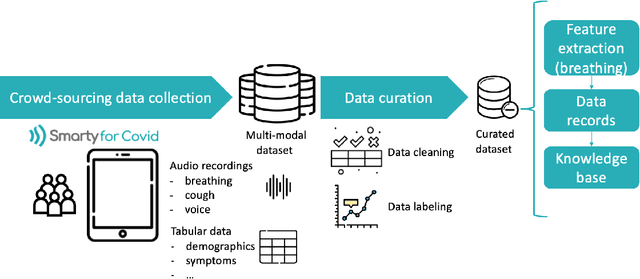

Abstract: Harnessing Artificial Intelligence (AI) and m-health to detect new biomarkers indicative of the onset and progression of respiratory abnormalities and conditions has attracted considerable scientific and research interest, especially during the COVID-19 pandemic. The smarty4covid dataset contains audio signals of cough (4,676), regular breathing (4,665), deep breathing (4,695) and voice (4,291), recorded on mobile devices through a crowd-sourcing approach. Other self-reported information is also included (e.g., COVID-19 virus tests), thus providing a comprehensive dataset for the development of COVID-19 risk detection models. The smarty4covid dataset is released in the form of a web-ontology language (OWL) knowledge base, enabling data consolidation from other relevant datasets, complex queries and reasoning. It has been used to develop models that: (i) extract clinically informative respiratory indicators from regular breathing records, and (ii) identify cough, breath and voice segments in crowd-sourced audio recordings. A new framework that uses the smarty4covid OWL knowledge base to generate counterfactual explanations for opaque AI-based COVID-19 risk detection models is proposed and validated.

Stratification of carotid atheromatous plaque using interpretable deep learning methods on B-mode ultrasound images

Feb 04, 2022

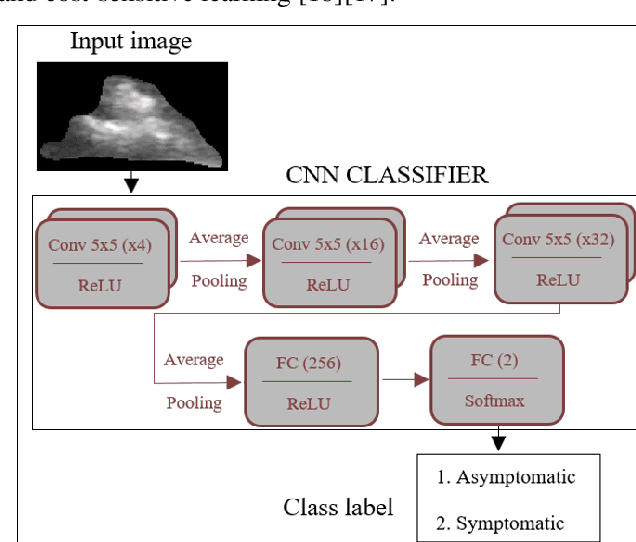

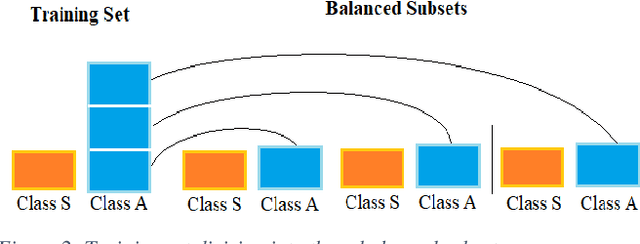

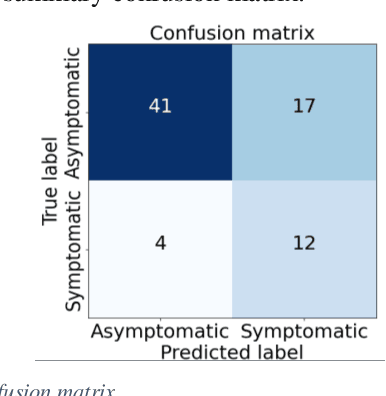

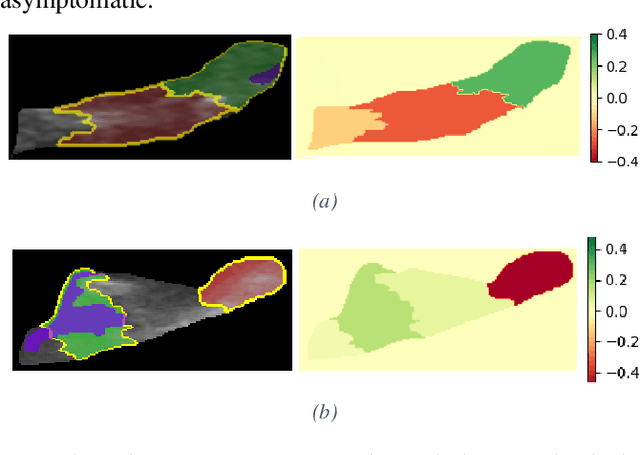

Abstract: Carotid atherosclerosis is the major cause of ischemic stroke, resulting in significant rates of mortality and disability annually. Early diagnosis of such cases is of great importance, since it enables clinicians to apply a more effective treatment strategy. This paper introduces an interpretable classification approach for carotid ultrasound images, aimed at the risk assessment and stratification of patients with carotid atheromatous plaque. To address the highly imbalanced distribution of patients between the symptomatic and asymptomatic classes (16 vs 58, respectively), an ensemble learning scheme based on a sub-sampling approach was applied along with a two-phase, cost-sensitive learning strategy that uses the original and a resampled data set. Convolutional Neural Networks (CNNs) were utilized for building the primary models of the ensemble. A six-layer deep CNN was used to automatically extract features from the images, followed by a classification stage of two fully connected layers. The obtained results (Area Under the ROC Curve (AUC): 73%, sensitivity: 75%, specificity: 70%) indicate that the proposed approach achieved acceptable discrimination performance. Finally, interpretability methods were applied to the model's predictions in order to reveal insights into the model's decision process and to enable the identification of novel image biomarkers for the stratification of patients with carotid atheromatous plaque. Clinical Relevance: The integration of interpretability methods with deep learning strategies can facilitate the identification of novel ultrasound image biomarkers for the stratification of patients with carotid atheromatous plaque.
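As an illustration of the sub-sampling idea mentioned above, the sketch below builds balanced training subsets for ensemble members from an imbalanced binary label vector (16 vs 58, as in the abstract). It is a sketch under stated assumptions, not the paper's method: the function names, the number of ensemble members, and the probability-averaging rule are hypothetical, and the CNN training and the two-phase cost-sensitive step are omitted.

```python
import numpy as np


def balanced_subsets(labels, n_members=5, seed=0):
    """Return one balanced index set per ensemble member.

    Every subset keeps all minority-class samples and adds an equally
    sized random draw (without replacement) from the majority class.
    Assumes binary labels.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(labels, return_counts=True)
    minority = classes[np.argmin(counts)]
    minority_idx = np.flatnonzero(labels == minority)
    majority_idx = np.flatnonzero(labels != minority)
    subsets = []
    for _ in range(n_members):
        drawn = rng.choice(majority_idx, size=len(minority_idx), replace=False)
        subsets.append(np.sort(np.concatenate([minority_idx, drawn])))
    return subsets


def average_vote(member_probs, threshold=0.5):
    """Combine members by averaging their predicted probabilities.

    Averaging is an assumed aggregation rule; the abstract does not say
    how the ensemble members are combined.
    """
    mean_prob = np.mean(np.asarray(member_probs), axis=0)
    return (mean_prob >= threshold).astype(int)


if __name__ == "__main__":
    # 1 = symptomatic (16 patients), 0 = asymptomatic (58 patients)
    labels = np.array([1] * 16 + [0] * 58)
    for i, idx in enumerate(balanced_subsets(labels, n_members=3)):
        print(i, len(idx), int(labels[idx].sum()))
```

Each index set would be used to train one primary model, so that every member sees all symptomatic cases alongside a different slice of the asymptomatic ones.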
