Khoi Khac Nguyen

Deep Reinforcement Learning for Intelligent Reflecting Surface-assisted D2D Communications

Aug 06, 2021

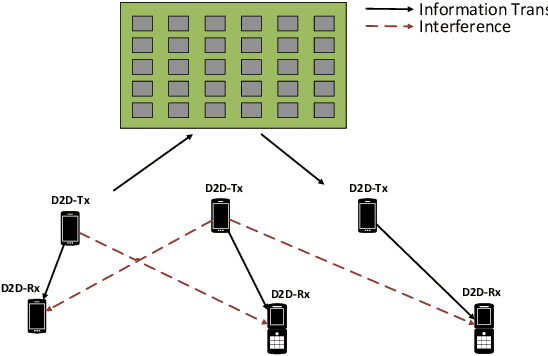

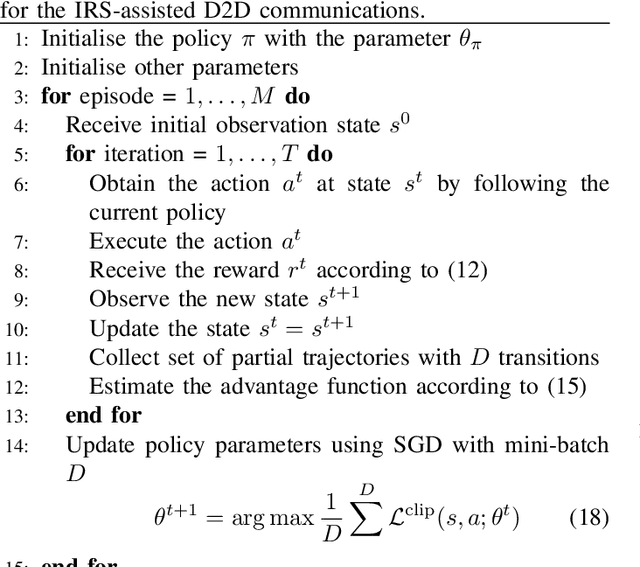

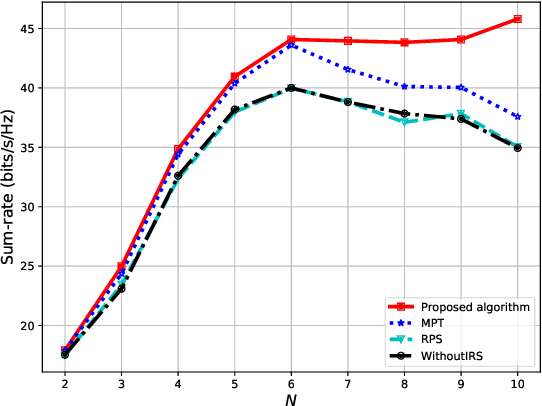

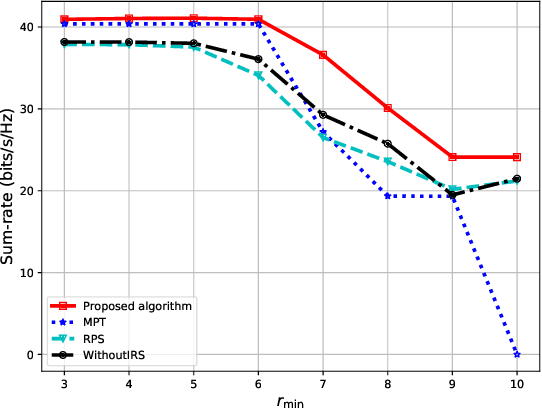

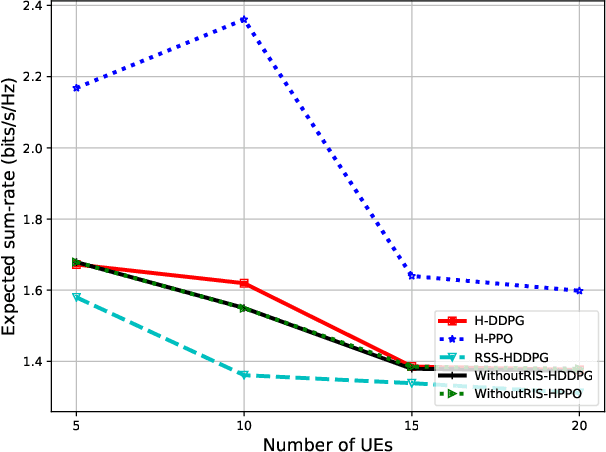

Abstract: In this paper, we propose a deep reinforcement learning (DRL) approach to maximising the network sum-rate in device-to-device (D2D) communications supported by an intelligent reflecting surface (IRS). The IRS is deployed to mitigate the interference and enhance the signal between the D2D transmitter and the associated D2D receiver. Our objective is to jointly optimise the transmit power at the D2D transmitter and the phase-shift matrix at the IRS to maximise the network sum-rate. We formulate a Markov decision process and then propose proximal policy optimisation for solving the maximisation game. Simulation results show impressive performance in terms of the achievable rate and processing time.

RIS-assisted UAV Communications for IoT with Wireless Power Transfer Using Deep Reinforcement Learning

Aug 05, 2021

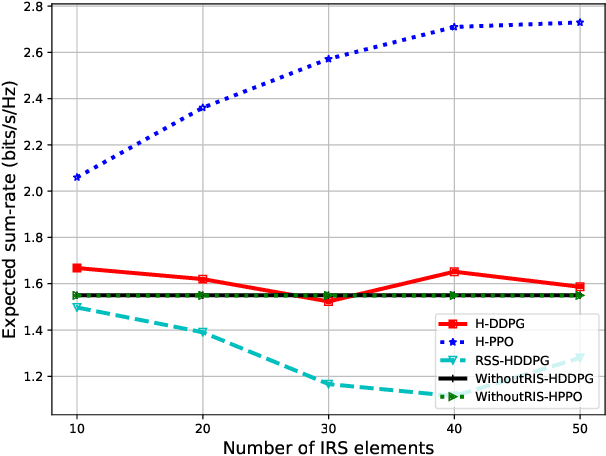

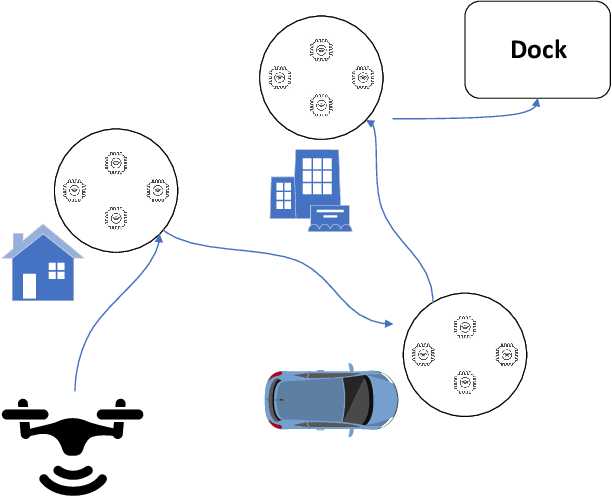

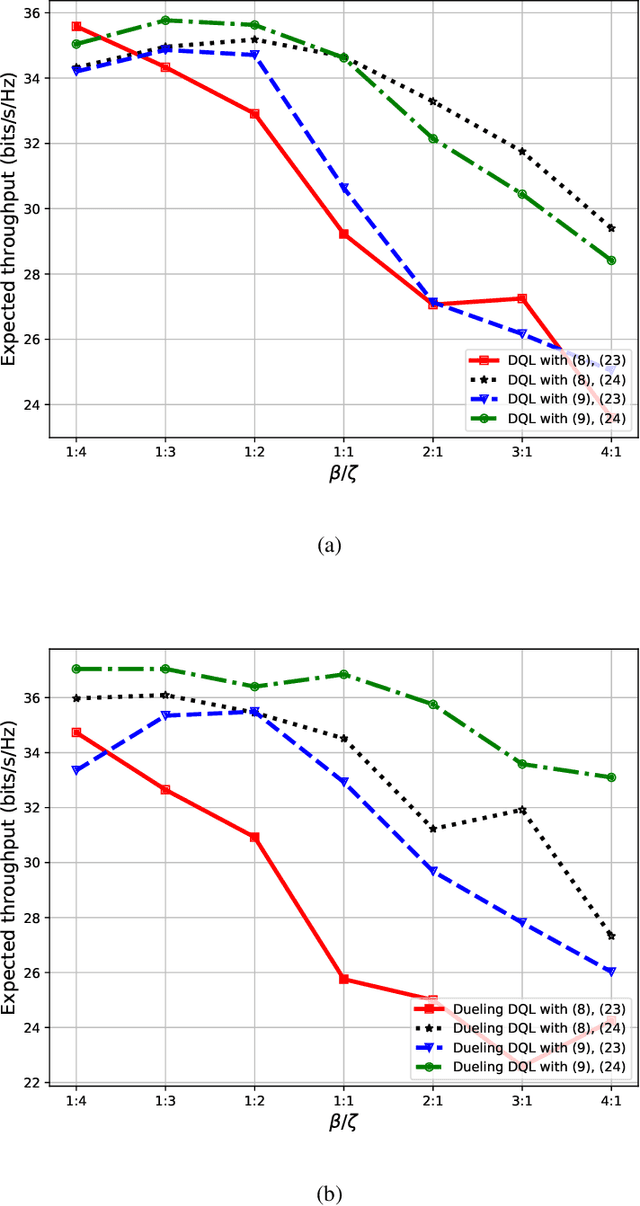

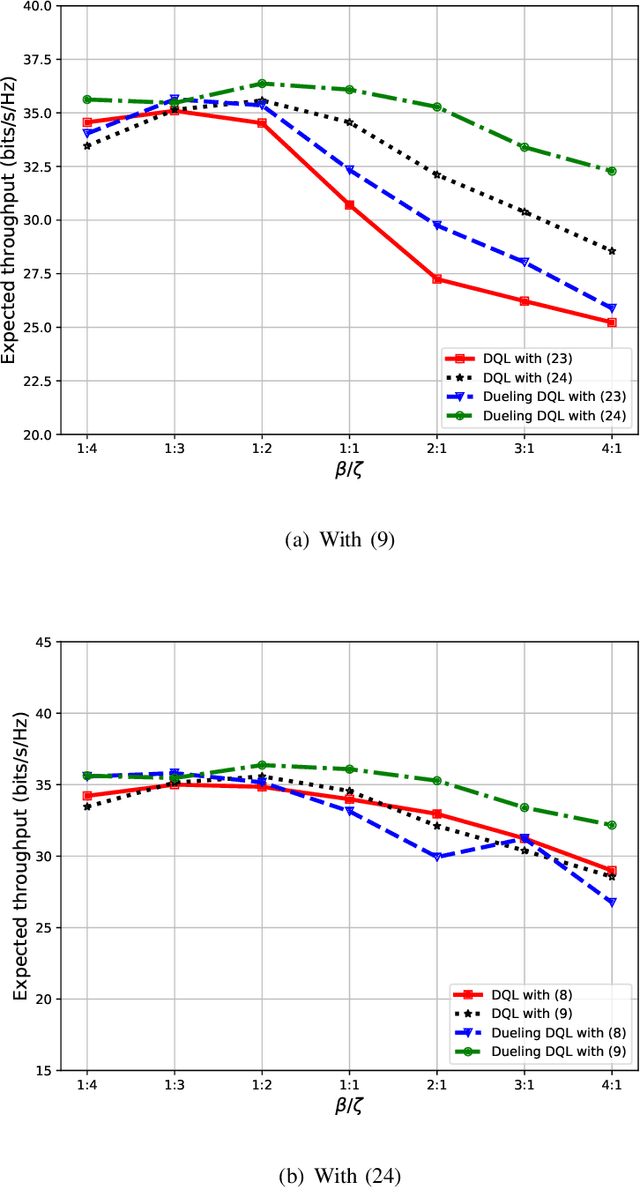

Abstract: Many of the devices used in Internet-of-Things (IoT) applications are energy-limited, and thus supplying energy while maintaining seamless connectivity for IoT devices is of considerable importance. In this context, we propose a simultaneous wireless power transfer and information transmission scheme for IoT devices with support from reconfigurable intelligent surface (RIS)-aided unmanned aerial vehicle (UAV) communications. In particular, in a first phase, IoT devices harvest energy from the UAV through wireless power transfer; then, in a second phase, the UAV collects data from the IoT devices through information transmission. To characterise the agility of the UAV, we consider two scenarios: a hovering UAV and a mobile UAV. Aiming at maximizing the total network sum-rate, we jointly optimize the trajectory of the UAV, the energy harvesting scheduling of IoT devices, and the phase-shift matrix of the RIS. We formulate a Markov decision process and propose two deep reinforcement learning algorithms to solve the optimization problem of maximizing the total network sum-rate. Numerical results illustrate the effectiveness of the UAV's flying path optimization and the network throughput of our proposed techniques compared with other benchmark schemes. Given the strict requirements of the RIS and UAV, the significant improvement in processing time and throughput performance demonstrates that our proposed scheme is well suited to practical IoT applications.

3D UAV Trajectory and Data Collection Optimisation via Deep Reinforcement Learning

Jun 06, 2021

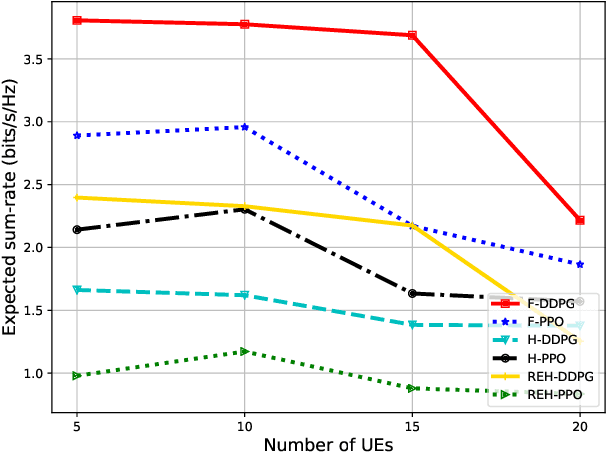

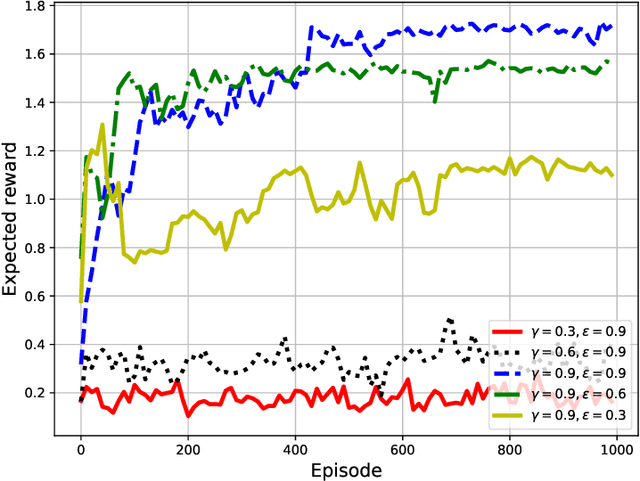

Abstract: Unmanned aerial vehicles (UAVs) are now beginning to be deployed for enhancing the network performance and coverage in wireless communication. However, due to the limitation of their on-board power and flight time, it is challenging to obtain an optimal resource allocation scheme for the UAV-assisted Internet of Things (IoT). In this paper, we design a new UAV-assisted IoT system relying on the shortest flight path of the UAVs while maximising the amount of data collected from IoT devices. Then, a deep reinforcement learning-based technique is conceived for finding the optimal trajectory and throughput in a specific coverage area. After training, the UAV has the ability to autonomously collect all the data from user nodes at a significant total sum-rate improvement while minimising the associated resources used. Numerical results are provided to highlight how our techniques strike a balance between the throughput attained, the trajectory, and the time spent. More explicitly, we characterise the attainable performance in terms of the UAV trajectory, the expected reward and the total sum-rate.

Intelligent Reconfigurable Surface-assisted Multi-UAV Networks: Efficient Resource Allocation with Deep Reinforcement Learning

May 28, 2021

Abstract: In this paper, we propose intelligent reconfigurable surface (IRS)-assisted unmanned aerial vehicle (UAV) networks that can exploit the advantages of both agility and reflection to enhance the network's performance. To maximise the energy efficiency (EE) of the considered networks, we jointly optimise the power allocation of the UAVs and the phase-shift matrix of the IRS. A deep reinforcement learning (DRL) approach is proposed for solving the continuous optimisation problem with time-varying channel gain in a centralised fashion. Moreover, a parallel learning approach is also proposed for reducing the information transmission requirement of the centralised approach. Numerical results show a significant improvement of our proposed schemes compared with the conventional approaches in terms of EE, flexibility, and processing time. Our proposed DRL methods for IRS-assisted UAV networks can be used for real-time applications due to their capability of instant decision-making and of handling the time-varying channel in a dynamic environmental setting.

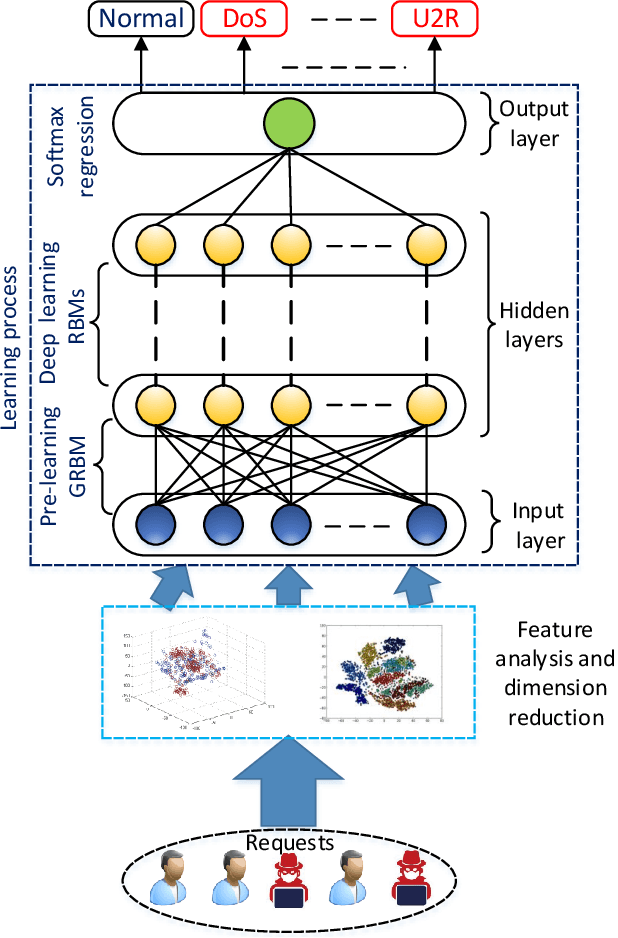

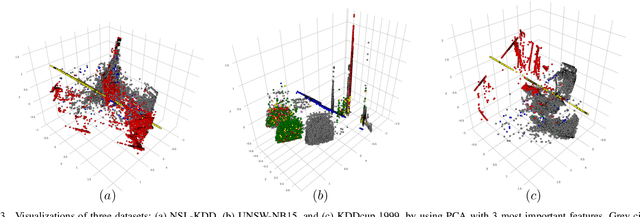

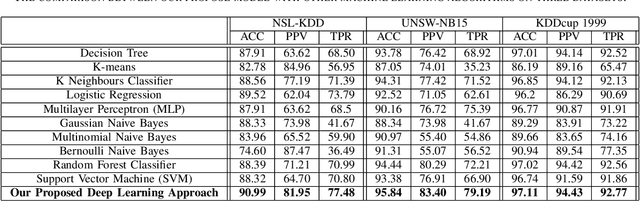

Cyberattack Detection in Mobile Cloud Computing: A Deep Learning Approach

Dec 16, 2017

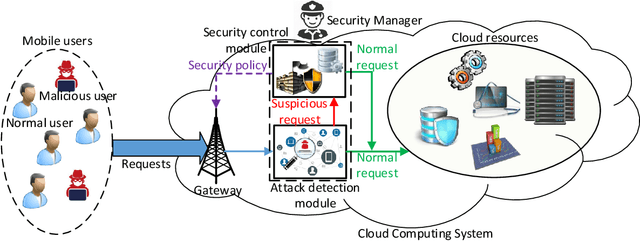

Abstract: With the rapid growth of mobile applications and cloud computing, mobile cloud computing has attracted great interest from both academia and industry. However, mobile cloud applications are facing security issues such as data integrity, users' confidentiality, and service availability. A preventive approach to such problems is to detect and isolate cyber threats before they can cause serious impact on the mobile cloud computing system. In this paper, we propose a novel framework that leverages a deep learning approach to detect cyberattacks in a mobile cloud environment. Through experimental results, we show that our proposed framework not only recognizes diverse cyberattacks, but also achieves a high accuracy (up to 97.11%) in detecting the attacks. Furthermore, we present comparisons with current machine learning-based approaches to demonstrate the effectiveness of our proposed solution.
