Tan Do-Duy

Applications of Distributed Machine Learning for the Internet-of-Things: A Comprehensive Survey

Oct 16, 2023

Abstract:The emergence of new services and applications in emerging wireless networks (e.g., beyond 5G and 6G) has shown a growing demand for the usage of artificial intelligence (AI) in the Internet of Things (IoT). However, the proliferation of massive IoT connections and the availability of computing resources distributed across future IoT systems have strongly demanded the development of distributed AI for better IoT services and applications. Therefore, existing AI-enabled IoT systems can be enhanced by implementing distributed machine learning (aka distributed learning) approaches. This work aims to provide a comprehensive survey on distributed learning for IoT services and applications in emerging networks. In particular, we first provide a background of machine learning and present a preliminary to typical distributed learning approaches, such as federated learning, multi-agent reinforcement learning, and distributed inference. Then, we provide an extensive review of distributed learning for critical IoT services (e.g., data sharing and computation offloading, localization, mobile crowdsensing, and security and privacy) and IoT applications (e.g., smart healthcare, smart grid, autonomous vehicle, aerial IoT networks, and smart industry). From the reviewed literature, we also present critical challenges of distributed learning for IoT and propose several promising solutions and research directions in this emerging area.

RIS-assisted UAV Communications for IoT with Wireless Power Transfer Using Deep Reinforcement Learning

Aug 05, 2021

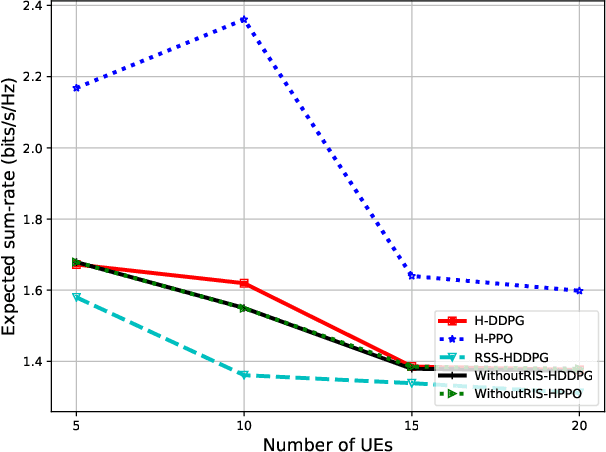

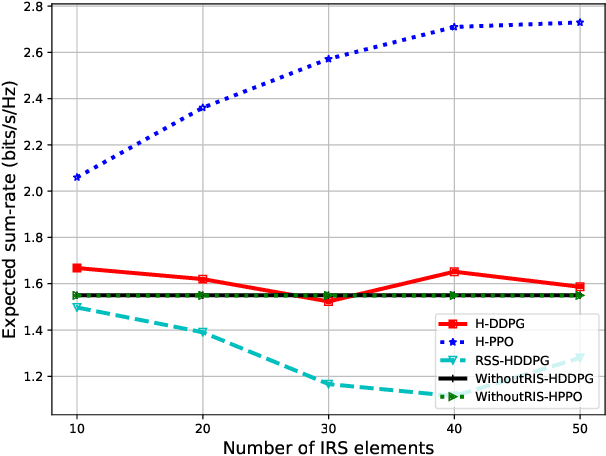

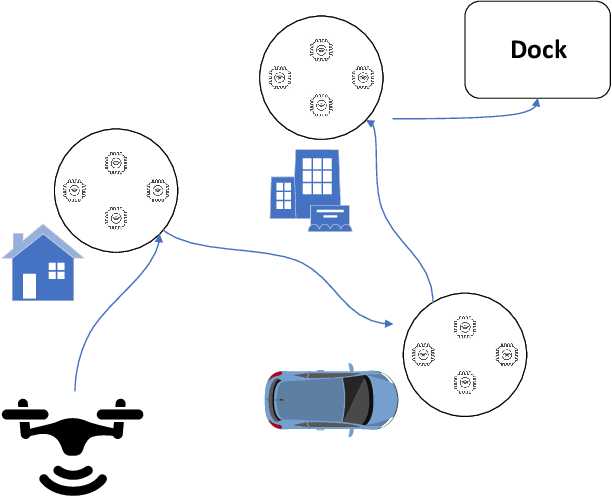

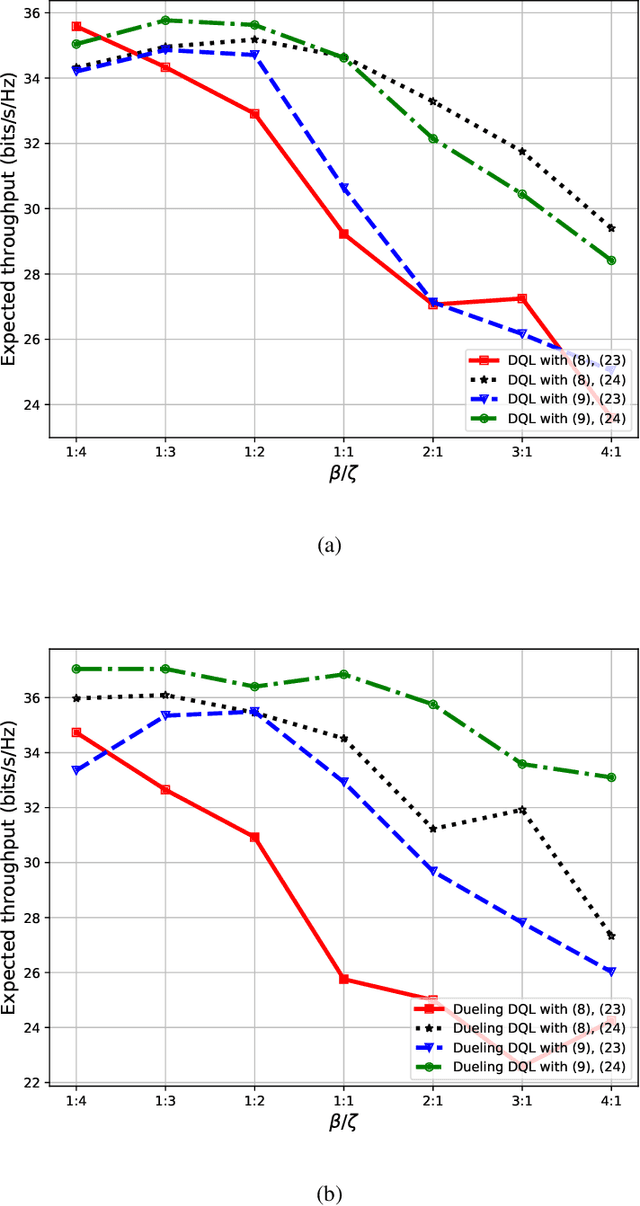

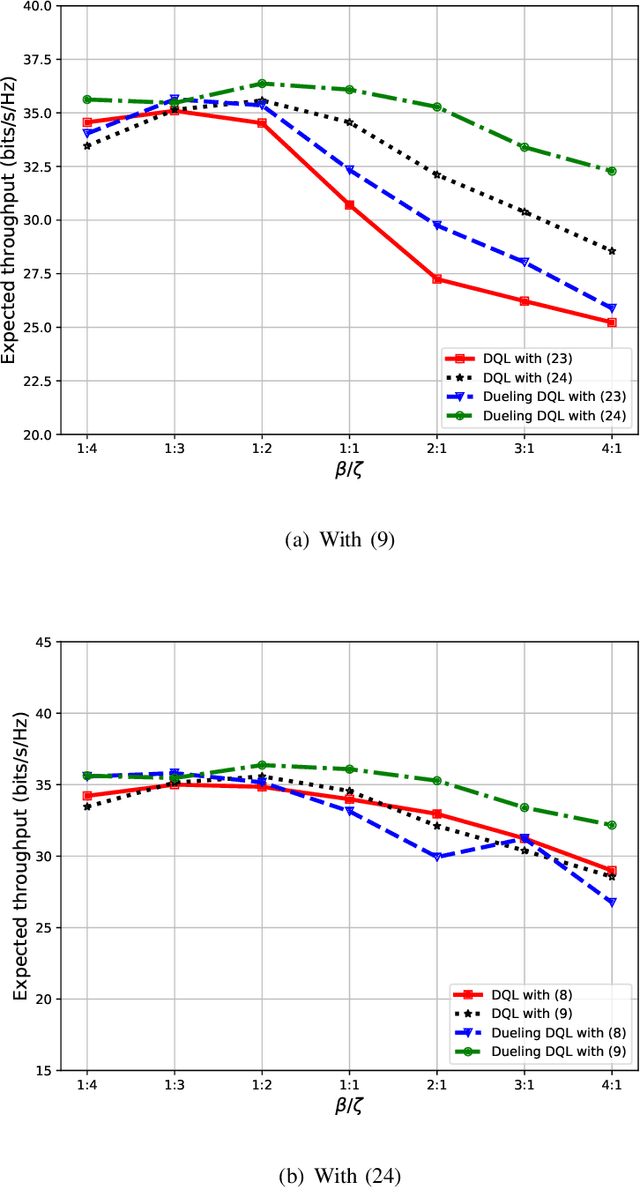

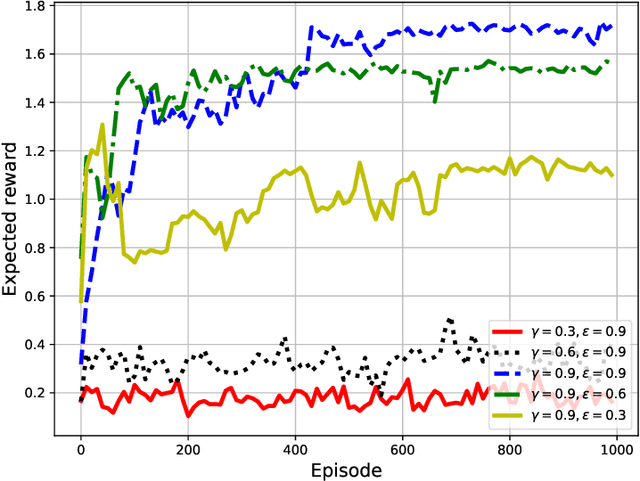

Abstract:Many of the devices used in Internet-of-Things (IoT) applications are energy-limited, and thus supplying energy while maintaining seamless connectivity for IoT devices is of considerable importance. In this context, we propose a simultaneous wireless power transfer and information transmission scheme for IoT devices with support from reconfigurable intelligent surface (RIS)-aided unmanned aerial vehicle (UAV) communications. In particular, in a first phase, IoT devices harvest energy from the UAV through wireless power transfer; and then in a second phase, the UAV collects data from the IoT devices through information transmission. To characterise the agility of the UAV, we consider two scenarios: a hovering UAV and a mobile UAV. Aiming at maximizing the total network sum-rate, we jointly optimize the trajectory of the UAV, the energy harvesting scheduling of IoT devices, and the phase-shift matrix of the RIS. We formulate a Markov decision process and propose two deep reinforcement learning algorithms to solve the optimization problem of maximizing the total network sum-rate. Numerical results illustrate the effectiveness of the UAV's flying path optimization and the network throughput of our proposed techniques compared with other benchmark schemes. Given the strict requirements of the RIS and UAV, the significant improvement in processing time and throughput performance demonstrates that our proposed scheme is well suited to practical IoT applications.

3D UAV Trajectory and Data Collection Optimisation via Deep Reinforcement Learning

Jun 06, 2021

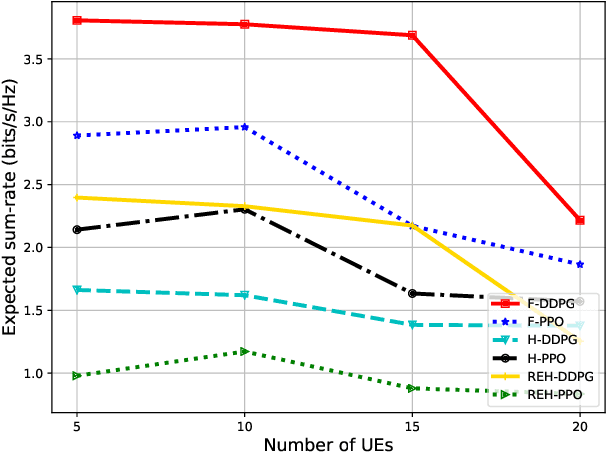

Abstract:Unmanned aerial vehicles (UAVs) are now beginning to be deployed for enhancing the network performance and coverage in wireless communication. However, due to the limitation of their on-board power and flight time, it is challenging to obtain an optimal resource allocation scheme for the UAV-assisted Internet of Things (IoT). In this paper, we design a new UAV-assisted IoT system relying on the shortest flight path of the UAVs while maximising the amount of data collected from IoT devices. Then, a deep reinforcement learning-based technique is conceived for finding the optimal trajectory and throughput in a specific coverage area. After training, the UAV has the ability to autonomously collect all the data from user nodes at a significant total sum-rate improvement while minimising the associated resources used. Numerical results are provided to highlight how our techniques strike a balance between the throughput attained, trajectory, and the time spent. More explicitly, we characterise the attainable performance in terms of the UAV trajectory, the expected reward and the total sum-rate.
