Kavi Arya

agriFrame: Agricultural framework to remotely control a rover inside a greenhouse environment

Apr 12, 2025

Abstract: Innovation in agriculture is essential for food security worldwide, and even more so in developing countries. With growing demand comes pressure to shorten development time. Data collection and analysis are essential in agriculture. However, a given crop's cycle comes only once a year, and researchers must wait a few months before collecting more data for that crop. To overcome this hurdle, researchers are venturing into digital twins for agriculture. Toward this effort, we present an agricultural framework (agriFrame). We introduce a simulated greenhouse environment for testing and controlling a robot, and for remotely controlling and implementing the algorithms in a real-world greenhouse setup. This work showcases the importance and interdependence of the network setup, the remotely controllable rover, and the messaging protocol. The sophisticated yet simple-to-use agriFrame has been optimized so that the simulator runs on minimal laptop/desktop specifications.

Scalable and low-cost remote lab platforms: Teaching industrial robotics using open-source tools and understanding its social implications

Dec 19, 2024

Abstract: With recent advancements in industrial robots, educating students in new technologies and preparing them for the future is imperative. However, access to industrial robots for teaching poses challenges, such as the high cost of acquiring these robots, the safety of the operator and the robot, and complicated training material. This paper proposes two low-cost platforms built using open-source tools like Robot Operating System (ROS) and its latest version, ROS 2, to help students learn and test algorithms on remotely connected industrial robots. A Universal Robots UR5 arm and a custom mobile rover were deployed in two life-size testbeds, a greenhouse and a warehouse, to create an Autonomous Agricultural Harvester System (AAHS) and an Autonomous Warehouse Management System (AWMS). These platforms were deployed for a period of 7 months and were tested for their efficacy with 1,433 and 1,312 students, respectively. The hardware used in AAHS and AWMS was controlled remotely for 160 and 355 hours, respectively, by students over a period of 3 months.

Vision-based indoor localization of nano drones in controlled environment with its applications

Dec 11, 2024

Abstract: Navigating unmanned aerial vehicles in environments where GPS signals are unavailable poses a compelling and intricate challenge. This challenge is further heightened when dealing with Nano Aerial Vehicles (NAVs) due to their compact size, payload restrictions, and limited computational capabilities. This paper proposes an approach for localization using off-board computing, an off-board monocular camera, and modified open-source algorithms. The proposed method uses three parallel proportional-integral-derivative (PID) controllers on the off-board computer to provide velocity corrections via wireless communication, stabilizing the NAV in a custom-controlled environment. With a 3.1 cm localization error and a modest setup cost of 50 USD, this approach suits environments where cost considerations are paramount. It is especially well-suited for applications like teaching drone control in academic institutions, where the specified error margin is deemed acceptable. Various applications are designed to validate the proposed technique, such as landing the NAV on a moving ground vehicle, path planning in a 3D space, and localizing multiple NAVs. The created package is openly available at https://github.com/simmubhangu/eyantra_drone to foster research in this field.
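The idea of three parallel PID controllers producing velocity corrections can be illustrated with a minimal sketch. It is not taken from the linked package: the `PID` class, the `velocity_correction` helper, the choice of one controller per x, y and z axis, and the gain values are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class PID:
    """Textbook PID controller (hypothetical helper, not the authors' code)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        # Accumulate the integral term over the elapsed time step.
        self.integral += error * dt
        # No derivative contribution on the first sample (no previous error yet).
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def velocity_correction(
    controllers: Sequence[PID],
    target: Sequence[float],
    measured: Sequence[float],
    dt: float,
) -> Tuple[float, ...]:
    """Run one independent controller per axis (assumed here to be x, y, z)."""
    return tuple(
        pid.update(t - m, dt)
        for pid, t, m in zip(controllers, target, measured)
    )


# Illustrative gains only; real gains would have to be tuned on the vehicle.
axes = [PID(kp=2.0, ki=0.0, kd=0.0) for _ in range(3)]
correction = velocity_correction(
    axes, target=(1.0, 2.0, 3.0), measured=(0.0, 0.0, 0.0), dt=0.05
)
```

In a setup like the one described, `measured` would come from the camera-based position estimate, and `correction` would be sent to the vehicle over the wireless link on each frame.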

Keeping Teams in the Game: Predicting Dropouts in Online Problem-Based Learning Competition

Dec 27, 2023

Abstract: Online learning and MOOCs have become increasingly popular in recent years, and the trend will continue, given the technology boom. There is a dire need to observe learners' behavior in these online courses, similar to what instructors do in a face-to-face classroom. Learners' strategies and activities are crucial to understanding their behavior. One major challenge in online courses is predicting and preventing dropout behavior. While several studies have attempted such analysis, few employ multiple data streams to understand and predict dropout rates. Moreover, studies rarely use a fully online team-based collaborative environment as their context. Thus, the current study employs an online longitudinal problem-based learning (PBL) collaborative robotics competition as the testbed. Through methodological triangulation, the study aims to predict dropout behavior from the Discourse discussion forum 'activities' of participating teams, along with a self-reported Online Learning Strategies Questionnaire (OSLQ). The study also uses qualitative interviews to enhance the ground truth and results. The OSLQ data is collected from more than 4000 participants. Furthermore, the study seeks to establish the reliability of OSLQ to advance research within online environments. Various machine learning algorithms are applied to analyze the data. The findings demonstrate the reliability of OSLQ with our substantial sample size and reveal promising results for predicting the dropout rate in an online competition.

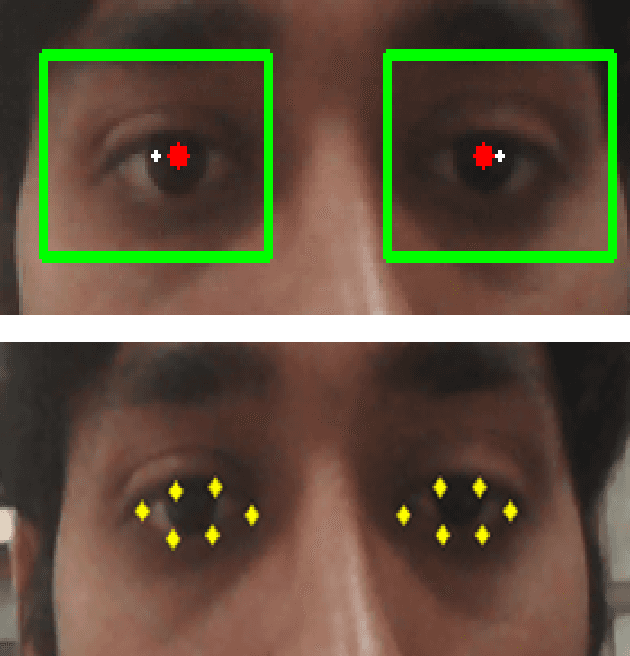

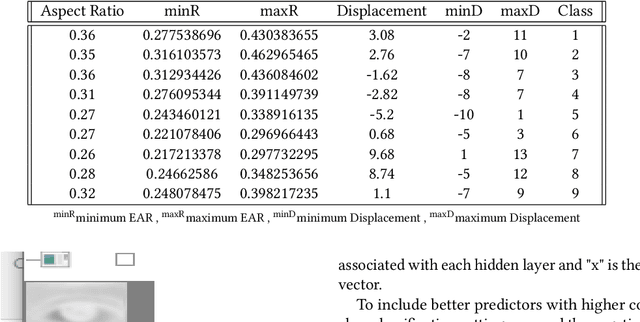

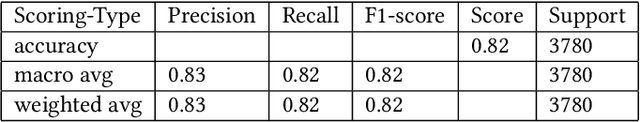

An Efficient Point of Gaze Estimator for Low-Resolution Imaging Systems Using Extracted Ocular Features Based Neural Architecture

Jun 09, 2021

Abstract: A user's eyes are an important modality for Human Computer Interaction (HCI) research. Scientific exploration of the eye has already benefited HCI applications, from gaze estimation to measuring the attentiveness of a user looking at a screen for a given time period. An eye tracking system can serve as an assistive, interactive tool for physically disabled individuals, and is best suited to those whose means of communication are limited to the eyes. The objective of this paper is threefold: (1) to introduce a neural network based architecture that predicts a user's gaze at 9 positions displayed within the 11.31° visual range on the screen, in real time, through a low-resolution system such as a webcam, by learning various aspects of the eyes as an ocular feature set; (2) to present a coarsely supervised feature set collected in real time and validated through a user case study of 21 individuals (17 men and 4 women), from whom a set of 35k instances was derived, with an accuracy score of 82.36% and an f1_score of 82.2%; and (3) to provide a detailed study of the applicability and underlying challenges of such systems. The experimental results verify the feasibility and validity of the proposed eye gaze tracking model.

A Weakly Supervised Model for Solving Math word Problems

Apr 14, 2021

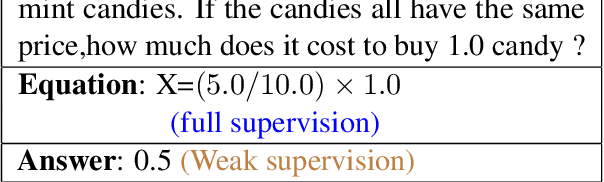

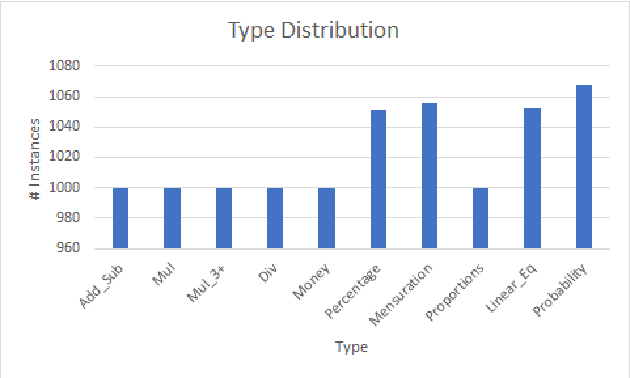

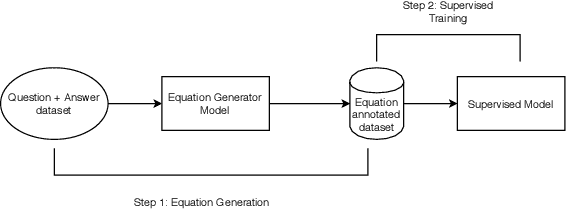

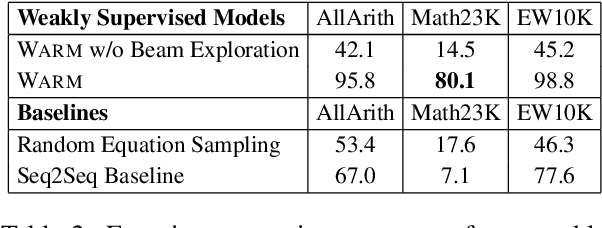

Abstract: Solving math word problems (MWPs) is an important and challenging problem in natural language processing. Existing approaches to solving MWPs require full supervision in the form of intermediate equations. However, labeling every math word problem with its corresponding equations is a time-consuming and expensive task. To address this challenge of equation annotation, we propose a weakly supervised model for solving math word problems that requires only the final answer as supervision. We approach this problem by first learning to generate the equation using the problem description and the final answer, which we then use to train a supervised MWP solver. We propose and compare various weakly supervised techniques to learn to generate equations directly from the problem description and answer. Through extensive experiments, we demonstrate that even without using equations for supervision, our approach achieves an accuracy of 56.0 on the standard Math23K dataset. We also curate and release a new dataset for MWPs in English consisting of 10227 instances suitable for training weakly supervised models.
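To make concrete how a final answer alone can act as supervision, the sketch below enumerates small candidate equations over the numbers in a problem and keeps those that evaluate to the known answer. This brute-force search is a stand-in chosen for illustration, not the learned equation generator the abstract describes; the function names and the restriction to left-nested expressions over `+`, `-`, `*`, `/` are assumptions.

```python
import operator
from itertools import permutations, product

# Operators allowed in candidate equations (an illustrative choice).
OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def enumerate_equations(numbers):
    """Yield (expression, value) for every left-nested chain over the numbers."""
    for order in permutations(numbers):
        for ops in product(OPS, repeat=len(order) - 1):
            expr, value = str(order[0]), float(order[0])
            try:
                for sym, n in zip(ops, order[1:]):
                    value = OPS[sym](value, n)
                    expr = f"({expr} {sym} {n})"
            except ZeroDivisionError:
                continue  # skip candidates that divide by zero
            yield expr, value


def equations_matching_answer(numbers, answer, tol=1e-6):
    """Keep only candidates whose value agrees with the final answer."""
    return [
        expr
        for expr, value in enumerate_equations(numbers)
        if abs(value - answer) <= tol
    ]


# "There are 2 red and 3 blue boxes with 4 pens each. How many pens?" -> 20
pseudo_labels = equations_matching_answer([2, 3, 4], 20)
```

Equations recovered this way could then serve as pseudo-labels for a supervised solver. Note that several distinct expressions may match the same answer, which is one reason a learned generator conditioned on the problem text is preferable to blind search.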

Unsupervised Learning of Explainable Parse Trees for Improved Generalisation

Apr 11, 2021

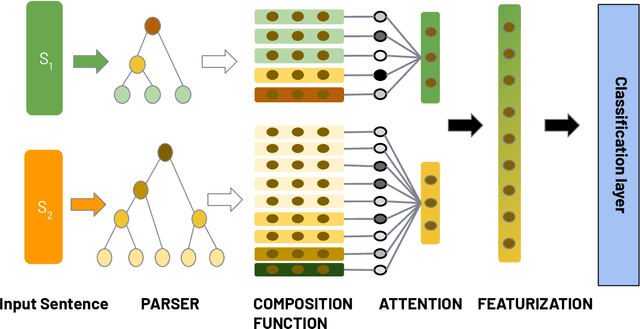

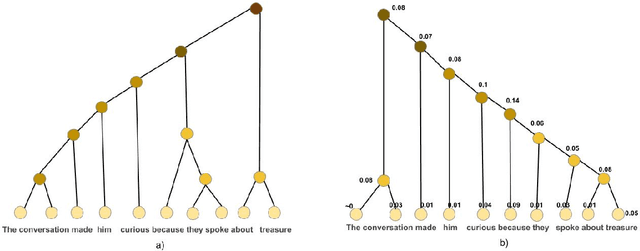

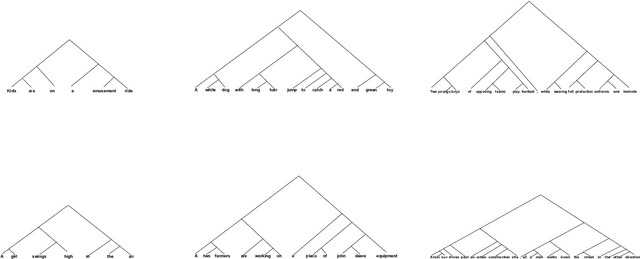

Abstract: Recursive neural networks (RvNN) have been shown to be useful for learning sentence representations and have helped achieve competitive performance on several natural language inference tasks. However, recent RvNN-based models fail to learn simple grammar and meaningful semantics in their intermediate tree representation. In this work, we propose an attention mechanism over Tree-LSTMs to learn more meaningful and explainable parse tree structures. We also demonstrate the superior performance of our proposed model on natural language inference, semantic relatedness, and sentiment analysis tasks and compare it with other state-of-the-art RvNN-based methods. Further, we present a detailed qualitative and quantitative analysis of the learned parse trees and show that the discovered linguistic structures are more explainable, semantically meaningful, and grammatically correct than those of recent approaches. The source code of the paper is available at https://github.com/atul04/Explainable-Latent-Structures-Using-Attention.

Selection-based Question Answering of an MOOC

Nov 15, 2019

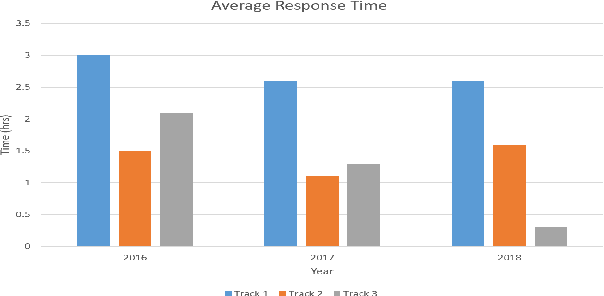

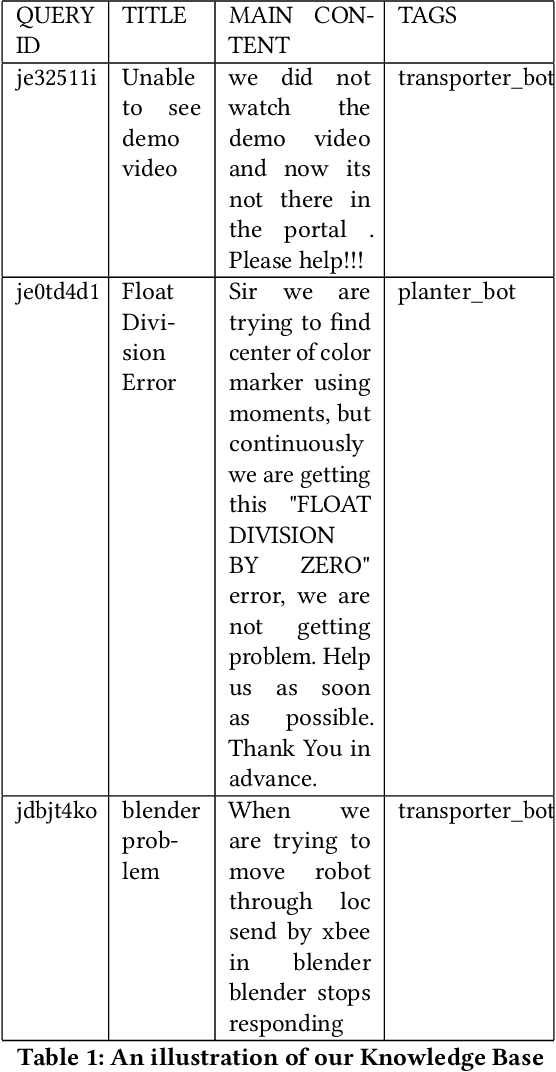

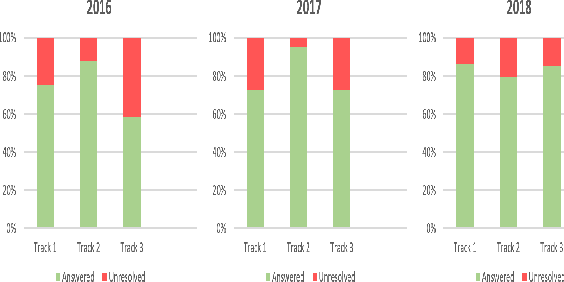

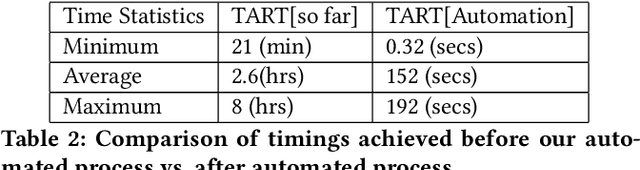

Abstract: e-Yantra Robotics Competition (eYRC) is a unique robotics competition hosted by IIT Bombay that is actually an Embedded Systems and Robotics MOOC. Registrations have been growing exponentially each year, from 4500 in 2012 to over 34000 in 2019. In this 5-month-long competition, students learn complex skills under severe time pressure and have access to a discussion forum to post doubts about the learning material. Responding to questions in real time is a challenge for project staff. Here, we illustrate the advantage of Deep Learning for real-time question answering in the eYRC discussion forum. We illustrate the advantage of Transformer-based contextual embedding mechanisms such as Bidirectional Encoder Representations from Transformers (BERT) over word embedding mechanisms such as Word2Vec. We propose a weighted similarity metric as a measure of matching and find it more reliable than Content-Content or Title-Title similarities alone. The automation of replying to questions has brought the turn around response time (TART) down from a minimum of 21 mins to a minimum of 0.3 secs.
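A weighted similarity that blends Title-Title and Content-Content scores might look like the following sketch. The abstract does not give the exact formula, so the linear blend, the `title_weight` parameter, and the dictionary layout are assumptions; the vectors stand in for precomputed embeddings (for example, from BERT).

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # treat an all-zero embedding as matching nothing
    return dot / (norm_u * norm_v)


def weighted_similarity(query, candidate, title_weight=0.5):
    """Blend title and content similarity (assumed linear combination)."""
    title_sim = cosine(query["title"], candidate["title"])
    content_sim = cosine(query["content"], candidate["content"])
    return title_weight * title_sim + (1.0 - title_weight) * content_sim


def best_match(query, candidates, title_weight=0.5):
    """Return the archived post whose weighted similarity is highest."""
    return max(
        candidates,
        key=lambda c: weighted_similarity(query, c, title_weight),
    )


# Toy 2-D "embeddings": both posts share the query's title direction,
# but only post_b also matches on content.
query = {"title": [1.0, 0.0], "content": [0.0, 1.0]}
post_a = {"id": "a", "title": [1.0, 0.0], "content": [1.0, 0.0]}
post_b = {"id": "b", "title": [1.0, 0.0], "content": [0.0, 1.0]}
winner = best_match(query, [post_a, post_b])
```

In this toy case the title score alone cannot separate the two posts, while the blended score prefers `post_b`, which is the kind of situation where a combined metric can be more reliable than either similarity on its own.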

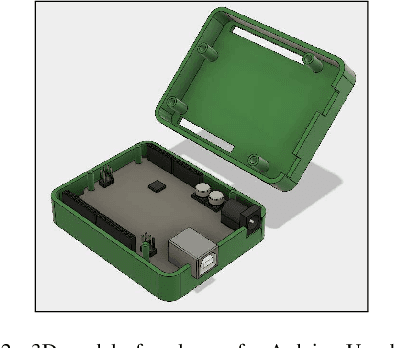

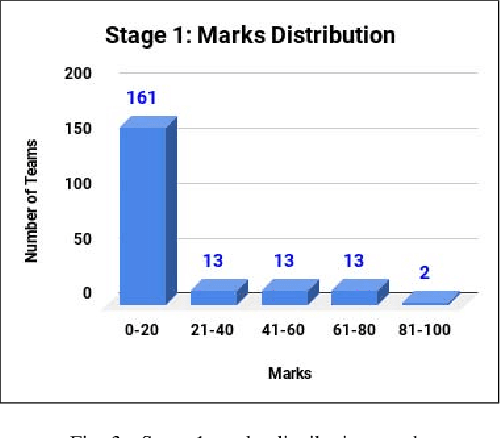

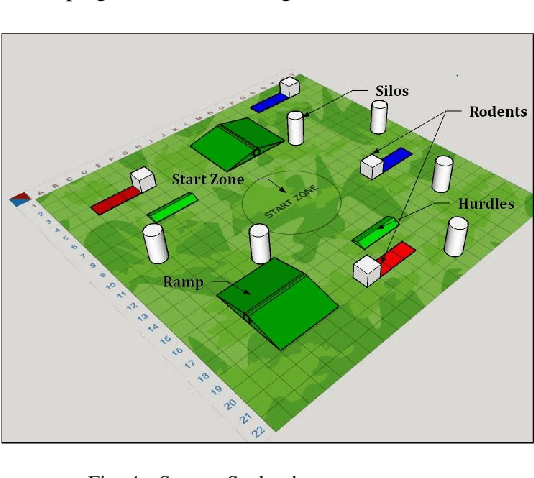

Learning while Competing -- 3D Modeling & Design

May 18, 2019

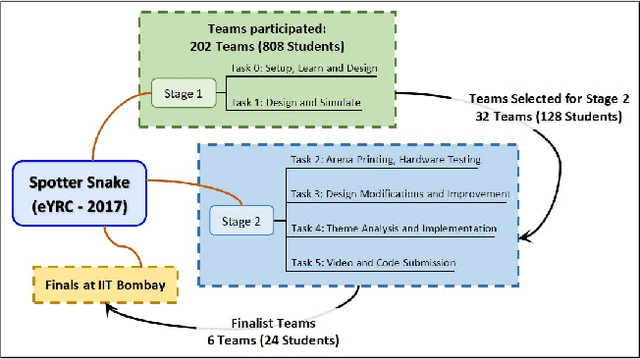

Abstract: The e-Yantra project at IIT Bombay conducts an online competition, the e-Yantra Robotics Competition (eYRC), which uses a Project Based Learning (PBL) methodology to train students to implement a robotics project step by step over a five-month period. Participation is free. The competition provides all resources (robot, accessories, and a problem statement) to a participating team. If a team is selected for the finals, e-Yantra pays for its members to travel to the finals at IIT Bombay. This makes the competition accessible to resource-poor student teams. In this paper, we describe the methodology used in the 6th edition of eYRC, eYRC-2017, where we experimented with a Theme (projects abstracted into rulebooks) involving an advanced topic: 3D designing and interfacing with sensors and actuators. We demonstrate that the learning outcomes are consistent with our previous studies [1]. We infer that even 3D designing to create a working model can be effectively learned in a competition mode through PBL.
