Imon Mukherjee

CitePrompt: Using Prompts to Identify Citation Intent in Scientific Papers

May 03, 2023

Abstract: Citations in scientific papers not only help us trace intellectual lineage but are also a useful indicator of the scientific significance of a work. Citation intents prove beneficial as they specify the role of the citation in a given context. In this paper, we present CitePrompt, a framework which uses the hitherto unexplored approach of prompt-based learning for citation intent classification. We argue that with the proper choice of the pretrained language model, the prompt template, and the prompt verbalizer, we can not only get results that are better than or comparable to those obtained with state-of-the-art methods but also do so with much less external information about the scientific document. We report state-of-the-art results on the ACL-ARC dataset, and also show significant improvement on the SciCite dataset over all baseline models except one. As suitably large labelled datasets for citation intent classification can be quite hard to find, we propose, for the first time, the conversion of this task to the few-shot and zero-shot settings. For the ACL-ARC dataset, we report a 53.86% F1 score for the zero-shot setting, which improves to 63.61% and 66.99% for the 5-shot and 10-shot settings, respectively.
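To illustrate how a prompt template and a verbalizer fit together in prompt-based classification, here is a minimal sketch. The template wording, the label words, and the `mask_word_scores` input (standing in for the scores a pretrained language model would assign to words at the `[MASK]` position) are all hypothetical and are not taken from the paper.

```python
# Hypothetical template: the citation context is followed by a cloze
# sentence whose [MASK] slot a masked language model would fill in.
TEMPLATE = "{citation_context} The intent of this citation is [MASK]."

# Hypothetical verbalizer: maps each intent class to label words.
VERBALIZER = {
    "background": ["background", "context"],
    "method": ["method", "technique"],
    "result": ["result", "comparison"],
}


def build_prompt(citation_context):
    """Wrap a citation context in the cloze-style template."""
    return TEMPLATE.format(citation_context=citation_context)


def classify(mask_word_scores):
    """Return the class whose label words have the highest mean score.

    mask_word_scores: dict mapping a word to the (assumed) score a
    language model gives that word at the [MASK] position.
    """
    class_scores = {}
    for label, words in VERBALIZER.items():
        scores = [mask_word_scores.get(word, 0.0) for word in words]
        class_scores[label] = sum(scores) / len(scores)
    return max(class_scores, key=class_scores.get)


prompt = build_prompt("We follow the parsing approach of Smith et al.")
intent = classify({"method": 0.6, "technique": 0.2, "background": 0.1})
```

Averaging over label words is only one possible aggregation; other choices, such as taking the maximum, would fit the same structure.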

An Efficient Point of Gaze Estimator for Low-Resolution Imaging Systems Using Extracted Ocular Features Based Neural Architecture

Jun 09, 2021

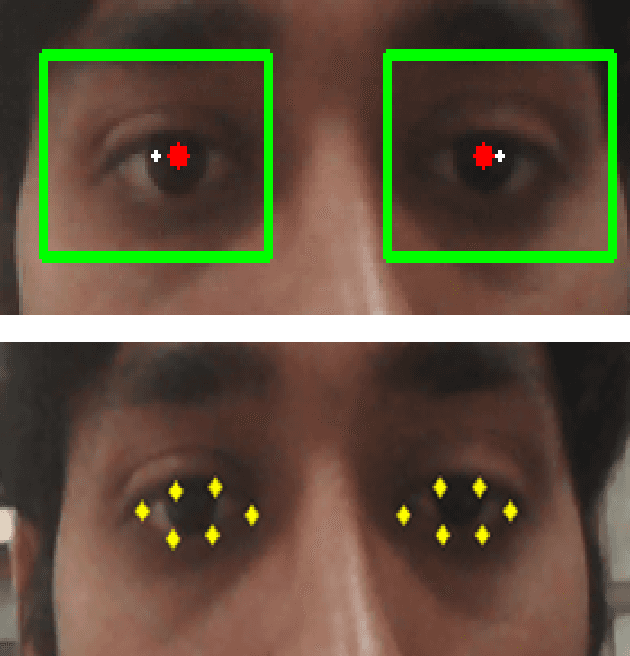

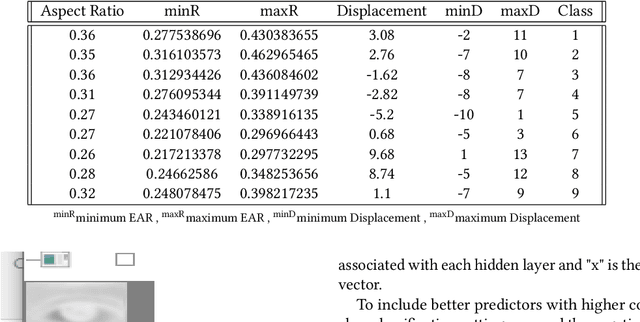

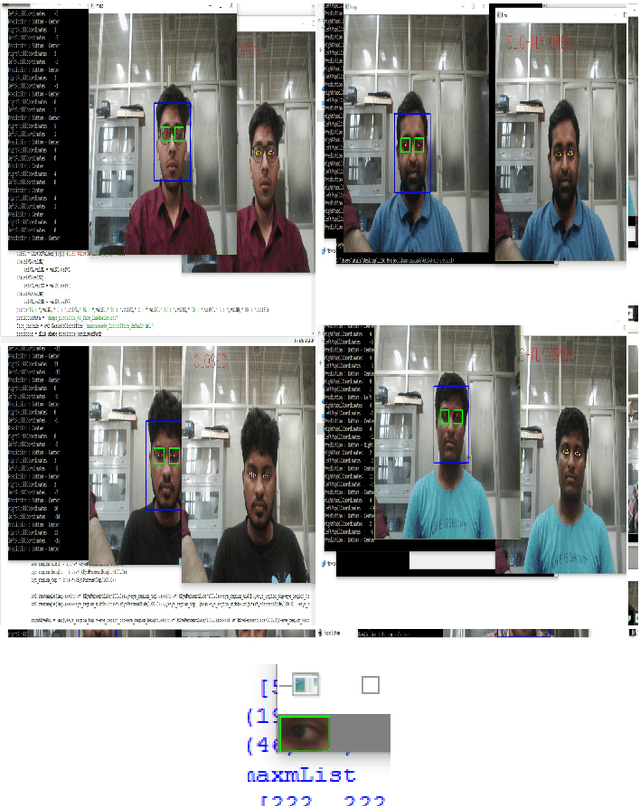

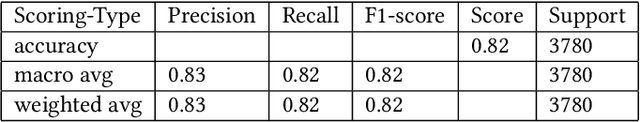

Abstract: A user's eyes are an important modality for Human-Computer Interaction (HCI) research. Ongoing scientific study of the eye has already brought growing benefits to HCI applications, from gaze estimation to measuring the attentiveness of a user looking at a screen for a given time period. An eye tracking system can serve as an assistive, interactive tool for physically disabled individuals, and is best suited to those for whom the eyes are one of only a limited set of means of communication. The objective of this paper is threefold: (1) to introduce a neural network based architecture that predicts a user's gaze at 9 positions displayed within an 11.31° visual range on the screen, in real time, through a low-resolution system such as a webcam, by learning various aspects of the eyes as an ocular feature set; (2) to present a coarsely supervised feature set collected in real time and validated through the user case study presented in the paper, covering 21 individuals (17 men and 4 women) from whom a set of 35k instances was derived, with an accuracy of 82.36% and an F1 score of 82.2%; and (3) to provide a detailed study of the applicability and underlying challenges of such systems. The experimental results verify the feasibility and validity of the proposed eye gaze tracking model.

Decoding CNN based Object Classifier Using Visualization

Jul 15, 2020

Abstract: This paper investigates how the working of a Convolutional Neural Network (CNN) can be explained through visualization in the context of machine perception for autonomous vehicles. We visualize the types of features extracted in the different convolution layers of a CNN, which helps to understand how the CNN gradually increases spatial information in every layer. Thus, it concentrates on regions of interest in every transformation. Visualizing heat maps of activations helps us understand how the CNN classifies and localizes different objects in an image. This study also helps us reason about the low accuracy of a model, which helps to increase trust in the object detection module.
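As a rough illustration of what an activation heat map is, the sketch below averages a layer's feature maps across channels and rescales the result to the range [0, 1]. This is a generic technique shown under assumed inputs, not necessarily the visualization method used in the paper; the function name `activation_heatmap` and the nested-list input format are hypothetical.

```python
def activation_heatmap(feature_maps):
    """Channel-averaged, min-max normalized activation map.

    feature_maps: list of C channels, each an H x W nested list of
    floats (assumed to come from one convolution layer for one image).
    Returns an H x W nested list with values in [0, 1].
    """
    channels = len(feature_maps)
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])

    # Average the activation at each spatial position over all channels.
    mean_map = [
        [sum(fm[r][c] for fm in feature_maps) / channels for c in range(width)]
        for r in range(height)
    ]

    lowest = min(min(row) for row in mean_map)
    highest = max(max(row) for row in mean_map)
    span = highest - lowest
    if span == 0:
        # A constant map carries no spatial contrast; return all zeros.
        return [[0.0] * width for _ in range(height)]

    # Rescale so the strongest response is 1 and the weakest is 0.
    return [[(value - lowest) / span for value in row] for row in mean_map]


heat = activation_heatmap([
    [[0.0, 2.0], [4.0, 6.0]],
    [[0.0, 2.0], [4.0, 6.0]],
])
```

In practice the resulting map would be upsampled to the input image size and overlaid on the image to show which regions drive the response.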
