An Efficient Point of Gaze Estimator for Low-Resolution Imaging Systems Using Extracted Ocular Features Based Neural Architecture


Jun 09, 2021

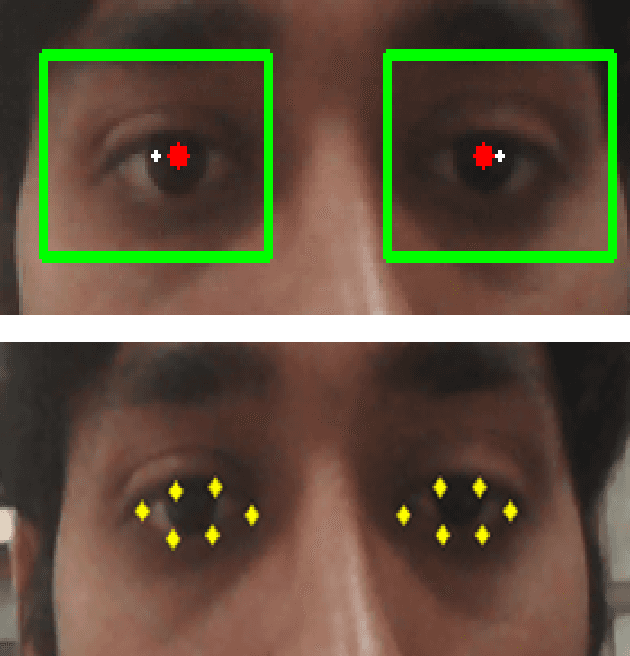

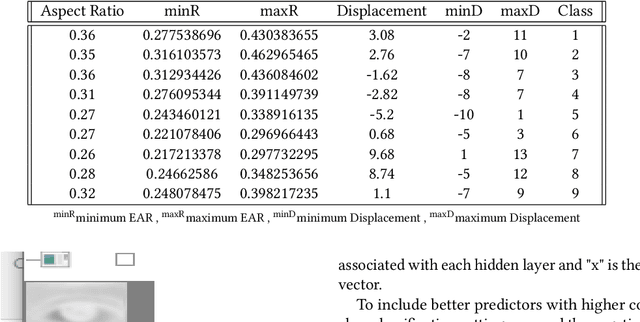

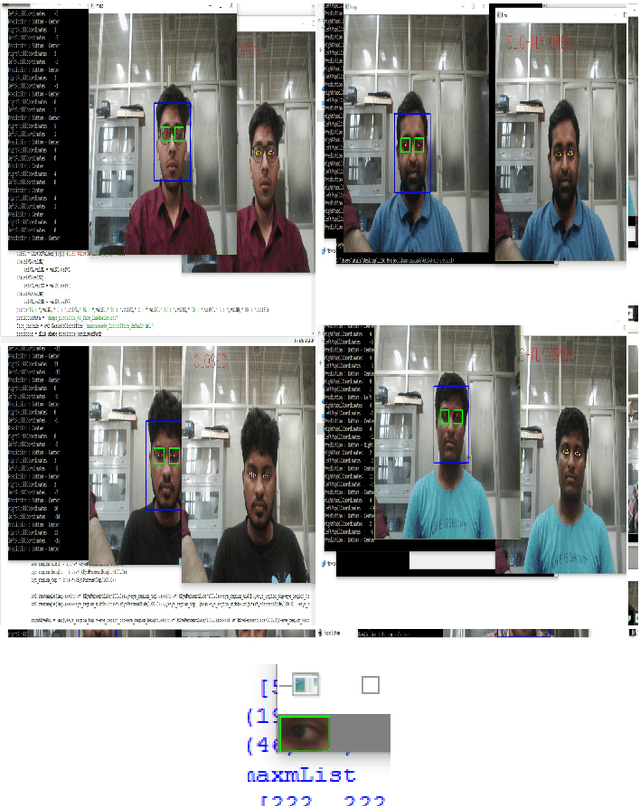

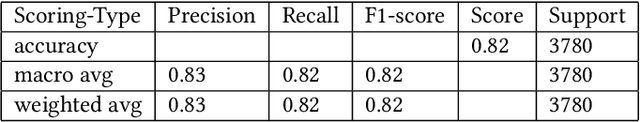

A user's eyes are an important input modality for Human-Computer Interaction (HCI) research. Ongoing scientific study of the eye has produced a growing range of HCI applications, from gaze estimation to measuring how attentively a user looks at a screen over a given period. As an assistive, interactive tool, an eye tracking system can be used by physically disabled individuals, and is best suited to those for whom the eyes are among the few available means of communication. This paper has three objectives: (1) to introduce a neural network based architecture that predicts a user's gaze at 9 positions displayed within an 11.31° visual range on the screen, in real time and through a low-resolution system such as a webcam, by learning various aspects of the eyes as an ocular feature set; (2) to present a coarsely supervised feature set collected in real time and validated through a user case study of 21 individuals (17 men and 4 women), from whom a set of 35k instances was derived, with an accuracy of 82.36% and an F1 score of 82.2%; and (3) to provide a detailed study of the applicability and underlying challenges of such systems. The experimental results verify the feasibility and validity of the proposed eye gaze tracking model.
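To make the two reported metrics concrete, the following is a minimal sketch of how accuracy and an F1 score could be computed for a 9-position gaze classifier. It is not the authors' evaluation code: the integer labels 0 to 8, the function names, and the choice of macro averaging are all assumptions made for illustration, since the abstract does not state how the F1 score was averaged.

```python
# Illustrative sketch only: labels 0..8 stand in for the 9 on-screen gaze
# positions, and macro averaging is an assumption (the abstract does not
# say which F1 averaging scheme was used).

NUM_POSITIONS = 9  # number of gaze targets stated in the abstract


def accuracy(y_true, y_pred):
    """Fraction of instances whose predicted position matches the label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def macro_f1(y_true, y_pred, num_classes=NUM_POSITIONS):
    """Unweighted mean of the per-position F1 scores."""
    scores = []
    for c in range(num_classes):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # A position with no true or predicted instances contributes 0.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / num_classes


if __name__ == "__main__":
    # Tiny made-up example with 3 positions, not data from the paper.
    labels = [0, 1, 2, 2]
    preds = [0, 1, 2, 1]
    print(accuracy(labels, preds))
    print(macro_f1(labels, preds, num_classes=3))
```

With roughly balanced instances per position, accuracy and macro F1 tend to land close together, which is consistent with the similar values reported above.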
