Kareem Metwaly

Surface Defect Detection and Evaluation for Marine Vessels using Multi-Stage Deep Learning

Mar 17, 2022

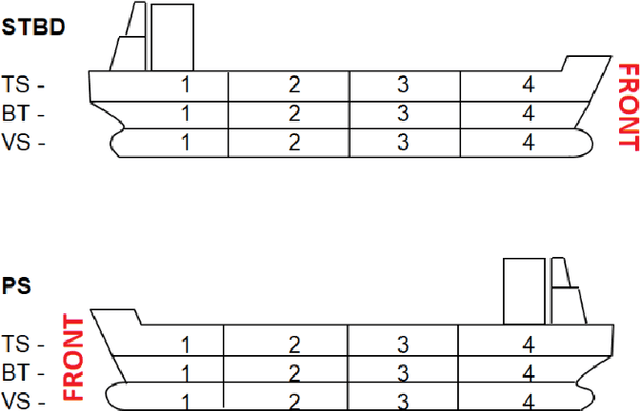

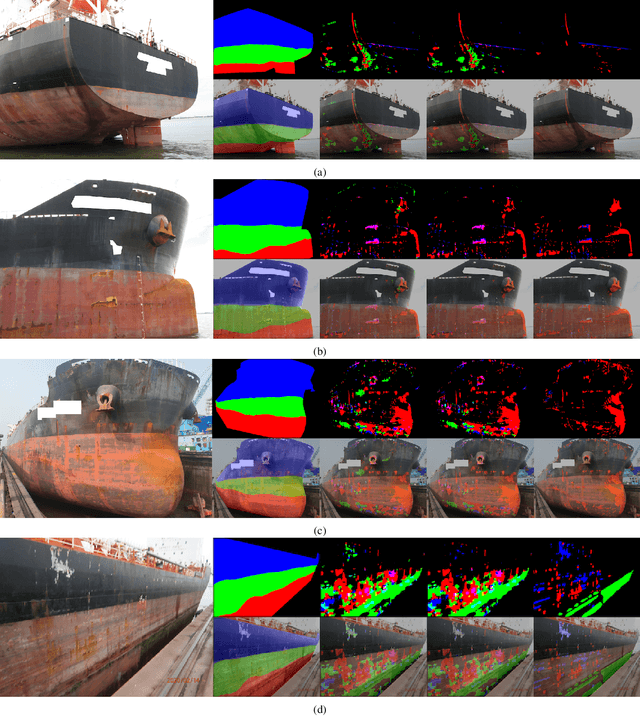

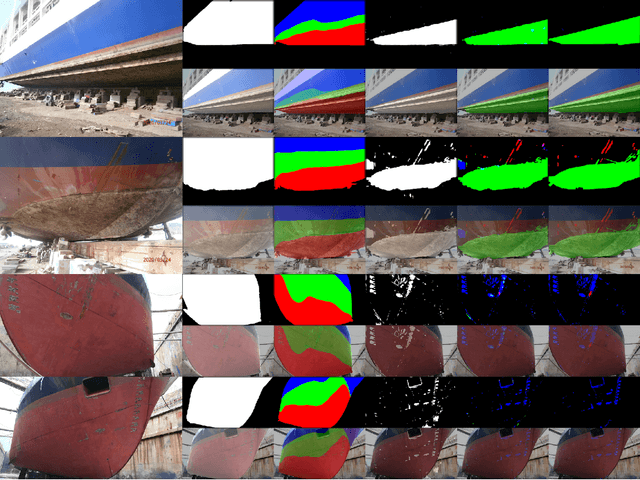

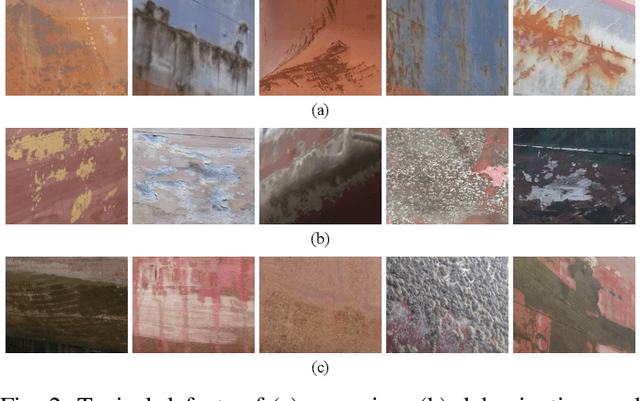

Abstract: Detecting and evaluating surface coating defects is important for marine vessel maintenance. Currently, the assessment is carried out manually by qualified inspectors using international standards and their own experience. Automating the process is highly challenging because of the high level of variation in vessel type, paint surface, coatings, lighting conditions, weather conditions, paint colors, areas of the vessel, and time in service. We present a novel deep learning-based pipeline to detect and evaluate the percentage of corrosion, fouling, and delamination on the vessel surface from normal photographs. We propose a multi-stage image processing framework, including ship section segmentation, defect segmentation, and defect classification, to automatically recognize different types of defects and measure the coverage percentage on the ship surface. Experimental results demonstrate that our proposed pipeline can objectively perform an assessment similar to that of a qualified inspector.
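To make the "coverage percentage" idea concrete, here is a minimal sketch of how such a figure could be computed once a ship-section mask and a defect mask are available. It is not the paper's implementation: the function name `coverage_percent`, the list-of-lists mask format, and the toy masks are assumptions made for illustration only.

```python
def coverage_percent(section_mask, defect_mask):
    """Percentage of ship-section pixels that are also marked as defect.

    Both masks are same-sized 2D lists of 0/1 values (hypothetical format).
    Defect pixels that fall outside the ship section are ignored.
    """
    section_pixels = 0
    defect_pixels = 0
    for section_row, defect_row in zip(section_mask, defect_mask):
        for in_section, is_defect in zip(section_row, defect_row):
            if in_section:
                section_pixels += 1
                if is_defect:
                    defect_pixels += 1
    if section_pixels == 0:
        return 0.0  # no ship surface visible, so nothing to measure
    return 100.0 * defect_pixels / section_pixels


# Toy 3x4 masks: 8 pixels belong to the ship section, 2 of them are corroded.
section = [
    [0, 1, 1, 1],
    [0, 1, 1, 1],
    [0, 1, 1, 0],
]
corrosion = [
    [0, 0, 1, 1],
    [1, 0, 0, 0],  # this defect pixel lies outside the section and is ignored
    [0, 0, 0, 0],
]
print(coverage_percent(section, corrosion))  # 25.0
```

Restricting the count to pixels inside the section mask is the design choice that matters here: it keeps background such as water or sky from diluting the percentage.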

GlideNet: Global, Local and Intrinsic based Dense Embedding NETwork for Multi-category Attributes Prediction

Mar 14, 2022

Abstract: Attaching attributes (such as color, shape, state, action) to object categories is an important computer vision problem. Attribute prediction has seen exciting recent progress and is often formulated as a multi-label classification problem. Yet significant challenges remain in: 1) predicting diverse attributes over multiple categories, 2) modeling attribute-category dependencies, 3) capturing both global and local scene context, and 4) predicting attributes of objects with low pixel counts. To address these issues, we propose a novel multi-category attribute prediction deep architecture named GlideNet, which contains three distinct feature extractors. A global feature extractor recognizes what objects are present in a scene, whereas a local one focuses on the area surrounding the object of interest. Meanwhile, an intrinsic feature extractor uses an extension of standard convolution dubbed Informed Convolution to retrieve features of objects with low pixel counts. GlideNet uses gating mechanisms with binary masks and its self-learned category embedding to combine the dense embeddings. Collectively, the Global-Local-Intrinsic blocks comprehend the scene's global context while attending to the characteristics of the local object of interest. Finally, using the combined features, an interpreter predicts the attributes, and the length of the output is determined by the category, thereby removing unnecessary attributes. GlideNet achieves compelling results on two recent and challenging datasets -- VAW and CAR -- for large-scale attribute prediction. For instance, it obtains more than 5% gain over the state of the art in the mean recall (mR) metric. GlideNet's advantages are especially apparent when predicting attributes of objects with low pixel counts as well as attributes that demand global context understanding. Finally, we show that GlideNet excels in training-starved real-world scenarios.
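The abstract reports gains in mean recall (mR) but does not define it. A common reading for multi-label problems, assumed here, is the recall of each attribute averaged over all attributes that have at least one positive example. The sketch below follows that assumption; the function name `mean_recall` and the toy labels are illustrative and may differ from the evaluation protocol used for VAW and CAR.

```python
def mean_recall(y_true, y_pred):
    """Average of per-attribute recall over a multi-label dataset.

    y_true and y_pred are lists of equal-length 0/1 vectors, one per object.
    Attributes with no positive ground-truth example are skipped
    (an assumption of this sketch, since recall is undefined for them).
    """
    num_attributes = len(y_true[0])
    recalls = []
    for a in range(num_attributes):
        positives = sum(1 for t in y_true if t[a] == 1)
        if positives == 0:
            continue
        true_positives = sum(
            1 for t, p in zip(y_true, y_pred) if t[a] == 1 and p[a] == 1
        )
        recalls.append(true_positives / positives)
    return sum(recalls) / len(recalls) if recalls else 0.0


# Four objects, three attributes (toy data).
y_true = [[1, 0, 1], [1, 1, 0], [0, 1, 0], [1, 0, 0]]
y_pred = [[1, 0, 0], [1, 1, 0], [0, 0, 0], [0, 0, 1]]

# Per-attribute recall is 2/3, 1/2 and 0, so the mean is about 0.389.
print(round(mean_recall(y_true, y_pred), 3))
```

Averaging per attribute rather than per prediction gives rare attributes the same weight as frequent ones, which is why the metric is popular for long-tailed attribute sets.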

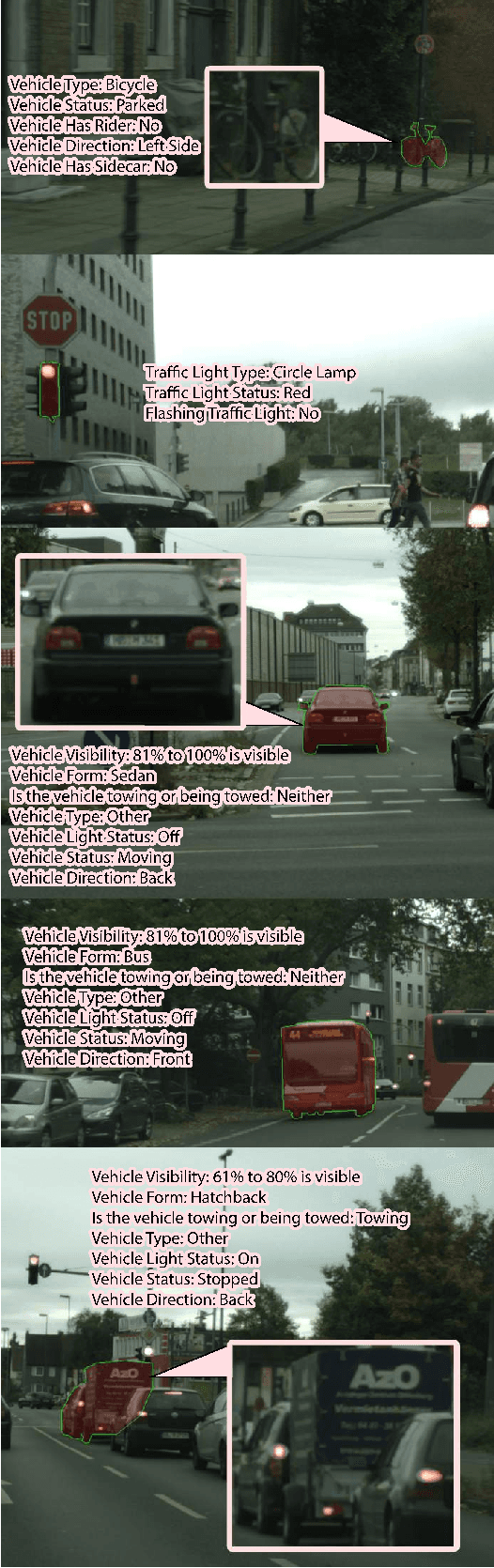

CAR -- Cityscapes Attributes Recognition A Multi-category Attributes Dataset for Autonomous Vehicles

Nov 16, 2021

Abstract: Self-driving vehicles are the future of transportation. With current advancements in this field, the world is getting closer to safe roads with almost zero probability of accidents and with human errors eliminated. However, plenty of research and development is still necessary to reach that level of robustness. One important aspect is to understand a scene fully, including all details, because some characteristics (attributes) of objects in a scene (drivers' behavior, for instance) could be imperative for correct decision making. However, current algorithms suffer from low-quality datasets with such rich attributes. Therefore, in this paper, we present a new dataset for attribute recognition -- Cityscapes Attributes Recognition (CAR). The new dataset extends the well-known Cityscapes dataset by adding an important annotation layer of attributes for the objects in each image. Currently, we have annotated more than 32k instances of various categories (Vehicles, Pedestrians, etc.). The dataset has a structured and tailored taxonomy where each category has its own set of possible attributes. The tailored taxonomy focuses on attributes that are most beneficial for developing better self-driving algorithms that depend on accurate computer vision and scene comprehension. We have also created an API for the dataset to ease the usage of CAR. The API can be accessed through https://github.com/kareem-metwaly/CAR-API.
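A taxonomy in which each category has its own set of possible attributes can be pictured as a mapping from category to attribute list. The sketch below is purely illustrative: the category and attribute names are hypothetical, not taken from CAR, and the code does not use or imitate the CAR-API.

```python
# Hypothetical taxonomy: every category owns its own attribute vocabulary.
TAXONOMY = {
    "vehicle": ["is_parked", "is_moving", "lights_on"],
    "pedestrian": ["is_walking", "is_crossing"],
}


def encode_attributes(category, active):
    """Encode the active attributes of one object as a 0/1 vector.

    The vector length equals the number of attributes defined for the
    category, so attributes that do not apply are never represented.
    """
    allowed = TAXONOMY[category]
    unknown = set(active) - set(allowed)
    if unknown:
        raise ValueError(
            f"attributes {sorted(unknown)} are not defined for {category!r}"
        )
    return [1 if name in active else 0 for name in allowed]


print(encode_attributes("vehicle", {"is_moving", "lights_on"}))  # [0, 1, 1]
print(encode_attributes("pedestrian", {"is_crossing"}))          # [0, 1]
```

Because the label vector is sized per category, a model trained on such data only has to predict attributes that make sense for the object at hand.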

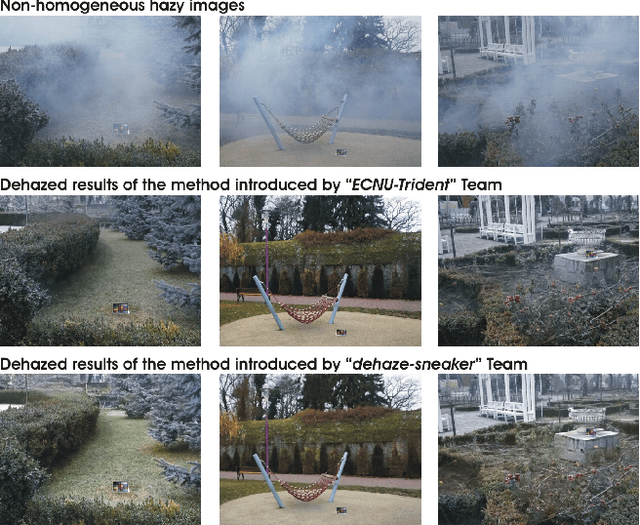

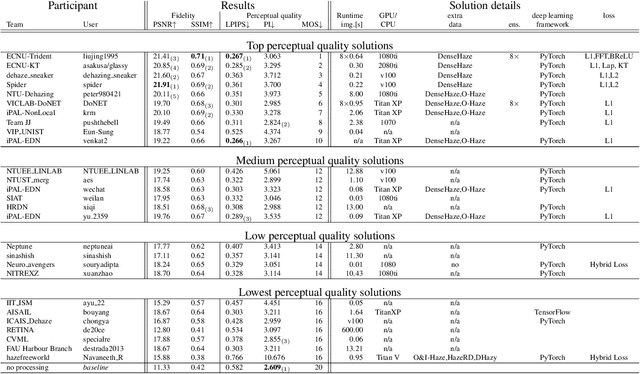

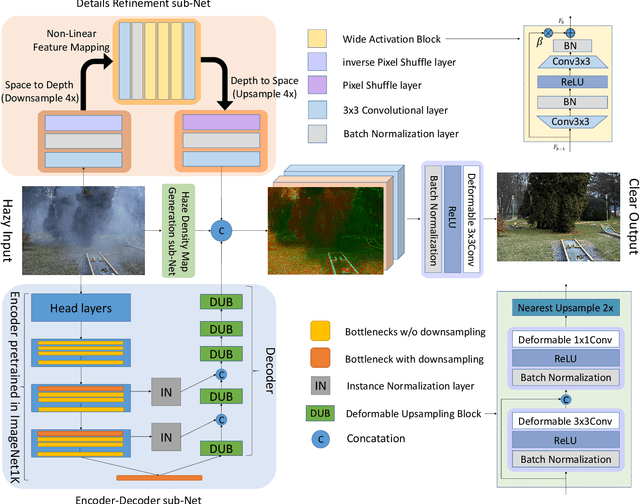

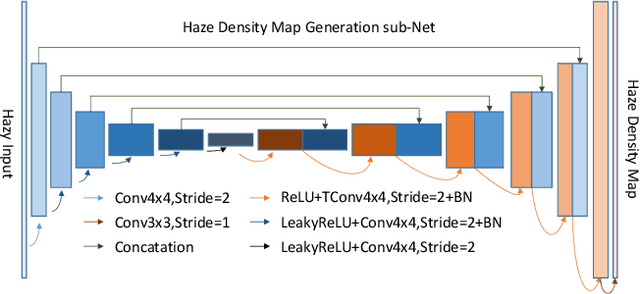

NTIRE 2020 Challenge on NonHomogeneous Dehazing

May 07, 2020

Abstract: This paper reviews the NTIRE 2020 Challenge on NonHomogeneous Dehazing of images (restoration of rich details in hazy images). We focus on the proposed solutions and their results evaluated on NH-Haze, a novel dataset consisting of 55 pairs of real haze-free and nonhomogeneous hazy images recorded outdoors. NH-Haze is the first realistic nonhomogeneous haze dataset that provides ground truth images. The nonhomogeneous haze has been produced using a professional haze generator that imitates the real conditions of haze scenes. 168 participants registered in the challenge and 27 teams competed in the final testing phase. The proposed solutions gauge the state-of-the-art in image dehazing.
