Junhang Li

Instruct2See: Learning to Remove Any Obstructions Across Distributions

May 23, 2025

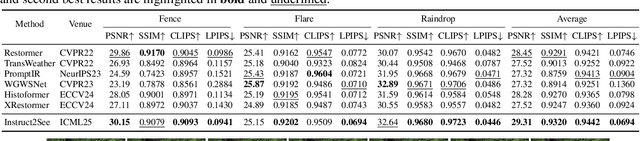

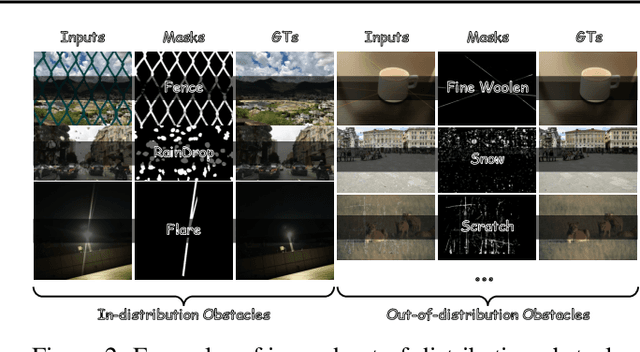

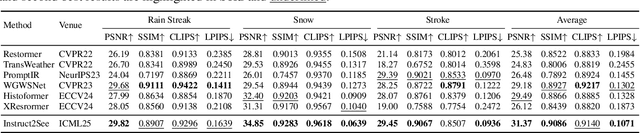

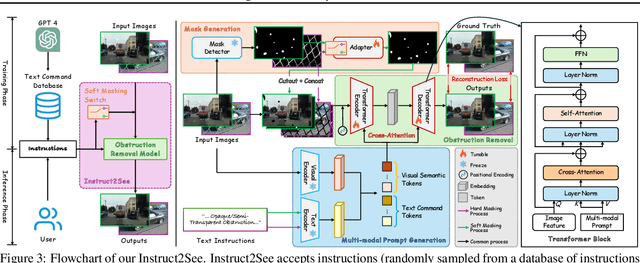

Abstract: Images are often obstructed by various obstacles due to capture limitations, hindering the observation of objects of interest. Most existing methods address occlusions from specific elements like fences or raindrops, but are constrained by the wide range of real-world obstructions, making comprehensive data collection impractical. To overcome these challenges, we propose Instruct2See, a novel zero-shot framework capable of handling both seen and unseen obstacles. The core idea of our approach is to unify obstruction removal by treating it as a soft-hard mask restoration problem, where any obstruction can be represented using multi-modal prompts, such as visual semantics and textual instructions, processed through a cross-attention unit to enhance contextual understanding and improve mode control. Additionally, a tunable mask adapter allows for dynamic soft masking, enabling real-time adjustment of inaccurate masks. Extensive experiments on both in-distribution and out-of-distribution obstacles show that Instruct2See consistently achieves strong performance and generalization in obstruction removal, regardless of whether the obstacles were present during the training phase. Code and dataset are available at https://jhscut.github.io/Instruct2See.

Adaptive Weighted Nonnegative Matrix Factorization for Robust Feature Representation

Jun 07, 2022

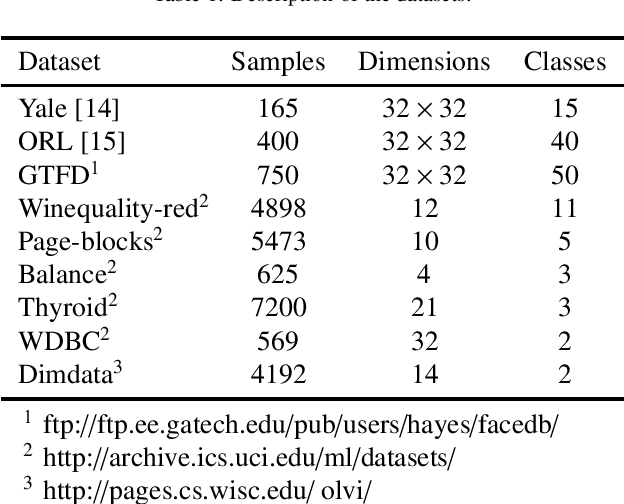

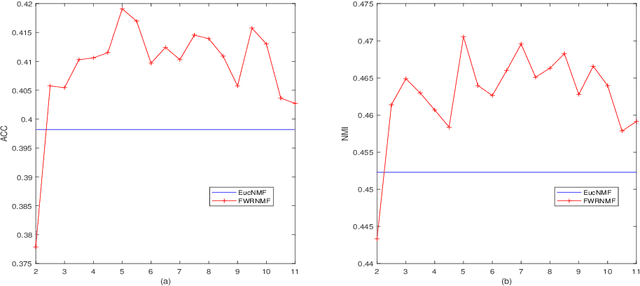

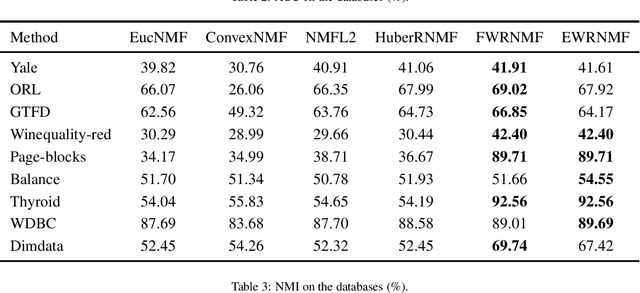

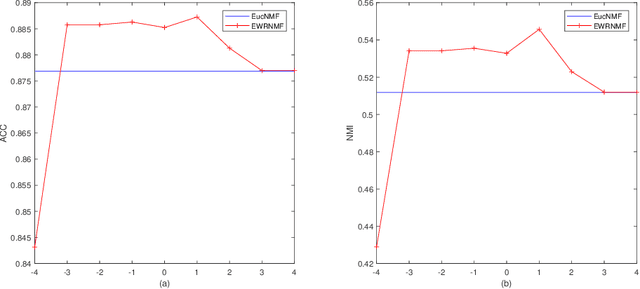

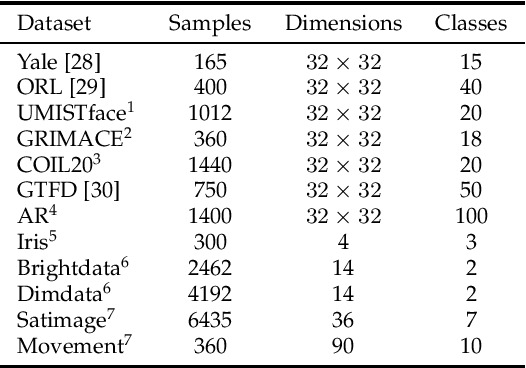

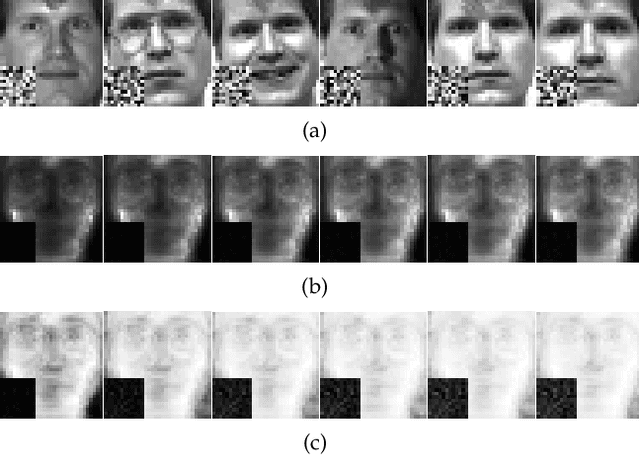

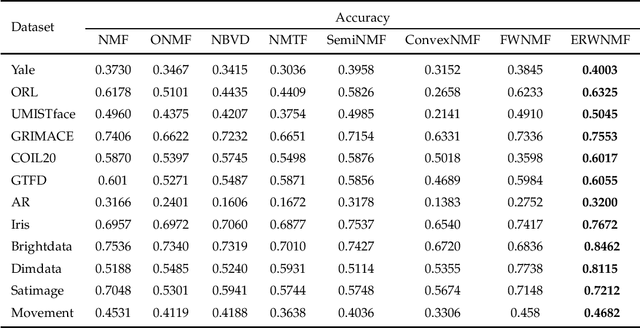

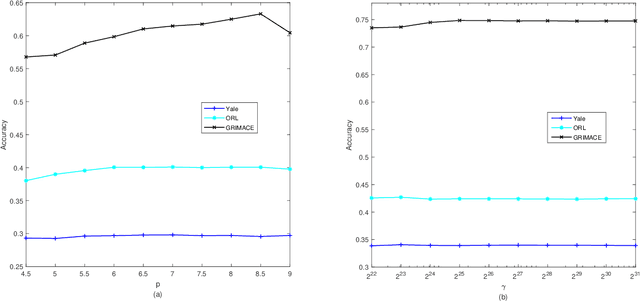

Abstract: Nonnegative matrix factorization (NMF) has been widely used for dimensionality reduction in machine learning. However, traditional NMF does not properly handle outliers, which makes it sensitive to noise. To improve the robustness of NMF, this paper proposes an adaptive weighted NMF that introduces weights to reflect the different importance of each data point, thereby reducing the algorithm's sensitivity to noisy data. This differs markedly from existing robust NMFs, which use a slow-growth similarity measure. Specifically, two strategies are proposed to achieve this: a fuzzier weighted technique and an entropy weighted regularized technique, both of which lead to an iterative solution with a simple form. Experimental results on several real datasets with noise show that the new methods yield a more robust feature representation than existing methods.
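The idea of down-weighting noisy data points can be illustrated with a minimal sketch. This is not the paper's algorithm: it assumes a weighted Frobenius objective with one weight per sample (column of `X`), standard multiplicative updates, and a softmax-style entropy weight `w_i ∝ exp(-e_i / gamma)`, where `e_i` is the reconstruction error of sample `i`. The function name `entropy_weighted_nmf` and the parameters `gamma`, `n_iter`, and `eps` are hypothetical.

```python
import numpy as np

def entropy_weighted_nmf(X, rank, gamma=1.0, n_iter=200, eps=1e-10, seed=0):
    """Illustrative sample-weighted NMF (assumed formulation, not the paper's).

    X has shape (n_features, n_samples); each column is one data point.
    Returns nonnegative factors U, V and per-sample weights w (summing to 1).
    """
    rng = np.random.default_rng(seed)
    n_features, n_samples = X.shape
    U = rng.random((n_features, rank))
    V = rng.random((rank, n_samples))
    w = np.full(n_samples, 1.0 / n_samples)  # start with uniform weights

    for _ in range(n_iter):
        # Multiplicative update for U under the weighted objective
        # sum_i w_i * ||x_i - U v_i||^2 (columns scaled by their weights).
        XW = X * w
        VW = V * w
        U *= (XW @ V.T) / (U @ (VW @ V.T) + eps)

        # The per-column weight cancels in the update for V.
        V *= (U.T @ X) / (U.T @ U @ V + eps)

        # Entropy-regularized weights: closed-form softmax of negative errors.
        err = np.sum((X - U @ V) ** 2, axis=0)
        z = -(err - err.min()) / gamma  # shift for numerical stability
        w = np.exp(z)
        w /= w.sum()

    return U, V, w
```

Under these assumptions, a sample that the low-rank model reconstructs poorly (such as an outlier) receives a small weight and therefore has little influence on the learned basis `U`. A smaller `gamma` makes the weighting more selective; a larger `gamma` pushes the weights toward uniform.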

Subspace Nonnegative Matrix Factorization for Feature Representation

Apr 18, 2022

Abstract: Traditional nonnegative matrix factorization (NMF) learns a new feature representation on the whole data space, which means treating all features equally. However, a subspace is often sufficient for accurate representation in practical applications, and redundant features can be invalid or even harmful. For example, if some of a camera's sensors are destroyed, the corresponding pixels in photos from that camera do not help identify the content, so only the subspace consisting of the remaining pixels is worthy of attention. This paper proposes a new NMF method that introduces adaptive weights to identify key features in the original space, so that only a subspace is involved in generating the new representation. Two strategies are proposed to achieve this: the fuzzier weighted technique and the entropy regularized weighted technique, both of which result in an iterative solution with a simple form. Experimental results on several real-world datasets demonstrate that the proposed methods can generate a more accurate feature representation than existing methods. The code developed in this study is available at https://github.com/WNMF1/FWNMF-ERWNMF.
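To see why weighting schemes of this kind can lead to simple iterative solutions, consider the following sketch. It is a generic derivation under stated assumptions, not necessarily the paper's exact formulation: $e_j \ge 0$ denotes the reconstruction error of feature $j$ with the factors held fixed, the weights are constrained to sum to one, and the exponent $p > 1$ and the coefficient $\gamma > 0$ are hypothetical hyperparameters.

```latex
% Fuzzier-style weighting: weights raised to a power p > 1
\min_{w}\ \sum_{j} w_j^{\,p}\, e_j
\quad \text{s.t.}\ \sum_{j} w_j = 1,\ w_j \ge 0
\qquad\Longrightarrow\qquad
w_j = \frac{e_j^{-1/(p-1)}}{\sum_{k} e_k^{-1/(p-1)}}

% Entropy-regularized weighting: linear cost plus negative entropy
\min_{w}\ \sum_{j} w_j\, e_j + \gamma \sum_{j} w_j \ln w_j
\quad \text{s.t.}\ \sum_{j} w_j = 1
\qquad\Longrightarrow\qquad
w_j = \frac{\exp(-e_j/\gamma)}{\sum_{k} \exp(-e_k/\gamma)}
```

Both closed forms follow from setting the derivative of the Lagrangian to zero: $p\, w_j^{p-1} e_j = \lambda$ in the first case (which assumes $e_j > 0$) and $e_j + \gamma(\ln w_j + 1) = \lambda$ in the second. In either case, features with large reconstruction error receive small weights, so alternating between updating the factors and updating the weights gives an iterative scheme in which each step is cheap to compute.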
