Jonathan Tirado

Multimodal Limbless Crawling Soft Robot with a Kirigami Skin

Jun 05, 2025

Abstract: Limbless creatures can crawl on flat surfaces by deforming their bodies and interacting with asperities on the ground, offering a biological blueprint for designing efficient limbless robots. Inspired by this natural locomotion, we present a soft robot capable of navigating complex terrains using a combination of rectilinear motion and asymmetric steering gaits. The robot is made of a pair of antagonistic inflatable soft actuators covered with a flexible kirigami skin with asymmetric frictional properties. The robot's rectilinear locomotion is achieved through cyclic inflation of internal chambers with precise phase shifts, enabling forward progression. Steering is accomplished using an asymmetric gait, allowing for both in-place rotation and wide turns. To validate its mobility in obstacle-rich environments, we tested the robot in an arena with coarse substrates and multiple obstacles. Real-time feedback from onboard proximity sensors, integrated with a human-machine interface (HMI), allowed adaptive control to avoid collisions. This study highlights the potential of bioinspired soft robots for applications in confined or unstructured environments, such as search-and-rescue operations, environmental monitoring, and industrial inspections.
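The phase-shifted inflation cycle described above can be illustrated with a minimal timing sketch. The abstract does not specify the number of chambers, the period, the duty cycle, or the phase offsets, so the `Chamber` class, the `valve_states` function, and all numeric values here are hypothetical; the sketch only shows how a single square-wave inflation command can be shifted per chamber.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Chamber:
    name: str
    phase: float  # hypothetical phase offset, as a fraction of the gait period (0 <= phase < 1)


def valve_states(t: float, period: float, duty: float, chambers: list[Chamber]) -> dict[str, bool]:
    """Return whether each chamber's inflation valve is open at time t.

    Every chamber follows the same square wave (inflated for a fraction
    `duty` of each period), delayed by its own phase offset.
    """
    states = {}
    for c in chambers:
        # Position within the current cycle, in [0, 1), after the phase shift.
        cycle_pos = ((t / period) - c.phase) % 1.0
        states[c.name] = cycle_pos < duty
    return states


# Two hypothetical chambers half a period apart with a 50% duty cycle.
chambers = [Chamber("A", 0.0), Chamber("B", 0.5)]
for t in (0.0, 0.25, 0.5, 0.75):
    print(t, valve_states(t, period=1.0, duty=0.5, chambers=chambers))
```

With a half-period offset and a 50% duty cycle, the two chambers inflate alternately, which is one simple way to drive an antagonistic pair; the robot's actual gait may use other offsets and timings.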

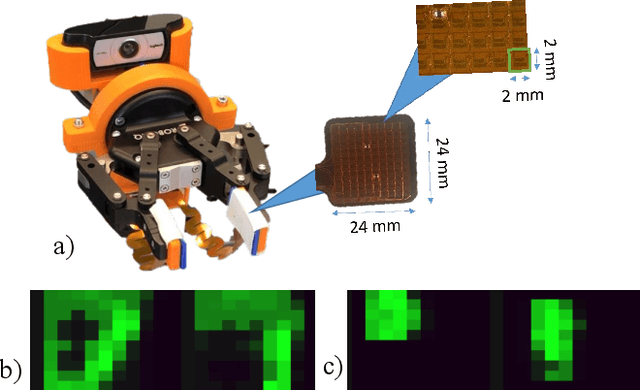

TiltXter: CNN-based Electro-tactile Rendering of Tilt Angle for Telemanipulation of Pasteur Pipettes

Sep 24, 2024

Abstract: The shape of deformable objects can change drastically when they are grasped by robotic grippers, making their alignment ambiguous to perceive and thus causing errors in robot positioning and telemanipulation. Rendering clear tactile patterns is fundamental to increasing users' precision and dexterity through tactile haptic feedback during telemanipulation. Different methods therefore need to be studied for decoding sensor data into haptic stimuli. This work presents a telemanipulation system for plastic pipettes that consists of a Force Dimension Omega.7 haptic interface endowed with two electro-stimulation arrays and two tactile sensor arrays embedded in a 2-finger Robotiq gripper. We propose a novel approach based on convolutional neural networks (CNN) to detect the tilt of deformable objects. The CNN generates a tactile pattern from the recognized tilt data, which is then rendered as electro-tactile stimuli to the user during telemanipulation. The study showed that with the CNN algorithm, users' tilt recognition increased from 23.13% with the downsized data to 57.9%, and the success rate during teleoperation increased from 53.12% with the downsized data to 92.18% with the tactile patterns generated by the CNN.

CoboGuider: Haptic Potential Fields for Safe Human-Robot Interaction

Oct 25, 2021

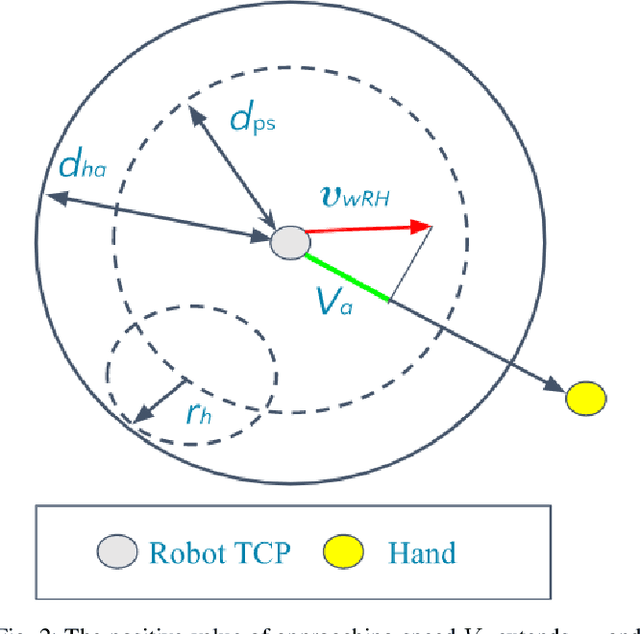

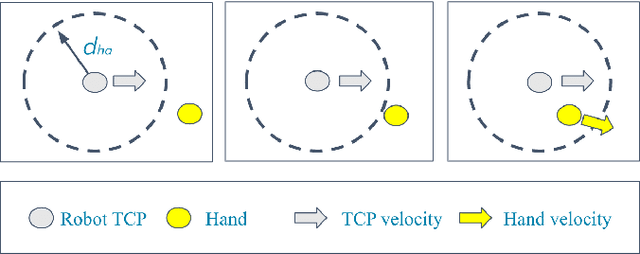

Abstract: Modern industry still relies on manual manufacturing operations, and safe human-robot interaction is of great interest. Speed and Separation Monitoring (SSM) allows close and efficient collaborative scenarios by maintaining a protective separation distance during robot operation. This paper presents a novel approach that strengthens SSM safety requirements by providing haptic feedback to a worker in the robotic cell. Tactile stimuli provide early warning of dangerous movements and proximity to the robot, based on the human reaction time and the instantaneous velocities of the robot and the operator. A preliminary experiment was performed to measure participants' reaction times to tactile stimuli in a collaborative environment under controlled conditions. In a second experiment, we evaluated the approach in a case study in which a human worker and a cobot collaboratively assembled a planetary gear. Results show that the approach increased the average minimum distance between the robot's end-effector and the operator's hand by 44% compared with relying on visual feedback alone. Moreover, participants without haptic support failed several times to maintain the protective separation distance.
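The SSM idea can be made concrete with the simplified, constant-speed form of the protective separation distance from ISO/TS 15066, $S_p = v_h (T_r + T_s) + v_r T_r + S_s + C + Z_d + Z_r$. The abstract does not give the paper's own formula, so this is a sketch under that assumption. The `should_warn` rule, which adds a margin so the operator has time to react to a tactile cue before the separation drops below $S_p$, is a hypothetical illustration, and all names and parameter values are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class SSMParams:
    robot_reaction_time: float      # T_r [s]
    robot_stopping_time: float      # T_s [s]
    robot_stopping_distance: float  # S_s [m]
    intrusion_distance: float       # C [m]
    position_uncertainty: float     # Z_d + Z_r combined [m]


def protective_separation(v_h: float, v_r: float, p: SSMParams) -> float:
    """Simplified constant-speed protective separation distance S_p [m]."""
    # Human motion while the robot reacts and then brakes to a stop.
    s_h = v_h * (p.robot_reaction_time + p.robot_stopping_time)
    # Robot motion before braking begins.
    s_r = v_r * p.robot_reaction_time
    return s_h + s_r + p.robot_stopping_distance + p.intrusion_distance + p.position_uncertainty


def should_warn(separation: float, v_h: float, v_r: float,
                human_reaction_time: float, p: SSMParams) -> bool:
    """Hypothetical rule: warn early enough that the operator can react before
    the separation falls below S_p, assuming both approach at constant speed."""
    warning_distance = protective_separation(v_h, v_r, p) + (v_h + v_r) * human_reaction_time
    return separation <= warning_distance


# Hypothetical parameters, for illustration only.
params = SSMParams(0.1, 0.3, 0.1, 0.05, 0.05)
print(protective_separation(1.0, 0.5, params))
print(should_warn(0.9, 1.0, 0.5, 0.2, params))
```

Using the measured human reaction time as a margin on top of $S_p$ is what lets a tactile cue arrive before the standard's limit is reached rather than at it.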

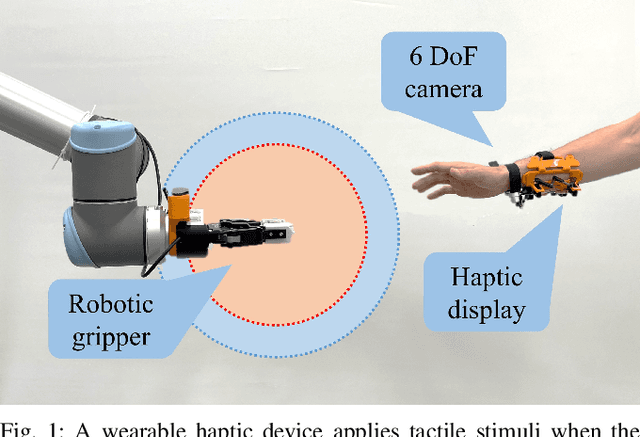

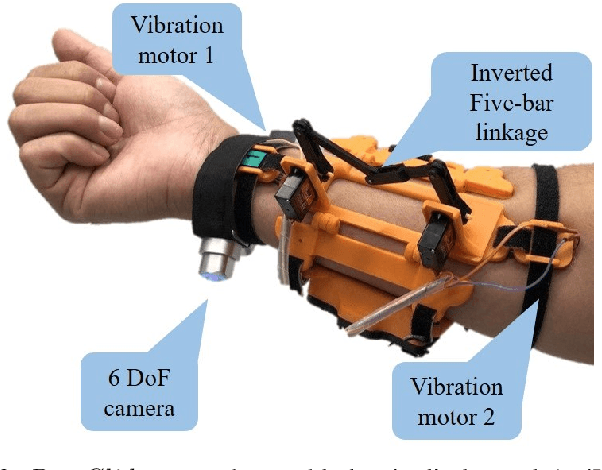

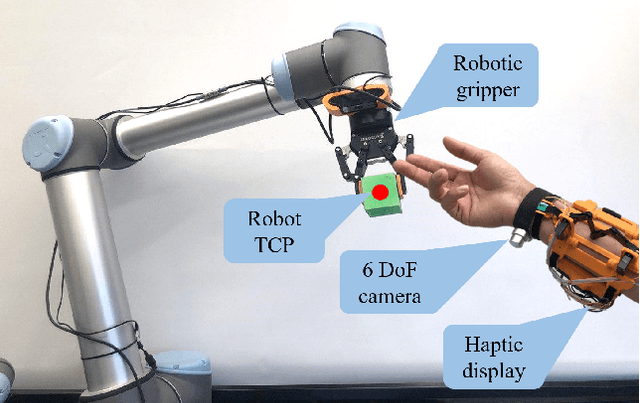

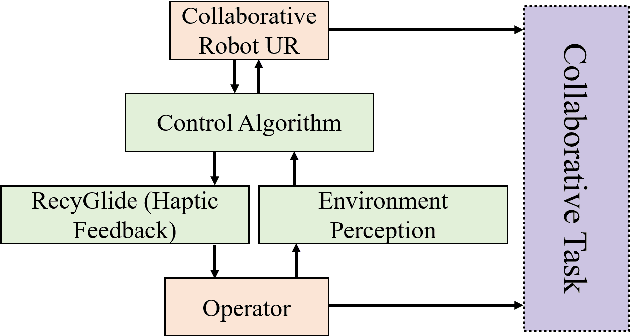

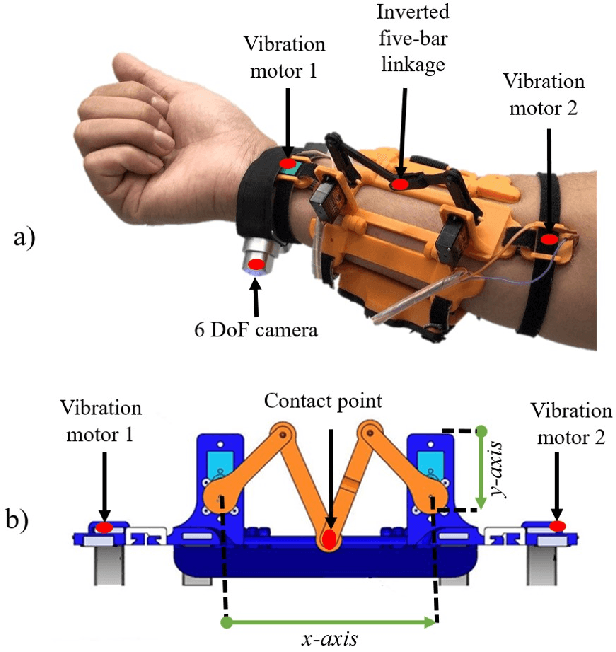

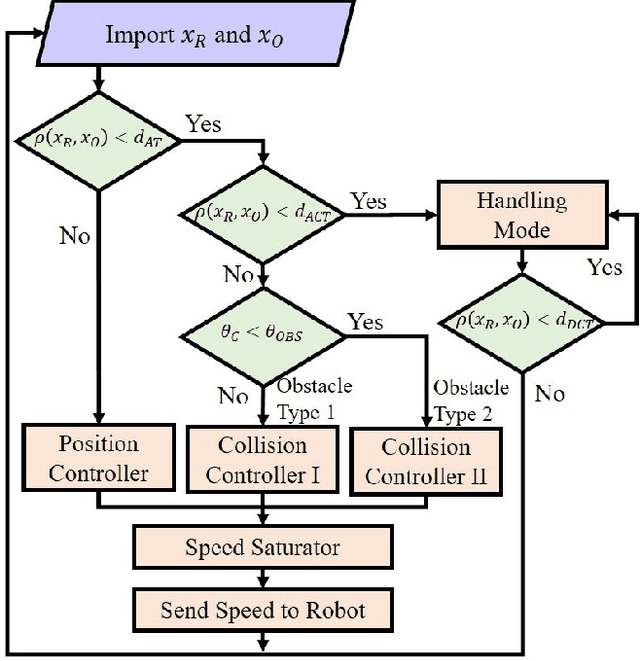

CoHaptics: Development of Human-Robot Collaborative System with Forearm-worn Haptic Display to Increase Safety in Future Factories

Sep 13, 2021

Abstract: Complex tasks require human collaboration since robots lack sufficient dexterity. However, robots are still used as instruments rather than as collaborative systems. We introduce a framework to ensure safety in a human-robot collaborative environment. The system is composed of a haptic feedback display, a low-cost wearable mocap system, and a new collision avoidance algorithm based on Artificial Potential Fields (APF). The wearable optical motion capture system tracks the human hand position with high accuracy and low latency over large working areas. This study evaluates whether haptic feedback improves safety in human-robot collaboration. Three experiments were carried out to evaluate the performance of the proposed system. The first evaluated human responses to the haptic device during interaction with the robot's Tool Center Point (TCP). The second analyzed human-robot behavior during an imminent collision. The third evaluated the system in a collaborative activity in a shared working environment. The study showed that including haptic feedback in the control loop increased the safe distance (minimum robot-obstacle distance) by 4.1 cm, from 12.39 cm to 16.55 cm, and that the robot's path was reduced by 81% when the collision avoidance algorithm was activated.
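The abstract does not give the APF formulation used in CoHaptics. As an assumption, the sketch below uses the classic Khatib-style formulation: a linear attractive term pulling the TCP toward its goal, plus a repulsive term that acts only within an influence distance `d0` of each obstacle (for example, the tracked hand). The function name `apf_step` and the gains `k_att`, `eta`, and `d0` are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def apf_step(
    x: Sequence[float],
    goal: Sequence[float],
    obstacles: Sequence[Sequence[float]],
    k_att: float = 1.0,  # hypothetical attractive gain
    eta: float = 0.01,   # hypothetical repulsive gain
    d0: float = 0.2,     # hypothetical influence distance [m]
) -> Vec3:
    """Return the APF 'force' at TCP position x (often used as a velocity command)."""
    # Attractive term: negative gradient of 0.5 * k_att * |x - goal|^2.
    force = [k_att * (g - xi) for xi, g in zip(x, goal)]
    for obs in obstacles:
        diff = [xi - oi for xi, oi in zip(x, obs)]  # points from the obstacle to the TCP
        d = math.sqrt(sum(c * c for c in diff))
        if 0.0 < d <= d0:
            # Negative gradient of 0.5 * eta * (1/d - 1/d0)^2, directed away from the obstacle.
            mag = eta * (1.0 / d - 1.0 / d0) / (d * d)
            force = [f + mag * c / d for f, c in zip(force, diff)]
    return (force[0], force[1], force[2])


# A hand 10 cm behind the TCP pushes it toward the goal harder than attraction alone.
print(apf_step((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), [(-0.1, 0.0, 0.0)]))
```

The resulting vector is usually scaled and clipped before being sent to the robot. Plain APF can also stall in a local minimum when an obstacle sits directly between the TCP and the goal, which is one reason improved variants are proposed.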

ZoomTouch: Multi-User Remote Robot Control in Zoom by DNN-based Gesture Recognition

Nov 07, 2020

Abstract: We present ZoomTouch, a breakthrough technology for real-time, multi-user control of a robot from Zoom through DNN-based gesture recognition. Users in the digital world can hold a video conference and operate the robot to perform dexterous manipulation of tangible objects. As an application scenario, we propose a remote COVID-19 testing laboratory that would considerably reduce the time needed to receive test data and replace medical assistants who work in protective gear in close proximity to infected cells. The proposed technology suggests a new type of reality in which multiple users can jointly interact with a remote object, e.g., designing a new building or cooking together in a robotic kitchen, and discuss or modify the results at the same time.

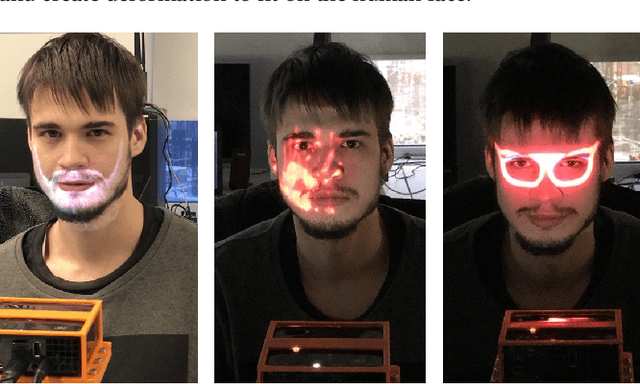

MaskBot: Real-time Robotic Projection Mapping with Head Motion Tracking

Nov 07, 2020

Abstract: Projection mapping systems on the human face are limited by latency and user movement, and the projection area is restricted by the positions of the projectors and cameras. We introduce MaskBot, a real-time projection mapping system operated by a 6 Degrees of Freedom (DoF) collaborative robot. The collaborative robot positions the projector and camera normal to the user's face to increase the projection area and reduce the system latency. A webcam is used to detect the face and to sense the robot-user distance in order to adjust the projection size and orientation. MaskBot projects different images onto the user's face, such as face modifications, make-up, and logos. Unlike existing methods, the presented system is the first to introduce robotic projection mapping. One prospective application is acquiring a dataset of adversarial images to challenge face detection DNN systems, such as Face ID.
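MaskBot adjusts the projection size using the sensed robot-user distance. Under a simple pinhole-projector assumption (not stated in the abstract), the projected frame widens linearly with distance, so an image must occupy fewer pixels to keep a constant physical size on the face. The function `image_width_px` and its parameters are hypothetical.

```python
import math


def image_width_px(target_width_m: float, distance_m: float,
                   hfov_deg: float, frame_width_px: int) -> float:
    """Pixel width an image must occupy to appear target_width_m wide on the face.

    Assumes an ideal pinhole projector aimed normal to the face, whose
    horizontal throw width at distance d is 2 * d * tan(hfov / 2).
    """
    throw_width_m = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return target_width_m / throw_width_m * frame_width_px


# Hypothetical 15 cm wide overlay, 90-degree projector, 1920-pixel frame.
for d in (0.5, 1.0):
    print(d, round(image_width_px(0.15, d, 90.0, 1920), 1))
```

Doubling the distance halves the required pixel width, which is why a live distance estimate is needed to keep the overlay registered as the user moves.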

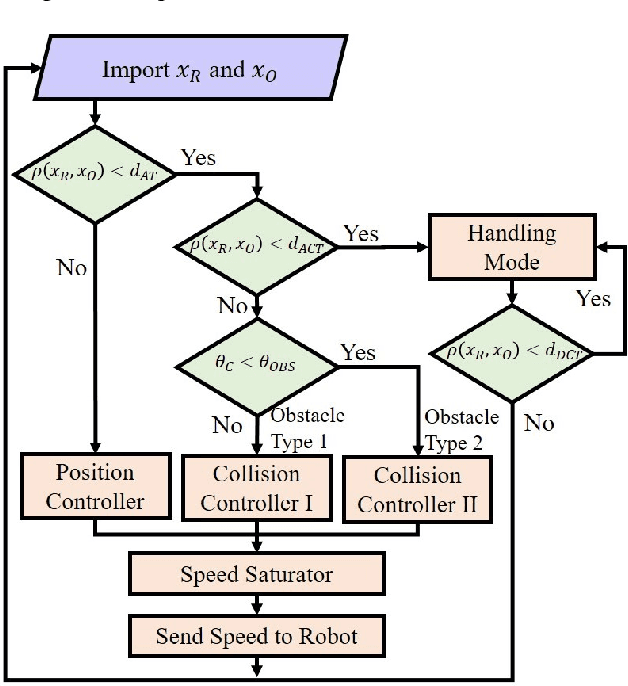

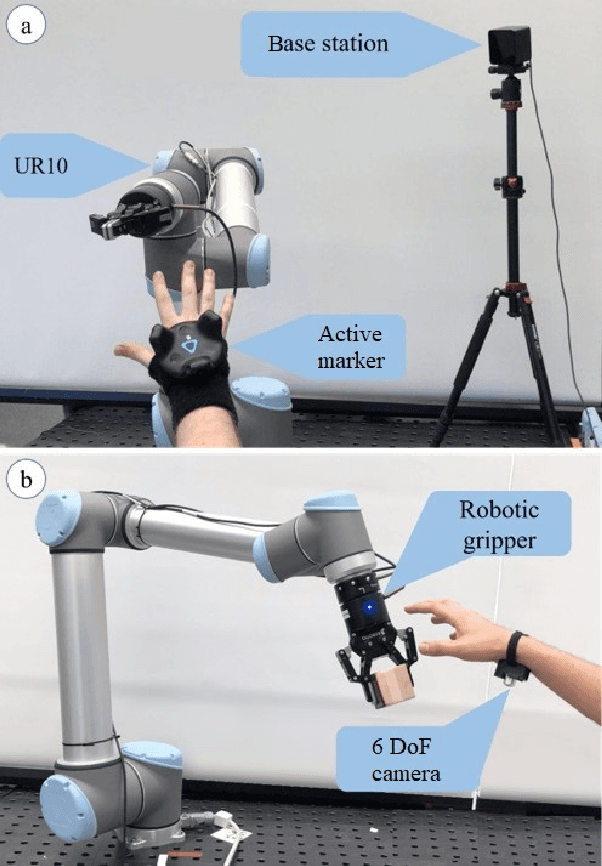

CobotGear: Interaction with Collaborative Robots using Wearable Optical Motion Capturing Systems

Jul 20, 2020

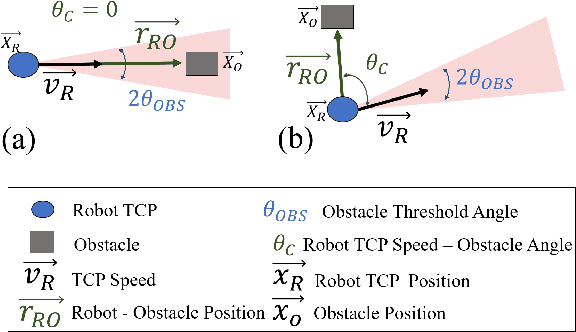

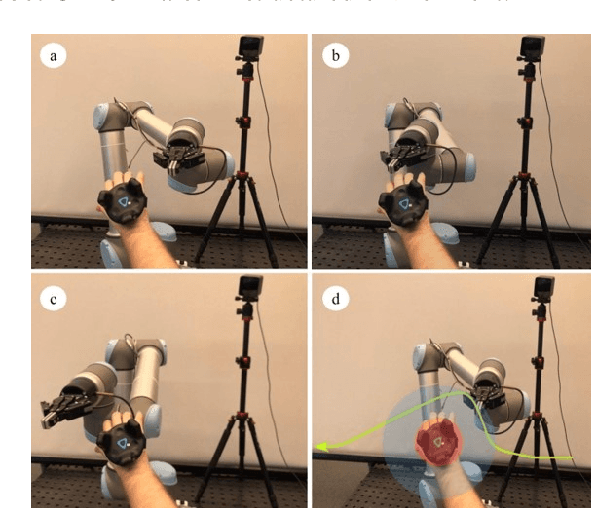

Abstract: In industrial applications, complex tasks require human collaboration since robots lack sufficient dexterity. However, robots are still implemented as tools rather than as collaborative intelligent systems. To ensure safety in human-robot collaboration, we introduce a system that integrates low-cost wearable mocap and an improved collision avoidance algorithm based on artificial potential fields. Wearable optical motion capture makes it possible to track the human hand position with high accuracy and low latency over large working areas. To increase the efficiency of the proposed algorithm, obstacles are discriminated into two types according to their collision probability. A preliminary experiment was performed to analyze the algorithm's behavior and to select the best values for the obstacle threshold angle $\theta_{OBS}$ and the avoidance threshold distance $d_{AT}$. The second experiment evaluated the system performance with $d_{AT} = 0.2$ m and $\theta_{OBS} = 45^\circ$. The third experiment evaluated the system in a real collaborative task. The results demonstrate robust performance of the robotic arm, which generates smooth, collision-free trajectories. The proposed technology will allow consumer robots to safely collaborate with humans in cluttered environments, e.g., factories, kitchens, living rooms, and restaurants.
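The abstract names the two thresholds but not the exact discrimination rule. One plausible reading, offered purely as a hypothetical sketch, ignores obstacles beyond $d_{AT}$ and labels a nearby obstacle as high collision probability when the angle between the TCP velocity and the direction to the obstacle is at most $\theta_{OBS}$. Only the threshold values come from the abstract; `classify_obstacle` and its three labels are assumptions.

```python
import math
from typing import Sequence

D_AT = 0.2        # avoidance threshold distance [m], value from the abstract
THETA_OBS = 45.0  # obstacle threshold angle [deg], value from the abstract


def classify_obstacle(tcp: Sequence[float], tcp_velocity: Sequence[float],
                      obstacle: Sequence[float], d_at: float = D_AT,
                      theta_obs_deg: float = THETA_OBS) -> str:
    """Hypothetical rule returning 'ignore', 'low', or 'high' collision probability."""
    to_obs = [o - p for o, p in zip(obstacle, tcp)]
    d = math.sqrt(sum(c * c for c in to_obs))
    if d > d_at:
        return "ignore"  # outside the avoidance threshold distance
    speed = math.sqrt(sum(v * v for v in tcp_velocity))
    if speed == 0.0 or d == 0.0:
        return "high"  # direction undefined: treat conservatively
    cos_angle = sum(a * b for a, b in zip(to_obs, tcp_velocity)) / (d * speed)
    # Clamp to guard against rounding slightly outside [-1, 1] before acos.
    angle_deg = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    return "high" if angle_deg <= theta_obs_deg else "low"


# TCP moving along +x; obstacles ahead, beside, and far away.
v = (1.0, 0.0, 0.0)
print(classify_obstacle((0, 0, 0), v, (0.1, 0.0, 0.0)))
print(classify_obstacle((0, 0, 0), v, (0.0, 0.1, 0.0)))
print(classify_obstacle((0, 0, 0), v, (0.5, 0.0, 0.0)))
```

Separating obstacles this way lets the avoidance term react strongly only to obstacles in the direction of motion, which is one way such a split could improve efficiency.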
