Juan Heredia

EcBot: Data-Driven Energy Consumption Open-Source MATLAB Library for Manipulators

Aug 08, 2025

Abstract:Existing literature proposes models for estimating the electrical power of manipulators, yet two primary limitations prevail. First, most models are tested predominantly on traditional industrial robots. Second, these models often lack accuracy. To address these issues, we introduce an open-source MATLAB-based library designed to automatically generate energy consumption (EC) models for manipulators. The necessary inputs for the library are Denavit-Hartenberg parameters, link masses, and centers of mass. Additionally, our model is data-driven and requires real operational data, including joint positions, velocities, accelerations, electrical power, and corresponding timestamps. We validated our methodology on four lightweight robots from three manufacturers: Universal Robots, Franka Emika, and Kinova. The results show an RMSE ranging from 1.42 W to 2.80 W for the training dataset and from 1.45 W to 5.25 W for the testing dataset.
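The RMSE figures quoted above compare measured electrical power against model predictions, sample by sample. A minimal sketch of that metric follows; the function name `rmse` and the sample values are illustrative assumptions, not part of the EcBot library or its data.

```python
import math


def rmse(measured, predicted):
    """Root-mean-square error between two equally long sequences (here in watts)."""
    if len(measured) != len(predicted) or len(measured) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(m - p) ** 2 for m, p in zip(measured, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical power samples in watts, made up for illustration only.
measured_w = [100.0, 102.0, 98.0, 101.0]
predicted_w = [101.0, 100.0, 99.0, 101.0]
error_w = rmse(measured_w, predicted_w)
print(f"RMSE: {error_w:.2f} W")
```

Because the error is expressed in watts, it can be read directly against the power levels of the robot under test.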

Evaluating Robot Program Performance with Power Consumption Driven Metrics in Lightweight Industrial Robots

Aug 08, 2025

Abstract:The code performance of industrial robots is typically analyzed through CPU metrics, which overlook the physical impact of code on robot behavior. This study introduces a novel framework for assessing robot program performance from an embodiment perspective by analyzing the robot's electrical power profile. Our approach diverges from conventional CPU-based evaluations and instead uses a suite of normalized metrics, namely the energy utilization coefficient, the energy conversion metric, and the reliability coefficient, to capture how efficiently and reliably energy is used during task execution. Complementing these metrics, the established robot wear metric provides further insight into long-term reliability. We demonstrate the approach through an experimental case study in machine tending, comparing four programs with diverse strategies on a UR5e robot. The proposed metrics directly compare and categorize different robot programs, regardless of the specific task, by linking code performance to its physical manifestation through power consumption patterns. Our results reveal the strengths and weaknesses of each strategy, offering actionable insights for optimizing robot programming practices. Enhancing energy efficiency and reliability through this embodiment-centric approach not only improves individual robot performance but also supports broader industrial objectives such as sustainable manufacturing and cost reduction.

AR Training App for Energy Optimal Programming of Cobots

Oct 14, 2022

Abstract:Worldwide, most factories aim for low-cost and fast production, ignoring resource and energy consumption. However, high revenues have been accompanied by environmental degradation. The United Nations responded to this ecological problem by proposing the Sustainable Development Goals, one of which is Sustainable Production (Goal 12). In addition, the participation of lightweight robots, such as collaborative robots, in modern industrial production is increasing. The energy consumption of a single collaborative robot is not significant; however, the combined consumption of a growing number of cobots worldwide is. Consequently, our research focuses on strategies to reduce the energy consumption of lightweight robots with the aim of sustainable production. First, the energy consumption of the lightweight robot UR10e is assessed in a set of experiments. We analyzed the results to describe the relationship between energy consumption and the evaluation parameters, thus paving the way to optimization strategies. Next, we propose four strategies to reduce energy consumption: 1) optimal standby position, 2) optimal robot instruction, 3) optimal motion time, and 4) reduction of dissipative energy. The results show that cobots can potentially reduce their energy consumption by 3% to 37%, depending on the optimization technique. To disseminate the results of our research, we developed an AR game in which users learn how to program cobots energy-efficiently.

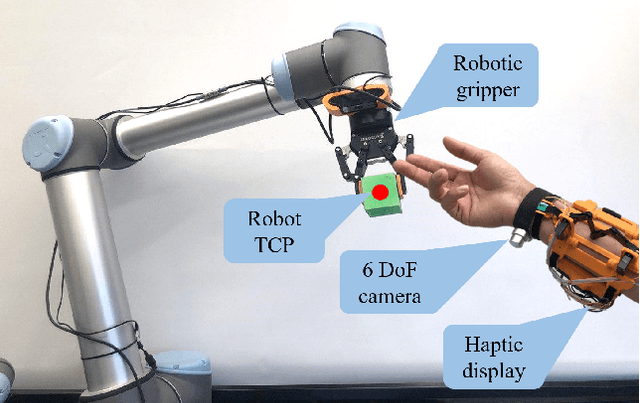

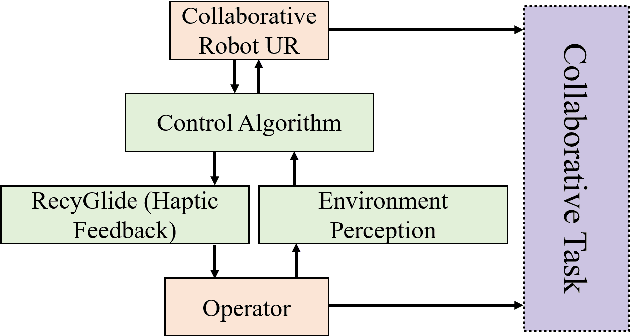

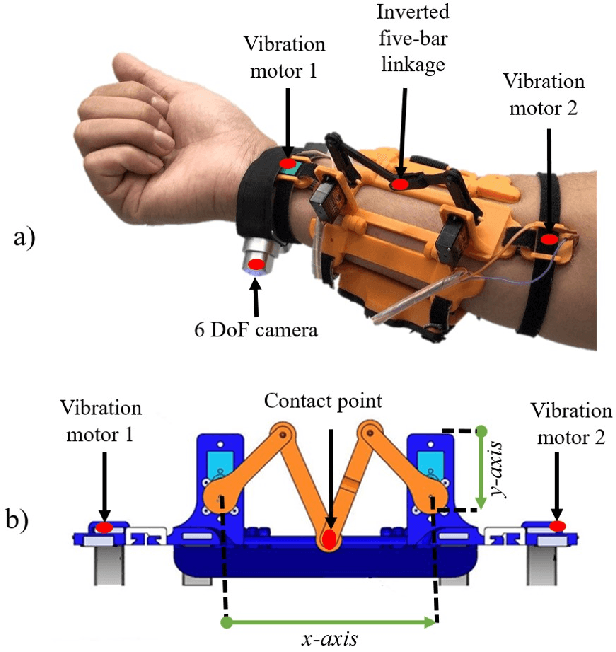

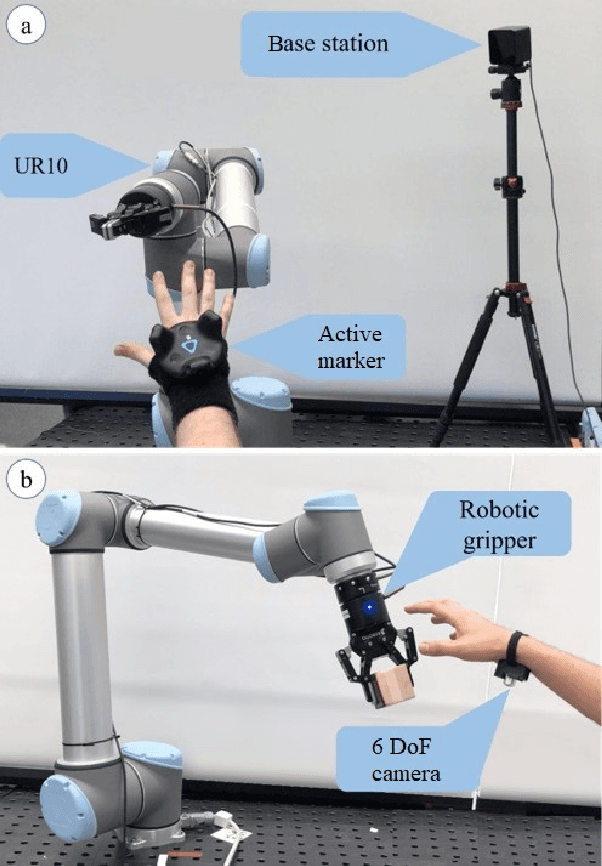

CoHaptics: Development of Human-Robot Collaborative System with Forearm-worn Haptic Display to Increase Safety in Future Factories

Sep 13, 2021

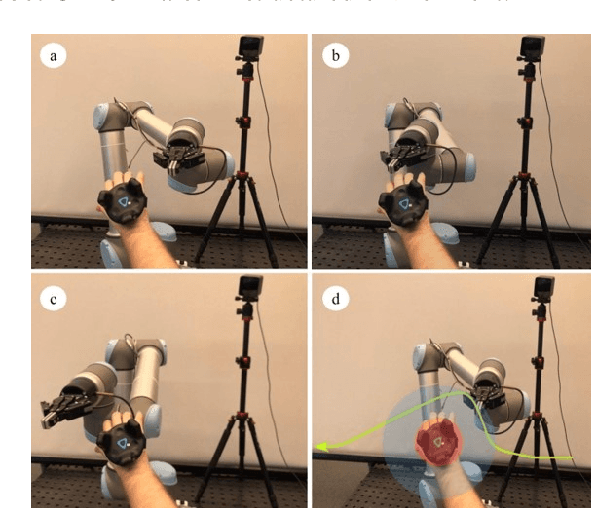

Abstract:Complex tasks require human collaboration since robots do not have enough dexterity. However, robots are still used as instruments and not as collaborative systems. We introduce a framework to ensure safety in a human-robot collaborative environment. The system is composed of a haptic feedback display, low-cost wearable mocap, and a new collision avoidance algorithm based on Artificial Potential Fields (APF). The wearable optical motion capture system enables tracking the human hand position with high accuracy and low latency over large working areas. This study evaluates whether haptic feedback improves safety in human-robot collaboration. Three experiments were carried out to evaluate the performance of the proposed system. The first evaluated human responses to the haptic device during interaction with the Robot Tool Center Point (TCP). The second analyzed human-robot behavior during an imminent collision. The third evaluated the system in a collaborative activity in a shared working environment. The study showed that when haptic feedback was included in the control loop, the safe distance (minimum robot-obstacle distance) increased by 4.1 cm from 12.39 cm to 16.55 cm, and the robot's path, when the collision avoidance algorithm was activated, was reduced by 81%.

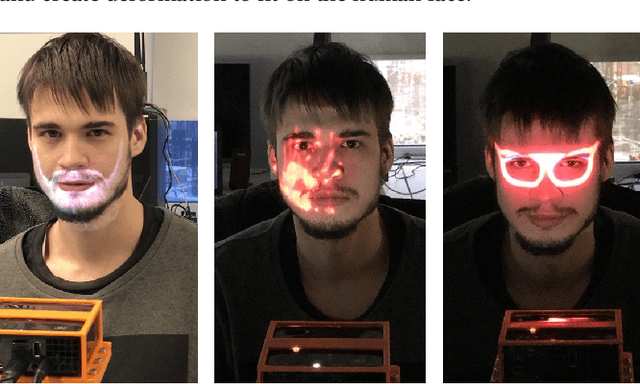

MaskBot: Real-time Robotic Projection Mapping with Head Motion Tracking

Nov 07, 2020

Abstract:Projection mapping systems for the human face are limited by latency and by the movement of the users. The projection area is restricted by the position of the projectors and the cameras. We introduce MaskBot, a real-time projection mapping system operated by a 6 Degrees of Freedom (DoF) collaborative robot. The collaborative robot positions the projector and camera normal to the user's face to increase the projection area and to reduce the latency of the system. A webcam is used to detect the face and to sense the robot-user distance in order to modify the projection size and orientation. MaskBot projects different images on the face of the user, such as face modifications, make-up, and logos. In contrast to existing methods, the presented system is the first to introduce robotic projection mapping. One of the prospective applications is to acquire a dataset of adversarial images to challenge face detection DNN systems, such as Face ID.

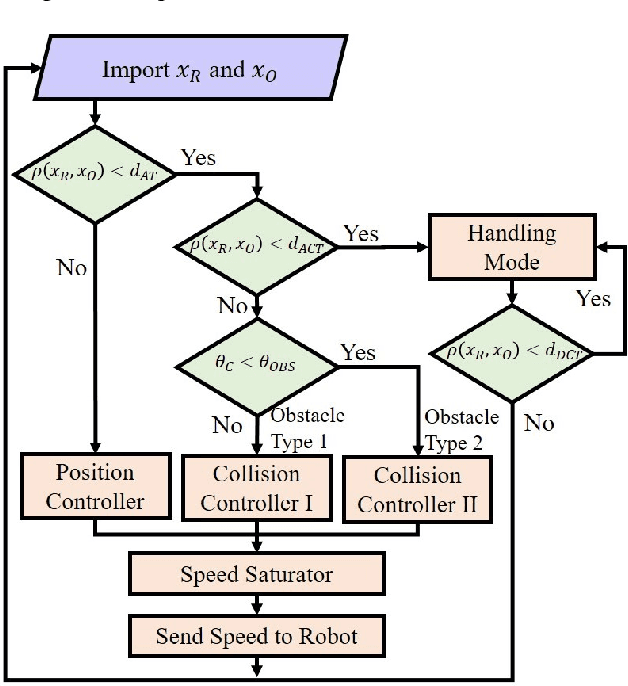

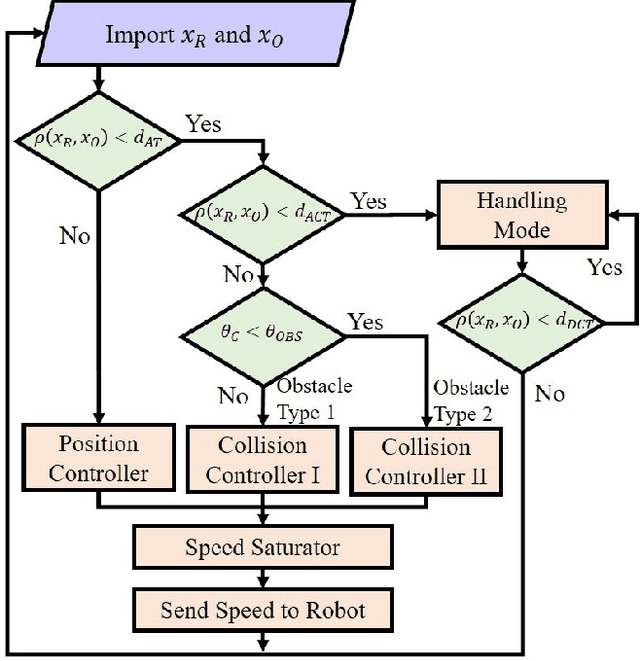

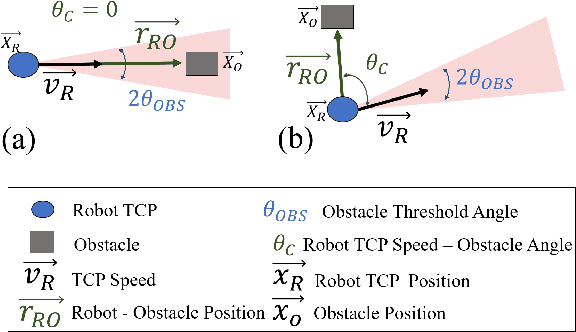

CobotGear: Interaction with Collaborative Robots using Wearable Optical Motion Capturing Systems

Jul 20, 2020

Abstract:In industrial applications, complex tasks require human collaboration since the robot doesn't have enough dexterity. However, robots are still implemented as tools and not as collaborative intelligent systems. To ensure safety in human-robot collaboration, we introduce a system that integrates low-cost wearable mocap and an improved collision avoidance algorithm based on artificial potential fields. Wearable optical motion capture allows tracking the human hand position with high accuracy and low latency over large working areas. To increase the efficiency of the proposed algorithm, two obstacle types are distinguished according to their collision probability. A preliminary experiment was performed to analyze the algorithm's behavior and to select the best values for the obstacle's threshold angle $\theta_{OBS}$ and for the avoidance threshold distance $d_{AT}$. The second experiment was carried out to evaluate the system performance with $d_{AT}$ = 0.2 m and $\theta_{OBS}$ = 45 degrees. The third experiment evaluated the system in a real collaborative task. The results demonstrate the robust performance of the robotic arm in generating smooth collision-free trajectories. The proposed technology will allow consumer robots to safely collaborate with humans in cluttered environments, e.g., factories, kitchens, living rooms, and restaurants.
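To illustrate how an avoidance threshold distance such as $d_{AT}$ can enter an artificial potential field, the sketch below uses the classical repulsive-field formulation, not the improved algorithm from the paper, which the abstract does not specify. The function name `repulsive_force`, the `gain` parameter, and the example coordinates are assumptions; the threshold angle $\theta_{OBS}$ and the two obstacle types are not modeled.

```python
import math

D_AT = 0.2  # avoidance threshold distance in metres, the value quoted in the abstract


def repulsive_force(tcp, obstacle, d_at=D_AT, gain=1.0):
    """Classical APF repulsive force pushing the TCP away from an obstacle.

    Returns a zero vector when the obstacle is at or beyond d_at. The gain
    is a hypothetical tuning constant, not a value from the paper.
    """
    diff = [t - o for t, o in zip(tcp, obstacle)]
    dist = math.sqrt(sum(c * c for c in diff))
    # Outside the threshold there is no repulsion; at zero distance the
    # direction is undefined, so this sketch also returns a zero vector.
    if dist >= d_at or dist == 0.0:
        return [0.0] * len(diff)
    # Negative gradient of U = 0.5 * gain * (1/dist - 1/d_at)^2.
    magnitude = gain * (1.0 / dist - 1.0 / d_at) / dist ** 2
    return [magnitude * c / dist for c in diff]


# Hypothetical positions in metres: the hand is 0.1 m from the TCP along x.
force = repulsive_force(tcp=[0.1, 0.0, 0.0], obstacle=[0.0, 0.0, 0.0])
print(force)
```

The force grows rapidly as the distance shrinks and vanishes at the threshold, so the robot is only deflected once the tracked hand comes within $d_{AT}$.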
