Jonathan Hurst

Dynamic Bipedal Maneuvers through Sim-to-Real Reinforcement Learning

Jul 16, 2022

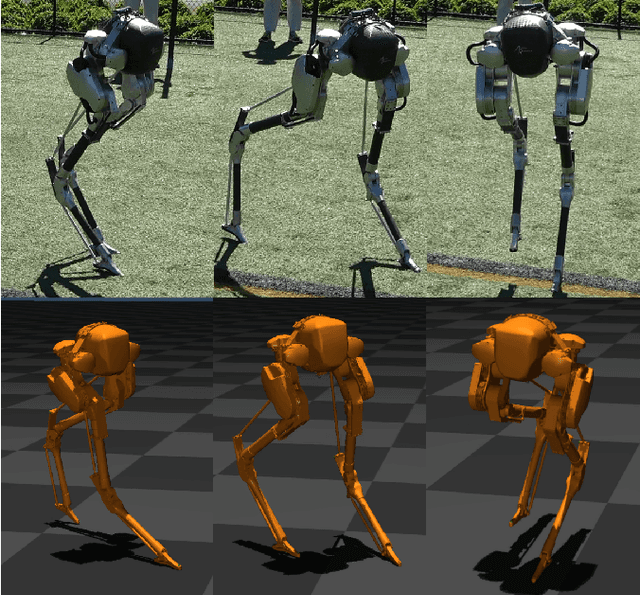

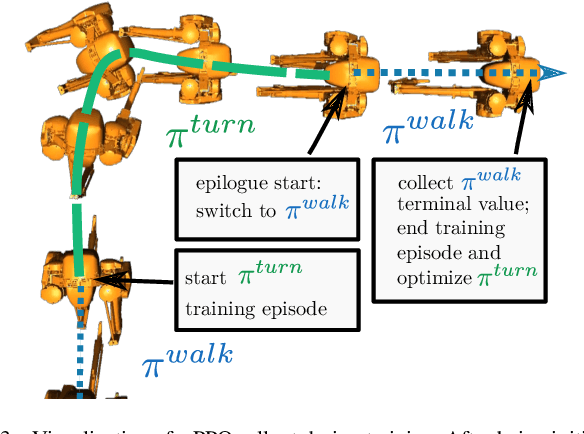

Abstract: For legged robots to match the athletic capabilities of humans and animals, they must not only produce robust periodic walking and running, but also seamlessly switch between nominal locomotion gaits and more specialized transient maneuvers. Despite recent advancements in the control of bipedal robots, there has been little focus on producing highly dynamic behaviors. Recent work utilizing reinforcement learning to produce policies for control of legged robots has demonstrated success in producing robust walking behaviors. However, these learned policies have difficulty expressing a multitude of different behaviors on a single network. Inspired by conventional optimization-based control techniques for legged robots, this work applies a recurrent policy to execute four-step, 90-degree turns trained using reference data generated from optimized single rigid body model trajectories. We present a novel training framework using epilogue terminal rewards for learning specific behaviors from pre-computed trajectory data and demonstrate a successful transfer to hardware on the bipedal robot Cassie.

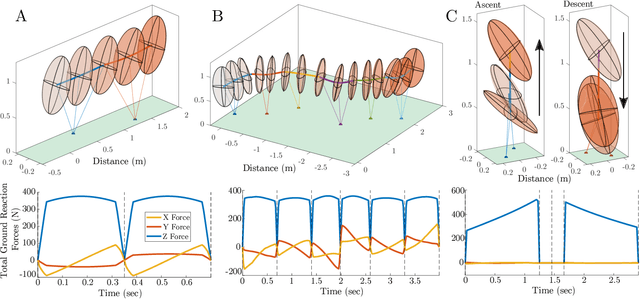

Optimizing Bipedal Maneuvers of Single Rigid-Body Models for Reinforcement Learning

Jul 09, 2022

Abstract: In this work, we propose a method to generate reduced-order model reference trajectories for general classes of highly dynamic maneuvers for bipedal robots for use in sim-to-real reinforcement learning. Our approach is to utilize a single rigid-body model (SRBM) to optimize libraries of trajectories offline to be used as expert references in the reward function of a learned policy. This method translates the model's dynamically rich rotational and translational behavior to a full-order robot model and successfully transfers to real hardware. The SRBM's simplicity allows for fast iteration and refinement of behaviors, while the robustness of learning-based controllers allows for highly dynamic motions to be transferred to hardware. Within this work we introduce a set of transferability constraints that amend the SRBM dynamics to actual bipedal robot hardware, our framework for creating optimal trajectories for a variety of highly dynamic maneuvers, as well as our approach to integrating reference trajectories for a high-speed running reinforcement learning policy. We validate our methods on the bipedal robot Cassie, on which we successfully demonstrated highly dynamic grounded running gaits up to 3.0 m/s.
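
For readers unfamiliar with the single rigid-body model, a common textbook form of its dynamics is sketched below. This is an assumption for illustration, not the formulation stated in the abstract: $m$ is the body mass, $p$ the center-of-mass position, $g$ the gravity vector, $I$ the world-frame inertia, $\omega$ the angular velocity, and $f_i$ the ground reaction force applied at foot position $r_i$.

$$
m\,\ddot{p} = \sum_i f_i + m\,g, \qquad \frac{d}{dt}\left(I\,\omega\right) = \sum_i \left(r_i - p\right) \times f_i
$$

The legs are treated as massless, so the only inputs are the foot forces and contact locations, which is what keeps trajectory optimization over this model fast.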

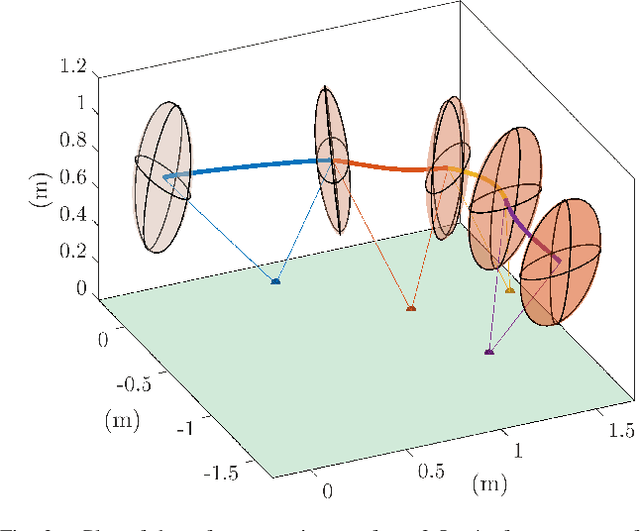

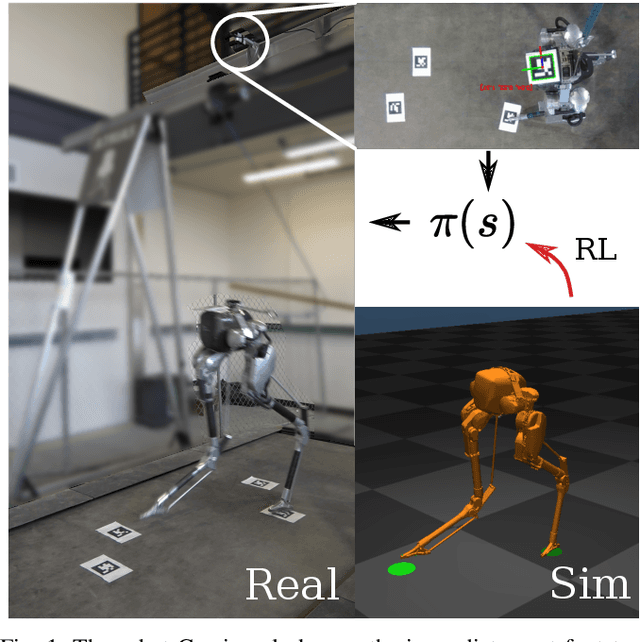

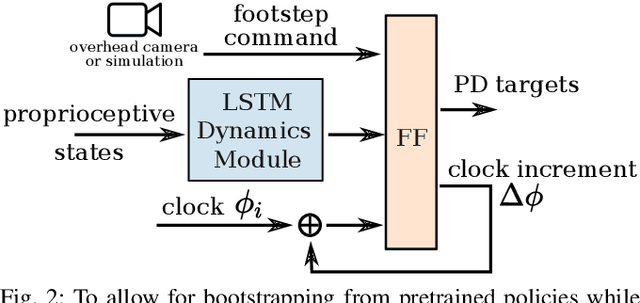

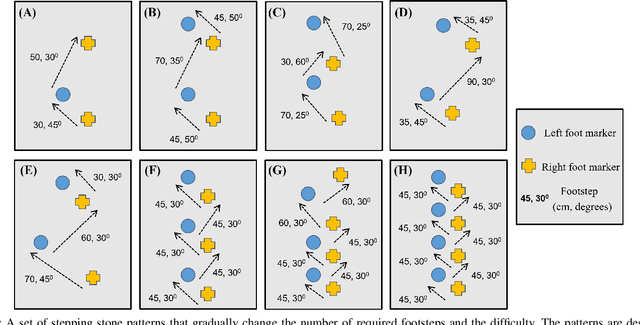

Learning Dynamic Bipedal Walking Across Stepping Stones

May 03, 2022

Abstract: In this work, we propose a learning approach for 3D dynamic bipedal walking when footsteps are constrained to stepping stones. While recent work has shown progress on this problem, real-world demonstrations have been limited to relatively simple open-loop, perception-free scenarios. Our main contribution is a more advanced learning approach that enables real-world demonstrations, using the Cassie robot, of closed-loop dynamic walking over moderately difficult stepping-stone patterns. Our approach first uses reinforcement learning (RL) in simulation to train a controller that maps footstep commands onto joint actions without any reference motion information. We then learn a model of that controller's capabilities, which enables prediction of feasible footsteps given the robot's current dynamic state. The resulting controller and model are then integrated with a real-time overhead camera system for detecting stepping stone locations. For evaluation, we develop a benchmark set of stepping stone patterns, which are used to test performance in both simulation and the real world. Overall, we demonstrate that sim-to-real learning is extremely promising for enabling dynamic locomotion over stepping stones. We also identify challenges remaining that motivate important future research directions.

Sim-to-Real Learning for Bipedal Locomotion Under Unsensed Dynamic Loads

Apr 09, 2022

Abstract: Recent work on sim-to-real learning for bipedal locomotion has demonstrated new levels of robustness and agility over a variety of terrains. However, that work, like most prior bipedal locomotion work, has not considered locomotion under a variety of external loads that can significantly influence the overall system dynamics. In many applications, robots will need to maintain robust locomotion under a wide range of potential dynamic loads, such as pulling a cart or carrying a large container of sloshing liquid, ideally without requiring additional load-sensing capabilities. In this work, we explore the capabilities of reinforcement learning (RL) and sim-to-real transfer for bipedal locomotion under dynamic loads using only proprioceptive feedback. We show that prior RL policies trained for unloaded locomotion fail for some loads and that simply training in the context of loads is enough to result in successful and improved policies. We also compare training specialized policies for each load versus a single policy for all considered loads and analyze how the resulting gaits change to accommodate different loads. Finally, we demonstrate sim-to-real transfer, which is successful but shows a wider sim-to-real gap than prior unloaded work, which points to interesting future research.

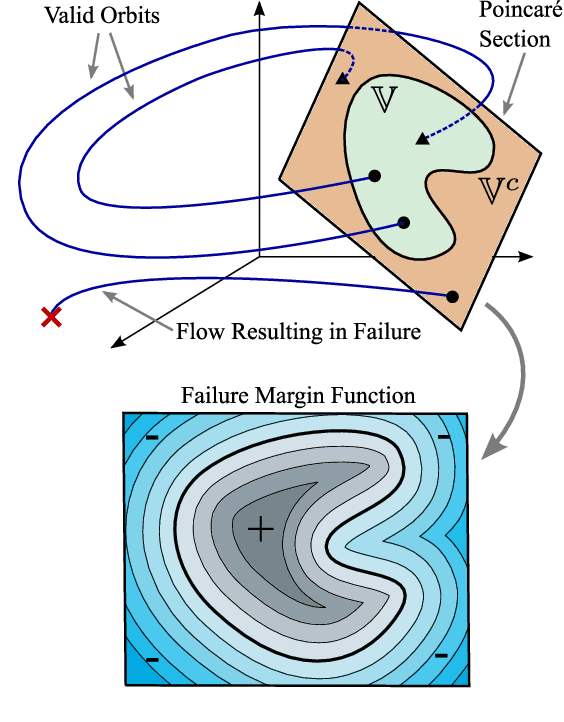

Motion Planning for Agile Legged Locomotion using Failure Margin Constraints

Mar 28, 2022

Abstract: The complex dynamics of agile robotic legged locomotion require motion planning to intelligently adjust footstep locations. Often, bipedal footstep and motion planning use mathematically simple models such as the linear inverted pendulum, instead of dynamically-rich models that do not have closed-form solutions. We propose a real-time optimization method to plan for dynamical models that do not have closed-form solutions and experience irrecoverable failure. Our method uses a data-driven approximation of the step-to-step dynamics and of a failure margin function. This failure margin function is an oriented distance function in state-action space that describes the signed distance to success or failure. The motion planning problem is formed as a nonlinear program with constraints that enforce the approximated forward dynamics and the validity of state-action pairs. For illustration, this method is applied to create a planner for an actuated spring-loaded inverted pendulum model. In an ablation study, the failure margin constraints decreased the number of invalid solutions by between 24 and 47 percentage points across different objectives and horizon lengths. While we demonstrate the method on a canonical model of locomotion, we also discuss how this can be applied to data-driven models and full-order robot models.
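
To make the idea of a signed failure margin concrete, here is a minimal sketch under strong assumptions. It is not the paper's approximation: it uses a crude nearest-neighbor rule over labeled state-action samples, and the names `failure_margin`, `is_valid`, and `min_margin` are hypothetical.

```python
import math


def failure_margin(query, samples):
    """Crude signed distance from `query` to the success/failure boundary.

    `samples` is a list of (point, succeeded) pairs in state-action space.
    The sign comes from the nearest sample's label; the magnitude is the
    distance to the nearest sample carrying the opposite label.
    (Illustrative nearest-neighbor rule, assumed for this sketch.)
    """
    _, nearest_ok = min(samples, key=lambda s: math.dist(query, s[0]))
    opposite = [point for point, ok in samples if ok != nearest_ok]
    if not opposite:
        raise ValueError("samples must contain both successes and failures")
    distance = min(math.dist(query, point) for point in opposite)
    return distance if nearest_ok else -distance


def is_valid(query, samples, min_margin=0.0):
    """Constraint a planner could enforce: keep the margin above a threshold."""
    return failure_margin(query, samples) >= min_margin
```

In a planner, a check like `is_valid` would appear as an inequality constraint on every state-action pair along the horizon, so that solutions stay on the success side of the boundary with some buffer.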

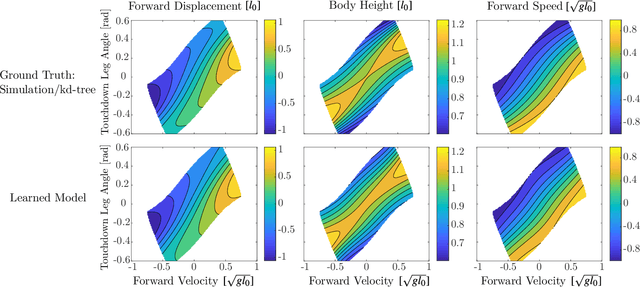

Sim-to-Real Learning of Footstep-Constrained Bipedal Dynamic Walking

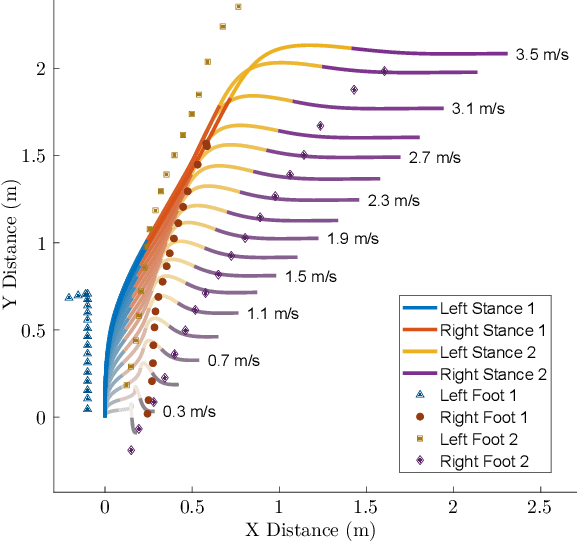

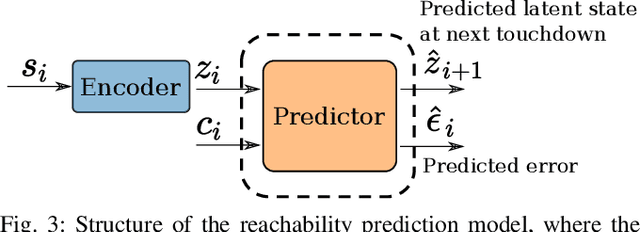

Mar 15, 2022

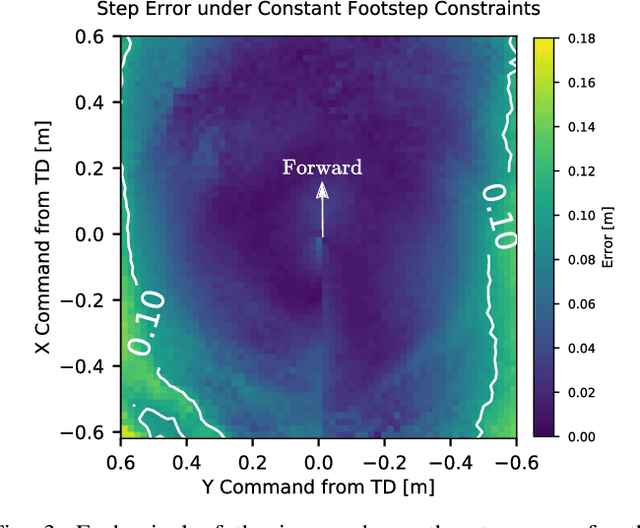

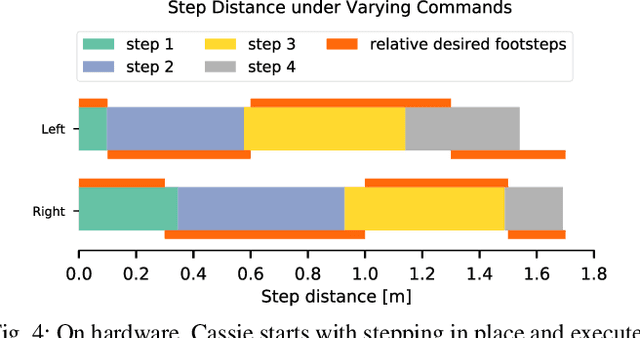

Abstract: Recently, work on reinforcement learning (RL) for bipedal robots has successfully learned controllers for a variety of dynamic gaits with robust sim-to-real demonstrations. In order to maintain balance, the learned controllers have full freedom of where to place the feet, resulting in highly robust gaits. In the real world, however, the environment will often impose constraints on the feasible footstep locations, typically identified by perception systems. Unfortunately, most demonstrated RL controllers on bipedal robots do not allow for specifying and responding to such constraints. This missing control interface greatly limits the real-world application of current RL controllers. In this paper, we aim to maintain the robust and dynamic nature of learned gaits while also respecting footstep constraints imposed externally. We develop an RL formulation for training dynamic gait controllers that can respond to specified touchdown locations. We then successfully demonstrate simulation and sim-to-real performance on the bipedal robot Cassie. In addition, we use supervised learning to induce a transition model for accurately predicting the next touchdown locations that the controller can achieve given the robot's proprioceptive observations. This model paves the way for integrating the learned controller into a full-order robot locomotion planner that robustly satisfies both balance and environmental constraints.

Ankle Torque During Mid-Stance Does Not Lower Energy Requirements of Steady Gaits

Nov 29, 2021

Abstract: In this paper, we investigate whether applying ankle torques during mid-stance can be a more effective way to reduce energetic cost of locomotion than actuating leg length alone. Ankles are useful in human gaits for many reasons including static balancing. In this work, we specifically avoid the heel-strike and toe-off benefits to investigate whether the progression of the center of pressure from heel-to-toe during mid-stance, or some other approach, is beneficial in and of itself. We use an "Ankle Actuated Spring Loaded Inverted Pendulum" model to simulate the shifting center of pressure dynamics, and trajectory optimization is applied to find limit cycles that minimize cost of transport. The results show that, for the vast majority of gaits, ankle torques do not affect cost of transport. Ankles reduce the cost of transport during a narrow band of gaits at the transition from grounded running to aerial running. This suggests that applying ankle torque during mid-stance of a steady gait is not a directly beneficial strategy, but is most likely a path between beneficial heel-strikes and toe-offs.
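
For reference, cost of transport is conventionally defined as the dimensionless ratio below. The abstract does not state which energy measure the authors use, so the symbols here are assumptions: $E$ is the energy expended over one gait cycle, $m$ the total mass, $g$ gravitational acceleration, and $d$ the distance traveled in that cycle.

$$
\mathrm{CoT} = \frac{E}{m\,g\,d}
$$

Because the ratio is normalized by weight and distance, it allows gaits at different speeds and stride lengths to be compared on a single scale.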

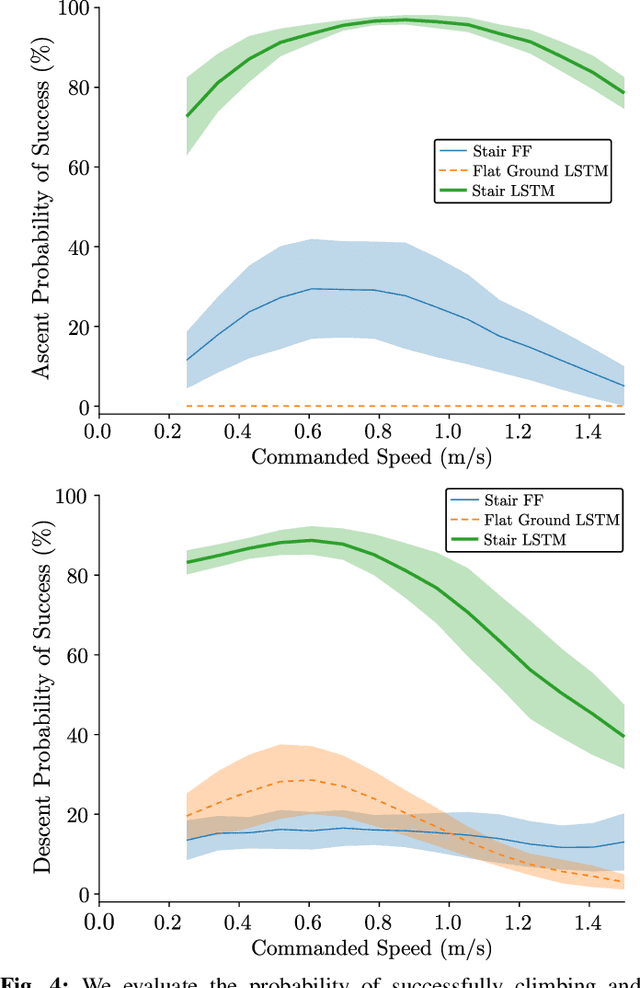

Blind Bipedal Stair Traversal via Sim-to-Real Reinforcement Learning

May 18, 2021

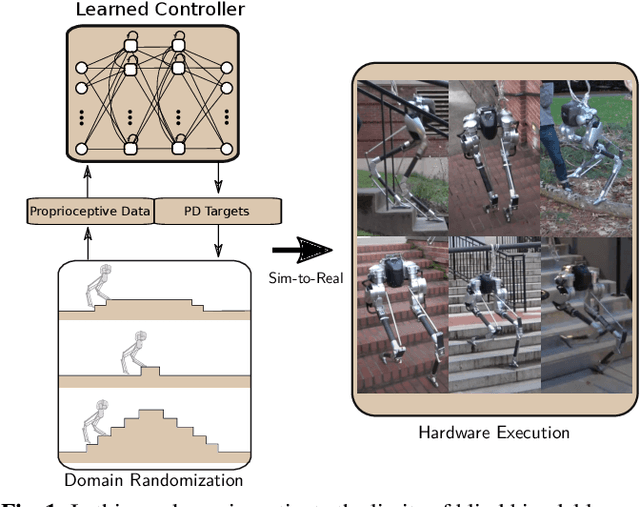

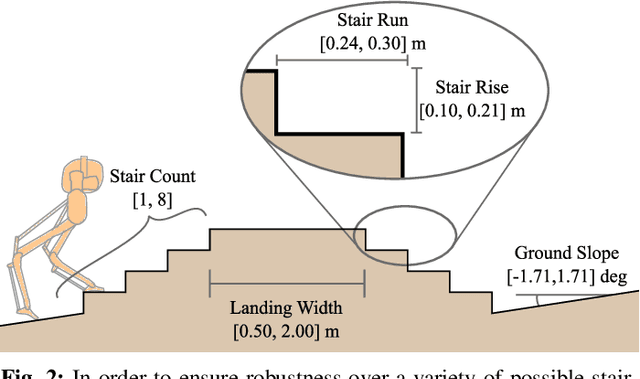

Abstract: Accurate and precise terrain estimation is a difficult problem for robot locomotion in real-world environments. Thus, it is useful to have systems that do not depend on accurate estimation to the point of fragility. In this paper, we explore the limits of such an approach by investigating the problem of traversing stair-like terrain without any external perception or terrain models on a bipedal robot. For such blind bipedal platforms, the problem appears difficult (even for humans) due to the surprise elevation changes. Our main contribution is to show that sim-to-real reinforcement learning (RL) can achieve robust locomotion over stair-like terrain on the bipedal robot Cassie using only proprioceptive feedback. Importantly, this only requires modifying an existing flat-terrain training RL framework to include stair-like terrain randomization, without any changes in reward function. To our knowledge, this is the first controller for a bipedal, human-scale robot capable of reliably traversing a variety of real-world stairs and other stair-like disturbances using only proprioception.

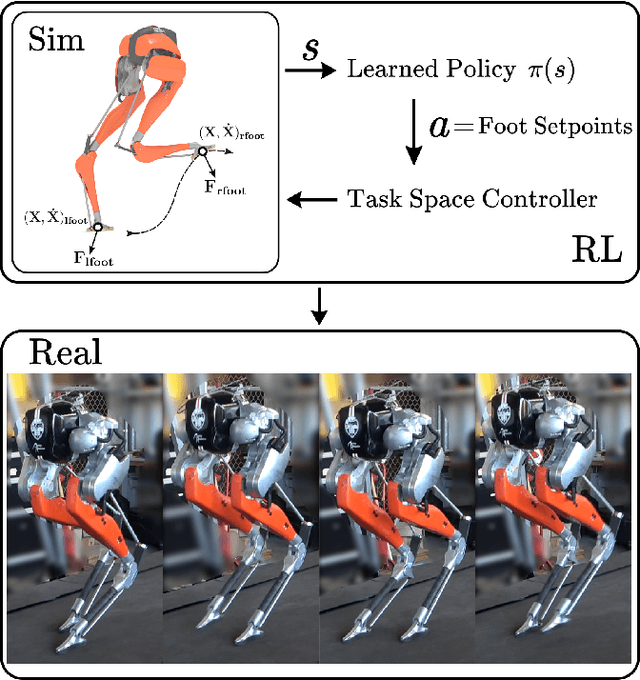

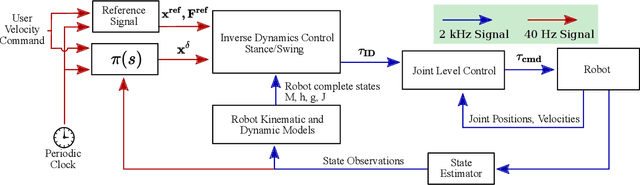

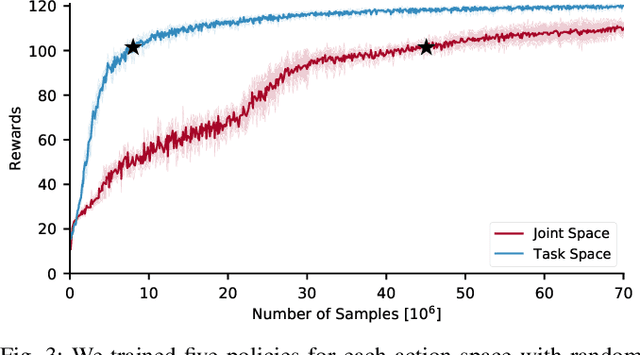

Learning Task Space Actions for Bipedal Locomotion

Nov 09, 2020

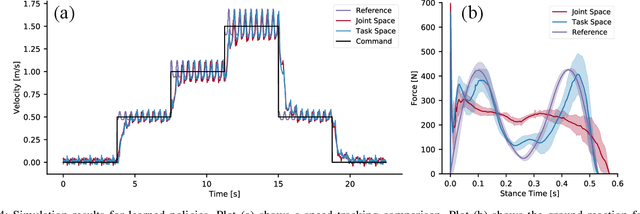

Abstract: Recent work has demonstrated the success of reinforcement learning (RL) for training bipedal locomotion policies for real robots. This prior work, however, has focused on learning joint-coordination controllers based on an objective of following joint trajectories produced by already available controllers. As such, it is difficult to train these approaches to achieve higher-level goals of legged locomotion, such as simply specifying the desired end-effector foot movement or ground reaction forces. In this work, we propose an approach for integrating knowledge of the robot system into RL to allow for learning at the level of task space actions in terms of feet setpoints. In particular, we integrate learning a task space policy with a model-based inverse dynamics controller, which translates task space actions into joint-level controls. With this natural action space for learning locomotion, the approach is more sample efficient and produces desired task space dynamics compared to learning purely joint space actions. We demonstrate the approach in simulation and also show that the learned policies are able to transfer to the real bipedal robot Cassie. This result encourages further research towards incorporating bipedal control techniques into the structure of the learning process to enable dynamic behaviors.

Sim-to-Real Learning of All Common Bipedal Gaits via Periodic Reward Composition

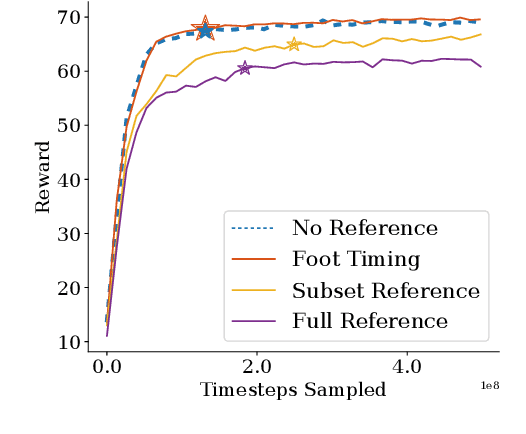

Nov 02, 2020

Abstract: We study the problem of realizing the full spectrum of bipedal locomotion on a real robot with sim-to-real reinforcement learning (RL). A key challenge of learning legged locomotion is describing different gaits, via reward functions, in a way that is intuitive for the designer and specific enough to reliably learn the gait across different initial random seeds or hyperparameters. A common approach is to use reference motions (e.g. trajectories of joint positions) to guide learning. However, finding high-quality reference motions can be difficult and the trajectories themselves narrowly constrain the space of learned motion. At the other extreme, reference-free reward functions are often underspecified (e.g. move forward) leading to massive variance in policy behavior, or are the product of significant reward-shaping via trial-and-error, making them exclusive to specific gaits. In this work, we propose a reward-specification framework based on composing simple probabilistic periodic costs on basic forces and velocities. We instantiate this framework to define a parametric reward function with intuitive settings for all common bipedal gaits - standing, walking, hopping, running, and skipping. Using this function we demonstrate successful sim-to-real transfer of the learned gaits to the bipedal robot Cassie, as well as a generic policy that can transition between all of the two-beat gaits.
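
The idea of composing periodic costs on foot forces and velocities can be sketched as follows. This is a deterministic simplification, not the paper's probabilistic formulation: the phase windows are hard-edged, the inputs are assumed to be normalized magnitudes, and the names `in_swing`, `periodic_reward`, `swing_ratio`, and `offsets` are hypothetical.

```python
def in_swing(phi, swing_ratio, offset):
    """True while a foot, whose cycle is shifted by `offset`, is in swing.

    `phi` is the gait phase in [0, 1); the swing window occupies the first
    `swing_ratio` fraction of that foot's shifted cycle.
    """
    return ((phi + offset) % 1.0) < swing_ratio


def periodic_reward(phi, foot_forces, foot_speeds,
                    swing_ratio=0.5, offsets=(0.0, 0.5)):
    """Penalize foot force during swing and foot speed during stance.

    `foot_forces` and `foot_speeds` hold one normalized magnitude per foot.
    """
    reward = 0.0
    for force, speed, offset in zip(foot_forces, foot_speeds, offsets):
        if in_swing(phi, swing_ratio, offset):
            reward -= force  # a swinging foot should carry no load
        else:
            reward -= speed  # a stance foot should not slip
    return reward
```

Under these assumptions, different gaits come from the parameters rather than from new reward terms: half-cycle offsets such as `(0.0, 0.5)` alternate the feet, while equal offsets such as `(0.0, 0.0)` put both feet in swing together, as in hopping.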
