Taylor Apgar

Learning Task Space Actions for Bipedal Locomotion

Nov 09, 2020

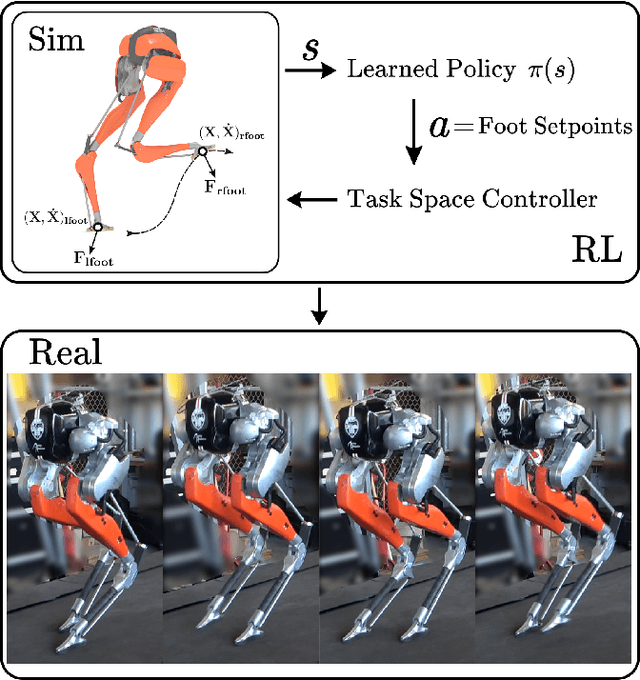

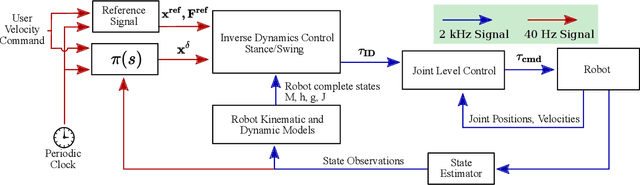

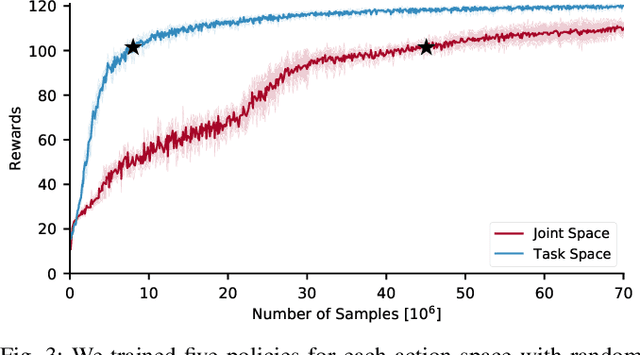

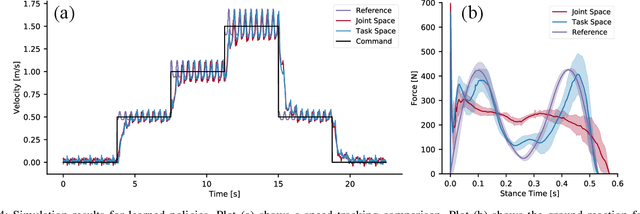

Abstract: Recent work has demonstrated the success of reinforcement learning (RL) for training bipedal locomotion policies for real robots. This prior work, however, has focused on learning joint-coordination controllers whose objective is to follow joint trajectories produced by already available controllers. As such, it is difficult to train these approaches to achieve higher-level goals of legged locomotion, such as simply specifying the desired end-effector foot movement or ground reaction forces. In this work, we propose an approach for integrating knowledge of the robot system into RL to allow for learning at the level of task space actions in terms of feet setpoints. In particular, we integrate learning a task space policy with a model-based inverse dynamics controller, which translates task space actions into joint-level controls. With this natural action space for learning locomotion, the approach is more sample efficient than learning purely joint space actions and produces the desired task space dynamics. We demonstrate the approach in simulation and also show that the learned policies are able to transfer to the real bipedal robot Cassie. This result encourages further research towards incorporating bipedal control techniques into the structure of the learning process to enable dynamic behaviors.
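As a rough illustration of what translating a task space action into joint-level controls can look like, the sketch below maps a foot setpoint to joint torques for a planar two-link leg. It uses a plain Jacobian-transpose proportional mapping, which is a much simpler stand-in for the model-based inverse dynamics controller described in the abstract and is not the authors' method. The link lengths, the gain `kp`, and all function names are assumptions made for this example and do not reflect Cassie's geometry.

```python
import math

# Hypothetical link lengths in meters for a planar two-link leg (not Cassie's).
L1, L2 = 0.5, 0.5


def foot_position(q):
    """Forward kinematics: hip at the origin, q = (hip angle, knee angle) in radians."""
    q1, q2 = q
    x = L1 * math.cos(q1) + L2 * math.cos(q1 + q2)
    y = L1 * math.sin(q1) + L2 * math.sin(q1 + q2)
    return x, y


def foot_jacobian(q):
    """2x2 Jacobian of the foot position with respect to the joint angles."""
    q1, q2 = q
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [
        [-L1 * s1 - L2 * s12, -L2 * s12],
        [L1 * c1 + L2 * c12, L2 * c12],
    ]


def task_space_to_torques(q, foot_setpoint, kp=200.0):
    """Map a foot setpoint (the task space action) to joint torques.

    Assumed simplification: a virtual spring pulls the foot toward the
    setpoint, and the resulting force F is mapped through tau = J^T F.
    """
    x, y = foot_position(q)
    fx = kp * (foot_setpoint[0] - x)
    fy = kp * (foot_setpoint[1] - y)
    jac = foot_jacobian(q)
    tau_hip = jac[0][0] * fx + jac[1][0] * fy
    tau_knee = jac[0][1] * fx + jac[1][1] * fy
    return tau_hip, tau_knee


if __name__ == "__main__":
    # A learned policy would output the setpoint; here it is hard-coded.
    print(task_space_to_torques(q=(0.0, 0.0), foot_setpoint=(1.0, 0.1)))
```

In a setup like the one the abstract describes, the policy would output the foot setpoints and a lower-level controller would perform a mapping of this general kind at every control step, so that the learner never has to discover joint coordination on its own.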
