John Francis

MIMDE: Exploring the Use of Synthetic vs Human Data for Evaluating Multi-Insight Multi-Document Extraction Tasks

Nov 29, 2024

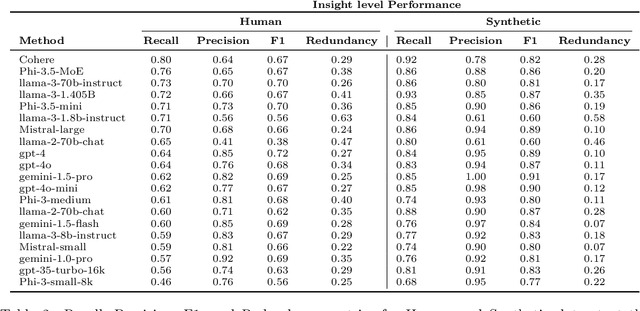

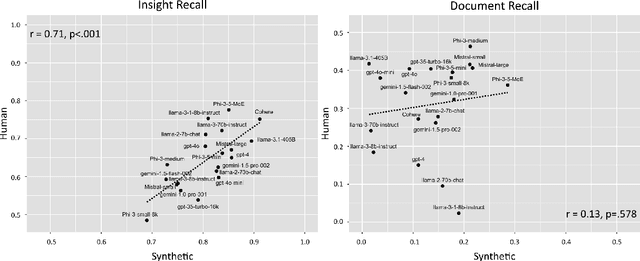

Abstract: Large language models (LLMs) have demonstrated remarkable capabilities in text analysis tasks, yet their evaluation on complex, real-world applications remains challenging. We define a set of tasks, Multi-Insight Multi-Document Extraction (MIMDE) tasks, which involve extracting an optimal set of insights from a document corpus and mapping these insights back to their source documents. This task is fundamental to many practical applications, from analyzing survey responses to processing medical records, where identifying and tracing key insights across documents is crucial. We develop an evaluation framework for MIMDE and introduce a novel set of complementary human and synthetic datasets to examine the potential of synthetic data for LLM evaluation. After establishing optimal metrics for comparing extracted insights, we benchmark 20 state-of-the-art LLMs on both datasets. Our analysis reveals a strong correlation (0.71) between the ability of LLMs to extract insights on our two datasets, but synthetic data fails to capture the complexity of document-level analysis. These findings offer crucial guidance for the use of synthetic data in evaluating text analysis systems, highlighting both its potential and limitations.

Exploring selective image matching methods for zero-shot and few-sample unsupervised domain adaptation of urban canopy prediction

Apr 16, 2024

Abstract: We explore simple methods for adapting a trained multi-task UNet, which predicts canopy cover and height, to a new geographic setting using remotely sensed data, without the need to train a domain-adaptive classifier or perform extensive fine-tuning. Extending previous research, we followed a selective alignment process to identify similar images in the two geographical domains and then tested an array of data-based unsupervised domain adaptation approaches in a zero-shot setting as well as with a small amount of fine-tuning. We find that the selectively aligned data-based image matching methods produce promising results in a zero-shot setting, and even more so with a small amount of fine-tuning. These methods outperform both an untransformed baseline and a popular data-based image-to-image translation model. The best performing methods were pixel distribution adaptation and Fourier domain adaptation on the canopy cover and height tasks, respectively.

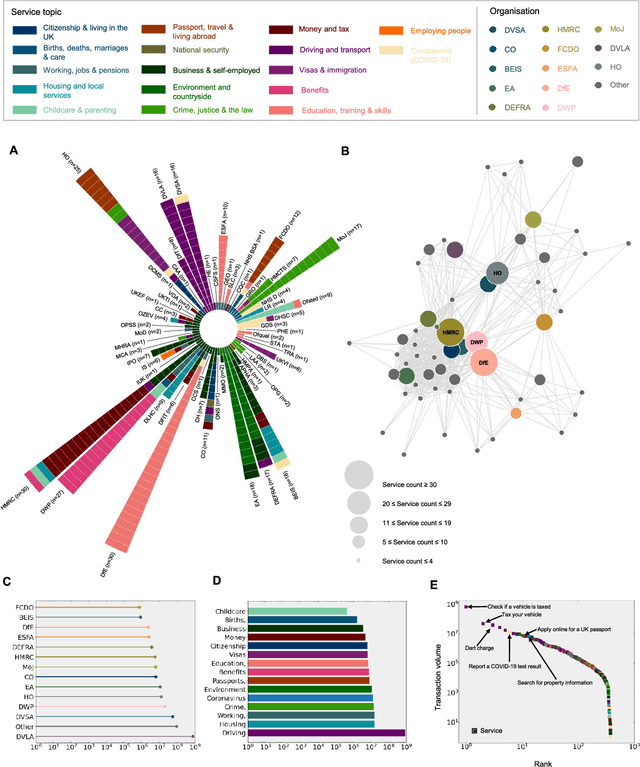

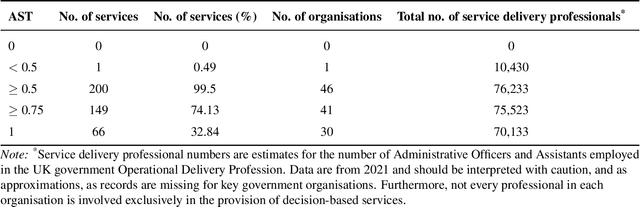

AI for bureaucratic productivity: Measuring the potential of AI to help automate 143 million UK government transactions

Mar 18, 2024

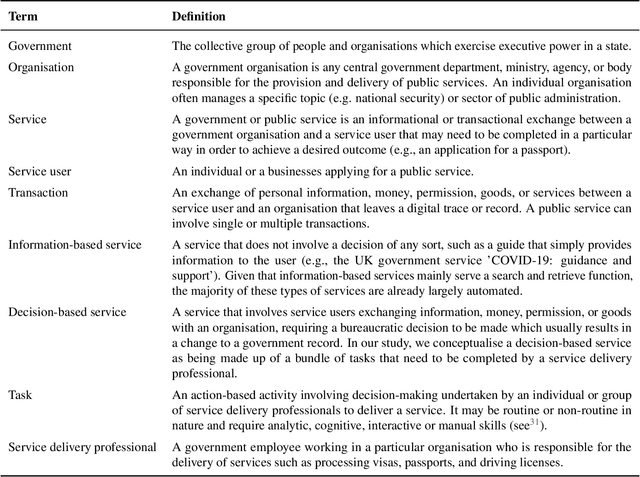

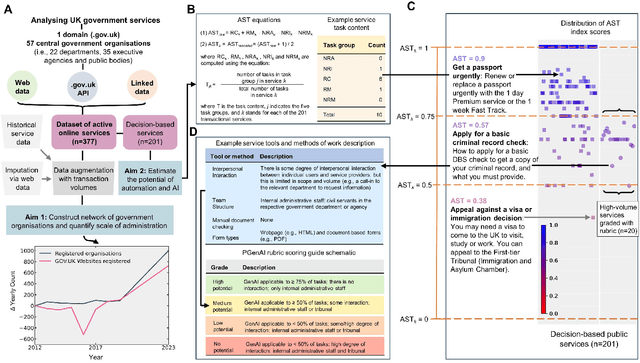

Abstract: There is currently considerable excitement within government about the potential of artificial intelligence to improve public service productivity through the automation of complex but repetitive bureaucratic tasks, freeing up the time of skilled staff. Here, we explore the size of this opportunity by mapping out the scale of citizen-facing bureaucratic decision-making procedures within UK central government and measuring their potential for AI-driven automation. We estimate that UK central government conducts approximately one billion citizen-facing transactions per year in the provision of around 400 services, of which approximately 143 million are complex repetitive transactions. We estimate that 84% of these complex transactions are highly automatable, representing a huge potential opportunity: saving even an average of just one minute per complex transaction would save the equivalent of approximately 1,200 person-years of work every year. We also develop a model to estimate the volume of transactions a government service undertakes, providing a way for government to avoid conducting time-consuming transaction volume measurements. Finally, we find that there is high turnover in the types of services government provides, meaning that automation efforts should focus on general procedures rather than on services themselves, which are likely to evolve over time. Overall, our work presents a novel perspective on the structure and functioning of modern government, and how it might evolve in the age of artificial intelligence.
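The person-year figure above can be sanity-checked with a short back-of-envelope calculation. The sketch below is illustrative only: the transaction count and the one-minute saving come from the abstract, while the length of a working year (260 days of 7.5 hours) is an assumption, not a value stated in the abstract, and the function name is hypothetical.

```python
# Back-of-envelope check of the person-year estimate quoted in the abstract.

COMPLEX_TRANSACTIONS_PER_YEAR = 143_000_000  # from the abstract
MINUTES_SAVED_PER_TRANSACTION = 1            # from the abstract

# ASSUMPTION (not stated in the abstract): one person-year of work is
# roughly 260 working days of 7.5 hours each.
HOURS_PER_PERSON_YEAR = 260 * 7.5


def person_years_saved(transactions, minutes_each, hours_per_person_year):
    """Convert a per-transaction time saving into person-years of work."""
    hours_saved = transactions * minutes_each / 60
    return hours_saved / hours_per_person_year


estimate = person_years_saved(
    COMPLEX_TRANSACTIONS_PER_YEAR,
    MINUTES_SAVED_PER_TRANSACTION,
    HOURS_PER_PERSON_YEAR,
)
print(f"Estimated saving: about {estimate:,.0f} person-years per year")
```

Under this assumed working year the result lands close to the abstract's figure of approximately 1,200 person-years; a different definition of a person-year would shift the number proportionally.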

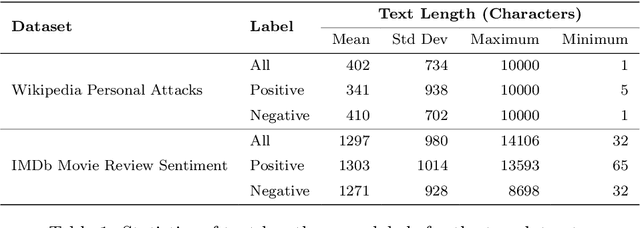

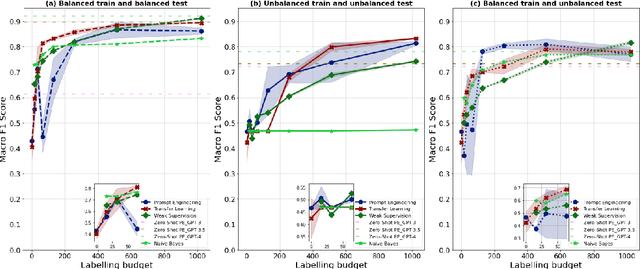

Cheap Learning: Maximising Performance of Language Models for Social Data Science Using Minimal Data

Jan 22, 2024

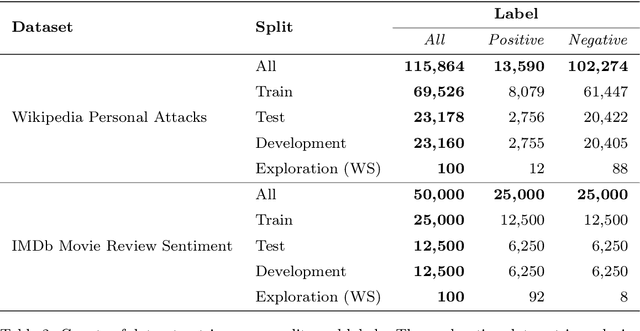

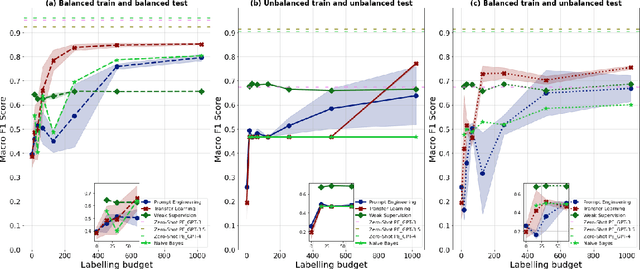

Abstract: The field of machine learning has recently made significant progress in reducing the requirements for labelled training data when building new models. These 'cheaper' learning techniques hold significant potential for the social sciences, where development of large labelled training datasets is often a significant practical impediment to the use of machine learning for analytical tasks. In this article we review three 'cheap' techniques that have been developed in recent years: weak supervision, transfer learning and prompt engineering. For the latter, we also review the particular case of zero-shot prompting of large language models. For each technique we provide a guide to how it works and demonstrate its application across six different realistic social science applications (two different tasks paired with three different dataset makeups). We show good performance for all techniques, and in particular we demonstrate how prompting of large language models can achieve high accuracy at very low cost. Our results are accompanied by a code repository to make it easy for others to duplicate our work and use it in their own research. Overall, our article is intended to stimulate further uptake of these techniques in the social sciences.

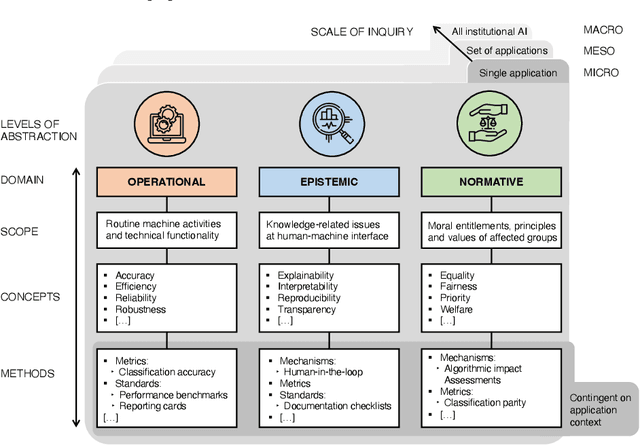

A multidomain relational framework to guide institutional AI research and adoption

Mar 17, 2023

Abstract: Calls for new metrics, technical standards and governance mechanisms to guide the adoption of Artificial Intelligence (AI) in institutions and public administration are now commonplace. Yet, most research and policy efforts aimed at understanding the implications of adopting AI tend to prioritize only a handful of ideas; they do not fully account for all the different perspectives and topics that are potentially relevant. In this position paper, we contend that this omission stems, in part, from what we call the relational problem in socio-technical discourse: fundamental ontological issues have not yet been settled, including semantic ambiguity, a lack of clear relations between concepts and differing standard terminologies. This contributes to the persistence of disparate modes of reasoning to assess institutional AI systems, and the prevalence of conceptual isolation in the fields that study them, including ML, human factors, social science and policy. After developing this critique, we offer a way forward by proposing a simple policy and research design tool in the form of a conceptual framework to organize terms across fields, consisting of three horizontal domains for grouping relevant concepts and related methods: operational, epistemic, and normative. We first situate this framework against the backdrop of recent socio-technical discourse at two premier academic venues, AIES and FAccT, before illustrating how developing suitable metrics, standards, and mechanisms can be aided by operationalizing relevant concepts in each of these domains. Finally, we outline outstanding questions for developing this relational approach to institutional AI research and adoption.

Estimating Chicago's tree cover and canopy height using multi-spectral satellite imagery

Dec 09, 2022

Abstract: Information on urban tree canopies is fundamental to mitigating climate change [1] as well as improving quality of life [2]. Urban tree planting initiatives face a lack of up-to-date data about the horizontal and vertical dimensions of the tree canopy in cities. We present a pipeline that utilizes LiDAR data as ground-truth and then trains a multi-task machine learning model to generate reliable estimates of tree cover and canopy height in urban areas using multi-source multi-spectral satellite imagery for the case study of Chicago.
