Jinyi Qi

Data-Centric Learning Framework for Real-Time Detection of Aiming Beam in Fluorescence Lifetime Imaging Guided Surgery

Nov 11, 2024

Abstract: This study introduces a novel data-centric approach to improve real-time surgical guidance using fiber-based fluorescence lifetime imaging (FLIm). A key aspect of the methodology is the accurate detection of the aiming beam, which is essential for localizing points used to map FLIm measurements onto the tissue region within the surgical field. The primary challenge arises from the complex and variable conditions encountered in the surgical environment, particularly in Transoral Robotic Surgery (TORS). Uneven illumination in the surgical field can cause reflections, reduce contrast, and result in inconsistent color representation, further complicating aiming beam detection. To overcome these challenges, an instance segmentation model was developed using a data-centric training strategy that improves accuracy by minimizing label noise and enhancing detection robustness. The model was evaluated on a dataset comprising 40 in vivo surgical videos, demonstrating a median detection rate of 85%. This performance was maintained when the model was integrated into a clinical system, achieving a similar detection rate of 85% during TORS procedures conducted in patients. The system's computational efficiency, measured at approximately 24 frames per second (FPS), was sufficient for real-time surgical guidance. This study enhances the reliability of FLIm-based aiming beam detection in complex surgical environments, advancing the feasibility of real-time, image-guided interventions for improved surgical precision.

Neural KEM: A Kernel Method with Deep Coefficient Prior for PET Image Reconstruction

Jan 05, 2022

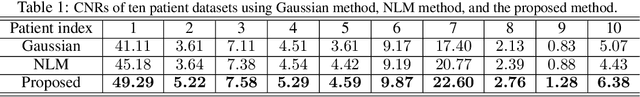

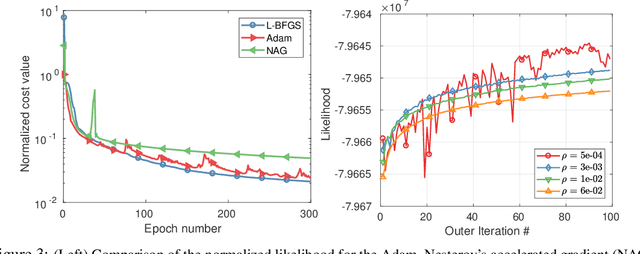

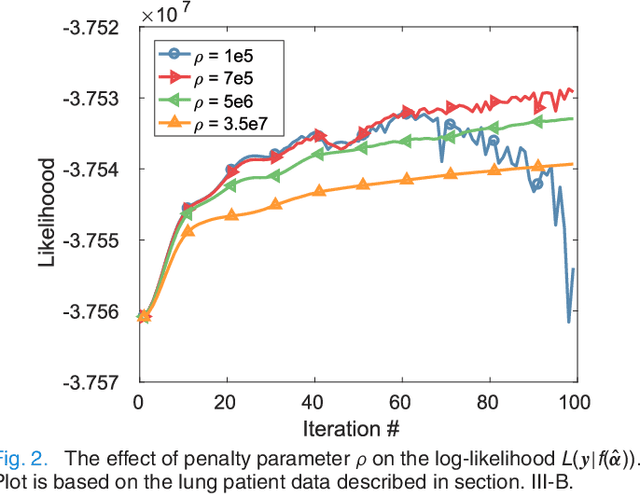

Abstract: Image reconstruction of low-count positron emission tomography (PET) data is challenging. Kernel methods address the challenge by incorporating image prior information in the forward model of iterative PET image reconstruction. The kernelized expectation-maximization (KEM) algorithm has been developed and demonstrated to be effective and easy to implement. A common approach to further improving the kernel method is to add explicit regularization, which, however, leads to a complex optimization problem. In this paper, we propose an implicit regularization for the kernel method by using a deep coefficient prior, which represents the kernel coefficient image in the PET forward model using a convolutional neural network. To solve the maximum-likelihood neural network-based reconstruction problem, we apply the principle of optimization transfer to derive a neural KEM algorithm. Each iteration of the algorithm consists of two separate steps: a KEM step for image update from the projection data and a deep-learning step in the image domain for updating the kernel coefficient image using the neural network. This optimization algorithm is guaranteed to monotonically increase the data likelihood. The results from computer simulations and real patient data have demonstrated that the neural KEM can outperform existing KEM and deep image prior methods.
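As a rough illustration of the KEM step mentioned in the abstract, the sketch below applies the standard kernelized EM update to a toy problem. It covers only the KEM step, not the neural-network step. The names `P` (system matrix), `K` (kernel matrix), `r` (background term), and the toy dimensions are assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def kem_update(alpha, y, P, K, r, eps=1e-12):
    """One kernelized EM update of the kernel coefficient image alpha."""
    expected = P @ (K @ alpha) + r               # forward model: P K alpha + r
    ratio = y / np.maximum(expected, eps)        # measured / expected counts
    sensitivity = K.T @ (P.T @ np.ones_like(y))  # K^T P^T 1
    return alpha / np.maximum(sensitivity, eps) * (K.T @ (P.T @ ratio))

def poisson_loglik(alpha, y, P, K, r, eps=1e-12):
    """Poisson log-likelihood of the data, up to a constant in y."""
    expected = P @ (K @ alpha) + r
    return float(np.sum(y * np.log(np.maximum(expected, eps)) - expected))

# Toy problem with random non-negative matrices (illustrative only).
rng = np.random.default_rng(0)
n_pix, n_bins = 16, 40
P = rng.uniform(0.0, 1.0, size=(n_bins, n_pix))
K = np.eye(n_pix) + 0.1 * rng.uniform(size=(n_pix, n_pix))
K /= K.sum(axis=1, keepdims=True)                # row-normalized kernel
r = np.full(n_bins, 0.5)
true_alpha = rng.uniform(1.0, 5.0, size=n_pix)
y = rng.poisson(P @ (K @ true_alpha) + r).astype(float)

alpha = np.ones(n_pix)
ll_history = [poisson_loglik(alpha, y, P, K, r)]
for _ in range(50):
    alpha = kem_update(alpha, y, P, K, r)
    ll_history.append(poisson_loglik(alpha, y, P, K, r))

x = K @ alpha                                    # reconstructed image
```

The recorded `ll_history` should be non-decreasing, which is the monotonicity property of EM that the abstract refers to for the full algorithm.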

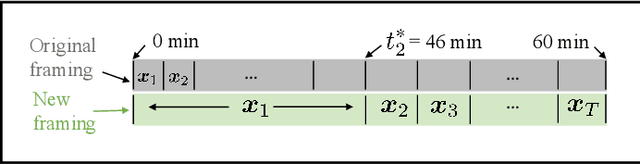

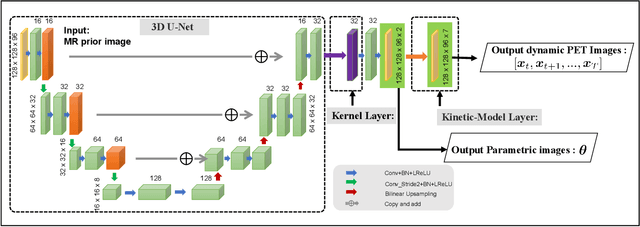

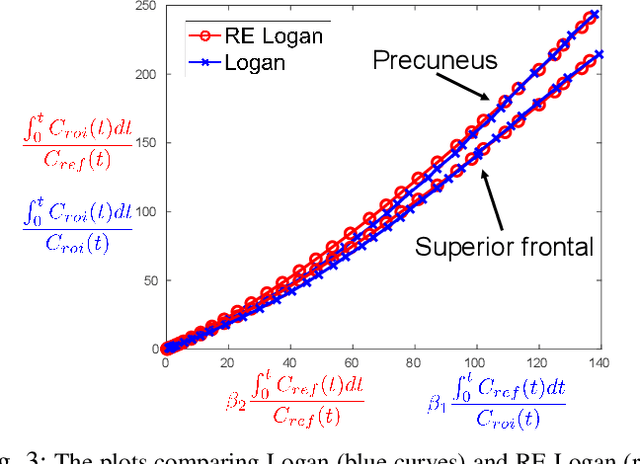

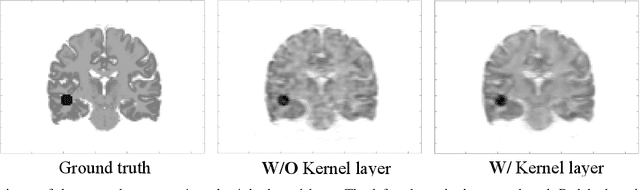

Direct Reconstruction of Linear Parametric Images from Dynamic PET Using Nonlocal Deep Image Prior

Jun 18, 2021

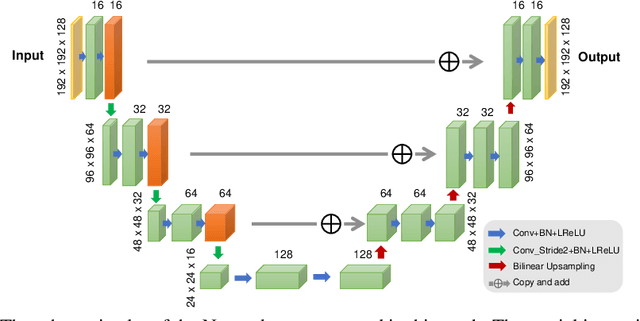

Abstract: Direct reconstruction methods have been developed to estimate parametric images directly from the measured PET sinograms by combining the PET imaging model and tracer kinetics in an integrated framework. Due to the limited counts received, the signal-to-noise ratio (SNR) and resolution of parametric images produced by direct reconstruction frameworks are still limited. Recently, supervised deep learning methods have been successfully applied to medical imaging denoising/reconstruction when a large number of high-quality training labels are available. For static PET imaging, high-quality training labels can be acquired by extending the scanning time. However, this is not feasible for dynamic PET imaging, where the scanning time is already long. In this work, we proposed an unsupervised deep learning framework for direct parametric reconstruction from dynamic PET, which was tested on the Patlak model and the relative equilibrium Logan model. The patient's anatomical prior image, which is readily available from PET/CT or PET/MR scans, was supplied as the network input to provide a manifold constraint, and was also utilized to construct a kernel layer to perform non-local feature denoising. The linear kinetic model was embedded in the network structure as a 1x1 convolution layer. The training objective function was based on the PET statistical model. Evaluations based on dynamic datasets of 18F-FDG and 11C-PiB tracers show that the proposed framework can outperform the traditional and the kernel method-based direct reconstruction methods.
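To make the "linear kinetic model as a 1x1 convolution" idea concrete, the following sketch writes the Patlak model as a per-pixel linear map from two parametric images (slope and intercept) to a dynamic image series. The function names, the toy plasma input curve, and the use of point-sampled rather than frame-averaged values are all simplifying assumptions for illustration. The toy also ignores the fact that the Patlak model holds only after an equilibration time.

```python
import numpy as np

def patlak_basis(t, cp):
    """Return the (frames, 2) Patlak basis: [integral of cp, cp]."""
    # Cumulative trapezoidal integral of the plasma input function.
    steps = 0.5 * (cp[1:] + cp[:-1]) * np.diff(t)
    integral = np.concatenate(([0.0], np.cumsum(steps)))
    return np.stack([integral, cp], axis=1)

def apply_linear_kinetics(theta, B):
    """Map parametric images theta (2, H, W) to frames (F, H, W).

    This is the same operation as a 1x1 convolution whose weights are B:
    each pixel's time-activity curve is B times that pixel's 2 parameters.
    """
    return np.einsum("fk,khw->fhw", B, theta)

# Toy data (illustrative values only).
t = np.linspace(0.0, 60.0, 13)            # sample times in minutes
cp = 5.0 * np.exp(-0.1 * t) + 0.5         # hypothetical plasma input
B = patlak_basis(t, cp)

rng = np.random.default_rng(0)
theta_true = rng.uniform(0.01, 1.0, size=(2, 4, 4))  # slope, intercept
frames = apply_linear_kinetics(theta_true, B)

# Noise-free sanity check: per-pixel least squares recovers theta.
flat = frames.reshape(len(t), -1)
theta_fit = np.linalg.lstsq(B, flat, rcond=None)[0].reshape(2, 4, 4)
```

Because the map is linear and fixed, it can sit at the end of a network as a layer with frozen weights, so the network outputs parametric images while the loss is evaluated on the dynamic data.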

Learning Personalized Representation for Inverse Problems in Medical Imaging Using Deep Neural Network

Jul 04, 2018

Abstract: Recently, deep neural networks have been widely and successfully applied in computer vision tasks and have attracted growing interest in medical imaging. One barrier to the application of deep neural networks to medical imaging is the need for large amounts of prior training pairs, which is not always feasible in clinical practice. In this work we propose a personalized representation learning framework where no prior training pairs are needed, but only the patient's own prior images. The representation is expressed using a deep neural network with the patient's prior images as network input. We then applied this novel image representation to inverse problems in medical imaging in which the original inverse problem was formulated as a constrained optimization problem and solved using the alternating direction method of multipliers (ADMM) algorithm. Anatomically guided brain positron emission tomography (PET) image reconstruction and image denoising were employed as examples to demonstrate the effectiveness of the proposed framework. Quantification results based on simulation and real datasets show that the proposed personalized representation framework outperforms other widely adopted methods.

Iterative PET Image Reconstruction Using Convolutional Neural Network Representation

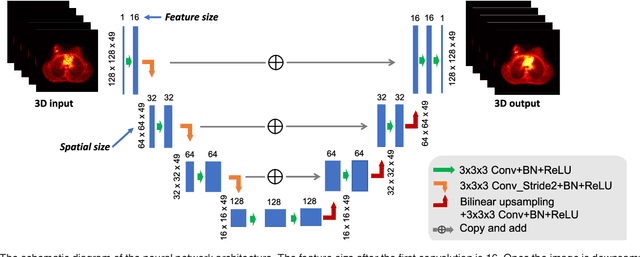

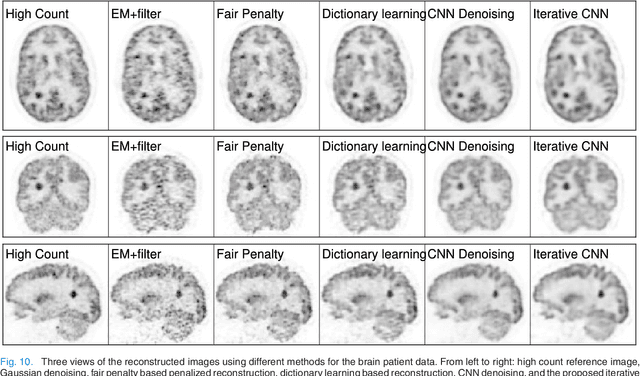

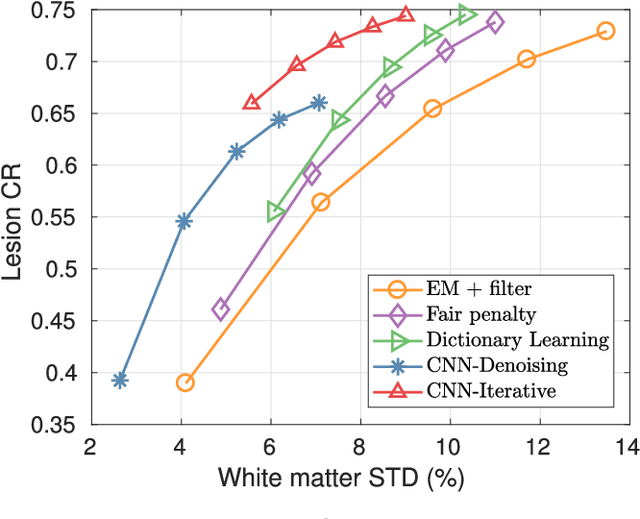

Oct 09, 2017

Abstract: PET image reconstruction is challenging due to the ill-posedness of the inverse problem and the limited number of detected photons. Recently, deep neural networks have been widely and successfully used in computer vision tasks and have attracted growing interest in medical imaging. In this work, we trained a deep residual convolutional neural network to improve PET image quality by using the existing inter-patient information. An innovative feature of the proposed method is that we embed the neural network in the iterative reconstruction framework for image representation, rather than using it as a post-processing tool. We formulate the objective function as a constrained optimization problem and solve it using the alternating direction method of multipliers (ADMM) algorithm. Both simulation data and hybrid real data are used to evaluate the proposed method. Quantification results show that our proposed iterative neural network method can outperform the neural network denoising and conventional penalized maximum likelihood methods.
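For readers unfamiliar with the splitting, a generic scaled-form ADMM for a reconstruction constrained to a network representation can be written as follows. This is a textbook-style sketch, not the paper's exact formulation: the symbols $L(y \mid x)$ (data log-likelihood), $f$ (network), $\alpha$ (network input), $\mu$ (scaled dual variable), and $\rho$ (penalty parameter) are notation assumed here.

```latex
\begin{aligned}
&\max_{x,\,\alpha}\; L(y \mid x) \quad \text{subject to} \quad x = f(\alpha), \\[4pt]
&\alpha^{n+1} = \arg\min_{\alpha}\; \bigl\| f(\alpha) - \bigl(x^{n} + \mu^{n}\bigr) \bigr\|^{2}, \\
&x^{n+1} = \arg\max_{x}\; L(y \mid x) - \frac{\rho}{2}\, \bigl\| x - f(\alpha^{n+1}) + \mu^{n} \bigr\|^{2}, \\
&\mu^{n+1} = \mu^{n} + x^{n+1} - f(\alpha^{n+1}).
\end{aligned}
```

The first subproblem is a fitting step in the image domain, the second is a penalized-likelihood reconstruction step, and the third updates the dual variable that ties the two together.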
