Mohamed Abul Hassan

Data-Centric Learning Framework for Real-Time Detection of Aiming Beam in Fluorescence Lifetime Imaging Guided Surgery

Nov 11, 2024

Abstract: This study introduces a novel data-centric approach to improve real-time surgical guidance using fiber-based fluorescence lifetime imaging (FLIm). A key aspect of the methodology is the accurate detection of the aiming beam, which is essential for localizing points used to map FLIm measurements onto the tissue region within the surgical field. The primary challenge arises from the complex and variable conditions encountered in the surgical environment, particularly in Transoral Robotic Surgery (TORS). Uneven illumination in the surgical field can cause reflections, reduce contrast, and result in inconsistent color representation, further complicating aiming beam detection. To overcome these challenges, an instance segmentation model was developed using a data-centric training strategy that improves accuracy by minimizing label noise and enhancing detection robustness. The model was evaluated on a dataset comprising 40 in vivo surgical videos, demonstrating a median detection rate of 85%. This performance was maintained when the model was integrated in a clinical system, achieving a similar detection rate of 85% during TORS procedures conducted in patients. The system's computational efficiency, measured at approximately 24 frames per second (FPS), was sufficient for real-time surgical guidance. This study enhances the reliability of FLIm-based aiming beam detection in complex surgical environments, advancing the feasibility of real-time, image-guided interventions for improved surgical precision.

Automatic Recognition of Food Ingestion Environment from the AIM-2 Wearable Sensor

May 13, 2024

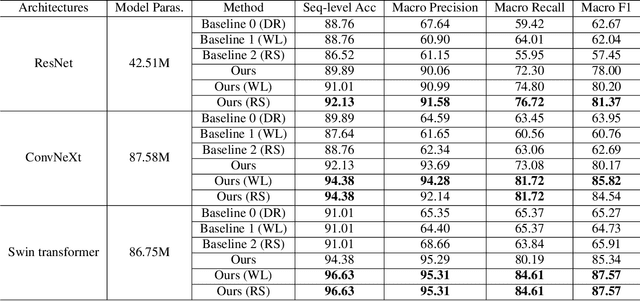

Abstract: Detecting an ingestion environment is an important aspect of monitoring dietary intake. It provides insightful information for dietary assessment. However, it is a challenging problem where human-based reviewing can be tedious, and algorithm-based review suffers from data imbalance and perceptual aliasing problems. To address these issues, we propose a neural network-based method with a two-stage training framework that combines fine-tuning and transfer learning techniques. Our method is evaluated on a newly collected dataset called "UA Free Living Study", which uses an egocentric wearable camera, the AIM-2 sensor, to simulate food consumption in free-living conditions. The proposed training framework is applied to common neural network backbones, combined with approaches from the general imbalanced classification field. Experimental results on the collected dataset show that our proposed method for automatic ingestion environment recognition successfully addresses the challenging data imbalance problem in the dataset and achieves a promising overall classification accuracy of 96.63%.
