Jie Su

MFH: A Multi-faceted Heuristic Algorithm Selection Approach for Software Verification

Mar 28, 2025

Abstract:Currently, many verification algorithms are available to improve the reliability of software systems. Selecting the appropriate verification algorithm typically demands domain expertise and non-trivial manpower. An automated algorithm selector is thus desired. However, existing selectors, which depend on either machine-learned strategies or manually designed heuristics, encounter issues such as reliance on high-quality samples with algorithm labels and limited scalability. In this paper, an automated algorithm selection approach, namely MFH, is proposed for software verification. Our approach leverages the heuristic that verifiers producing correct results typically implement certain appropriate algorithms, and that the algorithms supported by these verifiers indirectly reflect which ones are potentially applicable. Specifically, MFH embeds the code property graph (CPG) of a semantic-preserving transformed program to enhance the robustness of the prediction model. Furthermore, our approach decomposes the selection task into the sub-tasks of predicting potentially applicable algorithms and matching the most appropriate verifiers. Additionally, MFH introduces a feedback loop on incorrect predictions to improve model prediction accuracy. We evaluate MFH on 20 verifiers and over 15,000 verification tasks. Experimental results demonstrate the effectiveness of MFH, achieving a prediction accuracy of 91.47% even without ground truth algorithm labels provided during the training phase. Moreover, the prediction accuracy decreases by only 0.84% when introducing 10 new verifiers, indicating the strong scalability of the proposed approach.

Neural Dynamics Model of Visual Decision-Making: Learning from Human Experts

Sep 04, 2024

Abstract:Uncovering the fundamental neural correlates of biological intelligence, developing mathematical models, and conducting computational simulations are critical for advancing new paradigms in artificial intelligence (AI). In this study, we implemented a comprehensive visual decision-making model that spans from visual input to behavioral output, using a neural dynamics modeling approach. Drawing inspiration from the key components of the dorsal visual pathway in primates, our model not only aligns closely with human behavior but also reflects neural activities in primates, and achieves accuracy comparable to convolutional neural networks (CNNs). Moreover, magnetic resonance imaging (MRI) identified key neuroimaging features, such as structural connections and functional connectivity, that are associated with performance in perceptual decision-making tasks. A neuroimaging-informed fine-tuning approach was introduced and applied to the model, leading to performance improvements that paralleled the behavioral variations observed among subjects. Compared to classical deep learning models, our model more accurately replicates the behavioral performance of biological intelligence, relying on the structural characteristics of biological neural networks rather than extensive training data, and demonstrates enhanced resilience to perturbation.

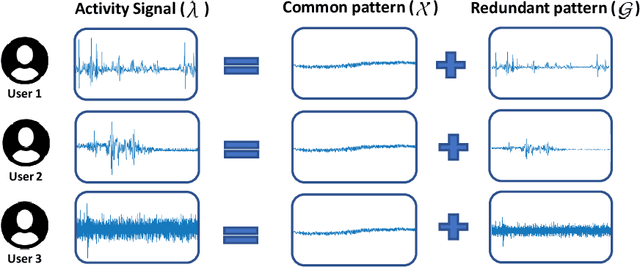

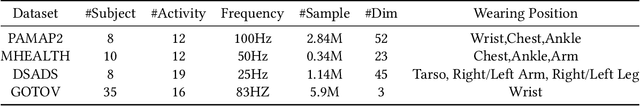

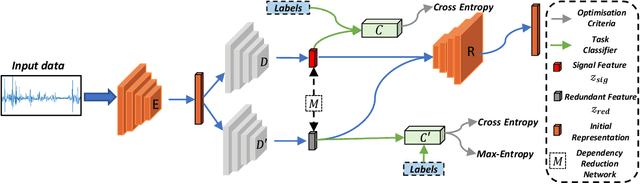

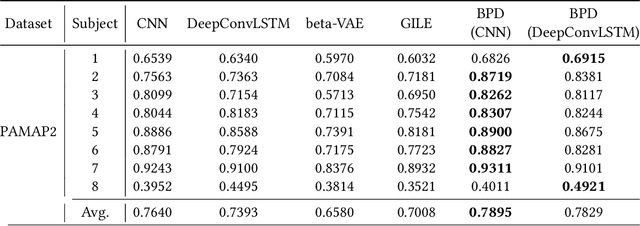

Learning Disentangled Behaviour Patterns for Wearable-based Human Activity Recognition

Feb 15, 2022

Abstract:In wearable-based human activity recognition (HAR) research, one of the major challenges is the large intra-class variability problem. The collected activity signal is often, if not always, coupled with noise or bias caused by personal, environmental, or other factors, making it difficult to learn effective features for HAR tasks, especially when data are inadequate. To address this issue, in this work, we proposed a Behaviour Pattern Disentanglement (BPD) framework, which can disentangle the behaviour patterns from irrelevant noise such as personal styles or environmental noise. Based on a disentanglement network, we designed several loss functions and used an adversarial training strategy for optimization, which can disentangle activity signals from the irrelevant noise with the least dependency (between them) in the feature space. Our BPD framework is flexible, and it can be used on top of existing deep learning (DL) approaches for feature refinement. Extensive experiments were conducted on four public HAR datasets, and the promising results of our proposed BPD scheme suggest its flexibility and effectiveness. This is an open-source project, and the code can be found at http://github.com/Jie-su/BPD

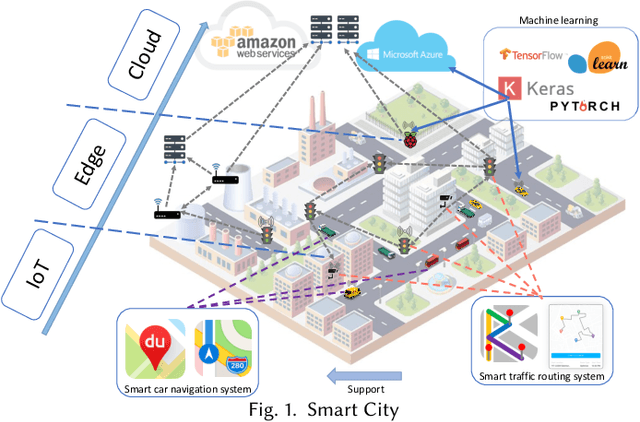

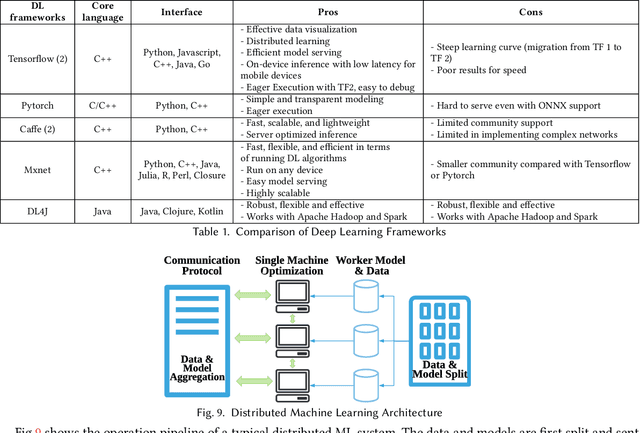

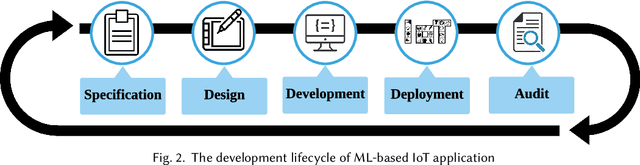

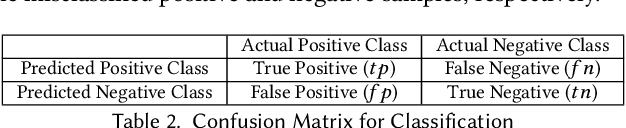

Orchestrating Development Lifecycle of Machine Learning Based IoT Applications: A Survey

Oct 15, 2019

Abstract:Machine Learning (ML) and Internet of Things (IoT) are complementary advances: ML techniques unlock the full potential of IoT with intelligence, and IoT applications increasingly feed data collected by sensors into ML models, thereby employing results to improve their business processes and services. Hence, orchestrating ML pipelines that encompass model training and implication involved in the holistic development lifecycle of an IoT application often leads to complex system integration. This paper provides a comprehensive and systematic survey on the development lifecycle of ML-based IoT applications. We outline the core roadmap and taxonomy, and subsequently assess and compare existing standard techniques used in each individual stage.

Wasserstein Distance Guided Cross-Domain Learning

Oct 14, 2019

Abstract:Domain adaptation aims to generalise a high-performance learner on a target domain (non-labelled data) by leveraging the knowledge from a source domain (rich labelled data) which comes from a different but related distribution. Assuming the source and target domain data (e.g. images) come from a joint distribution but follow different marginal distributions, the domain adaptation work aims to infer the joint distribution from the source and target domains to learn the domain-invariant features. Therefore, in this study, I extend the existing state-of-the-art approach to solve the domain adaptation problem. In particular, I propose a new approach to infer the joint distribution of images from different distributions, namely Wasserstein Distance Guided Cross-Domain Learning (WDGCDL). WDGCDL applies the Wasserstein distance to estimate the divergence between the source and target distributions, which provides good gradient properties and a promising generalisation bound. Moreover, to tackle the training difficulty of the proposed framework, I propose two different training schemes for stable training. Qualitative results show that this new approach is superior to the existing state-of-the-art methods in the standard domain adaptation benchmark.
