Jiangming Wang

Effort: Efficient Orthogonal Modeling for Generalizable AI-Generated Image Detection

Nov 23, 2024

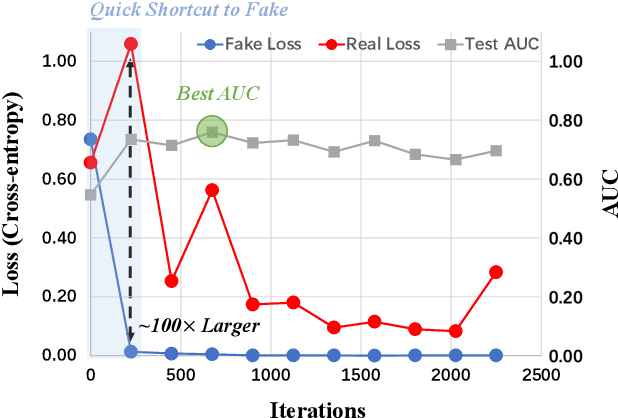

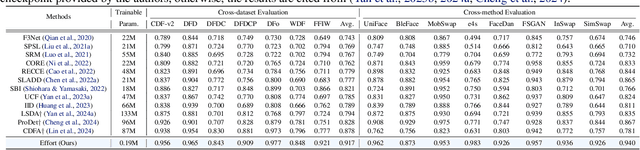

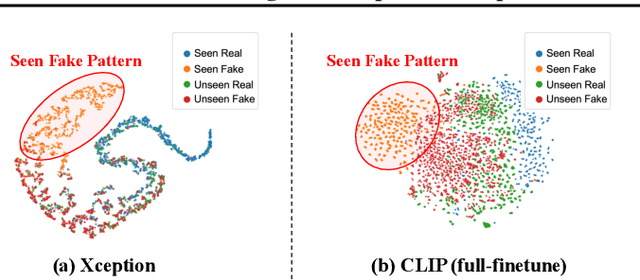

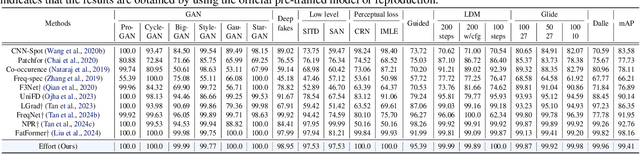

Abstract: Existing AI-generated image (AIGI) detection methods often suffer from limited generalization performance. In this paper, we identify a crucial yet previously overlooked asymmetry phenomenon in AIGI detection: during training, models tend to quickly overfit to specific fake patterns in the training set, while other information is not adequately captured, leading to poor generalization when faced with new fake methods. A key insight is to incorporate the rich semantic knowledge embedded within large-scale vision foundation models (VFMs) to expand the previous discriminative space (based on forgery patterns only), such that the discrimination is decided by both forgery and semantic cues, thereby reducing the overfitting to specific forgery patterns. A straightforward solution is to fully fine-tune VFMs, but it risks distorting the well-learned semantic knowledge, pushing the model back toward overfitting. To this end, we design a novel approach called Effort: Efficient orthogonal modeling for generalizable AIGI detection. Specifically, we employ Singular Value Decomposition (SVD) to construct the orthogonal semantic and forgery subspaces. By freezing the principal components and adapting the residual components (~0.19M parameters), we preserve the original semantic subspace and use its orthogonal subspace for learning forgeries. Extensive experiments on AIGI detection benchmarks demonstrate the superior effectiveness of our approach.
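The SVD split described in the abstract can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function name `split_weight`, the rank `r`, and the random 8x6 matrix standing in for a pretrained weight are all assumptions made for illustration. It only shows that the top-`r` principal part (which would be frozen) and the residual part (which would be adapted) add up to the original weight and span orthogonal subspaces.

```python
import numpy as np

def split_weight(W, r):
    """Split W into a rank-r principal part and a residual part via SVD.

    Hypothetical helper: `r` (how many principal components to keep
    frozen) is an illustrative choice, not a value from the paper.
    """
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    # Top-r singular directions: the part that would stay frozen.
    W_main = (U[:, :r] * S[:r]) @ Vt[:r]
    # Remaining directions: the part that would be adapted.
    W_res = (U[:, r:] * S[r:]) @ Vt[r:]
    return W_main, W_res

# Toy stand-in for a pretrained weight matrix (assumption).
rng = np.random.default_rng(0)
W = rng.standard_normal((8, 6))
W_main, W_res = split_weight(W, r=4)

# The two parts reconstruct W exactly (up to floating-point error).
reconstruction_ok = np.allclose(W_main + W_res, W)
# Their column spaces and row spaces are mutually orthogonal.
orthogonal_ok = np.allclose(W_main.T @ W_res, 0.0, atol=1e-10) and \
                np.allclose(W_main @ W_res.T, 0.0, atol=1e-10)
```

In this sketch only the residual part would receive gradient updates, so the principal part is untouched by training. Whether the residual stays orthogonal to it during training depends on how the update is constrained, which this sketch does not model.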

Mutual Information Guided Optimal Transport for Unsupervised Visible-Infrared Person Re-identification

Jul 17, 2024

Abstract: Unsupervised visible-infrared person re-identification (USVI-ReID) is a challenging retrieval task that aims to retrieve cross-modality pedestrian images without using any label information. In this task, the large cross-modality variance makes it difficult to generate reliable cross-modality labels, and the lack of annotations makes it harder still to learn modality-invariant features. In this paper, we first deduce an optimization objective for unsupervised VI-ReID based on the mutual information between the model's cross-modality input and output. With equivalent derivation, three learning principles are obtained: "Sharpness" (entropy minimization), "Fairness" (uniform label distribution), and "Fitness" (reliable cross-modality matching). Under their guidance, we design an iterative training strategy that alternates between model training and cross-modality matching. In the matching stage, a uniform prior guided optimal transport assignment ("Fitness", "Fairness") is proposed to select matched visible and infrared prototypes. In the training stage, we utilize this matching information to introduce prototype-based contrastive learning for minimizing the intra- and cross-modality entropy ("Sharpness"). Extensive experimental results on benchmarks demonstrate the effectiveness of our method, e.g., 60.6% and 90.3% Rank-1 accuracy on SYSU-MM01 and RegDB, respectively, without any annotations.
