Jian Han

InfinityStar: Unified Spacetime AutoRegressive Modeling for Visual Generation

Nov 06, 2025

Abstract:We introduce InfinityStar, a unified spacetime autoregressive framework for high-resolution image and dynamic video synthesis. Building on the recent success of autoregressive modeling in both vision and language, our purely discrete approach jointly captures spatial and temporal dependencies within a single architecture. This unified design naturally supports a variety of generation tasks such as text-to-image, text-to-video, image-to-video, and long interactive video synthesis via straightforward temporal autoregression. Extensive experiments demonstrate that InfinityStar scores 83.74 on VBench, outperforming all autoregressive models by large margins and even surpassing some diffusion competitors such as HunyuanVideo. Without extra optimizations, our model generates a 5s, 720p video approximately 10x faster than leading diffusion-based methods. To our knowledge, InfinityStar is the first discrete autoregressive video generator capable of producing industrial-level 720p videos. We release all code and models to foster further research in efficient, high-quality video generation.

Infinity: Scaling Bitwise AutoRegressive Modeling for High-Resolution Image Synthesis

Dec 05, 2024

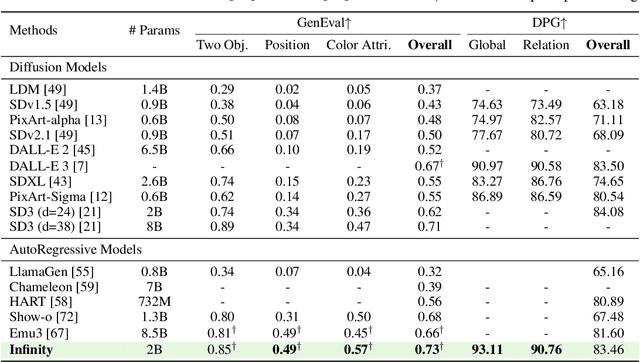

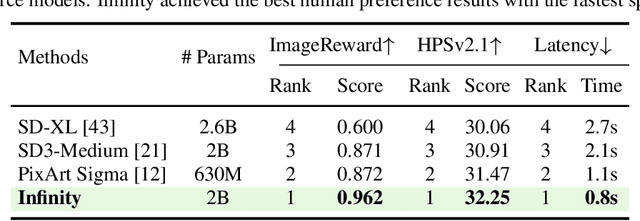

Abstract:We present Infinity, a Bitwise Visual AutoRegressive Model capable of generating high-resolution, photorealistic images following language instructions. Infinity redefines visual autoregressive modeling under a bitwise token prediction framework with an infinite-vocabulary tokenizer and classifier and a bitwise self-correction mechanism, markedly improving generation capacity and detail. By theoretically scaling the tokenizer vocabulary size to infinity and concurrently scaling the transformer size, our method unlocks substantially stronger scaling capabilities than vanilla VAR. Infinity sets a new record for autoregressive text-to-image models, outperforming top-tier diffusion models like SD3-Medium and SDXL. Notably, Infinity surpasses SD3-Medium by improving the GenEval benchmark score from 0.62 to 0.73 and the ImageReward benchmark score from 0.87 to 0.96, achieving a win rate of 66%. Without extra optimization, Infinity generates a high-quality 1024x1024 image in 0.8 seconds, making it 2.6x faster than SD3-Medium and establishing it as the fastest text-to-image model. Models and code will be released to promote further exploration of Infinity for visual generation and unified tokenizer modeling.

Delving into Probabilistic Uncertainty for Unsupervised Domain Adaptive Person Re-Identification

Dec 28, 2021

Abstract:Clustering-based unsupervised domain adaptive (UDA) person re-identification (ReID) reduces the need for exhaustive annotation. However, owing to unsatisfactory feature embedding and imperfect clustering, pseudo labels for target domain data inherently contain an unknown proportion of wrong labels, which can mislead feature learning. In this paper, we propose an approach named probabilistic uncertainty guided progressive label refinery (P$^2$LR) for domain adaptive person re-identification. First, we propose to model the labeling uncertainty with the probabilistic distance along with ideal single-peak distributions. A quantitative criterion is established to measure the uncertainty of pseudo labels and facilitate the network training. Second, we explore a progressive strategy for refining pseudo labels. With the uncertainty-guided alternative optimization, we balance between the exploration of target domain data and the negative effects of noisy labeling. On top of a strong baseline, we obtain significant improvements and achieve state-of-the-art performance on four UDA ReID benchmarks. Specifically, our method outperforms the baseline by 6.5% mAP on the Duke2Market task, while surpassing the state-of-the-art method by 2.5% mAP on the Market2MSMT task.
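
One way to picture the uncertainty criterion mentioned in the abstract is as a distance between a sample's predicted distribution over clusters and an ideal single-peak distribution centered on its pseudo label. The sketch below is illustrative only and is not taken from the paper: the use of KL divergence as the "probabilistic distance", its direction, the `peak_mass` value, and all function names are assumptions.

```python
import math


def single_peak(num_classes, peak_index, peak_mass=0.9):
    """Ideal distribution: most mass on the pseudo label, the rest spread evenly.

    peak_mass is an assumed hyperparameter, not a value from the paper.
    """
    rest = (1.0 - peak_mass) / (num_classes - 1)
    return [peak_mass if i == peak_index else rest for i in range(num_classes)]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def label_uncertainty(pred, pseudo_label, peak_mass=0.9):
    """Assumed criterion: KL divergence from the ideal single-peak distribution to the prediction."""
    ideal = single_peak(len(pred), pseudo_label, peak_mass)
    return kl_divergence(ideal, pred)


confident = [0.9, 0.05, 0.05]   # prediction agrees strongly with pseudo label 0
ambiguous = [1 / 3, 1 / 3, 1 / 3]  # prediction is flat across clusters

u_confident = label_uncertainty(confident, pseudo_label=0)
u_ambiguous = label_uncertainty(ambiguous, pseudo_label=0)
```

Under these assumptions, a flat prediction receives a larger uncertainty than a sharply peaked one, so samples could be ranked by this score and the least certain pseudo labels held out of training.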

Learning adaptively from the unknown for few-example video person re-ID

Aug 25, 2019

Abstract:This paper studies one-example and few-example video person re-identification. We propose PAM, a multi-branch network that jointly learns local and global features. PAM achieves high accuracy with few parameters and converges quickly, which makes it suitable for few-example person re-identification. We iteratively estimate labels for unlabeled samples, incorporate them into the training set, and train a more robust network. We propose a static relative distance sampling (SRD) strategy based on the relative distance between classes. Because SRD cannot use all unlabeled samples, we further propose an adaptive relative distance sampling (ARD) strategy. In the one-example setting, we obtain 89.78% and 56.13% rank-1 accuracy on PRID2011 and iLIDS-VID respectively, and 85.16% and 45.36% mAP on DukeMTMC and MARS respectively, exceeding previous methods by a large margin.

Intra-clip Aggregation for Video Person Re-identification

May 05, 2019

Abstract:Video-based person re-id has drawn much attention in recent years due to its prospective applications in video surveillance. Most existing methods concentrate on how to represent discriminative clip-level features. Moreover, clip-level data augmentation is also important, especially for temporal aggregation tasks. Inconsistent intra-clip augmentation will collapse inter-frame alignment, thus bringing in additional noise. To tackle the above-mentioned problems, we design a novel framework for video-based person re-id, which consists of two main modules: Synchronized Transformation (ST) and Intra-clip Aggregation (ICA). The former module augments intra-clip frames with the same probability and the same operation, while the latter leverages two-level intra-clip encoding to generate more discriminative clip-level features. To confirm the advantage of synchronized transformation, we conduct an ablation study with different synchronized transformation schemes. We also perform a cross-dataset experiment to better understand the generality of our method. Extensive experiments on three benchmark datasets demonstrate that our framework outperforms most recent state-of-the-art methods.
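
The idea behind Synchronized Transformation, one random decision shared by every frame of a clip, can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names, the choice of horizontal flip as the operation, and the toy list-based frames are all assumptions made for the example.

```python
import random


def horizontal_flip(frame):
    """Mirror a frame (a list of pixel rows) left to right."""
    return [row[::-1] for row in frame]


def copy_frame(frame):
    """Return an unmodified copy of a frame."""
    return [row[:] for row in frame]


def synchronized_flip(clip, p=0.5, rng=random):
    """Draw ONE random decision per clip and apply it to every frame."""
    do_flip = rng.random() < p  # a single draw shared by the whole clip
    op = horizontal_flip if do_flip else copy_frame
    return [op(frame) for frame in clip]


def independent_flip(clip, p=0.5, rng=random):
    """Contrast case: each frame draws its own decision, so frames can disagree."""
    return [horizontal_flip(f) if rng.random() < p else copy_frame(f) for f in clip]


# Four identical toy 2x3 frames standing in for one video clip.
clip = [[[1, 2, 3], [4, 5, 6]] for _ in range(4)]
augmented = synchronized_flip(clip, p=0.5, rng=random.Random(0))
```

Because the decision is drawn once per clip, all frames in `augmented` are either flipped or left untouched together, which preserves inter-frame alignment. With `independent_flip`, some frames may be mirrored while their neighbors are not, which is the inconsistency the abstract warns about.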

Improving Face Detection Performance with 3D-Rendered Synthetic Data

Dec 18, 2018

Abstract:In this paper, we present a methodology for generating synthetic data with fully controlled, multifaceted variations based on a new 3D face dataset (3DU-Face). We customize synthetic datasets to address specific types of variation (scale, pose, occlusion, blur, etc.) and systematically investigate the influence of each on face detection performance. We examine whether and how these factors contribute to better face detection. We validate our synthetic data augmentation for different face detectors (Faster RCNN, SSH and HR) on various face datasets (MAFA, UFDD and Wider Face).
