Jiahe Fan

Computer Vision for Road Imaging and Pothole Detection: A State-of-the-Art Review of Systems and Algorithms

Apr 28, 2022

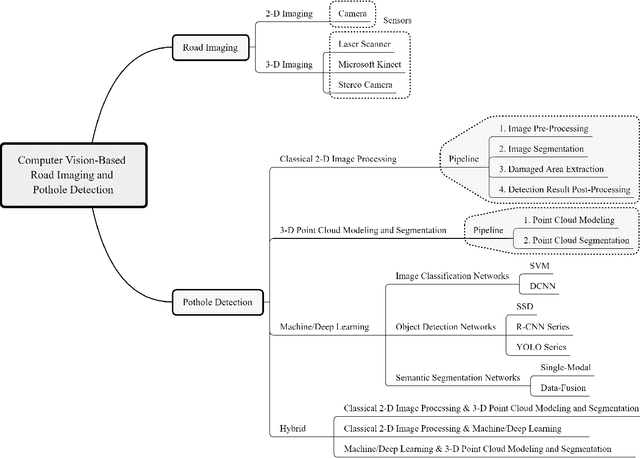

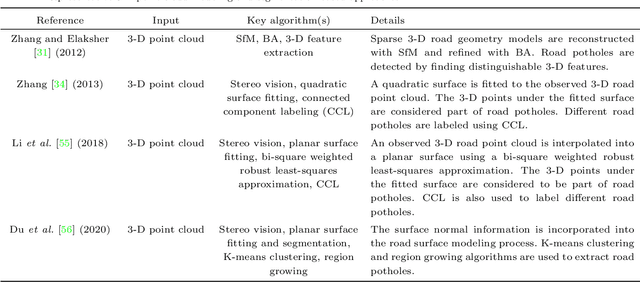

Abstract: Computer vision algorithms have been widely used for 3-D road imaging and pothole detection for over two decades. Nonetheless, there is a lack of systematic survey articles on state-of-the-art (SoTA) computer vision techniques, especially deep learning models, developed to tackle these problems. This article first introduces the sensing systems employed for 2-D and 3-D road data acquisition, including cameras, laser scanners, and Microsoft Kinect. It then comprehensively reviews the SoTA computer vision algorithms developed for road pothole detection, including (1) classical 2-D image processing, (2) 3-D point cloud modeling and segmentation, and (3) machine/deep learning. This article also discusses the existing challenges and future development trends of computer vision-based road pothole detection: classical 2-D image processing-based and 3-D point cloud modeling and segmentation-based approaches have already become history, whereas convolutional neural networks (CNNs) have demonstrated compelling road pothole detection results and are promising for breaking the current bottleneck as self-/un-supervised learning for multi-modal semantic segmentation advances. We believe that this survey can serve as practical guidance for developing next-generation road condition assessment systems.

Multi-Scale Feature Fusion: Learning Better Semantic Segmentation for Road Pothole Detection

Dec 24, 2021

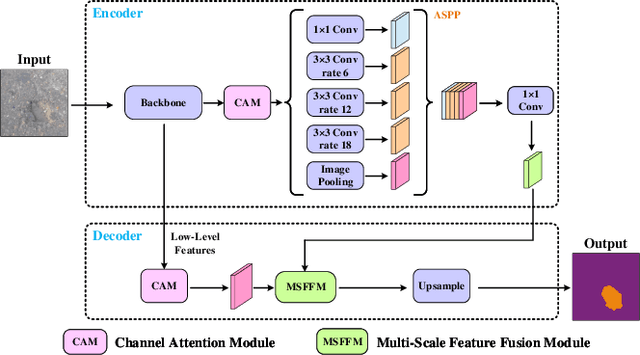

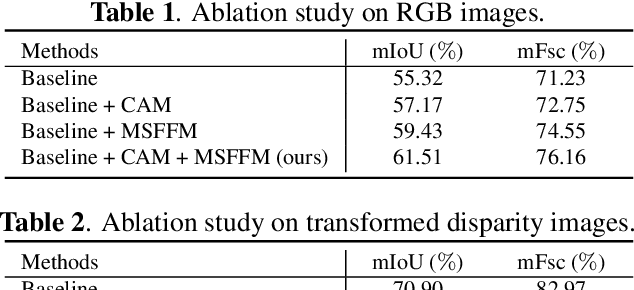

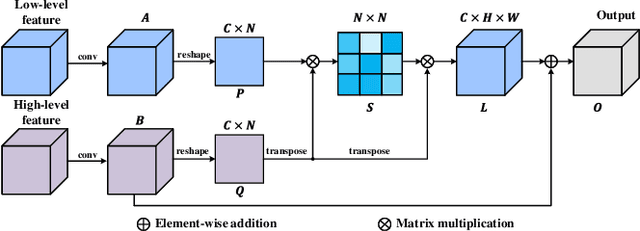

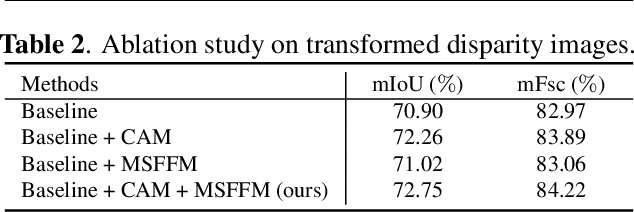

Abstract: This paper presents a novel pothole detection approach based on single-modal semantic segmentation. It first extracts visual features from input images using a convolutional neural network. A channel attention module then reweights the channel features to enhance the consistency of different feature maps. Subsequently, an atrous spatial pyramid pooling module (comprising atrous convolutions in series with progressively increasing dilation rates) integrates spatial context information, which helps distinguish potholes from undamaged road areas. Finally, the feature maps in adjacent layers are fused using the proposed multi-scale feature fusion module, which further reduces the semantic gap between feature maps at different layers. Extensive experiments on the Pothole-600 dataset demonstrate the effectiveness of the proposed method. The quantitative comparisons suggest that it achieves state-of-the-art (SoTA) performance on both RGB images and transformed disparity images, outperforming three SoTA single-modal semantic segmentation networks.
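Two of the mechanisms named in this abstract can be sketched in plain Python, under stated assumptions. The first function is a simplified, squeeze-and-excitation-style channel attention (global average pooling per channel, then a single hypothetical linear layer and a sigmoid gate); the second pair of functions gives the receptive-field arithmetic for stride-1 atrous convolutions applied in series. All names, the weight layout, and the example dilation rates are illustrative assumptions, not the paper's architecture.

```python
import math
from typing import List

FeatureMap = List[List[float]]  # one H x W channel


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(features: List[FeatureMap],
                      weights: List[List[float]],
                      bias: List[float]) -> List[FeatureMap]:
    """Simplified SE-style channel attention (assumption: one C x C linear layer)."""
    # Squeeze: global average pooling gives one descriptor per channel.
    pooled = [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
              for fmap in features]
    # Excitation: linear layer + sigmoid gives a weight in (0, 1) per channel.
    scales = [sigmoid(sum(w * p for w, p in zip(w_row, pooled)) + b)
              for w_row, b in zip(weights, bias)]
    # Reweight: scale every value of each channel by that channel's weight.
    return [[[v * s for v in row] for row in fmap]
            for fmap, s in zip(features, scales)]


def effective_kernel(kernel_size: int, dilation: int) -> int:
    """Width spanned by one k-tap atrous kernel at the given dilation rate."""
    return dilation * (kernel_size - 1) + 1


def receptive_field(kernel_size: int, dilations: List[int]) -> int:
    """Receptive field of stride-1 atrous convolutions applied in series."""
    rf = 1
    for d in dilations:
        rf += d * (kernel_size - 1)  # each layer widens the field by d * (k - 1)
    return rf


if __name__ == "__main__":
    feats = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
    out = channel_attention(feats, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
    print(out[0][0][0], out[1][0][0])
    print(receptive_field(3, [1, 2, 4]))  # 15
```

For example, three 3-tap layers with dilation rates 1, 2 and 4 in series cover a 15-pixel-wide window, against 7 pixels for three undilated layers, which is one way progressive dilation gathers wider spatial context without extra parameters.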

Mobile Robot Localisation and Navigation Using LEGO NXT and Ultrasonic Sensor

Oct 20, 2018

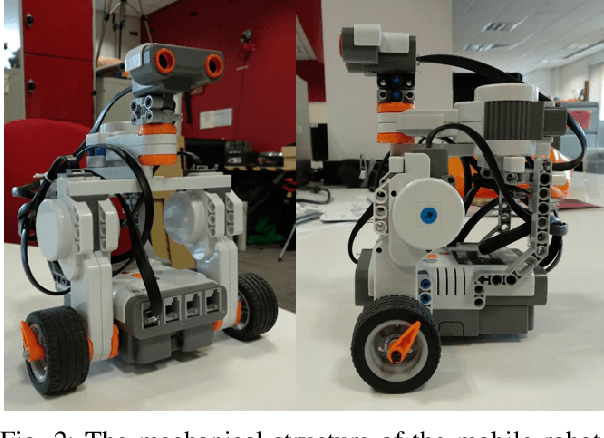

Abstract: Mobile robots are becoming increasingly important for both individuals and industries. Mobile robotic technology is used not only by experts in the field but is also very popular among amateurs. However, implementing a mobile robot that performs tasks autonomously can be expensive because of the need for various types of sensors and the high price of robot platforms. Hence, in this paper we present a mobile robot localisation and navigation system based on the LEGO MINDSTORM NXT, which uses a LEGO ultrasonic sensor in an indoor map. This provides an affordable, ready-to-use option for most robot enthusiasts. An effective method is proposed to extract useful information from the distorted readings collected by the ultrasonic sensor. A particle filter is then used to localise the robot. Once the robot's position is estimated, a sampling-based path planning method is proposed for robot navigation. This method reduces the robot's accumulated motion error by minimising the number of turns and the distance travelled. The localisation and navigation algorithms are implemented in MATLAB. Simulation results show an average accuracy of between 1 and 3 cm for three different indoor map locations. Furthermore, experiments performed in a real setup show the effectiveness of the proposed methods.
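The predict, weight, and resample cycle of particle-filter localisation described above can be sketched in Python. This is a minimal illustration under loud assumptions: a hypothetical 1-D corridor with a single wall that the ultrasonic sensor faces, Gaussian motion and sensor noise, and multinomial resampling. The paper's 2-D indoor maps, its method for correcting distorted readings, and its MATLAB implementation are not reproduced; `WALL_X`, `demo`, and the noise parameters are illustrative.

```python
import math
import random
from typing import List, Tuple

WALL_X = 200.0  # hypothetical: position (cm) of the wall the sensor faces


def expected_range(x: float) -> float:
    """Ideal ultrasonic reading for a robot at position x facing the wall."""
    return WALL_X - x


def gaussian_likelihood(error: float, sigma: float) -> float:
    """Unnormalised Gaussian likelihood of a range error."""
    return math.exp(-0.5 * (error / sigma) ** 2)


def particle_filter_step(particles: List[float], move: float, reading: float,
                         rng: random.Random, motion_sigma: float = 1.0,
                         sensor_sigma: float = 3.0) -> List[float]:
    # Predict: apply the commanded motion plus Gaussian motion noise.
    moved = [p + move + rng.gauss(0.0, motion_sigma) for p in particles]
    # Update: weight each particle by how well it explains the reading.
    weights = [gaussian_likelihood(reading - expected_range(p), sensor_sigma)
               for p in moved]
    if sum(weights) == 0.0:
        return moved  # no particle fits the reading; skip resampling this step
    # Resample: draw particles with replacement in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def estimate(particles: List[float]) -> float:
    """Point estimate: the mean of the particle set."""
    return sum(particles) / len(particles)


def demo(seed: int = 0, steps: int = 10) -> Tuple[float, float]:
    rng = random.Random(seed)
    # Unknown start: spread particles uniformly along the corridor.
    particles = [rng.uniform(0.0, WALL_X) for _ in range(500)]
    true_x = 20.0
    for _ in range(steps):
        true_x += 5.0  # robot drives 5 cm toward the wall
        reading = expected_range(true_x) + rng.gauss(0.0, 2.0)  # simulated sensor
        particles = particle_filter_step(particles, 5.0, reading, rng)
    return estimate(particles), true_x


if __name__ == "__main__":
    est, truth = demo()
    print(f"estimate {est:.1f} cm, true position {truth:.1f} cm")
```

Multinomial resampling through `random.Random.choices` keeps the sketch short; systematic resampling is a common lower-variance alternative. In a real setup, raw ultrasonic readings would be cleaned before the weighting step.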
