Jeremy Weiss

Metric-Fair Prompting: Treating Similar Samples Similarly

Dec 08, 2025

Abstract: We introduce Metric-Fair Prompting, a fairness-aware prompting framework that guides large language models (LLMs) to make decisions under metric-fairness constraints. In the application of multiple-choice medical question answering, each (question, option) pair is treated as a binary instance with label $+1$ (correct) or $-1$ (incorrect). To promote individual fairness (treating similar instances similarly), we compute question similarity using NLP embeddings and solve items in joint pairs of similar questions rather than in isolation. The prompt enforces a global decision protocol: extract decisive clinical features, map each (question, option) pair to a score $f(x)$ that acts as confidence, and impose a Lipschitz-style constraint so that similar inputs receive similar scores and, hence, consistent outputs. Evaluated on the MedQA (US) benchmark, Metric-Fair Prompting is shown to improve performance over standard single-item prompting, demonstrating that fairness-guided, confidence-oriented reasoning can enhance LLM accuracy on high-stakes clinical multiple-choice questions.
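The pairing and constraint described in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the cosine distance, the greedy nearest-pair matching, the constant `L`, and the function names `cosine_distance`, `pair_similar`, and `lipschitz_violations` are all assumptions chosen for illustration. The check it applies is the standard Lipschitz-style condition $|f(x) - f(x')| \le L \cdot d(x, x')$.

```python
import math
from itertools import combinations


def cosine_distance(u, v):
    """Cosine distance 1 - cos(u, v); an assumed choice of metric d(x, x')."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def pair_similar(embeddings):
    """Greedily match items into disjoint pairs, closest pairs first.

    This matching rule is hypothetical; the abstract only says that
    similar questions are solved jointly in pairs.
    """
    candidates = sorted(
        combinations(range(len(embeddings)), 2),
        key=lambda ij: cosine_distance(embeddings[ij[0]], embeddings[ij[1]]),
    )
    unpaired = set(range(len(embeddings)))
    pairs = []
    for i, j in candidates:
        if i in unpaired and j in unpaired:
            pairs.append((i, j))
            unpaired -= {i, j}
    return pairs


def lipschitz_violations(scores, embeddings, pairs, L=1.0):
    """Return the pairs whose score gap exceeds L times their distance."""
    return [
        (i, j)
        for i, j in pairs
        if abs(scores[i] - scores[j]) > L * cosine_distance(embeddings[i], embeddings[j])
    ]


# Toy data: four made-up 2-d "embeddings" and confidence scores f(x).
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
scores = [0.8, 0.75, -0.6, 0.9]

pairs = pair_similar(embeddings)
violations = lipschitz_violations(scores, embeddings, pairs, L=10.0)
print(pairs, violations)
```

In this toy data, items 2 and 3 are close in embedding space but receive very different scores, so that pair is flagged. In a prompting setting, a flagged pair would mark a place where the model's answers to two similar questions are inconsistent.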

Individual Fairness Guarantee in Learning with Censorship

Feb 16, 2023

Abstract: Algorithmic fairness, studying how to make machine learning (ML) algorithms fair, is an established area of ML. As ML technologies expand their application domains, including ones with high societal impact, it becomes essential to take fairness into consideration when building ML systems. Yet, despite its wide range of socially sensitive applications, most work treats the issue of algorithmic bias as an intrinsic property of supervised learning, i.e., the class label is given as a precondition. Unlike prior fairness work, we study individual fairness in learning with censorship where the assumption of availability of the class label does not hold, while still requiring that similar individuals are treated similarly. We argue that this perspective represents a more realistic model of fairness research for real-world application deployment, and show how learning with such a relaxed precondition draws new insights that better explain algorithmic fairness. We also thoroughly evaluate the performance of the proposed methodology on three real-world datasets, and validate its superior performance in minimizing discrimination while maintaining predictive performance.

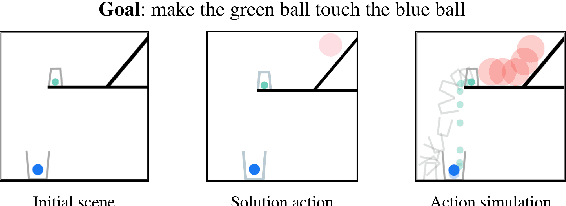

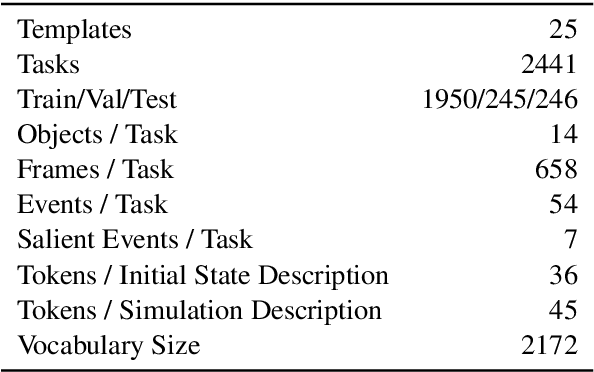

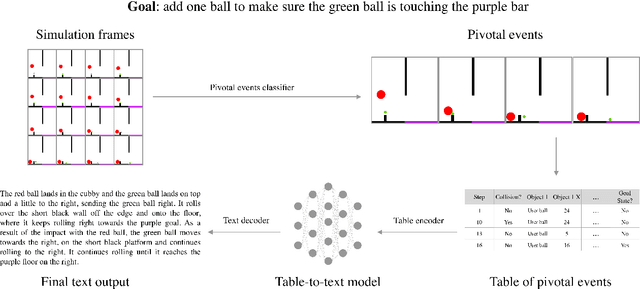

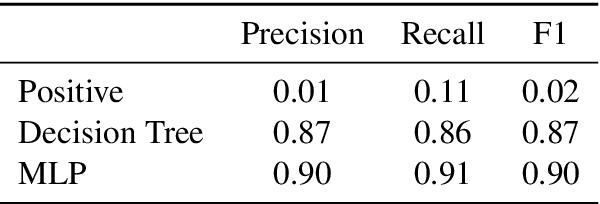

ESPRIT: Explaining Solutions to Physical Reasoning Tasks

May 14, 2020

Abstract: Neural networks lack the ability to reason about qualitative physics and so cannot generalize to scenarios and tasks unseen during training. We propose ESPRIT, a framework for commonsense reasoning about qualitative physics in natural language that generates interpretable descriptions of physical events. We use a two-step approach of first identifying the pivotal physical events in an environment and then generating natural language descriptions of those events using a data-to-text approach. Our framework learns to generate explanations of how the physical simulation will causally evolve so that an agent or a human can easily reason about a solution using those interpretable descriptions. Human evaluations indicate that ESPRIT produces crucial fine-grained details and has high coverage of physical concepts compared to even human annotations. Dataset, code and documentation are available at https://github.com/salesforce/esprit.
