Jeffrey M. Walls

Leveraging GNSS and Onboard Visual Data from Consumer Vehicles for Robust Road Network Estimation

Aug 03, 2024

Abstract:Maps are essential for diverse applications, such as vehicle navigation and autonomous robotics. Both require spatial models for effective route planning and localization. This paper addresses the challenge of road graph construction for autonomous vehicles. Despite recent advances, creating a road graph remains labor-intensive and has yet to achieve full automation. The goal of this paper is to generate such graphs automatically and accurately. Modern cars are equipped with onboard sensors used for today's advanced driver assistance systems like lane keeping. We propose using global navigation satellite system (GNSS) traces and basic image data acquired from these standard sensors in consumer vehicles to estimate road-level maps with minimal effort. We exploit the spatial information in the data by framing the problem as a road centerline semantic segmentation task using a convolutional neural network. We also utilize the data's time series nature to refine the neural network's output by using map matching. We implemented and evaluated our method using a fleet of real consumer vehicles, only using the deployed onboard sensors. Our evaluation demonstrates that our approach not only matches existing methods on simpler road configurations but also significantly outperforms them on more complex road geometries and topologies. This work received the 2023 Woven by Toyota Invention Award.

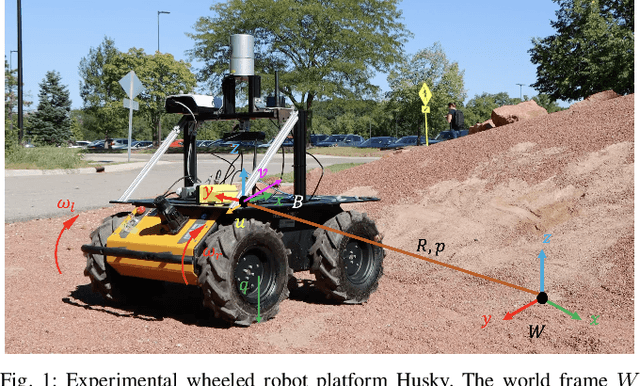

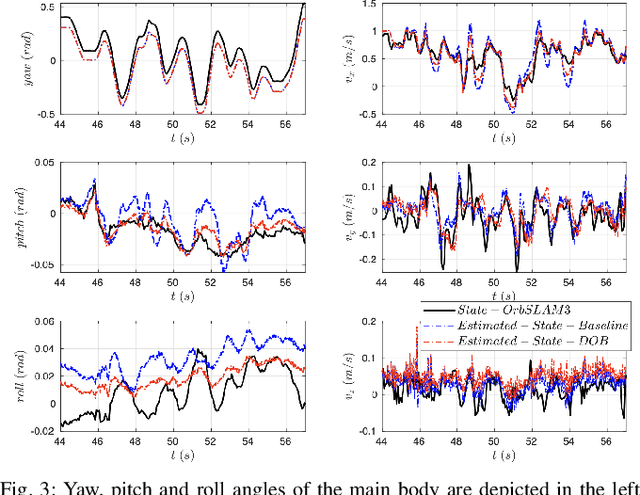

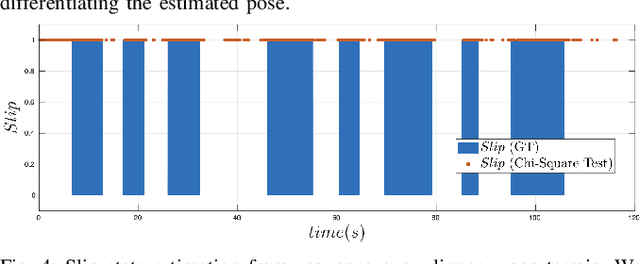

Fully Proprioceptive Slip-Velocity-Aware State Estimation for Mobile Robots via Invariant Kalman Filtering and Disturbance Observer

Sep 29, 2022

Abstract:This paper develops a novel slip estimator using invariant observer design theory and a Disturbance Observer (DOB). The proposed state estimator for mobile robots is fully proprioceptive and combines data from an inertial measurement unit and body velocity within a Right Invariant Extended Kalman Filter (RI-EKF). By embedding the slip velocity into the $\mathrm{SE}_3(3)$ Lie group, the developed DOB-based RI-EKF provides real-time accurate velocity and slip velocity estimates on different terrains. Experimental results using a Husky wheeled robot confirm the mathematical derivations and show better performance than a standard RI-EKF baseline. Open source software is available for download and reproducing the presented results.

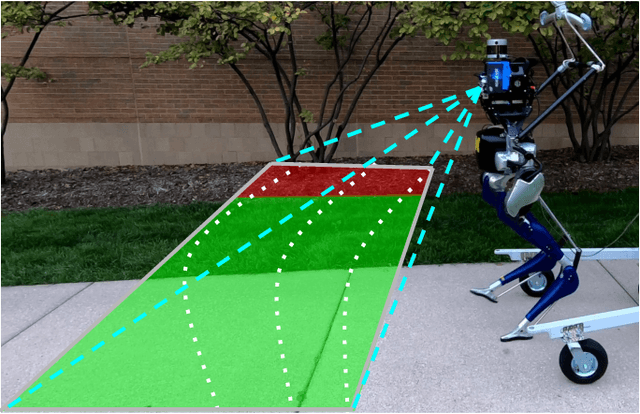

Multi-Task Learning for Scalable and Dense Multi-Layer Bayesian Map Inference

Jun 28, 2021

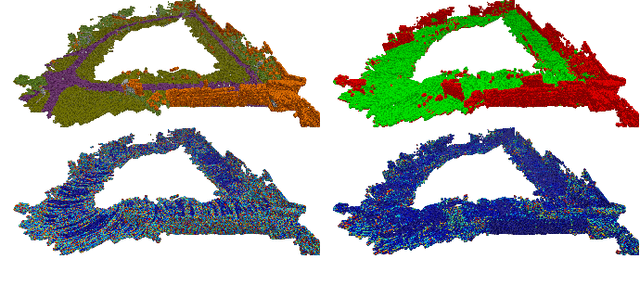

Abstract:This paper presents a novel and flexible multi-task multi-layer Bayesian mapping framework with readily extendable attribute layers. The proposed framework goes beyond modern metric-semantic maps to provide even richer environmental information for robots in a single mapping formalism while exploiting existing inter-layer correlations. It removes the need for a robot to access and process information from many separate maps when performing a complex task and benefits from the correlation between map layers, advancing the way robots interact with their environments. To this end, we design a multi-task deep neural network with attention mechanisms as our front-end to provide multiple observations for multiple map layers simultaneously. Our back-end runs a scalable closed-form Bayesian inference with only logarithmic time complexity. We apply the framework to build a dense robotic map including metric-semantic occupancy and traversability layers. Traversability ground truth labels are automatically generated from exteroceptive sensory data in a self-supervised manner. We present extensive experimental results on publicly available data sets and data collected by a 3D bipedal robot platform on the University of Michigan North Campus and show reliable mapping performance in different environments. Finally, we also discuss how the current framework can be extended to incorporate more information such as friction, signal strength, temperature, and physical quantity concentration using Gaussian map layers. The software for reproducing the presented results or running on customized data is made publicly available.

Legged Robot State-Estimation Through Combined Forward Kinematic and Preintegrated Contact Factors

Feb 25, 2018

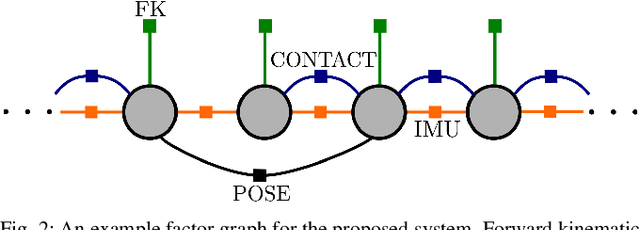

Abstract:State-of-the-art robotic perception systems have achieved sufficiently good performance using Inertial Measurement Units (IMUs), cameras, and nonlinear optimization techniques, that they are now being deployed as technologies. However, many of these methods rely significantly on vision and often fail when visual tracking is lost due to lighting or scarcity of features. This paper presents a state-estimation technique for legged robots that takes into account the robot's kinematic model as well as its contact with the environment. We introduce forward kinematic factors and preintegrated contact factors into a factor graph framework that can be incrementally solved in real-time. The forward kinematic factor relates the robot's base pose to a contact frame through noisy encoder measurements. The preintegrated contact factor provides odometry measurements of this contact frame while accounting for possible foot slippage. Together, the two developed factors constrain the graph optimization problem allowing the robot's trajectory to be estimated. The paper evaluates the method using simulated and real sensory IMU and kinematic data from experiments with a Cassie-series robot designed by Agility Robotics. These preliminary experiments show that using the proposed method in addition to IMU decreases drift and improves localization accuracy, suggesting that its use can enable successful recovery from a loss of visual tracking.
