Jeffrey H. Reed

Indoor Positioning for Public Safety: Role of UAVs, LEOs, and Propagation-Aware Techniques

Mar 15, 2025

Abstract: Effective indoor positioning is critical for public safety, enabling first responders to locate at-risk individuals accurately during emergencies. However, traditional Global Navigation Satellite Systems (GNSS) often perform poorly indoors because of weak signal coverage and non-line-of-sight (NLOS) conditions. Moreover, relying on fixed cellular infrastructure, such as terrestrial networks (TNs), may not be feasible, because indoor coverage from enough base stations or WiFi access points cannot be guaranteed for accurate positioning. In this paper, we propose a rapidly deployable indoor positioning system (IPS) leveraging mobile anchors, including uncrewed aerial vehicles (UAVs) and Low-Earth-Orbit (LEO) satellites, and discuss the role of GNSS and LEOs in localizing the mobile anchors. Additionally, we discuss how sidelink-based positioning, introduced in 3rd Generation Partnership Project (3GPP) Release 18, can enable public safety systems. By examining outdoor-to-indoor (O2I) signal propagation, particularly diffraction-based approaches, we highlight how propagation-aware positioning methods can outperform conventional strategies that disregard propagation mechanism information. The study shows how emerging 5G Advanced and Non-Terrestrial Networks (NTN) features offer new avenues to improve positioning in challenging indoor environments, ultimately paving the way for cost-effective and resilient IPS solutions tailored to public safety applications.
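
Positioning against mobile anchors ultimately reduces to estimating a position from ranges to anchors whose own positions are known. As a minimal sketch, assuming the UAV anchor positions are already known (for example from GNSS) and the ranges are unbiased line-of-sight distances (the NLOS bias that motivates this work is ignored), the Python below linearizes the range equations and solves them by least squares. The helpers `solve3` and `multilaterate` and the anchor coordinates are hypothetical illustrations, not the paper's method.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate anchor geometry (singular normal matrix)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def multilaterate(anchors: Sequence[Vec3], ranges: Sequence[float]) -> Vec3:
    """Least-squares 3D position from ranges to >= 4 anchors with known positions.

    Subtracting the first equation |x - a_0|^2 = r_0^2 from the others yields the
    linear system 2 (a_i - a_0)^T x = |a_i|^2 - |a_0|^2 - r_i^2 + r_0^2.
    """
    if len(anchors) < 4 or len(anchors) != len(ranges):
        raise ValueError("need at least 4 anchors and one range per anchor")
    a0, r0 = anchors[0], ranges[0]
    n0 = sum(c * c for c in a0)
    rows, rhs = [], []
    for ai, ri in zip(anchors[1:], ranges[1:]):
        rows.append([2.0 * (ai[k] - a0[k]) for k in range(3)])
        rhs.append(sum(c * c for c in ai) - n0 - ri * ri + r0 * r0)
    # Normal equations (A^T A) x = A^T b give the least-squares solution.
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    atb = [sum(row[i] * y for row, y in zip(rows, rhs)) for i in range(3)]
    x = solve3(ata, atb)
    return (x[0], x[1], x[2])


if __name__ == "__main__":
    # Hypothetical UAV anchor positions (m) and an indoor target; ranges are exact here.
    anchors = [(0.0, 0.0, 10.0), (20.0, 0.0, 15.0), (0.0, 20.0, 12.0), (20.0, 20.0, 30.0)]
    target = (5.0, 3.0, 4.0)
    ranges = [math.dist(a, target) for a in anchors]
    print(multilaterate(anchors, ranges))
```

In this linearized form at least four non-coplanar anchors are needed; if all anchors hover at the same height, the z-coordinate drops out of the system and the sketch raises an error.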

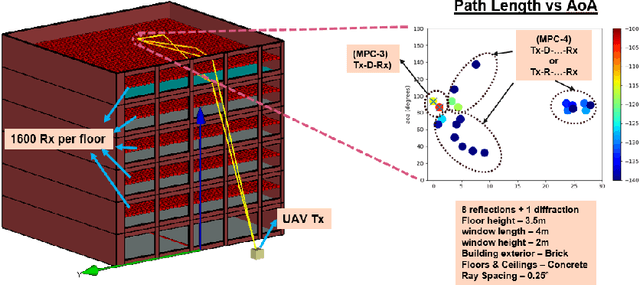

Impact of Frequency on Diffraction-Aided Wireless Positioning

Mar 15, 2025

Abstract: This paper tackles the challenge of accurate positioning in Non-Line-of-Sight (NLoS) environments, focusing on indoor public safety scenarios where NLoS bias severely degrades localization performance. We explore diffraction multipath components (MPCs) as a critical mechanism for Outdoor-to-Indoor (O2I) signal propagation and examine their role in positioning. The proposed system comprises outdoor Uncrewed Aerial Vehicle (UAV) transmitters and indoor receivers that require localization. To facilitate diffraction-based positioning, we develop a method to isolate diffraction MPCs at indoor receivers and validate its effectiveness using a ray-tracing-generated dataset, which we have made publicly available. Our evaluation across the FR1, FR2, and FR3 frequency bands within the 5G/6G spectrum confirms the viability of diffraction-based positioning techniques for next-generation wireless networks.
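
The frequency dependence studied here can be illustrated with a simpler diffraction model than the one these papers use. The sketch below swaps in the single knife-edge approximation from ITU-R P.526 instead of GTD, so it is only a qualitative stand-in: for a fixed obstruction geometry, the Fresnel-Kirchhoff parameter v grows with frequency, and so does the approximate diffraction loss J(v). The function names, the example frequencies, and the geometry are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def fresnel_v(h: float, d1: float, d2: float, freq_hz: float) -> float:
    """Fresnel-Kirchhoff parameter v for an edge h metres above the direct ray,
    d1 metres from the transmitter and d2 metres from the receiver."""
    wavelength = C / freq_hz
    return h * math.sqrt(2.0 * (d1 + d2) / (wavelength * d1 * d2))


def knife_edge_loss_db(v: float) -> float:
    """ITU-R P.526 single knife-edge approximation J(v), treated as 0 dB for v <= -0.78."""
    if v <= -0.78:
        return 0.0
    return 6.9 + 20.0 * math.log10(math.sqrt((v - 0.1) ** 2 + 1.0) + v - 0.1)


if __name__ == "__main__":
    # Hypothetical geometry: UAV 30 m from the window edge, receiver 5 m inside,
    # edge 0.5 m above the straight UAV-to-receiver line.
    for band, f in [("FR1", 3.5e9), ("FR3", 15e9), ("FR2", 28e9)]:
        v = fresnel_v(0.5, 30.0, 5.0, f)
        print(f"{band} {f / 1e9:.1f} GHz: v = {v:.2f}, J(v) = {knife_edge_loss_db(v):.1f} dB")
```

Under this approximation the same window edge attenuates the diffracted component more at FR2 than at FR1, which is one reason the choice of band matters when diffraction MPCs are used for positioning.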

Diffraction Aided Wireless Positioning

Sep 04, 2024

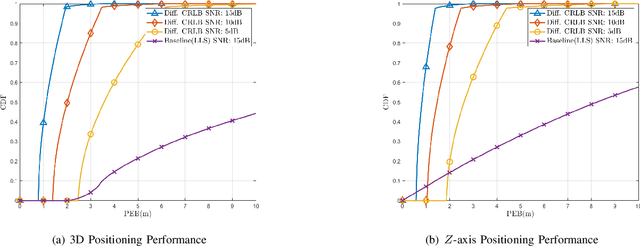

Abstract: Wireless positioning in Non-Line-of-Sight (NLOS) scenarios is highly challenging because multipath degrades the position estimate. This study reexamines electromagnetic field principles and applies them to wireless positioning, resulting in new techniques that enhance positioning accuracy in NLOS scenarios. Further, we use the proposed method to analyze a public safety scenario in which it is essential to determine the position of at-risk individuals within buildings, with an emphasis on improving the Z-axis position estimate. Our analysis uses the Geometrical Theory of Diffraction (GTD) to provide important signal propagation insights and develop a new NLOS path model. Next, we use Fisher information to derive necessary and sufficient conditions for 3D positioning with the proposed technique and, finally, to lower-bound the achievable 3D and Z-axis positioning performance. Applying this technique to a public safety scenario, we show that both 3D and Z-axis positioning performance can be greatly improved by directly estimating NLOS path lengths.
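
The Fisher-information reasoning can be sketched for a generic path-length model. Assuming independent zero-mean Gaussian errors with a common standard deviation sigma on each measured path length, each measurement contributes u u^T / sigma^2 to the Fisher information matrix, where u is the gradient of that path length with respect to the receiver position. For a LOS range, u is the unit vector from the anchor to the receiver; for an edge-diffracted path whose diffraction point is stationary along the edge, the gradient reduces to the unit vector from the diffraction point to the receiver. The Python below (hypothetical helper names, not the paper's derivation) checks whether the matrix is invertible and, if so, returns Cramér-Rao lower bounds on the 3D and z-axis RMSE.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def unit(frm: Vec3, to: Vec3) -> Vec3:
    d = [t - f for f, t in zip(frm, to)]
    n = math.sqrt(sum(c * c for c in d))
    return (d[0] / n, d[1] / n, d[2] / n)


def geometry_matrix(points: Sequence[Vec3], rx: Vec3) -> List[List[float]]:
    """G = sum_i u_i u_i^T, with u_i the unit vector from points[i] to rx.

    Assumption: points[i] is an anchor (LOS path) or a stationary diffraction
    point (diffracted path); the FIM for noise std sigma is J = G / sigma^2.
    """
    g = [[0.0] * 3 for _ in range(3)]
    for p in points:
        u = unit(p, rx)
        for a in range(3):
            for b in range(3):
                g[a][b] += u[a] * u[b]
    return g


def inverse3(m: List[List[float]], tol: float = 1e-9) -> Optional[List[List[float]]]:
    """3x3 inverse via the adjugate; None if the matrix is (numerically) singular."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < tol:
        return None
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def crlb(points: Sequence[Vec3], rx: Vec3, sigma: float) -> Optional[Tuple[float, float]]:
    """(3D RMSE bound, z-axis RMSE bound) = sigma * sqrt(tr G^-1), sigma * sqrt([G^-1]_zz).

    Returns None when G is singular, i.e., the 3D position is not identifiable.
    """
    g_inv = inverse3(geometry_matrix(points, rx))
    if g_inv is None:
        return None
    return (sigma * math.sqrt(g_inv[0][0] + g_inv[1][1] + g_inv[2][2]),
            sigma * math.sqrt(g_inv[2][2]))


if __name__ == "__main__":
    rx = (5.0, 5.0, 1.0)  # hypothetical indoor receiver (m)
    uavs = [(-20.0, -10.0, 30.0), (30.0, -10.0, 30.0), (5.0, -25.0, 30.0)]
    edge_point = (5.0, 0.0, 3.0)  # hypothetical window-edge diffraction point
    print("UAVs only:", crlb(uavs, rx, sigma=1.0))
    print("UAVs + diffracted path:", crlb(uavs + [edge_point], rx, sigma=1.0))
```

Because the bound is computed from the geometry alone, the same helper can compare candidate UAV placements before any measurement is taken.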

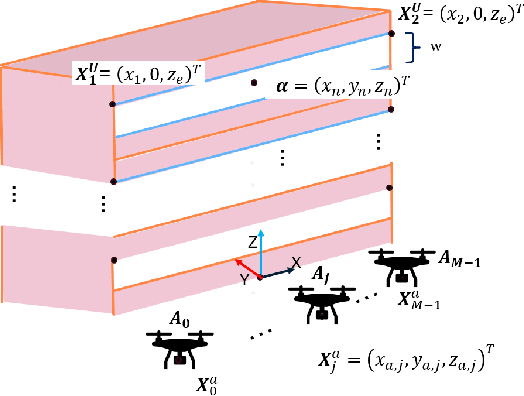

3D Positioning using a New Diffraction Path Model

May 09, 2024

Abstract: Enhancing 3D and Z-axis positioning accuracy is crucial for effective rescue in indoor emergencies, ensuring the safety of emergency responders and at-risk individuals. Additionally, reducing a positioning system's dependence on fixed infrastructure is crucial, given that infrastructure's vulnerability to power failures and damage during emergencies. Further challenges from a signal propagation perspective include poor indoor signal coverage, multipath effects, and Non-Line-of-Sight (NLOS) measurement bias. In this study, we exploit the mobility of a rapidly deployable Uncrewed Aerial Vehicle (UAV)-based wireless network to address these challenges. We identify diffraction from window edges as a crucial signal propagation mechanism and employ the Geometrical Theory of Diffraction (GTD) to introduce a novel NLOS path length model. Using this path length model, we propose two techniques to improve indoor positioning performance in emergency scenarios.
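
A window-edge diffracted path length can be made concrete with a small geometric sketch. Assuming the window edge is a straight segment and the diffraction point obeys Keller's law of edge diffraction (incident and diffracted rays make equal angles with the edge), the diffraction point is the point on the edge that minimizes the total path length, and unfolding the two rays into a common plane gives it in closed form. This is a hypothetical illustration of a GTD-style diffracted path length, not the authors' NLOS path length model; the function names and geometry are assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _norm(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))


def diffraction_point(tx: Vec3, rx: Vec3, edge_start: Vec3, edge_end: Vec3) -> Vec3:
    """Point on a straight edge that minimizes |tx - p| + |p - rx| (Keller's law)."""
    axis = _sub(edge_end, edge_start)
    length = _norm(axis)
    u = (axis[0] / length, axis[1] / length, axis[2] / length)  # unit edge direction
    rel_tx, rel_rx = _sub(tx, edge_start), _sub(rx, edge_start)
    t_tx, t_rx = _dot(rel_tx, u), _dot(rel_rx, u)  # projections onto the edge
    # Perpendicular distances from the edge line.
    d_tx = _norm(_sub(rel_tx, (t_tx * u[0], t_tx * u[1], t_tx * u[2])))
    d_rx = _norm(_sub(rel_rx, (t_rx * u[0], t_rx * u[1], t_rx * u[2])))
    if d_tx + d_rx == 0.0:
        raise ValueError("tx and rx both lie on the edge line")
    # Unfolding both rays into one plane: the straight line crosses the edge here.
    t = t_tx + (t_rx - t_tx) * d_tx / (d_tx + d_rx)
    # Clamp to the finite edge; corner diffraction beyond the ends is not modeled.
    t = min(max(t, 0.0), length)
    return (edge_start[0] + t * u[0], edge_start[1] + t * u[1], edge_start[2] + t * u[2])


def diffraction_path_length(tx: Vec3, rx: Vec3, edge_start: Vec3, edge_end: Vec3) -> float:
    p = diffraction_point(tx, rx, edge_start, edge_end)
    return _norm(_sub(tx, p)) + _norm(_sub(p, rx))


if __name__ == "__main__":
    # Hypothetical geometry (m): window top edge on the facade y = 0 at height 3 m,
    # UAV outside (y < 0) and receiver inside (y > 0).
    uav, rx = (-3.0, -20.0, 10.0), (2.5, 5.0, 1.0)
    edge = ((0.0, 0.0, 3.0), (2.0, 0.0, 3.0))
    print(diffraction_point(uav, rx, *edge), diffraction_path_length(uav, rx, *edge))
```

The returned path length exceeds the straight-line UAV-to-receiver distance; treating it as a LOS range is exactly the kind of NLOS bias that an explicit diffraction path model is meant to remove.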

Keep It Simple: CNN Model Complexity Studies for Interference Classification Tasks

Mar 06, 2023

Abstract: The growing number of devices using the wireless spectrum makes it important to find ways to minimize interference and optimize spectrum use. Deep learning models, such as convolutional neural networks (CNNs), have been widely used to identify, classify, or mitigate interference because they can learn directly from data. However, there has been limited research on the complexity of such models. The deep learning-based wireless classification literature has focused mainly on improving classification accuracy, often at the expense of model complexity. This may not be practical for many wireless devices, such as Internet of Things (IoT) devices, which usually have very limited computational resources and cannot handle very complex models. Thus, it is important to account for model complexity when designing deep learning-based models for interference classification. To address this, we analyze CNN-based wireless classification and explore the trade-off among dataset size, CNN model complexity, and classification accuracy under three levels of classification difficulty: interference classification, heterogeneous transmitter classification, and homogeneous transmitter classification. Our study, based on three wireless datasets, shows that a simpler CNN model with fewer parameters can perform just as well as a more complex model, providing important insights into the use of CNNs in computationally constrained applications.
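
Model complexity in this kind of study is commonly summarized by the number of trainable parameters. The sketch below, assuming standard 2D convolution and dense layers with biases, counts parameters for two hypothetical CNNs; the layer sizes, the 1 x 64 x 64 input, and the 10-class output are illustrative choices, not the architectures evaluated in the paper.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Conv2D:
    kh: int      # kernel height
    kw: int      # kernel width
    c_in: int    # input channels
    c_out: int   # number of filters

    def params(self) -> int:
        # Weights plus one bias per filter.
        return (self.kh * self.kw * self.c_in + 1) * self.c_out


@dataclass
class Dense:
    n_in: int
    n_out: int

    def params(self) -> int:
        return (self.n_in + 1) * self.n_out


Layer = Union[Conv2D, Dense]


def count_params(layers: List[Layer]) -> int:
    """Total trainable parameters; pooling and flatten layers add none."""
    return sum(layer.params() for layer in layers)


# Hypothetical architectures for a 1 x 64 x 64 input, assuming 'same' padding and
# two 2x2 max-pooling layers, so the 64 x 64 feature maps shrink to 16 x 16.
simple_cnn = [Conv2D(3, 3, 1, 8), Conv2D(3, 3, 8, 16), Dense(16 * 16 * 16, 10)]
complex_cnn = [Conv2D(3, 3, 1, 64), Conv2D(3, 3, 64, 128),
               Dense(128 * 16 * 16, 256), Dense(256, 10)]

if __name__ == "__main__":
    print("simple:", count_params(simple_cnn))
    print("complex:", count_params(complex_cnn))
```

With these assumed sizes the wider network has roughly 200 times as many parameters, almost all of them in its first dense layer.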

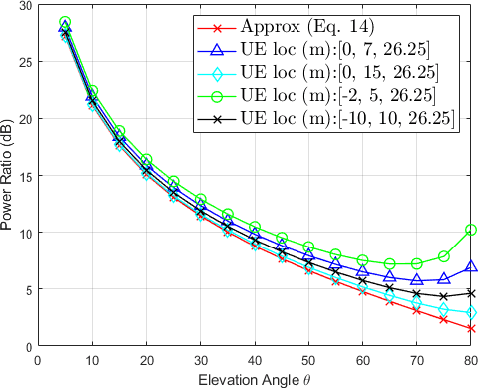

Line-of-Sight Probability for Outdoor-to-Indoor UAV-Assisted Emergency Networks

Feb 27, 2023

Abstract: For emergency response scenarios such as firefighting in urban environments, there is a need both to localize emergency responders inside the building and to support a high-bandwidth communication link between the responders and a command-and-control center. Emergency networks for such scenarios can be established by quickly deploying Unmanned Aerial Vehicles (UAVs). Further, the 3D mobility of UAVs can be leveraged to improve wireless link quality by maneuvering them into advantageous locations. This has motivated recent propagation measurement campaigns to study low-altitude air-to-ground channels in both the 5G sub-6 GHz and 5G mmWave bands. In this paper, we develop a model for the link in a UAV-assisted emergency localization and/or communication system. Specifically, given the importance of Line-of-Sight (LoS) links in localization as well as in mmWave communication, we derive a closed-form expression for the LoS probability. This probability is parameterized by the UAV base station location, the size of the building, and the size of the window that offers the best propagation path. An expression for the coverage probability is also derived. The LoS and coverage probabilities derived in this paper can be used to analyze the outdoor-UAV-to-indoor propagation environment to determine optimal UAV positioning and the number of UAVs needed to achieve the desired performance of the emergency network.
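
To get a feel for how the window size and UAV position drive the LoS probability, a Monte Carlo estimate can stand in for the closed-form expression derived in the paper, which is not reproduced here. The Python sketch below assumes an empty box-shaped building with a single window on its front facade, a receiver uniformly distributed inside, and no interior walls; under those assumptions a link is LoS exactly when the straight UAV-to-receiver segment crosses the facade inside the window. All names and dimensions are hypothetical.

```python
import random
from typing import Tuple

Vec3 = Tuple[float, float, float]
Window = Tuple[float, float, float, float]  # (x_min, x_max, z_min, z_max) on the facade y = 0


def los_through_window(uav: Vec3, rx: Vec3, window: Window) -> bool:
    """True if the segment from uav (y < 0) to rx (y >= 0) crosses y = 0 inside the window."""
    (xu, yu, zu), (xr, yr, zr) = uav, rx
    s = -yu / (yr - yu)  # fraction of the way from uav to rx where y = 0
    x, z = xu + s * (xr - xu), zu + s * (zr - zu)
    x_min, x_max, z_min, z_max = window
    return x_min <= x <= x_max and z_min <= z <= z_max


def los_probability(uav: Vec3, building: Vec3, window: Window,
                    n: int = 100_000, seed: int = 0) -> float:
    """Fraction of receivers, uniform in the box [0, W] x [0, D] x [0, H], that are in LoS.

    Assumptions: empty box-shaped building, a single window on the facade y = 0,
    and no interior walls or other openings.
    """
    if uav[1] >= 0.0:
        raise ValueError("the UAV must be outside, in front of the facade (y < 0)")
    w, d, h = building
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        rx = (rng.uniform(0.0, w), rng.uniform(0.0, d), rng.uniform(0.0, h))
        hits += los_through_window(uav, rx, window)
    return hits / n


if __name__ == "__main__":
    # Hypothetical 20 m x 10 m x 10 m building with a 4 m x 2 m window.
    print(los_probability(uav=(10.0, -15.0, 8.0), building=(20.0, 10.0, 10.0),
                          window=(8.0, 12.0, 4.0, 6.0)))
```

Sweeping `uav` over candidate hover positions with an estimator like this is one way to sanity-check a closed-form LoS model before using it to choose UAV placements.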

Probability-Reduction of Geolocation using Reconfigurable Intelligent Surface Reflections

Oct 18, 2022

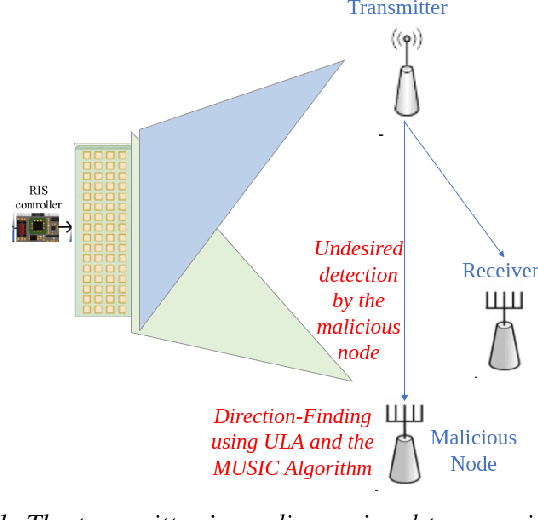

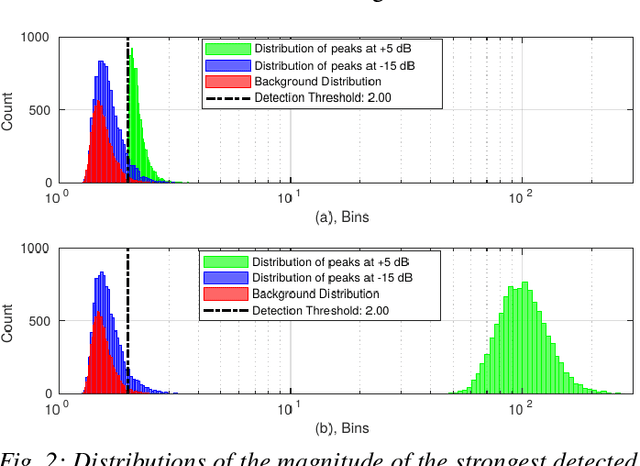

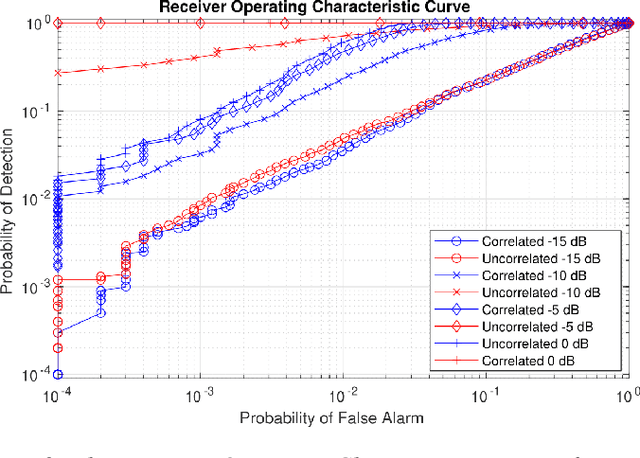

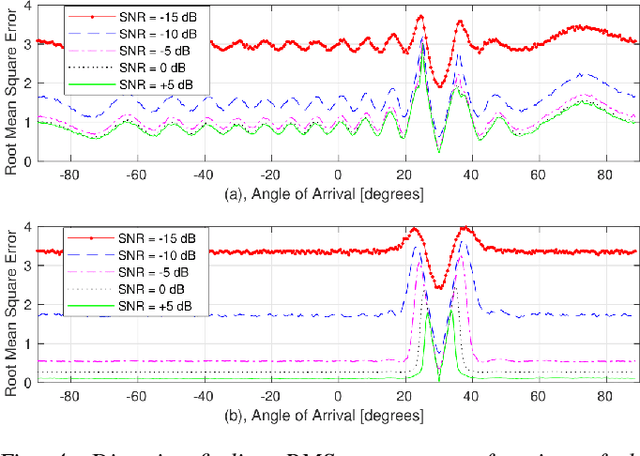

Abstract: With the recent introduction of electromagnetic metasurfaces and reconfigurable intelligent surfaces, a paradigm shift is taking place in wireless communications and related industries. These technologies make it possible to include the wireless channel in the optimization process, which is of great interest as we transition from 5G toward 6G. In this paper, we explore using a reconfigurable intelligent surface to disrupt the ability of an unintended receiver to geolocate the source of transmitted signals in a 5G communication system. We investigate how the performance of the MUSIC algorithm at the unintended receiver is degraded by correlated reflected signals that a reconfigurable intelligent surface introduces into the wireless channel. We analyze the impact of the direction of arrival, delay, correlation, and strength of the reconfigurable intelligent surface signal relative to the line-of-sight path from the transmitter to the unintended receiver. We introduce an effective method for defeating direction-finding efforts using dual sets of surface reflections, called Geolocation-Probability Reduction using Dual Reconfigurable Intelligent Surfaces (GPRIS). We also show that the effectiveness of this method depends strongly on the geometry, that is, the placement of the reconfigurable intelligent surface relative to the unintended receiver and the transmitter.

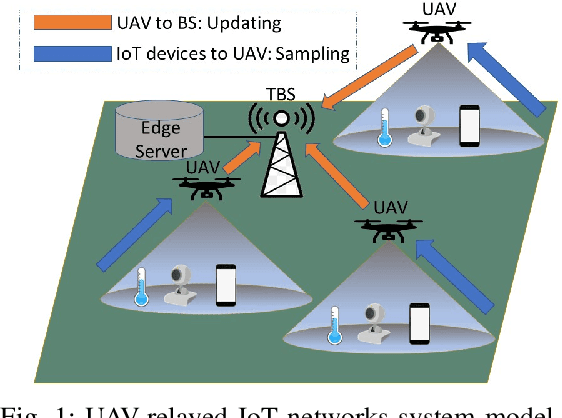

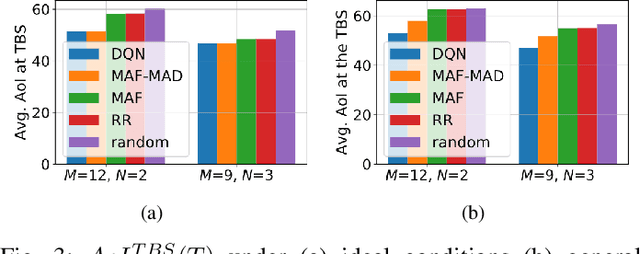

Learning based Age of Information Minimization in UAV-relayed IoT Networks

Mar 08, 2022

Abstract: Unmanned Aerial Vehicles (UAVs) are used as aerial base stations to relay time-sensitive packets from IoT devices to a nearby terrestrial base station (TBS). Scheduling packets in such UAV-relayed IoT networks so that the TBS holds fresh (up-to-date) packets from every IoT device is challenging because it involves two simultaneous steps: (i) sampling of packets generated at IoT devices by the UAVs [hop-1] and (ii) updating of sampled packets from the UAVs to the TBS [hop-2]. To address this, we propose Age-of-Information (AoI) scheduling algorithms for two-hop UAV-relayed IoT networks. First, we propose a low-complexity AoI scheduler, termed MAF-MAD, that employs the Maximum AoI First (MAF) policy for sampling IoT devices at the UAV (hop-1) and the Maximum AoI Difference (MAD) policy for updating sampled packets from the UAV to the TBS (hop-2). We prove that MAF-MAD is the optimal AoI scheduler under ideal conditions (lossless wireless channels and generate-at-will traffic generation at IoT devices). In contrast, for general conditions (lossy channels and varying periodic traffic generation at IoT devices), we propose a scheduler based on the deep reinforcement learning algorithm Proximal Policy Optimization (PPO). Simulation results show that the proposed PPO-based scheduler outperforms other schedulers such as MAF-MAD, MAF, and round-robin in all considered general scenarios.
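
The two policies behind MAF-MAD can be sketched in a few lines. The simulation below assumes one UAV, N devices, slotted time, lossless links, and generate-at-will traffic; in each slot the UAV samples one device (MAF: largest AoI at the UAV) and forwards one stored packet (MAD, interpreted here as the largest gap between a device's AoI at the TBS and at the UAV, i.e., the update that lowers TBS AoI the most). The AoI update rules, initial values, and function names are assumptions for illustration, not the paper's exact system model.

```python
from typing import List, Tuple


def maf_mad_step(aoi_uav: List[int], aoi_tbs: List[int]) -> Tuple[List[int], List[int]]:
    """One slot of MAF sampling (hop 1) and MAD updating (hop 2); ties go to the lowest index."""
    n = len(aoi_uav)
    sample = max(range(n), key=lambda i: aoi_uav[i])               # MAF at hop 1
    update = max(range(n), key=lambda i: aoi_tbs[i] - aoi_uav[i])  # MAD at hop 2
    # A freshly sampled packet (generate-at-will) has AoI 1 at the end of the slot.
    new_uav = [1 if i == sample else aoi_uav[i] + 1 for i in range(n)]
    # The forwarded packet is the one the UAV held at the start of the slot.
    new_tbs = [aoi_uav[i] + 1 if i == update else aoi_tbs[i] + 1 for i in range(n)]
    return new_uav, new_tbs


def average_tbs_aoi(n_devices: int, slots: int) -> float:
    """Time-averaged AoI per device at the TBS, starting from all AoI values equal to 1."""
    aoi_uav = [1] * n_devices
    aoi_tbs = [1] * n_devices
    total = 0
    for _ in range(slots):
        aoi_uav, aoi_tbs = maf_mad_step(aoi_uav, aoi_tbs)
        total += sum(aoi_tbs)
    return total / (slots * n_devices)


if __name__ == "__main__":
    for n in (2, 3, 10):
        print(n, round(average_tbs_aoi(n, 10_000), 3))
```

In this idealized sketch MAF degenerates to round-robin sampling and MAD forwards the packet sampled in the previous slot, so each device's TBS AoI cycles through 2, 3, ..., N + 1 and the average settles near (N + 3)/2.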

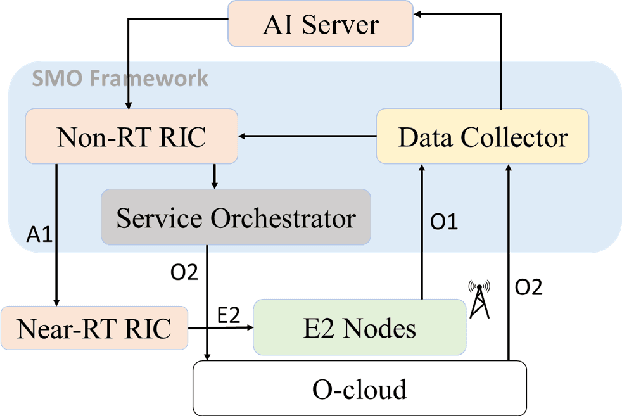

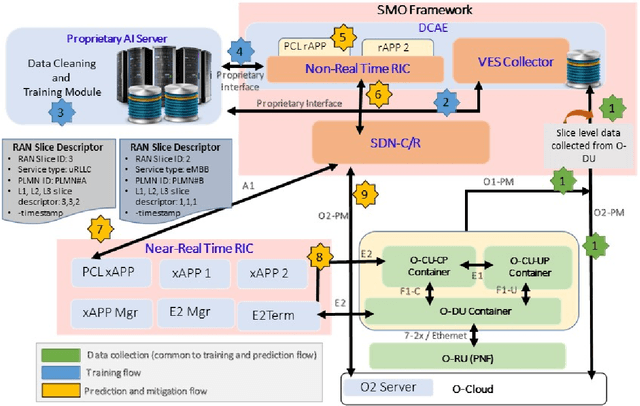

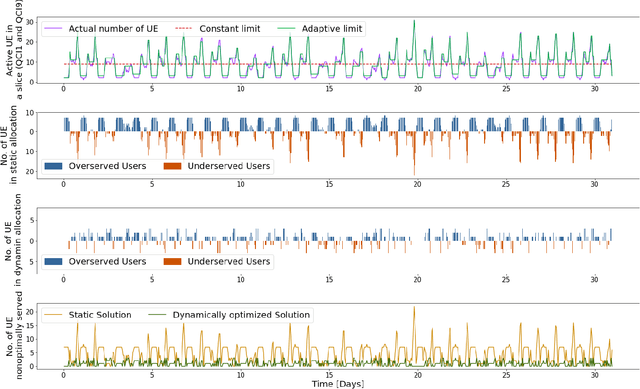

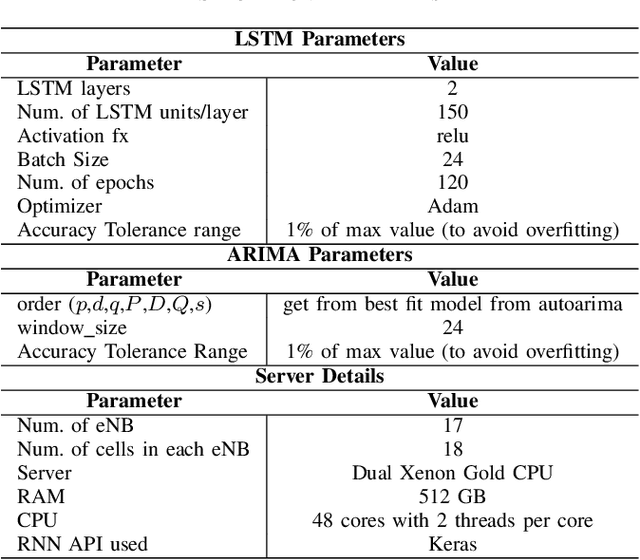

Predictive Closed-Loop Service Automation in O-RAN based Network Slicing

Feb 04, 2022

Abstract: Network slicing introduces customized and agile network deployment for managing different service types for various verticals on the same infrastructure. To cater to the dynamic service requirements of these verticals and meet the quality-of-service (QoS) levels specified in the service-level agreement (SLA), network slices need to be isolated through dedicated elements and resources. Additionally, the resources allocated to these slices need to be continuously monitored and intelligently managed. This enables immediate detection and correction of any SLA violation, supporting automated service assurance in a closed-loop fashion. By reducing human intervention, intelligent closed-loop resource management reduces the cost of offering flexible services. Resource management in a network shared among verticals (potentially administered by different providers) would be further facilitated by open and standardized interfaces. Open radio access network (O-RAN) is perhaps the most promising RAN architecture offering all of the aforementioned features, namely intelligence, open and standard interfaces, and closed control loops. Inspired by this, in this article we provide a closed-loop, intelligent resource provisioning scheme for O-RAN slicing to prevent SLA violations. To maintain realism, a real-world dataset from a large operator is used to train a learning solution for optimizing resource utilization in the proposed closed-loop service automation process. Moreover, the deployment architecture and the corresponding workflow that account for O-RAN requirements are also discussed.
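
The closed-loop idea (forecast, provision, observe, detect violations, update) can be illustrated with a deliberately simple controller. In the sketch below an exponentially weighted moving average stands in for the learning model the paper trains on operator data; the class name, the headroom `margin`, the smoothing factor `alpha`, and the abstract resource units are hypothetical, and an SLA violation is simplified to demand exceeding the provisioned resources in an interval.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SliceController:
    capacity: float          # resource units available to the slice (hypothetical)
    margin: float = 0.2      # over-provisioning headroom (hypothetical)
    alpha: float = 0.5       # EWMA smoothing factor, a stand-in for a learned predictor
    forecast: float = 0.0    # current demand forecast
    violations: List[int] = field(default_factory=list)

    def provision(self) -> float:
        """Allocate the forecast plus headroom, capped at the slice capacity."""
        return min(self.capacity, self.forecast * (1.0 + self.margin))

    def observe(self, t: int, demand: float, provisioned: float) -> None:
        """Flag a (simplified) SLA violation and update the forecast."""
        if demand > provisioned:
            self.violations.append(t)
        self.forecast = self.alpha * demand + (1.0 - self.alpha) * self.forecast


def run(controller: SliceController, demands: List[float]) -> List[float]:
    """Run the closed loop over a demand trace and return the per-interval allocations."""
    allocations = []
    for t, demand in enumerate(demands):
        alloc = controller.provision()
        controller.observe(t, demand, alloc)
        allocations.append(alloc)
    return allocations


if __name__ == "__main__":
    # Hypothetical per-interval demand with a surge, in abstract resource units.
    demand = [40.0, 42.0, 41.0, 45.0, 70.0, 72.0, 71.0, 50.0, 48.0, 47.0]
    ctrl = SliceController(capacity=100.0, forecast=40.0)
    print(run(ctrl, demand), ctrl.violations)
```

Raising `margin` trades resource utilization for fewer violations; a learned forecaster would aim to improve that trade-off by anticipating demand changes that a moving average can only react to after the fact.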

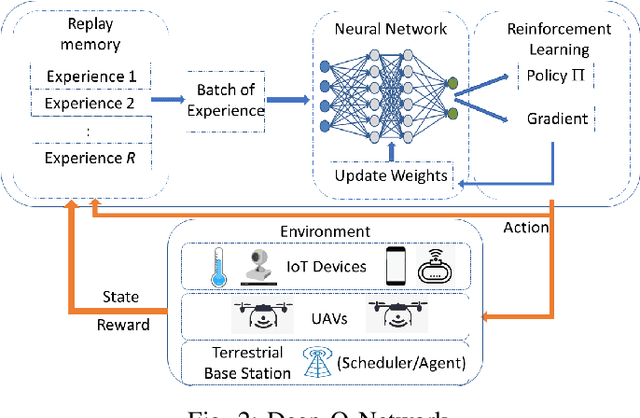

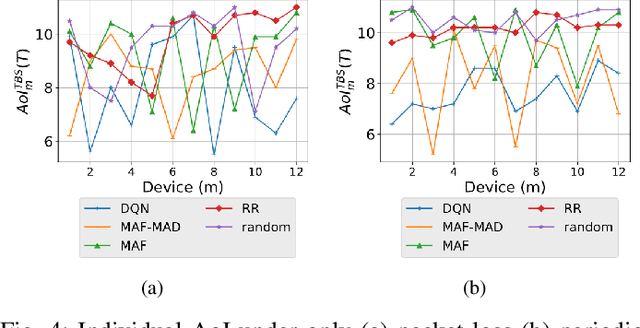

AoI-minimizing Scheduling in UAV-relayed IoT Networks

Jul 30, 2021

Abstract: Owing to their flexibility, autonomy, and low operational cost, unmanned aerial vehicles (UAVs), acting as fixed aerial base stations, are increasingly used as relays to collect time-sensitive information (i.e., status updates) from IoT devices and deliver it to a nearby terrestrial base station (TBS), where the information is processed. To ensure timely delivery of information from all IoT devices to the TBS, optimal scheduling of time-sensitive information over two-hop UAV-relayed IoT networks (i.e., IoT device to UAV [hop 1], and UAV to TBS [hop 2]) becomes a critical challenge. To address this, we propose scheduling policies for Age of Information (AoI) minimization in such two-hop UAV-relayed IoT networks. To this end, we present a low-complexity MAF-MAD scheduler that employs the Maximum AoI First (MAF) policy for sampling IoT devices at the UAV (hop 1) and the Maximum AoI Difference (MAD) policy for updating sampled packets from the UAV to the TBS (hop 2). We show that MAF-MAD is the optimal scheduler under ideal conditions, i.e., error-free channels and generate-at-will traffic generation at IoT devices. In contrast, for realistic conditions, we propose a Deep Q-Network (DQN)-based scheduler. Our simulation results show that, under general conditions, the DQN-based scheduler outperforms MAF-MAD and three other baseline schedulers employed at both hops, i.e., Maximum AoI First (MAF), Round Robin (RR), and Random, when the network is small (tens of IoT devices). However, the DQN-based scheduler does not scale well with network size, whereas MAF-MAD outperforms all other schedulers in all considered scenarios for larger networks.
