Vijay K. Shah

ORANSight-2.0: Foundational LLMs for O-RAN

Mar 07, 2025

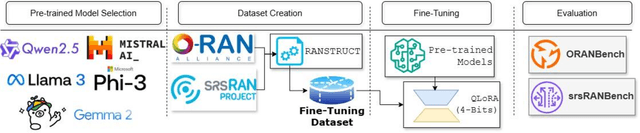

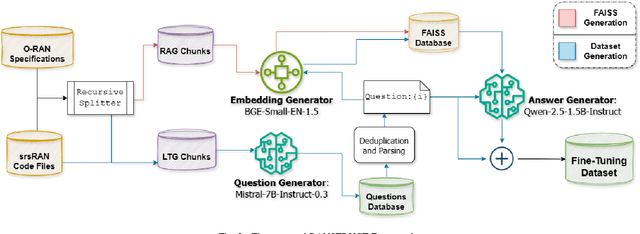

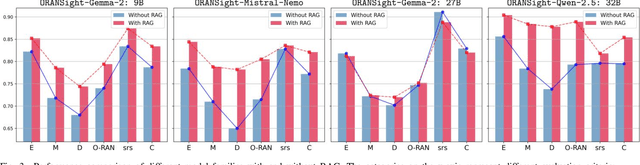

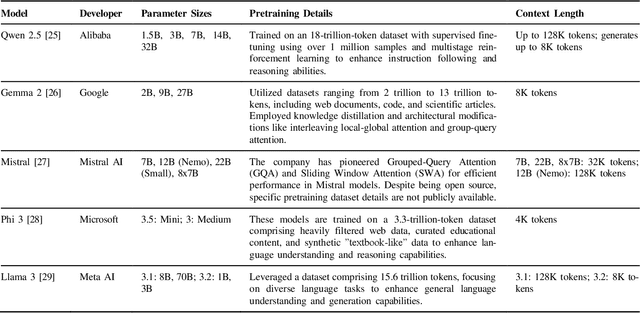

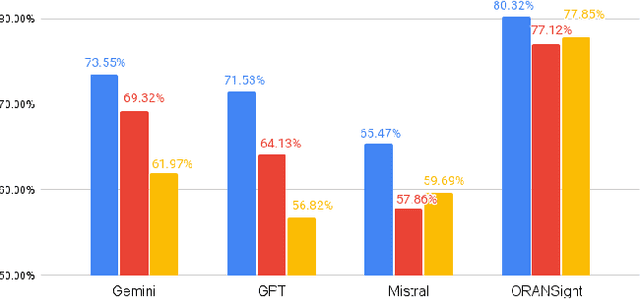

Abstract: Despite the transformative impact of Large Language Models (LLMs) across critical domains such as healthcare, customer service, and business marketing, their integration into Open Radio Access Networks (O-RAN) remains limited. This gap is primarily due to the absence of domain-specific foundational models, with existing solutions often relying on general-purpose LLMs that fail to address the unique challenges and technical intricacies of O-RAN. To bridge this gap, we introduce ORANSight-2.0 (O-RAN Insights), a pioneering initiative aimed at developing specialized foundational LLMs tailored for O-RAN. Built on 18 LLMs spanning five open-source LLM frameworks, ORANSight-2.0 fine-tunes models ranging from 1B to 70B parameters, significantly reducing reliance on proprietary, closed-source models while enhancing performance for O-RAN. At the core of ORANSight-2.0 is RANSTRUCT, a novel Retrieval-Augmented Generation (RAG) based instruction-tuning framework that employs two LLM agents to create high-quality instruction-tuning datasets. The generated dataset is then used to fine-tune the 18 pre-trained open-source LLMs via QLoRA. To evaluate ORANSight-2.0, we introduce srsRANBench, a novel benchmark designed for code generation and codebase understanding in the context of srsRAN, a widely used 5G O-RAN stack. We also leverage ORANBench13K, an existing benchmark for assessing O-RAN-specific knowledge. Our comprehensive evaluations demonstrate that ORANSight-2.0 models outperform general-purpose and closed-source models, such as ChatGPT-4o and Gemini, by 5.421% on ORANBench and 18.465% on srsRANBench, achieving superior performance while maintaining lower computational and energy costs. We also experiment with RAG-augmented variants of ORANSight-2.0 LLMs and thoroughly evaluate their energy characteristics, reporting the costs of training, standard inference, and RAG-augmented inference.

ORAN-Bench-13K: An Open Source Benchmark for Assessing LLMs in Open Radio Access Networks

Jul 08, 2024

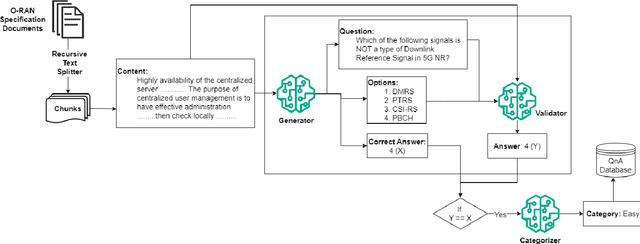

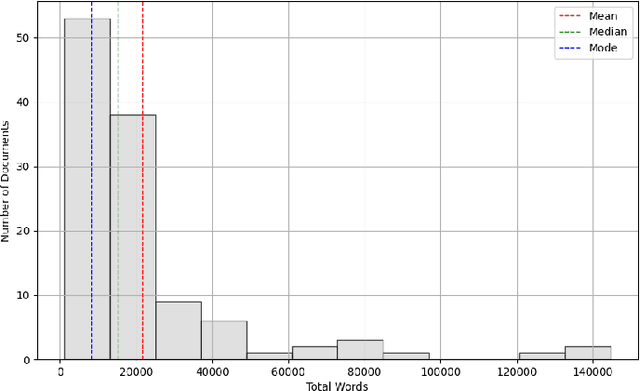

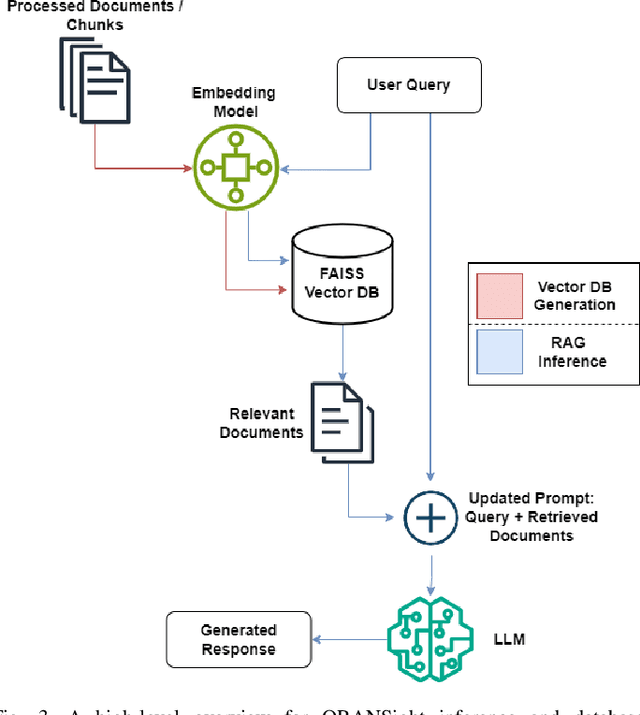

Abstract: Large Language Models (LLMs) can revolutionize how we deploy and operate Open Radio Access Networks (O-RAN) by enhancing network analytics, anomaly detection, and code generation, and by significantly increasing the efficiency and reliability of a plethora of O-RAN tasks. In this paper, we present ORAN-Bench-13K, the first comprehensive benchmark designed to evaluate the performance of LLMs within the context of O-RAN. Our benchmark consists of 13,952 meticulously curated multiple-choice questions generated from 116 O-RAN specification documents. We leverage a novel three-stage LLM framework, and the questions are categorized into three distinct difficulties to cover a wide spectrum of O-RAN-related knowledge. We thoroughly evaluate the performance of several state-of-the-art LLMs, including Gemini, ChatGPT, and Mistral. Additionally, we propose ORANSight, a Retrieval-Augmented Generation (RAG)-based pipeline that demonstrates superior performance on ORAN-Bench-13K compared to other tested closed-source models. Our findings indicate that current popular LLMs are not proficient in O-RAN, highlighting the need for specialized models. We observed a noticeable performance improvement when incorporating the RAG-based ORANSight pipeline, with a Macro Accuracy of 0.784 and a Weighted Accuracy of 0.776, which were on average 21.55% and 22.59% better, respectively, than the other tested LLMs.
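The abstract reports both a Macro Accuracy and a Weighted Accuracy over the three difficulty categories without defining them. The following is a minimal sketch under the usual definitions, which are an assumption here: macro accuracy is the unweighted mean of the per-category accuracies, and weighted accuracy weights each category by its number of questions. The category names and counts are hypothetical and are not taken from the benchmark.

```python
def macro_and_weighted_accuracy(per_category):
    """Compute macro and weighted accuracy.

    per_category maps a category name to (num_correct, num_questions).
    """
    # Accuracy within each category.
    accuracies = {name: c / n for name, (c, n) in per_category.items()}
    # Macro: every category counts equally, regardless of its size.
    macro = sum(accuracies.values()) / len(accuracies)
    # Weighted: categories count in proportion to their question counts,
    # which is the same as overall correct / overall questions.
    total_correct = sum(c for c, _ in per_category.values())
    total_questions = sum(n for _, n in per_category.values())
    weighted = total_correct / total_questions
    return macro, weighted


# Hypothetical counts for three difficulty levels (illustrative only).
results = {"easy": (90, 100), "intermediate": (160, 200), "hard": (350, 700)}
macro, weighted = macro_and_weighted_accuracy(results)
print(f"macro={macro:.3f} weighted={weighted:.3f}")
```

With unbalanced categories the two numbers diverge: a large, difficult category pulls the weighted figure down more than the macro figure.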

Preserving Data Privacy for ML-driven Applications in Open Radio Access Networks

Feb 15, 2024

Abstract: Deep learning offers a promising solution to improve spectrum access techniques by utilizing data-driven approaches to manage and share limited spectrum resources for emerging applications. For several of these applications, the sensitive wireless data (such as spectrograms) are stored in a shared database or multistakeholder cloud environment and are therefore prone to privacy leaks. This paper aims to address such privacy concerns by examining the representative case study of shared database scenarios in 5G Open Radio Access Networks (O-RAN), where we have a shared database within the near-real-time (near-RT) RAN intelligent controller. We focus on securing the data that can be used by machine learning (ML) models for spectrum sharing and interference mitigation applications without compromising the model and network performances. The underlying idea is to (i) leverage a shuffling-based learnable encryption technique to encrypt the data and then (ii) employ a custom Vision Transformer (ViT) as the trained ML model that is capable of performing accurate inferences on such encrypted data. The paper offers a thorough analysis and comparisons with analogous convolutional neural networks (CNNs) as well as deeper architectures (such as ResNet-50) as baselines. Our experiments showcase that the proposed approach significantly outperforms the baseline CNN, with improvements of 24.5% and 23.9% in accuracy and F1-score, respectively, when operated on encrypted data. Although the deeper ResNet-50 architecture is slightly more accurate, with an increase of 4.4%, the proposed approach reduces the number of parameters by 99.32% and thus improves prediction time by nearly 60%.
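The abstract does not spell out the shuffling-based learnable encryption scheme, so the following is only a generic sketch of one common form of it: a keyed permutation of pixels applied identically inside every block of a 2-D image (for example, a spectrogram). The function names, the block size, and the use of Python's `random.Random` as the key-derived permutation source are assumptions for illustration, not the paper's method.

```python
import random


def _block_permutation(key, block_size):
    """Derive one fixed pixel permutation for a block from a secret key."""
    positions = list(range(block_size * block_size))
    random.Random(key).shuffle(positions)
    return positions


def shuffle_blocks(image, block_size, key, inverse=False):
    """Apply (or undo) the same keyed pixel shuffle inside every block.

    image is a list of equal-length rows whose height and width are
    multiples of block_size. The input image is not modified.
    """
    height, width = len(image), len(image[0])
    if height % block_size or width % block_size:
        raise ValueError("image dimensions must be multiples of block_size")
    perm = _block_permutation(key, block_size)
    out = [row[:] for row in image]
    for top in range(0, height, block_size):
        for left in range(0, width, block_size):
            # Flatten the current block in row-major order.
            flat = [image[top + r][left + c]
                    for r in range(block_size) for c in range(block_size)]
            for dst, src in enumerate(perm):
                if inverse:
                    # Undo: the value at dst came from position src.
                    dst, src = src, dst
                out[top + dst // block_size][left + dst % block_size] = flat[src]
    return out


# Toy 4x4 "spectrogram" with 2x2 blocks and a hypothetical key.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
encrypted = shuffle_blocks(image, block_size=2, key=1234)
recovered = shuffle_blocks(encrypted, block_size=2, key=1234, inverse=True)
```

Because every block is permuted the same way, the values within each block are preserved and only their positions change, which is what leaves such data usable for training a model on the encrypted form.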

Context-Aware Spectrum Coexistence of Terrestrial Beyond 5G Networks in Satellite Bands

Feb 14, 2024

Abstract: Spectrum sharing between terrestrial 5G and incumbent networks in the satellite bands presents a promising avenue to satisfy the ever-increasing bandwidth demand of next-generation wireless networks. However, protecting incumbent operations from harmful interference poses a fundamental challenge in accommodating terrestrial broadband cellular networks in the satellite bands. State-of-the-art spectrum-sharing policies usually rely on several worst-case assumptions and ignore site-specific contextual factors in making spectrum-sharing decisions, and thus often result in under-utilization of the shared band for the secondary licensees. To address such limitations, this paper introduces the CAT3S (Context-Aware Terrestrial-Satellite Spectrum Sharing) framework, which empowers the coexisting terrestrial 5G network to maximize utilization of the shared satellite band without creating harmful interference to the incumbent links by exploiting the contextual factors. CAT3S consists of the following two components: (i) a context-acquisition unit to collect and process essential contextual information for spectrum sharing and (ii) a context-aware base station (BS) control unit to optimize the set of operational BSs and their operation parameters (i.e., transmit power and active beams per sector). To evaluate the performance of CAT3S, a realistic spectrum coexistence case study over the 12 GHz band is considered. Experimental results demonstrate that the proposed CAT3S achieves notably higher spectrum utilization than state-of-the-art spectrum-sharing policies in different weather contexts.

Keep It Simple: CNN Model Complexity Studies for Interference Classification Tasks

Mar 06, 2023

Abstract: The growing number of devices using the wireless spectrum makes it important to find ways to minimize interference and optimize the use of the spectrum. Deep learning models, such as convolutional neural networks (CNNs), have been widely utilized to identify, classify, or mitigate interference due to their ability to learn from the data directly. However, there has been limited research on the complexity of such deep learning models. The major focus of the deep learning-based wireless classification literature has been on improving classification accuracy, often at the expense of model complexity. This may not be practical for many wireless devices, such as Internet of Things (IoT) devices, which usually have very limited computational resources and cannot handle very complex models. Thus, it becomes important to account for model complexity when designing deep learning-based models for interference classification. To address this, we conduct an analysis of CNN-based wireless classification that explores the trade-off among dataset size, CNN model complexity, and classification accuracy under various levels of classification difficulty: namely, interference classification, heterogeneous transmitter classification, and homogeneous transmitter classification. Our study, based on three wireless datasets, shows that a simpler CNN model with fewer parameters can perform just as well as a more complex model, providing important insights into the use of CNNs in computationally constrained applications.

Learning based Age of Information Minimization in UAV-relayed IoT Networks

Mar 08, 2022

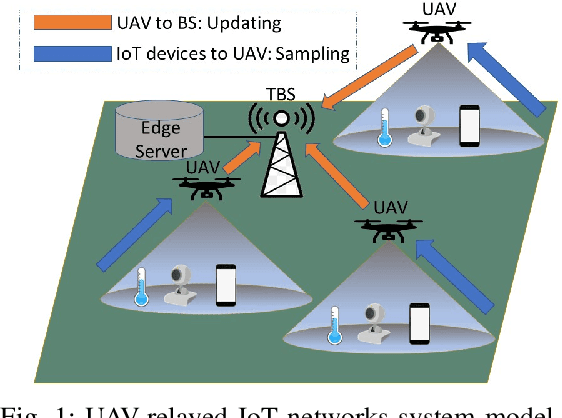

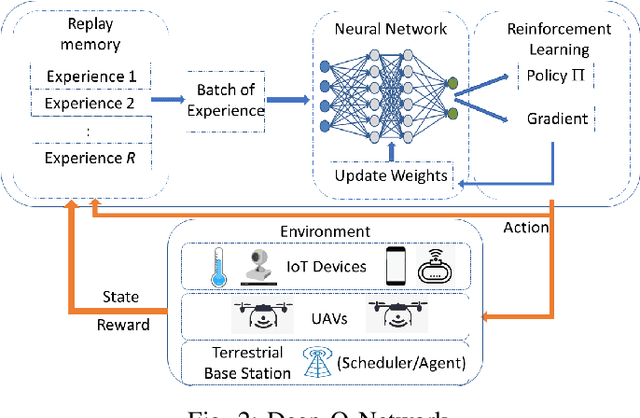

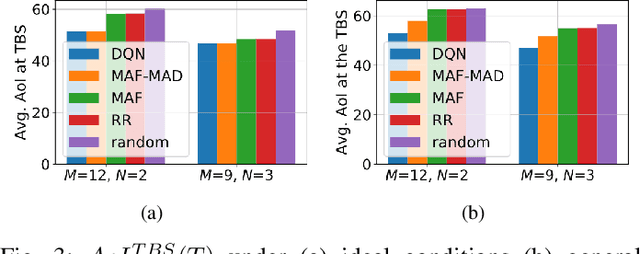

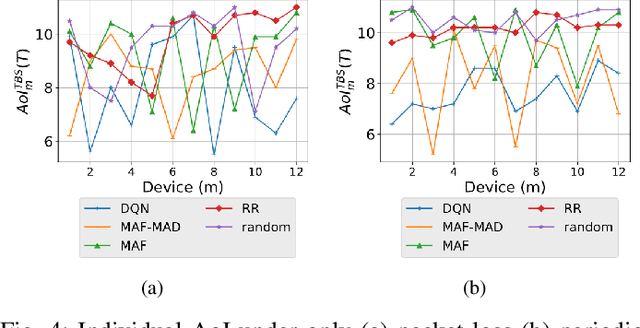

Abstract: Unmanned Aerial Vehicles (UAVs) are used as aerial base stations to relay time-sensitive packets from IoT devices to the nearby terrestrial base station (TBS). Scheduling of packets in such UAV-relayed IoT networks to ensure fresh (or up-to-date) IoT devices' packets at the TBS is a challenging problem, as it involves two simultaneous steps of (i) sampling of packets generated at IoT devices by the UAVs [hop-1] and (ii) updating of sampled packets from UAVs to the TBS [hop-2]. To address this, we propose Age-of-Information (AoI) scheduling algorithms for two-hop UAV-relayed IoT networks. First, we propose a low-complexity AoI scheduler, termed MAF-MAD, that employs the Maximum AoI First (MAF) policy for sampling of IoT devices at the UAV (hop-1) and the Maximum AoI Difference (MAD) policy for updating sampled packets from the UAV to the TBS (hop-2). We prove that MAF-MAD is the optimal AoI scheduler under ideal conditions (lossless wireless channels and generate-at-will traffic generation at IoT devices). For general conditions (lossy channel conditions and varying periodic traffic generation at IoT devices), in contrast, we propose a deep reinforcement learning scheduler based on Proximal Policy Optimization (PPO). Simulation results show that the proposed PPO-based scheduler outperforms other schedulers such as MAF-MAD, MAF, and round-robin in all considered general scenarios.
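The two decision rules named in the abstract can be sketched for a single UAV and one scheduling decision per hop per slot. Several details below are assumptions rather than statements from the paper: that MAF ranks devices by the age of their sample at the UAV, that MAD ranks them by the gap between the age at the TBS and the age at the UAV, that decisions use start-of-slot ages, and that a freshly sampled packet has age 1 at the end of the slot (ideal conditions: lossless channels, generate-at-will traffic). The function name `maf_mad_step` is hypothetical.

```python
def maf_mad_step(uav_age, tbs_age):
    """Run one slot of a MAF-MAD-style scheduler for a single UAV.

    uav_age[i]: age of device i's latest sample held at the UAV.
    tbs_age[i]: age of device i's latest packet held at the TBS.
    Returns (sampled device, updated device, new uav_age, new tbs_age).
    """
    devices = range(len(uav_age))
    # Hop 1 (MAF): sample the device whose information at the UAV is oldest.
    sampled = max(devices, key=lambda i: uav_age[i])
    # Hop 2 (MAD): forward the packet that reduces the TBS age the most,
    # i.e. the largest gap between the age at the TBS and at the UAV.
    updated = max(devices, key=lambda i: tbs_age[i] - uav_age[i])

    # Assumed age evolution: everything ages by one slot...
    new_uav = [age + 1 for age in uav_age]
    new_tbs = [age + 1 for age in tbs_age]
    # ...the TBS inherits the age of the packet the UAV held at slot start...
    new_tbs[updated] = uav_age[updated] + 1
    # ...and the freshly sampled packet is one slot old at the UAV.
    new_uav[sampled] = 1
    return sampled, updated, new_uav, new_tbs


# Three devices with hypothetical starting ages.
sampled, updated, uav_next, tbs_next = maf_mad_step([3, 1, 5], [6, 9, 7])
print(sampled, updated, uav_next, tbs_next)
```

Ties are broken in favor of the lowest device index, which is a property of `max` on a range and not a rule taken from the paper.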

AoI-minimizing Scheduling in UAV-relayed IoT Networks

Jul 30, 2021

Abstract: Due to their flexibility, autonomy, and low operational cost, unmanned aerial vehicles (UAVs), as fixed aerial base stations, are increasingly being used as *relays* to collect time-sensitive information (i.e., status updates) from IoT devices and deliver it to the nearby terrestrial base station (TBS), where the information gets processed. In order to ensure timely delivery of information to the TBS (from all IoT devices), optimal scheduling of time-sensitive information over two-hop UAV-relayed IoT networks (i.e., IoT device to the UAV [hop 1], and UAV to the TBS [hop 2]) becomes a critical challenge. To address this, we propose scheduling policies for Age of Information (AoI) minimization in such two-hop UAV-relayed IoT networks. To this end, we present a low-complexity MAF-MAD scheduler that employs the Maximum AoI First (MAF) policy for sampling of IoT devices at the UAV (hop 1) and the Maximum AoI Difference (MAD) policy for updating sampled packets from the UAV to the TBS (hop 2). We show that MAF-MAD is the optimal scheduler under ideal conditions, i.e., error-free channels and generate-at-will traffic generation at IoT devices. For realistic conditions, in contrast, we propose a Deep-Q-Networks (DQN) based scheduler. Our simulation results show that the DQN-based scheduler outperforms the MAF-MAD scheduler and three other baseline schedulers, i.e., Maximal AoI First (MAF), Round Robin (RR), and Random, employed at both hops under general conditions when the network is small (with tens of IoT devices). However, it does not scale well with network size, whereas MAF-MAD outperforms all other schedulers under all considered scenarios for larger networks.

RAN Slicing in Multi-MVNO Environment under Dynamic Channel Conditions

Apr 11, 2021

Abstract: With the increasing diversity in the requirements of wireless services with guaranteed quality of service (QoS), radio access network (RAN) slicing becomes an important aspect in the implementation of next-generation wireless systems (5G). RAN slicing involves the division of network resources into many logical segments, where each segment has a specific QoS and can serve users of a mobile virtual network operator (MVNO) with these requirements. This allows the Network Operator (NO) to provide service to multiple MVNOs, each with different service requirements. Efficient allocation of the available resources to slices becomes vital in determining the number of users, and therefore the number of MVNOs, that an NO can support. In this work, we study the problem of Modulation and Coding Scheme (MCS) aware RAN slicing (MaRS) in the context of a wireless system having MVNOs whose users have a minimum data rate requirement. The Channel Quality Indicator (CQI) report sent from each user in the network determines the MCS selected, which in turn determines the achievable data rate. However, the channel conditions might not remain the same for the entire duration of a user being served. For this reason, we consider the channel conditions to be dynamic, where the choice of MCS level varies at each time instant. We model the MaRS problem as a Non-Linear Programming problem and show that it is NP-Hard. Next, we propose a solution based on the greedy algorithm paradigm. We then develop an upper performance bound for this problem and finally evaluate the performance of the proposed solution by comparing it against the upper bound under various channel and network configurations.
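The abstract names a greedy solution but does not describe its rule, so the following is only an illustrative sketch of how MCS awareness can enter a greedy allocation, not the paper's algorithm. It assumes a single pool of resource blocks (RBs), a per-RB rate for each user derived from that user's current MCS, and a "fewest RBs first" admission rule; the multi-MVNO structure is omitted. All names and numbers are hypothetical.

```python
import math


def greedy_admit(users, total_rbs):
    """Admit users in order of the fewest resource blocks they need.

    users: list of (user_id, min_rate_mbps, rate_per_rb_mbps), where
    rate_per_rb_mbps reflects the MCS currently selected for that user.
    Returns (allocation dict user_id -> RBs, RBs left over).
    """
    # RBs each user needs to meet its minimum data rate at its current MCS.
    demands = sorted(
        (math.ceil(min_rate / rate_per_rb), user_id)
        for user_id, min_rate, rate_per_rb in users
    )
    allocation, remaining = {}, total_rbs
    for needed, user_id in demands:
        if needed > remaining:
            break  # every later user needs at least as many RBs
        allocation[user_id] = needed
        remaining -= needed
    return allocation, remaining


# Hypothetical users: a better MCS gives a higher rate per RB.
users = [("a", 2.0, 0.5), ("b", 1.0, 0.25), ("c", 3.0, 1.0), ("d", 4.0, 0.25)]
allocation, remaining = greedy_admit(users, total_rbs=12)
print(allocation, remaining)
```

Under dynamic channel conditions, the per-RB rates would change at each time instant, so a sketch like this would be re-run with the updated values whenever the selected MCS levels change.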

Deep Learning for Fast and Reliable Initial Access in AI-Driven 6G mmWave Networks

Jan 06, 2021

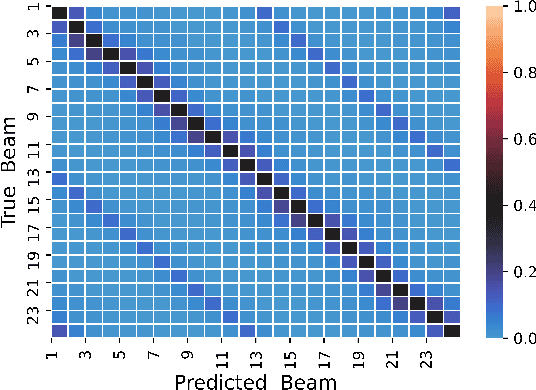

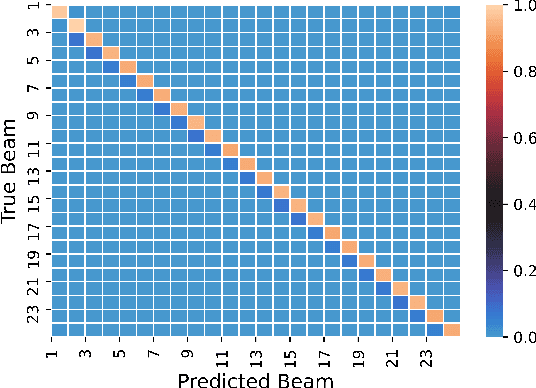

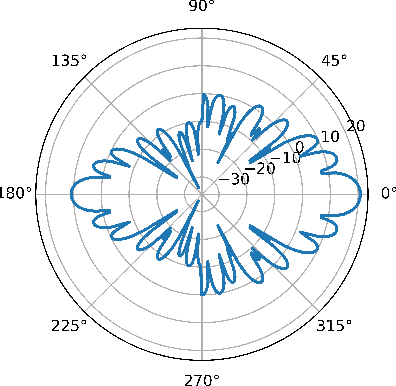

Abstract: We present DeepIA, a deep neural network (DNN) framework for enabling fast and reliable initial access (IA) for AI-driven beyond 5G and 6G millimeter-wave (mmWave) networks. DeepIA reduces the beam sweep time compared to a conventional exhaustive search-based IA process by utilizing only a subset of the available beams. DeepIA maps received signal strengths (RSSs) obtained from a subset of beams to the beam that is best oriented to the receiver. In both line of sight (LoS) and non-line of sight (NLoS) conditions, DeepIA reduces the IA time and outperforms conventional IA in beam prediction accuracy. We show that the beam prediction accuracy of DeepIA saturates with the number of beams used for IA and depends on the particular selection of the beams. In LoS conditions, the selection of the beams is consequential and improves the accuracy by up to 70%. In NLoS situations, it improves accuracy by up to 35%. We find that averaging multiple RSS snapshots further reduces the number of beams needed and achieves more than 95% accuracy in both LoS and NLoS conditions. Finally, we evaluate the beam prediction time of DeepIA through embedded hardware implementation and show the improvement over conventional beam sweeping.

Cross-layer Band Selection and Routing Design for Diverse Band-aware DSA Networks

Sep 08, 2020

Abstract: As several new spectrum bands are opening up for shared use, a new paradigm of *Diverse Band-aware Dynamic Spectrum Access* (d-DSA) has emerged. d-DSA equips a secondary device with software-defined radios (SDRs) and utilizes whitespaces (or idle channels) in *multiple bands*, including but not limited to TV, LTE, Citizens Broadband Radio Service (CBRS), and unlicensed ISM. In this paper, we propose a decentralized, online multi-agent reinforcement learning based cross-layer BAnd selection and Routing Design (BARD) for such d-DSA networks. BARD not only harnesses whitespaces in multiple spectrum bands, but also accounts for the unique electromagnetic characteristics of those bands to maximize the desired quality of service (QoS) requirements of heterogeneous message packets, while also ensuring no harmful interference to the primary users in the utilized band. Our extensive experiments demonstrate that BARD outperforms the baseline dDSAaR algorithm in terms of message delivery ratio, albeit at a relatively higher network latency, for varying numbers of primary and secondary users. Furthermore, BARD greatly outperforms its single-band DSA variants in terms of both metrics in all considered scenarios.
